🔗 Paper: arxiv.org/abs/2501.08319

🔗 HF: huggingface.co/papers/2501.08319

🔗 Code: github.com/yoavgur/Feature-Descriptions

7/

But combining the two works best! 🚀 5/

- vocabulary projection (a.k.a. logit lens)

- tokens with max probability change in the output

Our output-centric methods require no more than a few inference passes! 4/
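To make the two output-centric methods above concrete, here is a minimal toy sketch. It is not the implementation from the paper's repo. It assumes the feature is a single direction in the residual stream and that hidden states map to logits through one hypothetical unembedding matrix `W_U`, with no later layers in between. The vocabulary, the matrix values, and the steering strength `alpha` are all made up for illustration.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array of logits."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

def vocab_projection(feature, W_U, vocab, k=2):
    """Vocabulary projection: the k tokens whose logits the feature direction raises most."""
    logits = feature @ W_U  # shape: (vocab_size,)
    top = np.argsort(-logits, kind="stable")[:k]
    return [vocab[i] for i in top]

def token_change(hidden, feature, W_U, vocab, alpha=3.0, k=2):
    """The k tokens whose output probability increases most when the feature is amplified."""
    base = softmax(hidden @ W_U)
    steered = softmax((hidden + alpha * feature) @ W_U)
    top = np.argsort(-(steered - base), kind="stable")[:k]
    return [vocab[i] for i in top]

# Toy setup (all values hypothetical): 3 hidden dimensions, 5 tokens.
vocab = ["Monday", "Tuesday", "cat", "dog", "2020"]
W_U = np.array([
    [2.0, 1.5, 0.0, 0.0, 0.1],  # dimension 0 mostly promotes weekday tokens
    [0.0, 0.0, 2.0, 1.8, 0.0],  # dimension 1 mostly promotes animal tokens
    [0.1, 0.0, 0.0, 0.0, 2.0],  # dimension 2 mostly promotes the year token
])
feature = np.array([1.0, 0.0, 0.0])  # feature aligned with the "weekday" dimension
hidden = np.array([0.0, 1.0, 0.0])   # a context that currently favors animal tokens

top_proj = vocab_projection(feature, W_U, vocab)
top_change = token_change(hidden, feature, W_U, vocab)
print(top_proj, top_change)
```

In this toy both methods surface the weekday tokens. The projection needs no forward pass at all, and the probability-change variant needs only a baseline pass and a steered pass, which fits the "few inference passes" point above.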

Our output-based eval measures how well a description of a feature captures its effect on the model's generation. 3/

This is problematic ⚠️

1. Collecting activations over large datasets is expensive, time-consuming, and often infeasible.

2. It overlooks how features affect model outputs!

2/

Paper: arxiv.org/abs/2412.11965

Code: github.com/amitelhelo/M...

(10/10!)

We also observe interesting operations implemented by heads, like the extension of time periods (day → month → year) and association of known figures with years relevant to their historical significance (9/10)

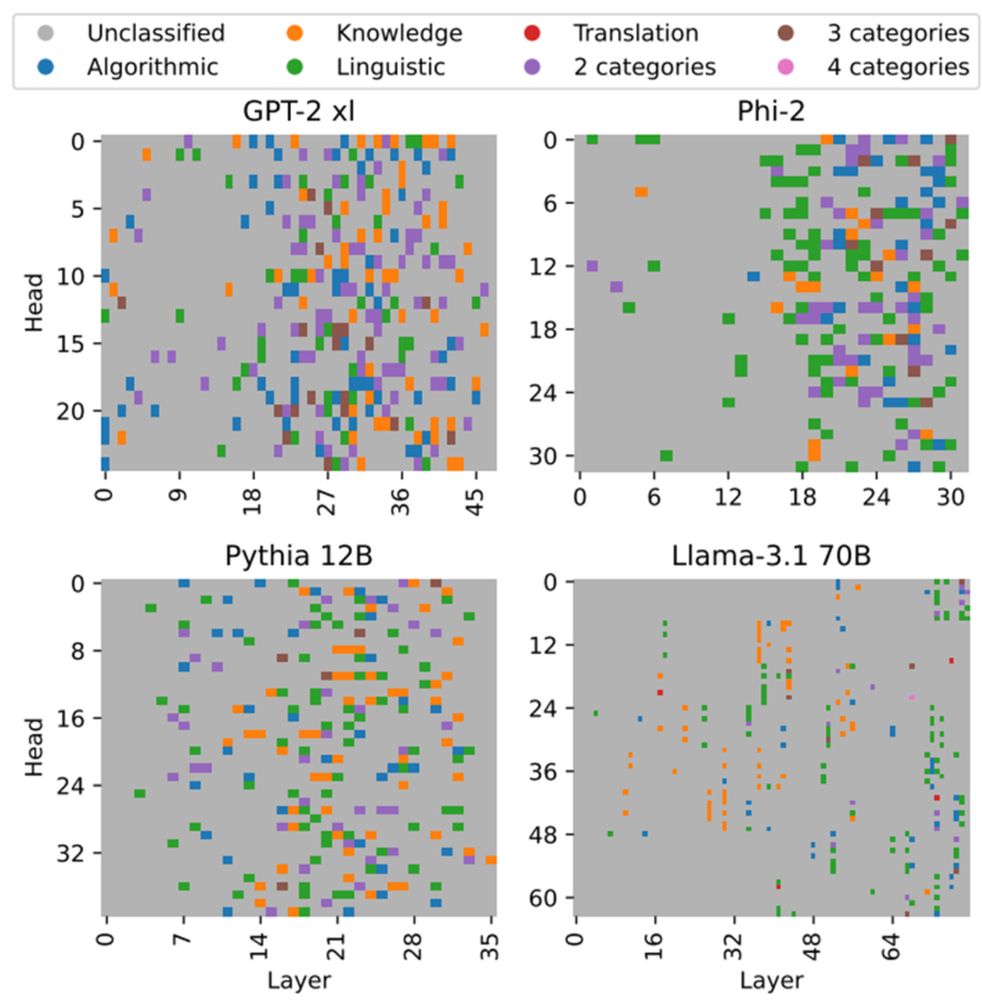

We map the attention heads of Pythia 6.9B and GPT2-xl and manage to identify operations for most heads, reaching 60%-96% in the middle and upper layers (8/10)

(4) In Llama-3.1 models, which use grouped-query attention, grouped heads often implement the same or similar relations (7/10)

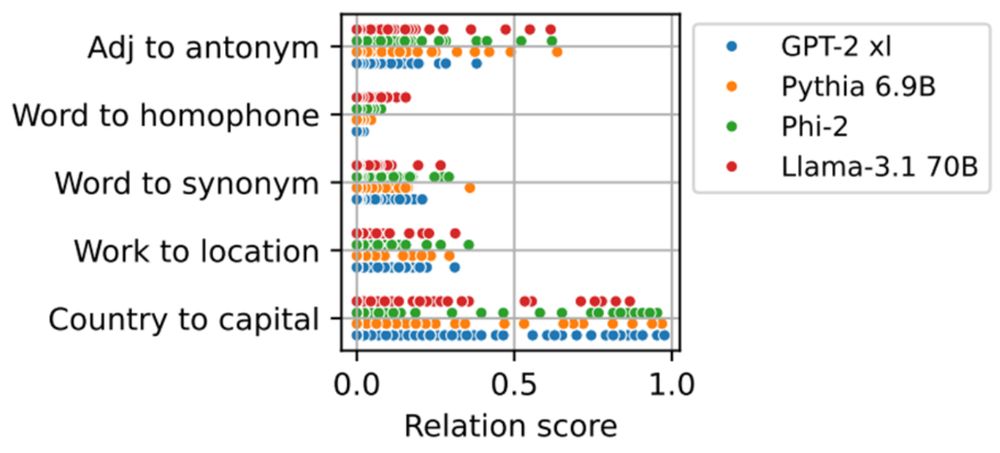

(2) Different heads implement the same relation to varying degrees, which has implications for localization and editing of LLMs (6/10)

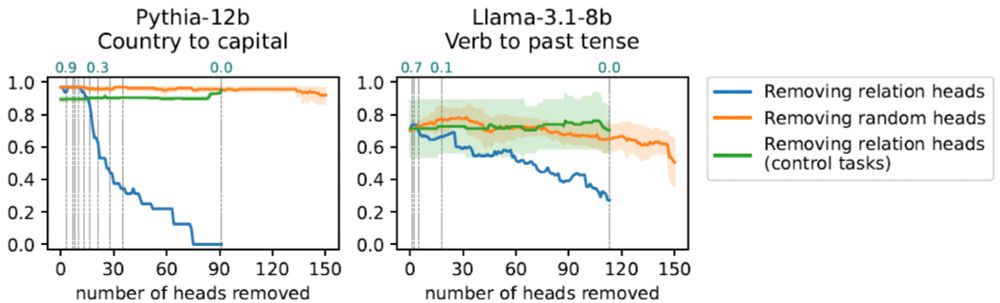

Ablating the heads that implement an operation damages the model’s ability to perform tasks requiring that operation more than ablating other heads does (4/10)
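For readers new to head ablation, a rough sketch of the idea follows. It assumes zero-ablation, i.e. dropping a head's contribution to the attention output entirely; the thread does not say which ablation variant was used. The shapes, the random values, and the helper `attention_output` are hypothetical.

```python
import numpy as np

def attention_output(head_outputs, W_O, ablate=()):
    """Sum per-head contributions to the layer output, skipping heads listed in `ablate`."""
    out = np.zeros(W_O.shape[-1])
    for h in range(head_outputs.shape[0]):
        if h in ablate:
            continue  # zero-ablation: this head contributes nothing
        out += head_outputs[h] @ W_O[h]
    return out

# Toy layer (hypothetical sizes): 4 heads, head dimension 2, model dimension 6.
rng = np.random.default_rng(0)
n_heads, d_head, d_model = 4, 2, 6
head_outputs = rng.normal(size=(n_heads, d_head))  # per-head outputs at one position
W_O = rng.normal(size=(n_heads, d_head, d_model))  # per-head slice of the output projection

full = attention_output(head_outputs, W_O)
ablated = attention_output(head_outputs, W_O, ablate={1})

# The difference is exactly the contribution of the ablated head.
removed = head_outputs[1] @ W_O[1]
print(np.allclose(full - ablated, removed))
```

In an actual experiment one would compare task accuracy after ablating the heads mapped to an operation against accuracy after ablating an equally sized set of other heads.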
