> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!

But combining the two works best! 🚀 5/

Our output-based eval measures how well a description of a feature captures its effect on the model's generation. 3/

Current feature-description pipelines rely on activating inputs, which is costly and ignores how features causally affect model outputs!

We propose efficient output-centric methods that better predict the steering effect of a feature.

New preprint led by @yoav.ml 🧵1/

We also observe interesting operations implemented by heads, like the extension of time periods (day → month → year) and the association of known figures with years relevant to their historical significance (9/10)

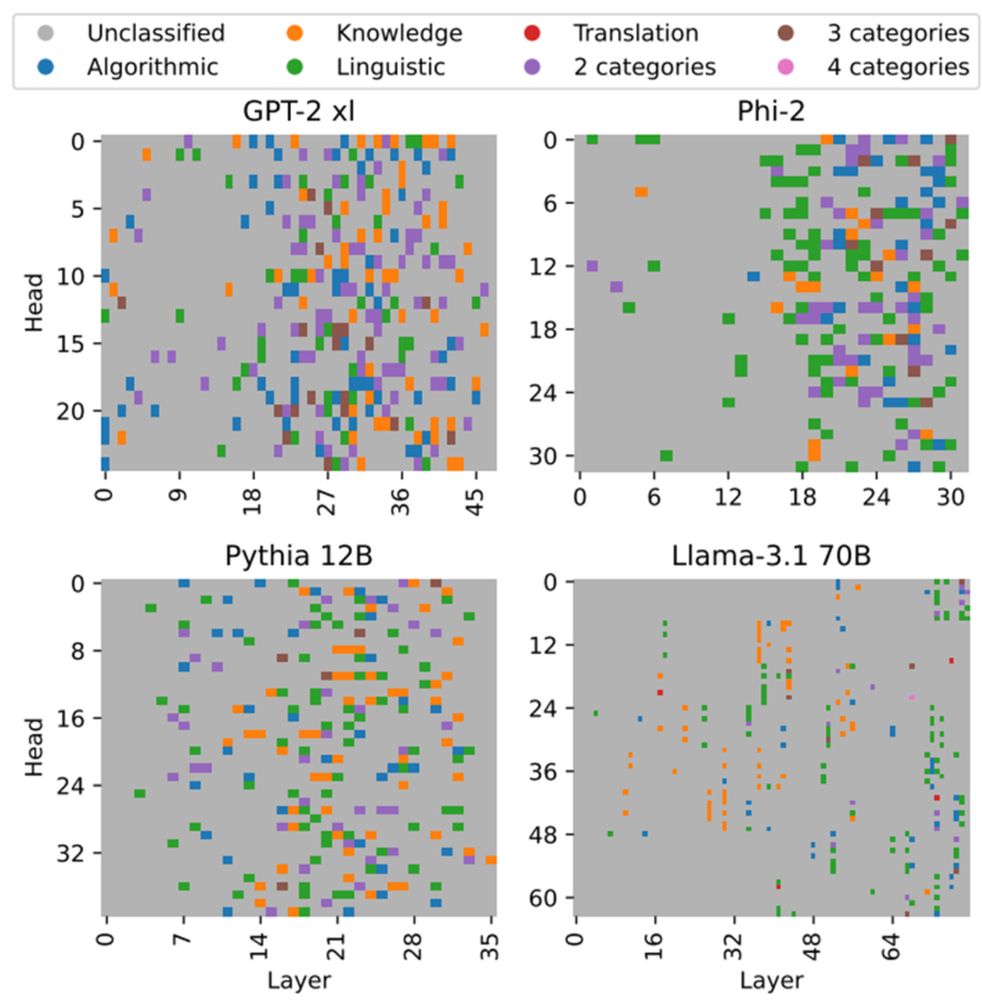

(4) In Llama-3.1 models, which use grouped-query attention, heads in the same group often implement the same or similar relations (7/10)
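
For context on what a "group" is: in grouped-query attention, several query heads share one key/value head. The helper below is a hypothetical sketch that assumes the contiguous grouping used by the Hugging Face transformers Llama implementation, where query head h reads key/value head h // (n_query_heads // n_kv_heads). Llama-3.1-8B, for example, is configured with 32 query heads and 8 key/value heads, so each group has four query heads. The function names are illustrative only.

```python
def kv_head_for(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Hypothetical helper: index of the KV head a query head reads under GQA,
    assuming contiguous groups (query head h -> KV head h // n_rep)."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be divisible by n_kv_heads")
    n_rep = n_query_heads // n_kv_heads  # query heads per KV head
    return query_head // n_rep


def kv_groups(n_query_heads: int, n_kv_heads: int) -> dict[int, list[int]]:
    """Map each KV head to the list of query heads that share it."""
    groups: dict[int, list[int]] = {kv: [] for kv in range(n_kv_heads)}
    for q in range(n_query_heads):
        groups[kv_head_for(q, n_query_heads, n_kv_heads)].append(q)
    return groups


# Example: 32 query heads sharing 8 KV heads, as in Llama-3.1-8B's config.
groups = kv_groups(32, 8)
print(groups[0])  # [0, 1, 2, 3]
```

Under this layout, "heads in the same group" simply means query heads whose indices fall in the same block of four.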

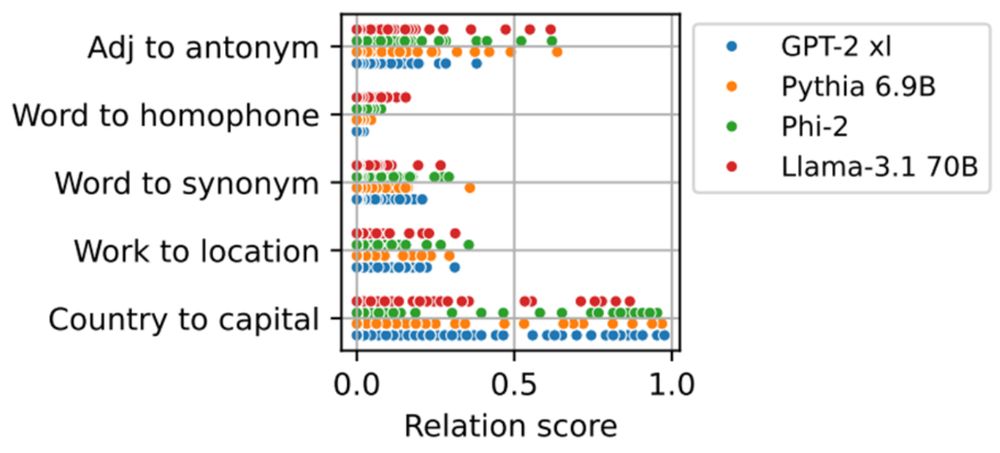

(2) Different heads implement the same relation to varying degrees, which has implications for localization and editing of LLMs (6/10)

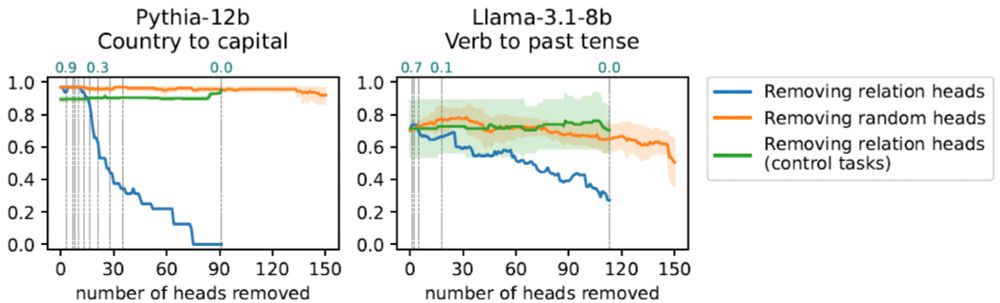

Ablating the heads that implement an operation hurts the model's ability to perform tasks requiring that operation more than ablating other heads does (4/10)
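
A minimal sketch of what ablating a head can mean mechanically, assuming zero-ablation (the thread does not say whether heads are zeroed, mean-ablated, or removed another way). Per-head outputs are concatenated and passed through the output projection `W_O`, so zeroing head i removes exactly its slice of the sum. The function name and toy shapes are hypothetical.

```python
import numpy as np


def attention_output(head_outputs: np.ndarray, W_O: np.ndarray, ablate=()) -> np.ndarray:
    """Hypothetical toy attention block output with optional zero-ablation.

    head_outputs: (n_heads, seq, d_head) per-head outputs before W_O.
    W_O: (n_heads * d_head, d_model) output projection.
    ablate: indices of heads whose outputs are set to zero.
    """
    h = head_outputs.copy()  # leave the caller's array untouched
    for i in ablate:
        h[i] = 0.0  # zero-ablation: drop this head's contribution
    n_heads, seq, d_head = h.shape
    # Concatenate heads per position: head i occupies columns i*d_head:(i+1)*d_head.
    concat = h.transpose(1, 0, 2).reshape(seq, n_heads * d_head)
    return concat @ W_O


rng = np.random.default_rng(0)
n_heads, seq, d_head, d_model = 4, 3, 2, 8
head_outputs = rng.normal(size=(n_heads, seq, d_head))
W_O = rng.normal(size=(n_heads * d_head, d_model))

full = attention_output(head_outputs, W_O)
ablated = attention_output(head_outputs, W_O, ablate=[1])
# The difference is exactly head 1's contribution through its slice of W_O.
contrib = head_outputs[1] @ W_O[1 * d_head:2 * d_head]
print(np.allclose(full - ablated, contrib))  # True
```

The comparison in the post would then run a task with the "operation" heads ablated versus an equal number of other heads ablated; that evaluation loop is not shown here.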

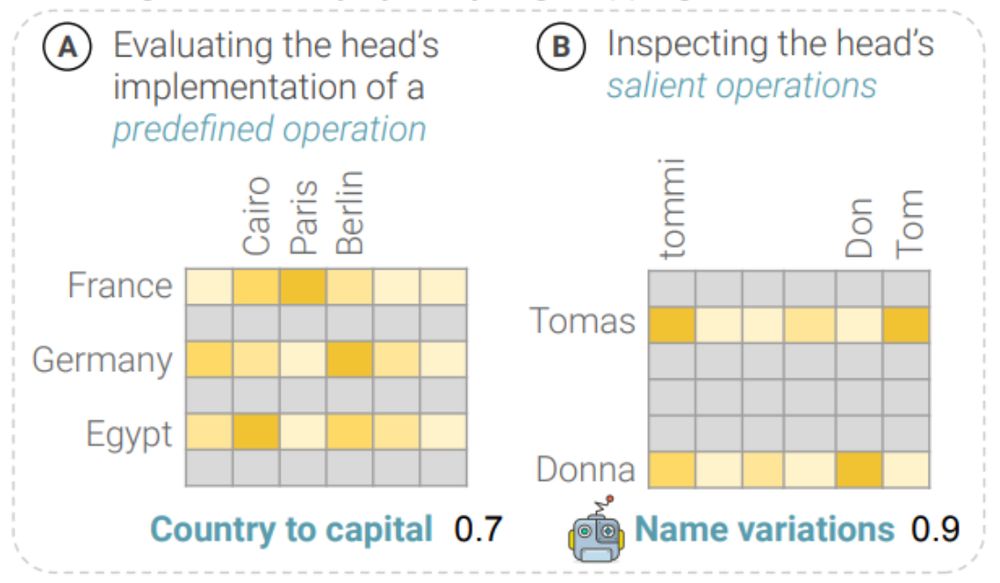

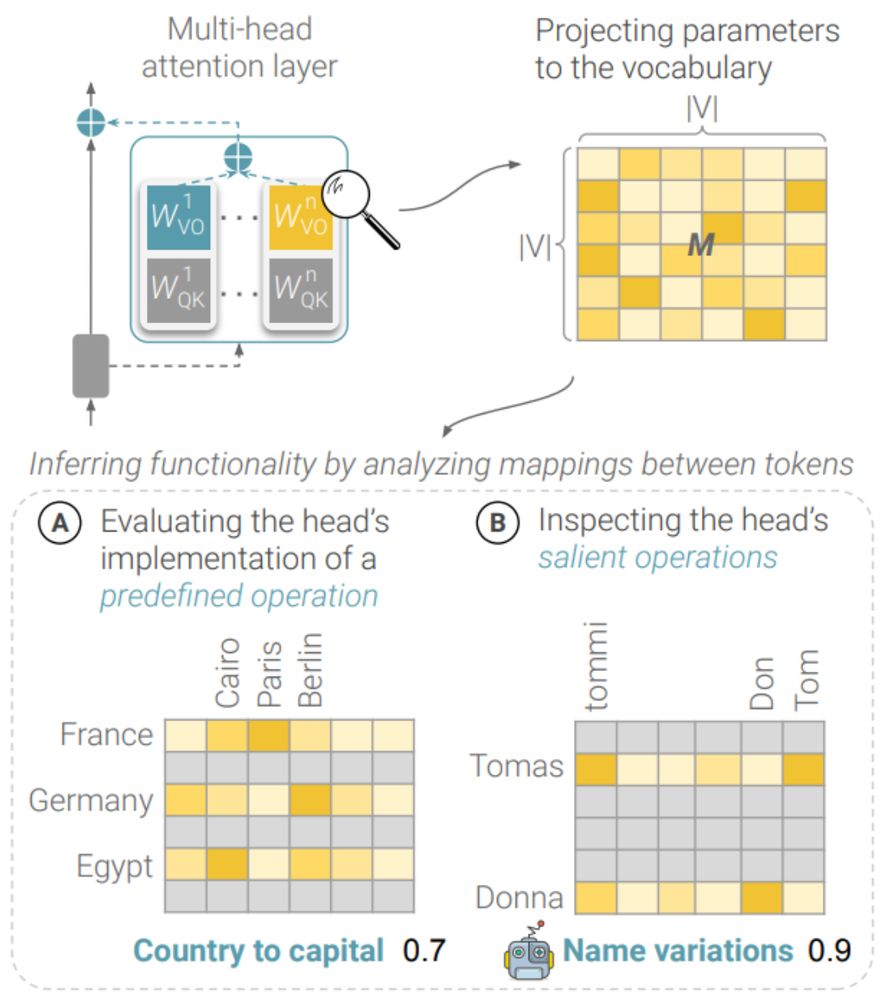

(A) Predefined relations: groups expressing certain relations (e.g. city of a country)

(B) Salient operations: groups for which the head induces the most prominent effect (3/10)
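
One way to check whether a head implements a predefined relation using only a parameter-derived matrix: take the head's token-to-token score matrix `M` (rows are input tokens, columns are output tokens) and count how often the related object lands in the top-k of the subject's row. This scoring rule, the function names, and the toy matrix are all hypothetical; the thread does not give the exact metric.

```python
def top_k(row: list[float], k: int) -> list[int]:
    """Indices of the k largest entries in a row."""
    return sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]


def relation_score(M: list[list[float]], pairs: list[tuple[int, int]], k: int = 1) -> float:
    """Hypothetical score: fraction of (subject, object) pairs whose object
    is among the top-k outputs of the subject's row in M."""
    if not pairs:
        raise ValueError("need at least one relation pair")
    hits = sum(1 for s, o in pairs if o in top_k(M[s], k))
    return hits / len(pairs)


# Toy vocabulary and a made-up head matrix (illustrative values only).
vocab = ["France", "Italy", "Paris", "Rome"]
idx = {tok: i for i, tok in enumerate(vocab)}
M = [
    [0.0, 0.1, 0.9, 0.2],  # France -> Paris ranks first
    [0.3, 0.0, 0.8, 0.4],  # Italy -> Paris ranks first, Rome second
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
# Relation "city of a country": country -> city.
pairs = [(idx["France"], idx["Paris"]), (idx["Italy"], idx["Rome"])]

print(relation_score(M, pairs, k=1))  # 0.5
print(relation_score(M, pairs, k=2))  # 1.0
```

A fractional score like this naturally expresses a head implementing a relation "to some degree" rather than all-or-nothing.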

We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨

A new preprint with Amit Elhelo 🧵 (1/10)
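
A minimal sketch of reading a head's behavior from its parameters, assuming the common approach of projecting the head's value-output (OV) circuit into vocabulary space: `M = E W_V W_O U`, where `E` is the token embedding matrix and `U` the unembedding. This ignores layer norms and positional effects, and the thread does not spell out MAPS's exact construction, so treat the formula, shapes, and function names as assumptions.

```python
import numpy as np


def vocab_mapping(E: np.ndarray, W_V: np.ndarray, W_O: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Hypothetical parameter-only mapping for one head.

    E: (vocab, d_model) token embeddings; W_V: (d_model, d_head);
    W_O: (d_head, d_model); U: (d_model, vocab) unembedding.
    Returns (vocab, vocab): row s scores each output token when the head reads token s.
    """
    return E @ W_V @ W_O @ U


def top_outputs(M: np.ndarray, token_id: int, k: int = 3) -> list[int]:
    """Output tokens the head most promotes for a given input token."""
    return [int(j) for j in np.argsort(-M[token_id])[:k]]


# Toy random weights (assumption: tied embeddings, U = E.T, for illustration only).
rng = np.random.default_rng(0)
vocab, d_model, d_head = 10, 8, 4
E = rng.normal(size=(vocab, d_model))
W_V = rng.normal(size=(d_model, d_head))
W_O = rng.normal(size=(d_head, d_model))
U = E.T
M = vocab_mapping(E, W_V, W_O, U)
print(M.shape)  # (10, 10)
print(top_outputs(M, token_id=3))
```

No forward passes over inputs are needed: the matrix comes entirely from weights, which is what makes a parameter-based analysis cheap.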