mariusmosbach.com

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

W2 (TT W3) Professorship in Computer Science "AI for People & Society"

@saarland-informatics-campus.de/@uni-saarland.de is looking to appoint an outstanding individual in the field of AI for people and society who has made significant contributions in one or more of the following areas:

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

You can find me at these posters:

Tuesday: How Much Can We Forget about Data Contamination? icml.cc/virtual/2025...

Paper: arxiv.org/abs/2410.03249

👇🧵

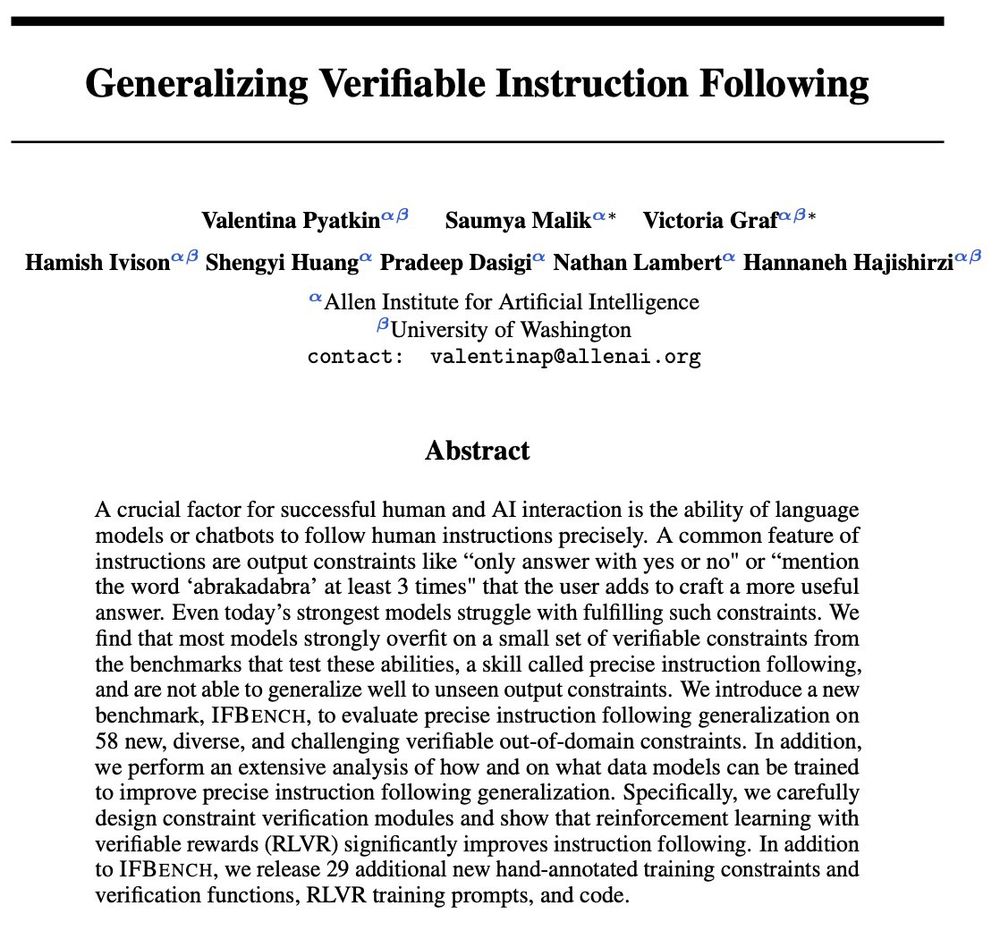

But the set of constraints and verifier functions is limited and most models overfit on IFEval.

We introduce IFBench to measure model generalization to unseen constraints.

Paper: arxiv.org/abs/2506.09301 to appear @ #ACL2025 (Main)

Behind the Research of AI:

We look behind the scenes, beyond the polished papers 🧐🧪

If this sounds fun, check out our first "official" episode with the awesome Gauthier Gidel

from @mila-quebec.bsky.social :

open.spotify.com/episode/7oTc...

🤖 ML track: algorithms, math, computation

📚 Socio-technical track: policy, ethics, human participant research

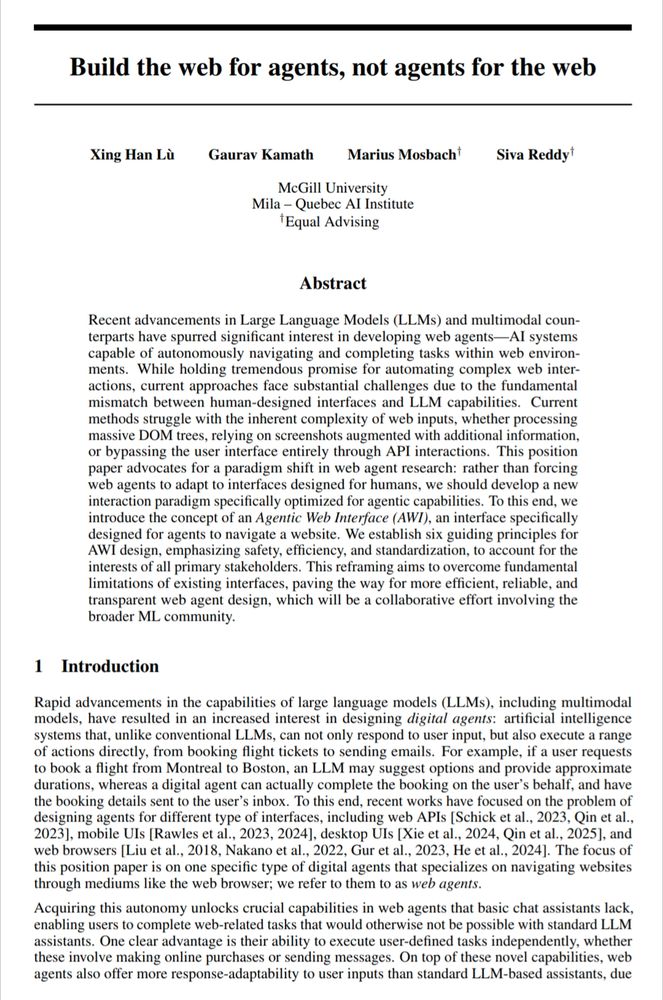

This position paper argues that rather than forcing web agents to adapt to UIs designed for humans, we should develop a new interface optimized for web agents, which we call Agentic Web Interface (AWI).

arxiv.org/abs/2506.10953

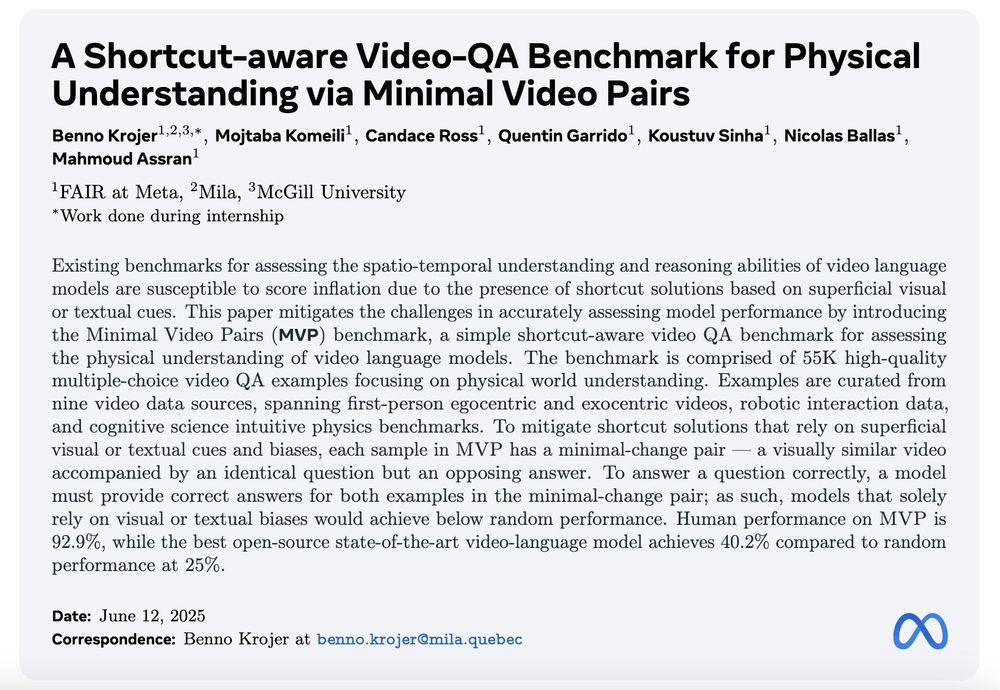

We ask 🤔

What subtle shortcuts are VideoLLMs taking on spatio-temporal questions?

And how can we instead curate shortcut-robust examples at large scale?

We release: MVPBench

Details 👇🔬

@interspeech.bsky.social

A Robust Model for Arabic Dialect Identification using Voice Conversion

Paper 📝 arxiv.org/pdf/2505.24713

Demo 🎙️ https://shorturl.at/rrMm6

#Arabic #SpeechTech #NLProc #AI #Speech #ArabicDialects #Interspeech2025 #ArabicNLP

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

The Actionable Interpretability Workshop at #ICML2025 has moved its submission deadline to May 19th. More time to submit your work 🔍🧠✨ Don’t miss out!

🖇️To present my paper "Superlatives in Context", showing how the interpretation of superlatives is highly context-dependent and often implicit, and how LLMs handle such semantic underspecification

🖇️And we will present RewardBench on Friday

Reach out if you want to chat!

Vision is much more expressive than language, so some new mech interp rules apply:

Happy to share our “Decoding Vision Transformers: the Diffusion Steering Lens” was accepted at the CVPR 2025 Workshop on Mechanistic Interpretability for Vision!

(1/7)

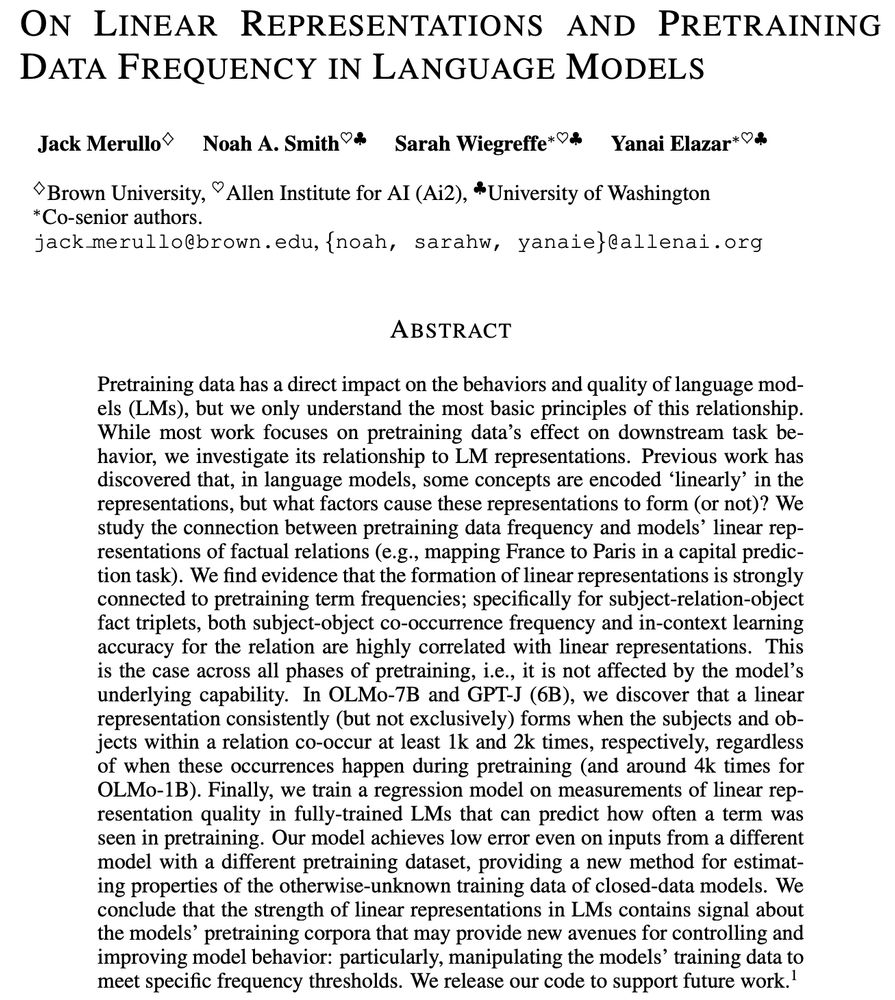

"On Linear Representations and Pretraining Data Frequency in Language Models":

We provide an explanation for when & why linear representations form in large (or small) language models.

Led by @jackmerullo.bsky.social, w/ @nlpnoah.bsky.social & @sarah-nlp.bsky.social

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

From a simple observational measure of overthinking, we introduce Thought Terminator, a black-box, training-free decoding technique where reasoning models (RMs) set their own deadlines and follow them.

arxiv.org/abs/2504.13367

Also, we are organizing a workshop at #ICML2025 which is inspired by some of the questions discussed in the paper: actionable-interpretability.github.io

🔗: arxiv.org/abs/2504.07128
