https://sbordt.github.io/

Paper: arxiv.org/abs/2410.03249

👇🧵

arxiv.org/abs/2508.11441

tverven.github.io/tiai-seminar/

Call for ≤2 page extended abstract submissions by October 15 now open!

📍 Ellis UnConference in Copenhagen

📅 Dec. 2

🔗 More info: sites.google.com/view/theory-...

@gunnark.bsky.social @ulrikeluxburg.bsky.social @emmanuelesposito.bsky.social

imprs.is.mpg.de/application

ellis.eu/news/ellis-p...

How much can we forget about Data Contamination?

by

@sbordt.bsky.social

Watch here: youtu.be/T9Y5-rngOLg

You can find me at these posters:

Tuesday: How Much Can We Forget about Data Contamination? icml.cc/virtual/2025...

arxiv.org/abs/2402.02870

This led us to write a position paper (accepted at #ICML2025) that attempts to identify the problem and to propose a solution.

arxiv.org/abs/2402.02870

👇🧵

This work presents a surprisingly strong positive result for SHAP, showing that a simple sampling modification makes it possible to reliably detect features that don't influence the model.

arxiv.org/abs/2503.23111

1. Only 1 round of back-and-forth between authors & reviewers. The review process should not be an endless back and forth. It shouldn't be possible to get your paper accepted by exhausting reviewers.

Check out our poster today at the NeurIPS ATTRIB workshop (3-4:30pm)!

💡 TL;DR: In the large-data regime, a few instances of data contamination matter less than you might think.

In this blog post, I show how to test LLMs for contamination using Kaggle competitions (of course, there is contamination).

sbordt.substack.com/p/data-conta...

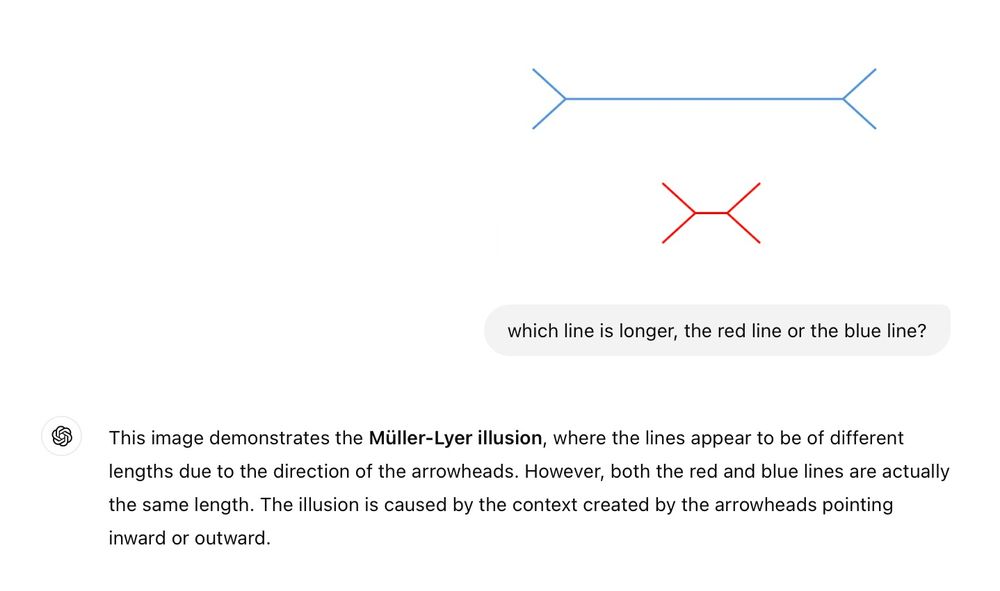

(more examples below)
