www.nytimes.com/2025/11/17/o...

I'm recruiting PhDs to work on:

🎯 Stat foundations of multi-agent collaboration

🌫️ Model uncertainty & meta-cognition

🔎 Interpretability

💬 LLMs in behavioral science

Martina's internship project proposes trace-monitoring metrics and classifiers that can detect, midway through an LLM reasoning trace, that the trace is going to fail. The approach saves up to 70% of token usage and even increases accuracy by 2%-3%.

Our new paper (arxiv.org/abs/2510.10494) proposes latent-trajectory signals from LLMs' hidden states to identify high-quality reasoning, cutting inference costs by up to 70% while maintaining accuracy.

Intersecting mechanistic interpretability and health AI 😎

We trained and interpreted sparse autoencoders on MAIRA-2, our radiology MLLM. We found a range of human-interpretable radiology reporting concepts, but also many uninterpretable SAE features.

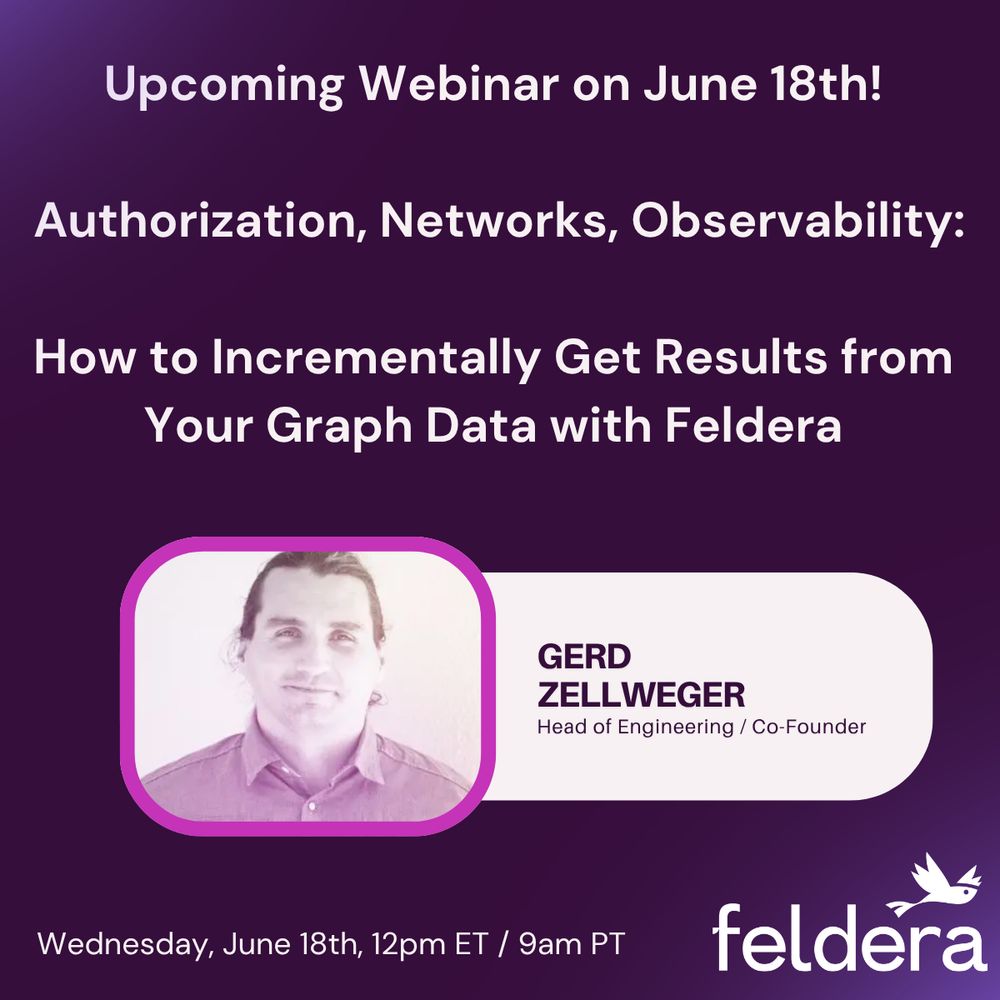

Stop re-running complex recursive queries when your graph data changes. Feldera incrementally evaluates recursive graph computations. Learn to easily build these mechanisms with #SQL, without the hassle of constant recomputation.

tinyurl.com/rb5my7d8

youtube.com/shorts/IF3bz...

If you’re around and want to chat about how incremental computing can make your #SparkSQL workloads go from hours to seconds — let’s connect.

Grab some time here: calendly.com/matt-feldera...

#DataAISummit #DataEngineering

Every eval chokes under hill climbing. If we're lucky, there’s an early phase where *real* learning (both model and community) can occur. I'd argue that a benchmark’s value lies entirely in that window. So the real question is what did we learn?
