Excited to land in print in early 2026! Lots of improvements coming soon.

Thanks for the support!

hubs.la/Q03Tc37Q0

What on earth is "Lerns Geschichte" ("learn history")?

Two minutes later on the radio: "Go learn a bit of @geschichte.fm, then ..." 😲

Submitted the paper 24 hours before the deadline 😍

It's integrated into the OLMo trainer here: github.com/allenai/OLMo...

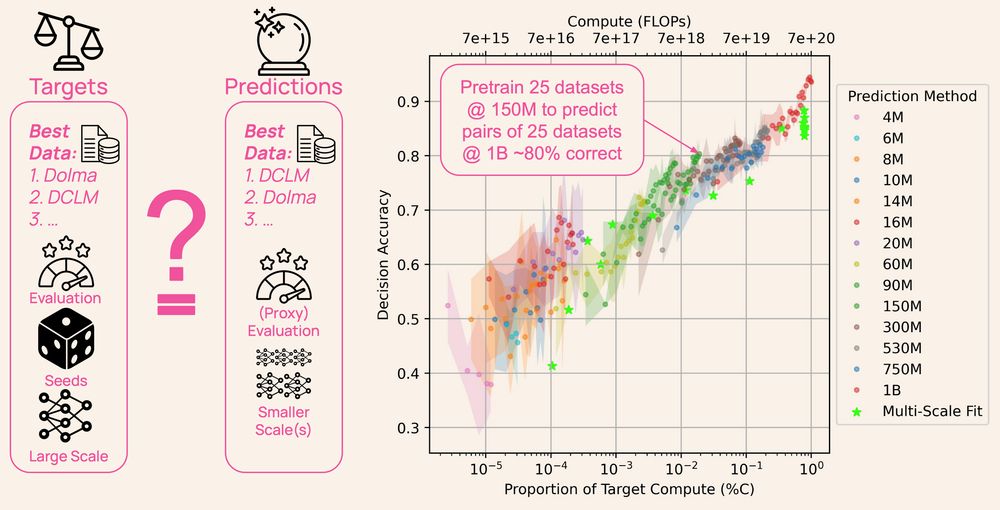

DataDecide opens up the process: 1,050 models, 30k checkpoints, 25 datasets & 10 benchmarks 🧵

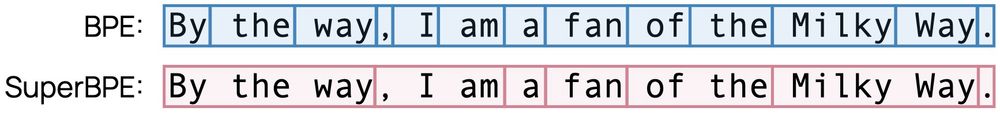

We do this at an unprecedented scale and in real time: finding matching text between model outputs and 4 trillion training tokens within seconds. ✨

Introducing OLMoTrace, a new feature in the Ai2 Playground that begins to shed some light. 🔦
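The post doesn't say how the matching works. As a toy illustration only, the sketch below finds spans of a model output that occur verbatim in a tiny "training corpus" by building a suffix array and binary-searching it, which is one standard way to support exact-match lookups. The function names are hypothetical, and this is not OLMoTrace's implementation, which has to index trillions of tokens rather than a six-word list.

```python
def build_suffix_array(corpus):
    """Naive suffix array over a token list: suffix start indices in sorted order.
    Sorting full slices is slow but fine for toy inputs."""
    return sorted(range(len(corpus)), key=lambda i: corpus[i:])


def occurs(corpus, sa, span):
    """True if `span` (a list of tokens) appears verbatim somewhere in `corpus`."""
    # Binary search for the first suffix whose length-len(span) prefix is >= span.
    lo, hi = 0, len(sa)
    while lo < hi:
        mid = (lo + hi) // 2
        if corpus[sa[mid]:sa[mid] + len(span)] < span:
            lo = mid + 1
        else:
            hi = mid
    # A match exists iff that suffix actually starts with `span`.
    return lo < len(sa) and corpus[sa[lo]:sa[lo] + len(span)] == span


def maximal_matches(output, corpus, sa, min_len=2):
    """Return (start, end) spans of `output` that occur verbatim in `corpus`.

    From each start position the span is extended greedily while it still
    occurs; spans contained in an earlier reported span are dropped."""
    spans = []
    for start in range(len(output)):
        end = start
        while end < len(output) and occurs(corpus, sa, output[start:end + 1]):
            end += 1
        # Ends never decrease across starts, so comparing with the last kept
        # span is enough to skip contained spans.
        if end - start >= min_len and (not spans or end > spans[-1][1]):
            spans.append((start, end))
    return spans


corpus = "the cat sat on the mat".split()
output = "a cat sat on a mat".split()
sa = build_suffix_array(corpus)
for s, e in maximal_matches(output, corpus, sa):
    print(" ".join(output[s:e]))  # prints "cat sat on"
```

Extending one token at a time works because any prefix of an occurring span also occurs; a production system would replace the naive sort with a scalable index built offline.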

We do this on unprecedented scale and in real time: finding matching text between model outputs and 4 trillion training tokens within seconds. ✨

When pretraining at 8B scale, SuperBPE models consistently outperform the BPE baseline on 30 downstream tasks (+8% MMLU), while also being 27% more efficient at inference time. 🧵

🐟 our largest & best fully open model to date

🐠 right up there with similar-size weights-only models from big companies on popular benchmarks

🐡 but we used way less compute, & all our data, checkpoints, code, and recipe are free & open

Made a nice plot of our post-trained results! ✌️

Built for scale, olmOCR handles many document types with high throughput. Run it on your own GPU for free: at over 3,000 tokens/s, that's equivalent to $190 per million pages, or 1/32 the cost of GPT-4o!
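The post gives the throughput and the resulting price but not the other inputs behind it (tokens per page, GPU rental price), so the sketch below only shows how such a per-million-pages figure is computed. The function name and its `tokens_per_page` and `gpu_dollars_per_hour` parameters are hypothetical placeholders you fill in yourself; they are not olmOCR settings.

```python
def cost_per_million_pages(tokens_per_second: float,
                           tokens_per_page: float,
                           gpu_dollars_per_hour: float) -> float:
    """Dollar cost to process one million pages on a single GPU.

    All three inputs are caller-supplied assumptions; the post states only
    the throughput (over 3,000 tokens/s) and the result (about $190)."""
    seconds_per_page = tokens_per_page / tokens_per_second
    hours_per_million_pages = seconds_per_page * 1_000_000 / 3600
    return hours_per_million_pages * gpu_dollars_per_hour


# The post's two headline numbers also imply the GPT-4o figure it compares against.
OLMOCR_COST = 190                          # $ per million pages (from the post)
RATIO = 32                                 # "1/32 the cost of GPT-4o" (from the post)
implied_gpt4o_cost = OLMOCR_COST * RATIO   # $ per million pages implied by the ratio
```

Cost scales linearly with page length and GPU price and inversely with throughput, so doubling tokens per second halves the cost per page.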

As phones get faster, more AI will happen on device. With OLMoE, researchers, developers, and users can get a feel for this future: fully private LLMs, available anytime.

Learn more from @soldaini.net👇 youtu.be/rEK_FZE5rqQ

Interviewing OLMo 2 leads: Open secrets of training language models

What we have learned and are going to do next.

YouTube: https://buff.ly/40IlSFF

Podcast / notes:

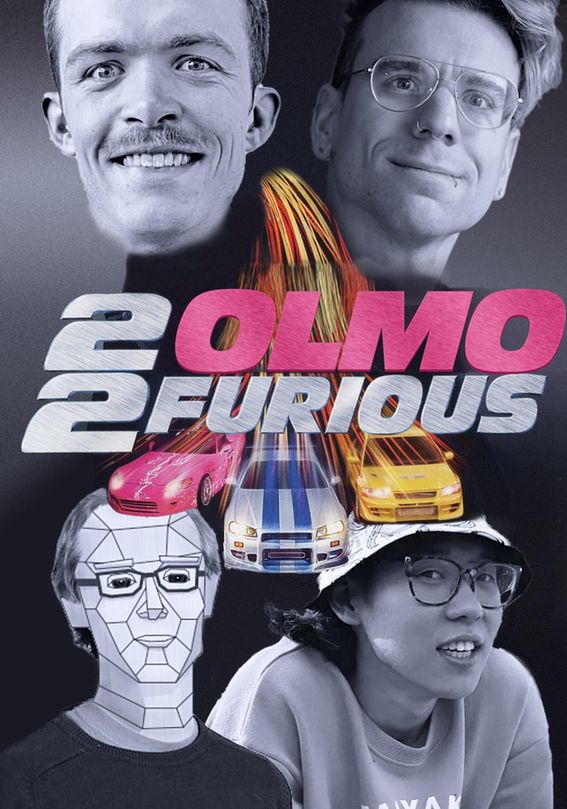

We just released our paper "2 OLMo 2 Furious"

Can't stop us in 2025. Links below.

LLMs give bland answers because they produce the average of what anyone would have said on the Internet.

github.com/allenai/awes...

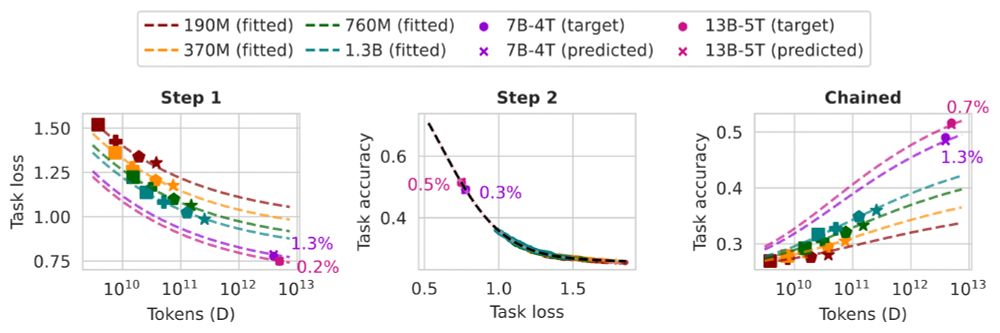

We develop task scaling laws and model ladders, which predict the accuracy of the OLMo 2 7B & 13B models on individual tasks to within 2 points of absolute error. Doing so costs only 1% of the compute used to pretrain those models.