You can train a neural network to *just do things* -- such as *predict the optimum of a function*. But how do you get a big training dataset of "functions with known optima"?

Read the blog post to find out! (link 👇)

This might seem an oddly specific property but the good ol' binding problem reflects a fundamental primitive of cognition, epistemology, you name it.

My current advice is to use the stderr of the median (bootstrapped), with a separate metric for reliability if needed.

The latter is less standard; we have been reporting e.g. the 80% performance quantile and its stderr (bootstrapped).
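For concreteness, here is a minimal sketch of what a bootstrapped stderr of the median and of an 80% quantile could look like. The function names, the number of resamples, and the example scores are all hypothetical, and reading "80% perf quantile" as the 0.8 quantile of per-run scores is an assumption (it could equally mean the level that 80% of runs exceed).

```python
import numpy as np

def bootstrap_stderr(scores, statistic, n_boot=2000, seed=0):
    """Return (point estimate, bootstrap standard error) of `statistic` over runs."""
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    # Resample runs with replacement: one row per bootstrap replicate.
    idx = rng.integers(0, scores.size, size=(n_boot, scores.size))
    replicates = statistic(scores[idx], axis=1)
    # The spread of the statistic across replicates estimates its standard error.
    return statistic(scores), replicates.std(ddof=1)

def quantile_80(x, axis=None):
    # Assumption: "80% quantile" is taken to mean the 0.8 quantile of the scores.
    return np.quantile(x, 0.8, axis=axis)

# Hypothetical per-run performance scores (one failed run, one unusually good run).
scores = np.array([0.71, 0.74, 0.69, 0.90, 0.73, 0.12, 0.75, 0.72, 0.70, 0.76])

median, median_se = bootstrap_stderr(scores, np.median)
q80, q80_se = bootstrap_stderr(scores, quantile_80)
print(f"median = {median:.3f} +/- {median_se:.3f}")
print(f"80% quantile = {q80:.3f} +/- {q80_se:.3f}")
```

The median is barely affected by the single failed run, which is why a separate quantile-based number is reported for reliability.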

ChatGPT and Claude did pretty well in different ways. Kimi did the usual (sounds good but meaning falls apart).

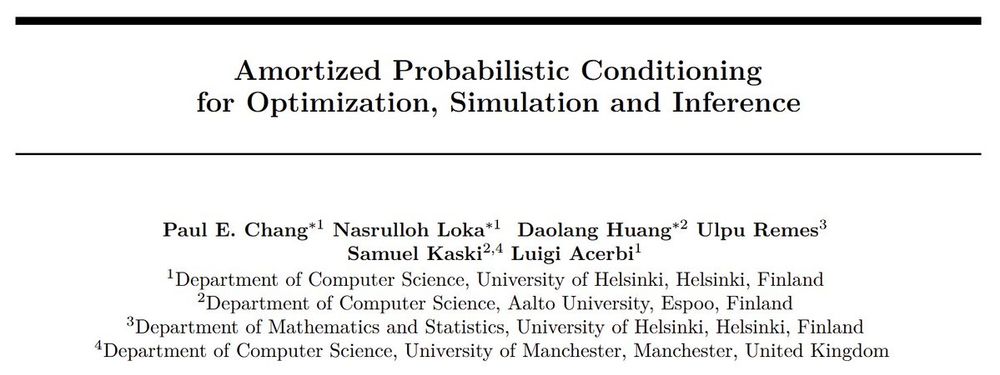

You are still in time to submit a short abstract on amortized (simulator-based) inference (SBI), neural processes, prior-fitted networks (PFNs), amortized experimental design, foundation models, and related applications.

Call: sites.google.com/view/amortiz...

Only a short abstract (½ page), so go ahead!

Workshop: Dec 2, 2025, co-located with EurIPS.

Website: sites.google.com/view/amortiz...

Do you love letter salads such as NPs, PFNs, NPE, SBI, BED?

Then no place is better than the Amortized ProbML workshop we are organizing at #ELLIS UnConference.

How we train an open everything model on a new pretraining environment with releasable data (Common Corpus) with an open source framework (Nanotron from HuggingFace).

www.sciencedirect.com/science/arti...

We study how two cognitive constraints—action consideration set size & policy complexity—interact in context-dependent decision making, and how humans exploit their synergy to reduce behavioral suboptimality.

osf.io/preprints/ps...

EurIPS is a community-organized conference where you can present accepted NeurIPS 2025 papers. It is endorsed by @neuripsconf.bsky.social and @nordicair.bsky.social and co-developed by @ellis.eu

eurips.cc

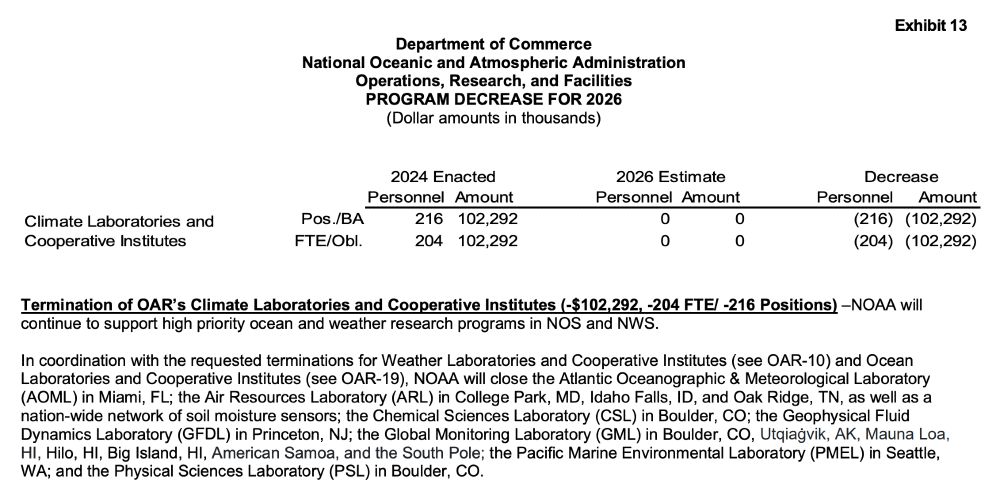

GFDL, NSSL, GML, etc.

This appears to also end the US greenhouse gas sampling network, including at Mauna Loa, the oldest continuous carbon dioxide monitoring site on Earth.

www.commerce.gov/sites/defaul...

youtu.be/iJC455bmkbo

The Trump regime is the most corrupt in US history—it’s worth repeating ad nauseam

A shiver goes through my spine at the thought that LinkedIn might be a viable alternative.

I tried making the transition, but talking about AI here is just really fraught in ways that are tough to mitigate & make it hard to have good discussions (the point of social!). Maybe it changes

Read more 👇

He should have had a guest appearance in the League of Extraordinary Gentlemen.

(The man on the right is Santiago Ramón y Cajal.)

Related: Oliver Sacks repping a 600 lb squat... Incredible.

#AISTATS2025

Poster session 1

Place: Hall A-E

Time: Sat 3 May 3 p.m. — 6 p.m.

Poster number: 23

Please share widely!

www.reddit.com/r/AskHistori...