Do you love letter salads such as NPs, PFNs, NPE, SBI, BED?

Then no place is better than the Amortized ProbML workshop we are organizing at #ELLIS UnConference.

You can train a neural network to *just do things* -- such as *predict the optimum of a function*. But how do you get a big training dataset of "functions with known optima"?

Read the blog post to find out! (link 👇)

Go talk to my incredible PhD students @huangdaolang.bsky.social & @chengkunli.bsky.social + amazing collaborator Severi Rissanen.

@univhelsinkics.bsky.social FCAI

Read the full paper in the #AABI2025 Proceedings Track!

Work by @chengkunli.bsky.social, @abacabadabacaba.bsky.social, Petrus Mikkola, & yours truly.

📜 Paper: arxiv.org/abs/2504.11554

💻 Code: github.com/acerbilab/no...

📄 Overview: acerbilab.github.io/normalizing-...

Let us know what you think!

In sum: NFR offers a practical way to perform Bayesian inference for expensive models by recycling existing log-likelihood evaluations (e.g., from MAP). You get a usable posterior *and* model evidence estimate directly!

Or, as GPT-4.5 put it, "Fast Flows, Not Slow Chains".

Across 5 increasingly gnarly and realistic problems, up to D=12, NFR wins or ties on every metric against strong baselines.

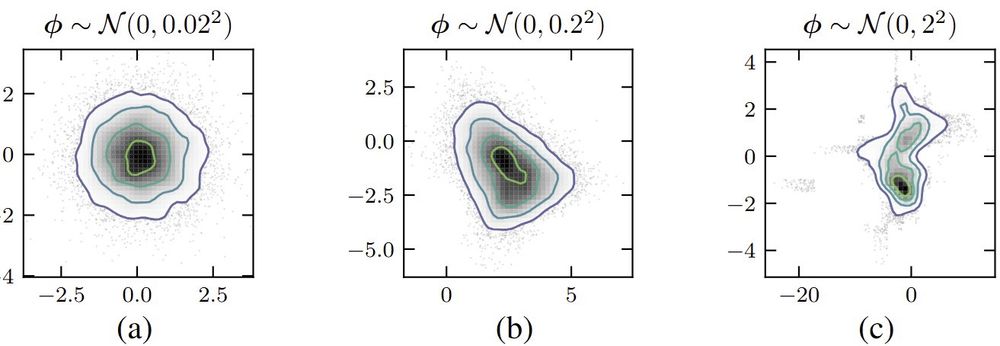

We use annealed optimization (β‑tempering) so the model morphs smoothly from the base distribution to the target posterior.
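
A minimal sketch of what β-tempering could look like, assuming the common geometric interpolation between base and target log-densities. The thread does not spell out the exact form or schedule, so `tempered_log_density`, the linear schedule, and the toy densities are hypothetical illustrations:

```python
import numpy as np

def tempered_log_density(log_base, log_target, beta):
    """Geometric bridge: beta=0 gives the base, beta=1 gives the target."""
    return (1.0 - beta) * np.asarray(log_base) + beta * np.asarray(log_target)

# Hypothetical linear annealing schedule from base (0) to target (1).
betas = np.linspace(0.0, 1.0, 5)

x = np.array([-1.0, 0.0, 2.0])
log_base = -0.5 * x**2                      # unnormalized standard normal
log_target = -0.5 * (x - 2.0) ** 2 / 0.25   # unnormalized narrow bump at x=2

# One intermediate regression target per annealing step.
bridge = [tempered_log_density(log_base, log_target, b) for b in betas]
```

Fitting against each intermediate target in turn lets the model move gradually instead of jumping straight to a sharply peaked posterior.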

We place a prior on flow parameters - 👉a prior over distributions👈 - to tame them!

This regularizes the learned posterior shape, preventing weird fits.
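
A rough sketch of how a prior on parameters acts as a regularizer, assuming an independent Gaussian prior (the thread does not say which prior is used; `neg_log_gaussian_prior` and `map_objective` are hypothetical names):

```python
import numpy as np

def neg_log_gaussian_prior(theta, mean=0.0, std=1.0):
    """Negative log-density of an independent Gaussian prior on the parameters."""
    theta = np.asarray(theta, dtype=float)
    z = (theta - mean) / std
    return (0.5 * np.sum(z**2)
            + theta.size * np.log(std)
            + 0.5 * theta.size * np.log(2.0 * np.pi))

def map_objective(fit_loss, theta, mean=0.0, std=1.0):
    """Data-fit loss plus prior penalty: minimizing this gives a MAP estimate."""
    return fit_loss + neg_log_gaussian_prior(theta, mean, std)

# Parameters far from the prior mean pay a larger penalty.
penalty_near = neg_log_gaussian_prior([0.0, 0.0])
penalty_far = neg_log_gaussian_prior([1.0, 2.0])
```

Since the flow parameters define a distribution, penalizing them this way amounts to preferring some posterior shapes over others.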

We censor values below a very-low-density threshold, focusing training on the high‑probability regions that matter.
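
One simple way to realize this kind of censoring, as a sketch only: for observations below the threshold, penalize the model only when it predicts a value above the threshold. The squared-error form and the name `censored_squared_loss` are assumptions for illustration, and the loss in the paper may differ:

```python
import numpy as np

def censored_squared_loss(pred, obs, threshold):
    """Squared error above the threshold; one-sided penalty for censored points."""
    pred = np.asarray(pred, dtype=float)
    obs = np.asarray(obs, dtype=float)
    censored = obs < threshold
    # Uncensored points: ordinary squared error against the observed value.
    err = pred - obs
    # Censored points: we only know the value is "below threshold", so
    # penalize the prediction only if it rises above the threshold.
    err_censored = np.maximum(pred - threshold, 0.0)
    return np.sum(np.where(censored, err_censored**2, err**2))

# Log-density predictions vs. observations, censoring below -10.
loss = censored_squared_loss(
    pred=[-100.0, -5.0, 0.0],
    obs=[-200.0, -50.0, 0.5],
    threshold=-10.0,
)
```

Here the first point costs nothing (both values are far below the threshold), so the fit spends no effort matching exact values deep in the tails.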

Our idea: Use a **Normalizing Flow** not just for density estimation, but as a *regression* model!

NFR directly fits the flow to existing log-density evaluations, learning the posterior shape *and* the normalizing constant C in one go.
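
To make the "density model as regression" idea concrete, here is a toy 1D sketch in which a Gaussian stands in for the flow and plain least squares stands in for the actual training loss (both are simplifying assumptions, not the method from the paper). The model is log q(x) + log C, fitted to unnormalized log-density evaluations; `fit_gaussian_log_density` is a hypothetical helper:

```python
import numpy as np

def fit_gaussian_log_density(x, log_p_unnorm):
    """Least-squares fit of log N(x; mu, sigma^2) + log C to log-density values."""
    # log N(x; mu, sigma^2) + log C is a quadratic a*x^2 + b*x + c in x.
    a, b, c = np.polyfit(x, log_p_unnorm, deg=2)
    if a >= 0:
        raise ValueError("fitted quadratic is not a normalizable log-density")
    sigma2 = -1.0 / (2.0 * a)
    mu = b * sigma2
    # Whatever offset the normalized Gaussian cannot explain is log C.
    log_C = c + mu**2 / (2.0 * sigma2) + 0.5 * np.log(2.0 * np.pi * sigma2)
    return mu, np.sqrt(sigma2), log_C

# Pretend these evaluations are left over from an optimization run.
x = np.linspace(-1.0, 4.0, 25)
true_mu, true_sigma, true_log_C = 1.5, 0.7, 3.0
log_p = (true_log_C
         - 0.5 * np.log(2.0 * np.pi * true_sigma**2)
         - (x - true_mu) ** 2 / (2.0 * true_sigma**2))

mu, sigma, log_C = fit_gaussian_log_density(x, log_p)
```

Because q is normalized by construction, the fitted offset plays the role of the log normalizing constant, which is why shape and evidence estimate come out of the same fit.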

Normalizing Flow Regression (NFR) — an offline Bayesian inference method.

What if you could get a full posterior using *only* the evaluations you *already* have, maybe from optimization runs?

acerbilab.github.io/amortized-co...

You judge the result...

(text continues 👇)

Nice to see sample-efficient Bayesian inference for expensive computational models used in the wild!

(Although we feel a bit more pressure to triple-check that our implementation has no bugs...)

Then started the "Slowdown" ending and, erm, superintelligences need to speak in English in Slack and cannot steganography their way out?

Yep, this is the Studio Cut.

I just tried your prompt and it seems to have found something, but, for example, it did not ignore reviews, and I'm not sure about the highly-cited part.

We still cannot quite generate correct plots for slides and papers just by asking nicely, but this is way better than anything we had before.
