It's often said that hippocampal replay, which helps build a model of the world, is biased by reward. But canonical temporal-difference learning requires updates proportional to reward-prediction error (RPE), not reward magnitude.

1/4

rdcu.be/eRxNz
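
To make the RPE-versus-magnitude distinction concrete, here is a minimal sketch of a textbook TD(0) value update. It is not taken from the linked paper; the function name `td0_update` and the values of `alpha` and `gamma` are illustrative assumptions.

```python
def td0_update(value, reward, next_value, alpha=0.1, gamma=0.9):
    """One textbook TD(0) step (illustrative; names and defaults are assumptions).

    The value estimate moves in proportion to the reward-prediction error,
    not in proportion to the size of the reward itself.
    """
    rpe = reward + gamma * next_value - value  # reward-prediction error
    new_value = value + alpha * rpe            # update scales with the RPE
    return new_value, rpe


# A large reward that was fully predicted: RPE is zero, so nothing is learned.
predicted_value, predicted_rpe = td0_update(value=10.0, reward=10.0, next_value=0.0)

# A small reward that was not predicted: RPE is nonzero, so the estimate moves.
surprise_value, surprise_rpe = td0_update(value=0.0, reward=1.0, next_value=0.0)
```

In this sketch the fully predicted reward of 10 leaves the estimate unchanged, while the unpredicted reward of 1 shifts it, which is the sense in which the update follows RPE and not reward magnitude.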

🧪

#AcademicSky

www.nature.com/articles/d41...

(OP @drgbuckingham.bsky.social )

- People are happy they can ask questions quickly without judgment or looking for the Right Person to ask in the office.

- People are unhappy that nobody asks them questions, because that is how they get to know colleagues and win their trust.

- Developers like Claude Code + Claude Opus 4.5.

- People appreciate that AI does not judge. Unlike coworkers, who may silently label you as incompetent if you ask one too many “stupid” questions, AI will answer every question, including the ones you hesitate to ask.

google-deepmind.github.io/disco_rl/

www.sciencedirect.com/science/arti...

www.nature.com/articles/s41...

I am a comma stan.

#philsci #cogsky #CognitiveNeuroscience

@phaueis.bsky.social

aktuell.uni-bielefeld.de/2025/11/24/t...

That is, in Go, your reward is 0 for most time steps and only +1/-1 at the end. That sounds sparse, but not from an algorithmic perspective.

Congratulations to Matt Jones & Nathan Lepora for seeing this through to the end!

www.nature.com/articles/s41...

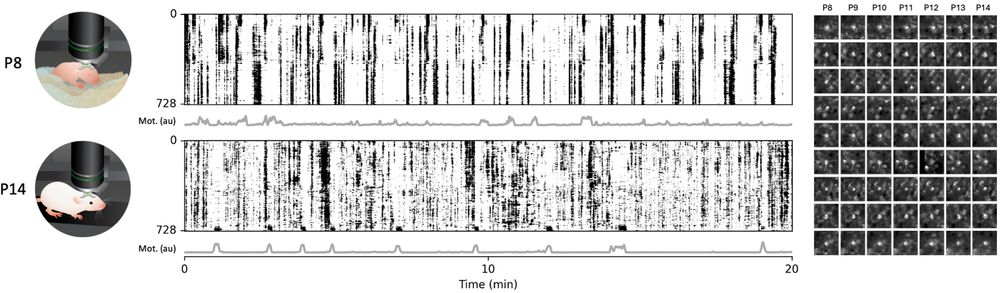

Check out our latest preprint, where we tracked the activity of the same neurons throughout early postnatal development: www.biorxiv.org/content/10.1...

see 🧵 (1/?)

www.sciencedirect.com/science/arti...
