Anne Scheel

@annemscheel.bsky.social

Assistant prof at Utrecht University, trying to make science as reproducible as non-scientists think it is. Blogs at @the100ci.

Reposted by Anne Scheel

My Shiny app containing 3530 Open Science blog posts discussing the replication crisis has been updated - you can now use the SEARCH box. I fixed it because my new PhD student Julia wanted to know who had called open scientists 'Methodological Terrorists' :) shiny.ieis.tue.nl/open_science...

Open Science Blog Browser

shiny.ieis.tue.nl

November 8, 2025 at 7:15 PM

Reposted by Anne Scheel

Oops. Ooooooooooooops.

I do hope that nobody has been given or denied a job/promotion based on their SpringerNature citation counts in the past 15 years.

arxiv.org/pdf/2511.01675

h/t @nathlarigaldie.bsky.social

November 7, 2025 at 2:02 PM

Reposted by Anne Scheel

The package formerly known as papercheck has changed its name to metacheck! We're checking more than just papers, with functions to assess OSF projects, GitHub repos, and AsPredicted pre-registrations, with more being developed all the time.

scienceverse.github.io/metacheck/

Check Research Outputs for Best Practices

A modular, extendable system for automatically checking research outputs for best practices using text search, R code, and/or (optional) LLM queries.

scienceverse.github.io

November 3, 2025 at 4:20 PM

Reposted by Anne Scheel

DEAR FOLLOWERS - LINKEDIN POSTING SURGE REQUESTED

November 4, 2025 at 10:57 AM

Reposted by Anne Scheel

I successfully defended my Habilitation today, and was honoured by the committee’s positive feedback. I am very grateful for my colleagues, collaborators and students at the University of Bern who made this a very rewarding journey.

November 3, 2025 at 9:54 PM

Reposted by Anne Scheel

"We included 608 articles, of which 243 articles were identified as problematic (40.0%)." (bit.ly/3X4PtGF) The finding of so many image-related problems by a team that set out to do a systematic review is deeply concerning.

October 31, 2025 at 9:41 AM

Reposted by Anne Scheel

#episky #statsky

“The student, now a junior researcher, has learned how to operate the hyp test machinery but not how to feed it with meaningful input”

I felt this way when I first learned about DAGs. I could recite the rules, but I was still really confused when I needed to make my own.

I completely agree! But if you realise that you can't specify a SESOI for the life of you, it usually means that you can't specify your research hypothesis precisely enough to make it statistically testable. In that case, the best decision may be to do something else: journals.sagepub.com/doi/10.1177/...

Why Hypothesis Testers Should Spend Less Time Testing Hypotheses - Anne M. Scheel, Leonid Tiokhin, Peder M. Isager, Daniël Lakens, 2021

For almost half a century, Paul Meehl educated psychologists about how the mindless use of null-hypothesis significance tests made research on theories in the s...

journals.sagepub.com

November 1, 2025 at 11:22 AM

Reposted by Anne Scheel

So (beyond this specific example of anchor-based approaches) I would be happy to see many diverse applied examples on how SESOIs have been reasonably specified in sport and exercise science (if they exist...).

October 31, 2025 at 5:19 AM

Reposted by Anne Scheel

New paper finds that selective reporting remains the most replicable finding in science: journals.sagepub.com/doi/full/10.... I especially like their new exploratory metric 'p-values per participant'. Some papers had 11 p-values per participant! 🤯

Sage Journals: Discover world-class research

Subscription and open access journals from Sage, the world's leading independent academic publisher.

journals.sagepub.com

October 31, 2025 at 7:39 AM

There still seems to be a lot of confusion about significance testing in psych. No, p-values *don’t* become useless at large N. This flawed point also used to be framed as "too much power". But power isn't the problem – it's 1) unbalanced error rates and 2) the (lack of a) SESOI. 1/ >

But here's the thing: p-values and significance become useless at such large sample sizes. When you're dividing the coefficient by the SE and the sample size is in the tens of thousands, EVERYTHING IS SIGNIFICANT. All you're testing is whether the coefficient is different from zero.

October 31, 2025 at 8:13 AM
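
To make the SESOI point concrete, here is a minimal Python sketch (not from the thread) using a normal approximation for a standardized mean difference d from two equal-sized groups. All numbers (d = 0.03, n = 20,000 per group, SESOI = 0.1) are hypothetical. Against a nil null the tiny effect comes out "significant", but an equivalence test (TOST) against the SESOI bounds is also significant, so the large-N data are informative: they indicate the effect is too small to matter. The sketch only illustrates point 2) of the thread, not the error-rate point 1).

```python
import math


def normal_sf(z: float) -> float:
    """Upper-tail probability P(Z > z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def se_d(d: float, n_per_group: int) -> float:
    """Large-sample standard error of Cohen's d for two groups of equal size."""
    return math.sqrt(2.0 / n_per_group + d ** 2 / (4.0 * n_per_group))


def p_nil(d: float, n_per_group: int) -> float:
    """Two-sided p-value for H0: delta = 0 (normal approximation)."""
    z = d / se_d(d, n_per_group)
    return 2.0 * normal_sf(abs(z))


def p_equivalence(d: float, n_per_group: int, sesoi: float) -> float:
    """TOST p-value for H0: |delta| >= sesoi.

    A small p means the effect lies inside the SESOI bounds (-sesoi, +sesoi).
    """
    se = se_d(d, n_per_group)
    p_lower = normal_sf((d + sesoi) / se)  # one-sided test of H0: delta <= -sesoi
    p_upper = normal_sf((sesoi - d) / se)  # one-sided test of H0: delta >= +sesoi
    return max(p_lower, p_upper)


if __name__ == "__main__":
    d, n, sesoi = 0.03, 20_000, 0.1  # hypothetical values
    print(f"nil test p = {p_nil(d, n):.4f}")
    print(f"equivalence p = {p_equivalence(d, n, sesoi):.2e}")
```

With these values the nil test gives roughly p = .003, while the equivalence test is far below .05. With only n = 100 per group, the same d is non-significant on both tests, i.e. inconclusive, which is the situation where more data actually help.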

Reposted by Anne Scheel

Are you interested in thinking about which studies are worth replicating? Then you have 10 articles to dig into in Meta-Psychology, representing a very wide range of viewpoints on this topic, out now: open.lnu.se/index.php/me...

LnuOpen | Meta-Psychology

Original articles

open.lnu.se

October 30, 2025 at 3:43 PM

Reposted by Anne Scheel

Which reminds me of this old classic on the Noise Miners.

“'Noise mining is a funny thing,' he said. 'When you first see a bit of noise, it doesn’t look so impressive. But as you work it out of the rock, it gets more and more refined.' This process, he explained, is called 'shucking.'"

October 30, 2025 at 9:24 AM

Reposted by Anne Scheel

My paper with @lakens.bsky.social and @annaveer.bsky.social - "Replication value as a function of citation impact and sample size" - has just been published in Meta-Psychology! open.lnu.se/index.php/me...

October 30, 2025 at 9:11 AM

Reposted by Anne Scheel

Looks like Christmas came early this year 🤩 @vincentab.bsky.social

October 29, 2025 at 5:22 PM

Reposted by Anne Scheel

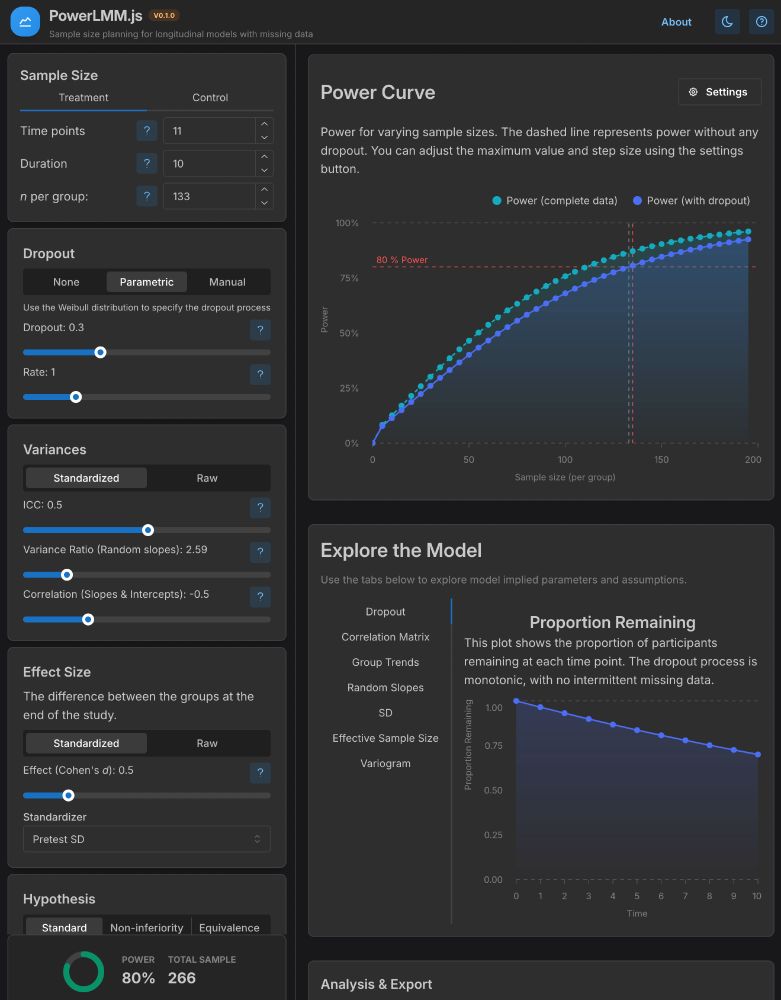

🎉 @rpsychologist.com's PowerLMM.js is the online statistics application of the year 2025 🎉

powerlmmjs.rpsychologist.com

- Calculate power (etc.) for multilevel models

- Examine effects of dropout and other important parameters

- Fast! (Instant results)

October 28, 2025 at 2:37 PM

Reposted by Anne Scheel

This is an excellent point that generalizes.

Researchers often defend suboptimal practices by referring to future studies with better designs.

But: Why would anybody run those studies when you can just throw a bunch of variables into a regression and make sweeping "preliminary" claims?

October 28, 2025 at 11:22 AM

Reposted by Anne Scheel

We're pleased to be funding early career researchers to explore how AI is transforming science.

The UK Metascience Unit has announced a cohort of 29 researchers who will receive funding through the AI Metascience Fellowship Programme.

Read more: www.ukri.org/news/interna...

International fellowships to explore AI’s impact on science

New £4 million programme funds early career researchers in the UK, US and Canada to investigate how artificial intelligence (AI) is transforming science.

www.ukri.org

October 9, 2025 at 10:36 AM

Reposted by Anne Scheel

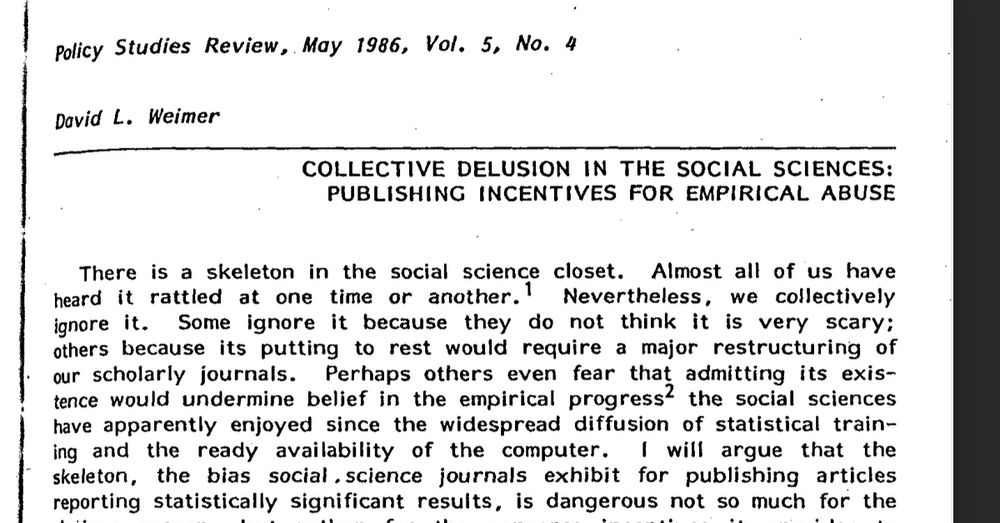

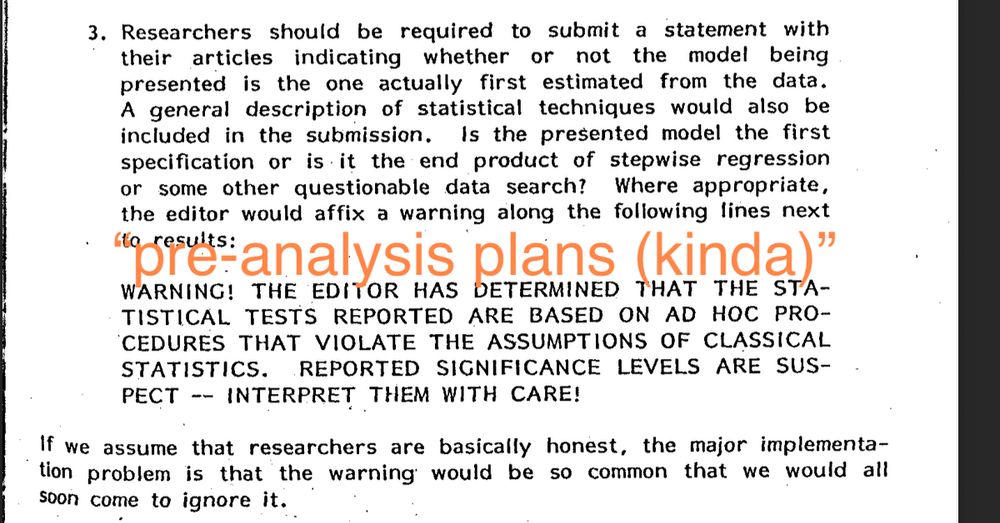

Encountered this article by David Weimer from 1986 (cited by 7) with three good ideas for improving science that, just a few short decades later, we've started to implement.

Collective Delusion In The Social Sciences: Publishing Incentives For Empirical Abuse

doi.org/10.1111/j.15...

October 27, 2025 at 12:28 PM

Reposted by Anne Scheel

New blogpost on @elife.bsky.social's new Replication study type deevybee.blogspot.com/2025/10/prob.... tldr = I think it's doomed.

#Replication #Publishing #Reproducibility

Problems with ELife's new article type: Replication studies

I was interested to receive an email from eLife last week, telling me that "As part of our commitment to open science, scientific rigour ...

deevybee.blogspot.com

October 27, 2025 at 9:33 AM

Reposted by Anne Scheel

I think this is kind of neat and I don't think anyone else has noticed it (I've looked and I can't find anyone who has) osf.io/preprints/so...

Maybe I should back off "justification" language, but it's at least a remarkable coincidence. I still think someone else *must* have noticed it...

October 24, 2025 at 12:23 PM

Reposted by Anne Scheel

After submitting a FOIA request to UKRI, I obtained the success rates for three grant call schemes, and I can only say that I am disheartened by the results:

- AHRC Responsive Mode 2025: 2%

- ESRC New Investigator Grant 2025: 1%

- ESRC Research Grant Round 2025: 1%

October 22, 2025 at 7:55 AM

This fantastic initiative is exactly the type of thing we need to move things forward. Related to @stianwestlake.bsky.social’s discussion of investing in data infrastructure and data merging at the end of this great @worksinprogress.bsky.social interview: podcast.worksinprogress.co/3 (from 39:32)

October 23, 2025 at 12:10 PM

Reposted by Anne Scheel

▶️Our example analysis shows how to use openESM: We estimated within-person correlations between positive and negative affect across 39 datasets (>500K observations)

▶️We find a robust negative correlation (−0.49 [-0.54, -0.42]) and outline ideas for future research building on this

October 22, 2025 at 7:34 PM
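
A within-person correlation can be sketched by person-mean centering both variables and correlating the deviations. This is a minimal Python illustration on made-up toy data; it is not necessarily how the openESM example analysis was computed (which covered 39 datasets), and it does not use the openESM R/Python download tools. The function names are hypothetical.

```python
import math
from collections import defaultdict


def pearson(xs, ys):
    """Plain Pearson correlation of two equal-length sequences."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def within_person_correlation(rows):
    """rows: list of (person_id, positive_affect, negative_affect) observations.

    Subtracts each person's own mean from their scores, so only
    fluctuations around that person's mean enter the correlation.
    """
    totals = defaultdict(lambda: [0.0, 0.0, 0])  # [sum PA, sum NA, count]
    for pid, pa, na in rows:
        totals[pid][0] += pa
        totals[pid][1] += na
        totals[pid][2] += 1
    means = {pid: (t[0] / t[2], t[1] / t[2]) for pid, t in totals.items()}
    pa_dev = [pa - means[pid][0] for pid, pa, _ in rows]
    na_dev = [na - means[pid][1] for pid, _, na in rows]
    return pearson(pa_dev, na_dev)


# Toy data (hypothetical): person "B" is higher on both variables overall,
# but within each person, higher positive affect goes with lower negative affect.
toy = [("A", 1, 5), ("A", 2, 4), ("A", 3, 3),
       ("B", 6, 10), ("B", 7, 9), ("B", 8, 8)]

if __name__ == "__main__":
    print(pearson([r[1] for r in toy], [r[2] for r in toy]))  # pooled, ignoring persons
    print(within_person_correlation(toy))
```

Pooled over persons, the toy data correlate positively (about 0.81), while the within-person correlation is -1, which is why the between/within distinction matters for this kind of question.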

Reposted by Anne Scheel

We built the openESM database:

▶️60 openly available experience sampling datasets (16K+ participants, 740K+ obs.) in one place

▶️Harmonized (meta-)data, fully open-source software

▶️Filter & search all data, simply download via R/Python

Find out more:

🌐 openesmdata.org

📝 doi.org/10.31234/osf...

October 22, 2025 at 7:34 PM
