Studying continual learning and adaptation in brains and ANNs.

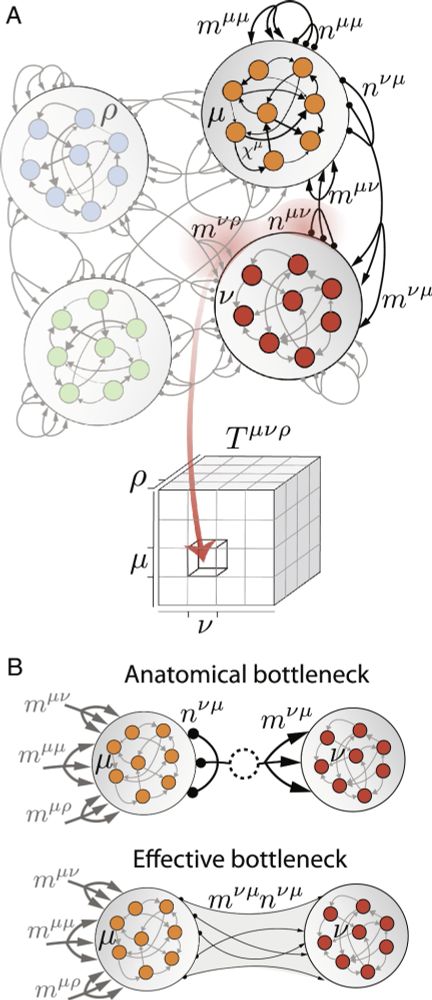

For neuro fans: conn. structure can be invisible in single neurons but shape pop. activity

For low-rank RNN fans: a theory of rank=O(N)

For physics fans: fluctuations around DMFT saddle⇒dimension of activity

#neuroskyence

www.thetransmitter.org/neural-dynam...

We show how to exactly map recurrent spiking networks into recurrent rate networks, with the same number of neurons. No temporal or spatial averaging needed!

Presented at Gatsby Neural Dynamics Workshop, London.

#neuroskyence

www.thetransmitter.org/systems-neur...

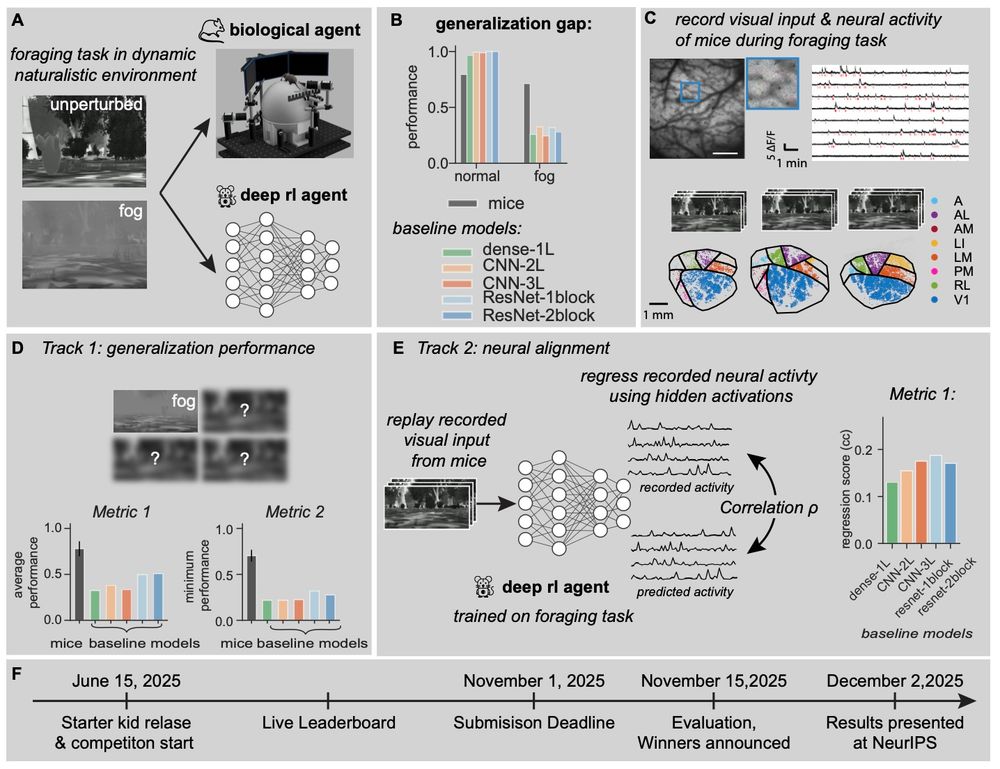

We present our #NeurIPS competition. You can learn about it here: robustforaging.github.io (7/n)

To quote someone from my lab (they can take credit if they want):

Def not news to those of us who use [ANN] models, but a good counter argument to the "but neurons are more complicated" crowd.

arxiv.org/abs/2504.08637

🧠📈 🧪

More on Spatial Computing:

doi.org/10.1038/s414...

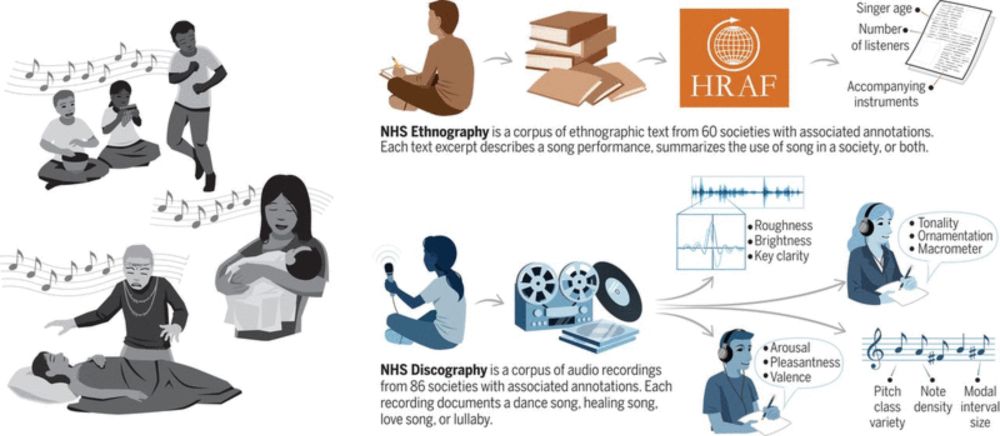

PNAS link: www.pnas.org/doi/10.1073/...

(see dclark.io for PDF)

An explainer thread...

www.science.org/doi/10.1126/...

#neuroscience

How do neural dynamics in motor cortex interact with those in subcortical networks to flexibly control movement? I’m beyond thrilled to share our work on this problem, led by Eric Kirk @eric-kirk.bsky.social with help from Kangjia Cai!

www.biorxiv.org/content/10.1...

My group will study offline learning in the sleeping brain: how neural activity self-organizes during sleep and the computations it performs. 🧵

Neuromorphic hierarchical modular reservoirs

www.biorxiv.org/content/10.1...

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

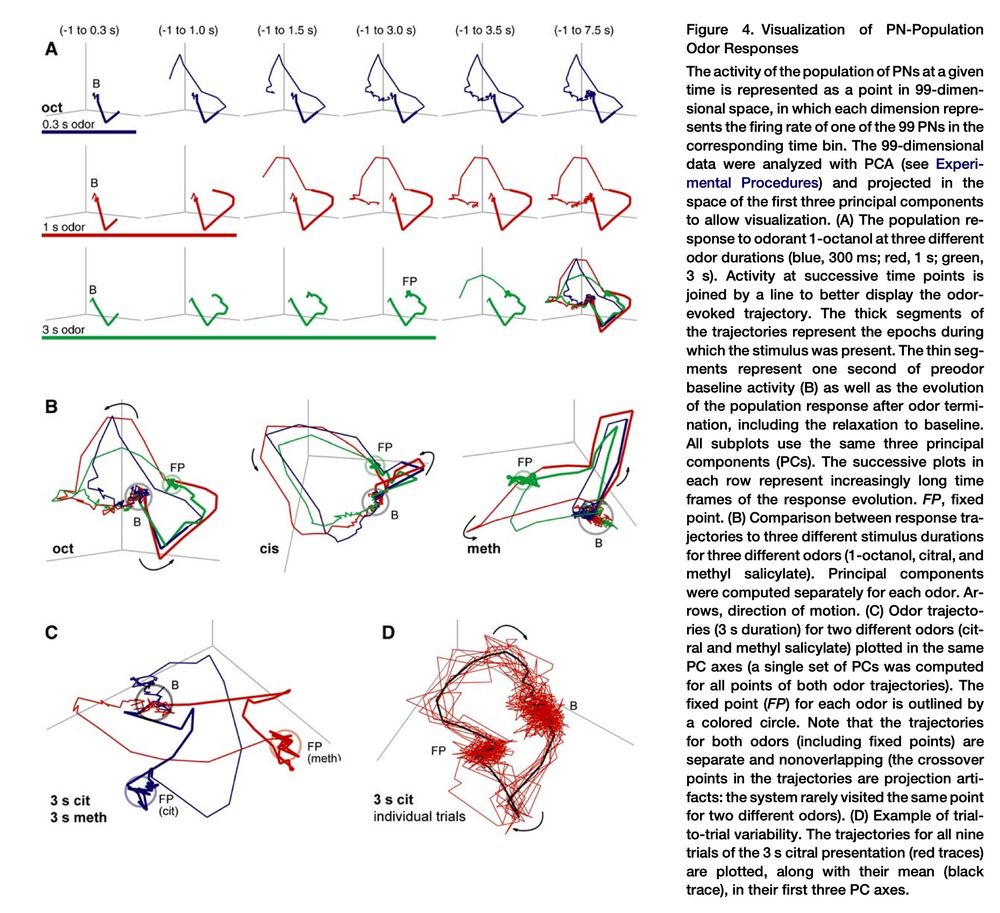

My own first exposure was Gilles Laurent's chapter in "21 Problems in Systems Neuroscience", where he cites odor trajectories in locust AL (2005). This was v inspiring as a biophysics student studying dynamical systems...

I think it cannot be assumed that we never wipe memories from our brains completely!

#LongCovid and led to a new scientific discovery. Inspiring story.

Thank you for sharing your journey @jeffmyau.bsky.social

www.youcanknowthings.com/how-one-neur...

www.pnas.org/doi/10.1073/...

www.nature.com/articles/s42...

#neuroscience

arxiv.org/abs/2412.11768

#MLSky #NeuroAI

@mattperich.bsky.social @oliviercodol.bsky.social @anirudhgj.bsky.social

sakana.ai/namm/

Introducing Neural Attention Memory Models (NAMM), a new kind of neural memory system for Transformers that not only boost their performance and efficiency but are also transferable to other foundation models without any additional training!