https://scholar.google.com/citations?user=1y0vv1wAAAAJ&hl=en

A Fourier Space Perspective on Diffusion Models

https://arxiv.org/abs/2505.11278

youtu.be/MeEcxh9St24

iclr-blogposts.github.io/2025/blog/sp...

martinfowler.com/articles/dee...

Blog: hkunlp.github.io/blog/2025/dr...

Pairs well with the PaperILiked last week -- another good bridge between RL and control theory.

PDF: arxiv.org/abs/1806.09460

I provide a grounding going from Classical Planning & Simulations -> RL Control -> LLMs and how to put it all together

pearls-lab.github.io/ai-agents-co...

If you know 1 of {RL, controls} and want to understand the other, this is a good starting point.

PDF: arxiv.org/abs/2406.00592

I show many (boomer) ML algorithms with working implementations to prevent the black box effect.

Everything is done in notebooks so that students can play with the algorithms.

Book-ish pdf export: davidpicard.github.io/pdf/poly.pdf

@bernhard-jaeger.bsky.social

www.nowpublishers.com/article/Deta...

arxiv.org/abs/2312.08365

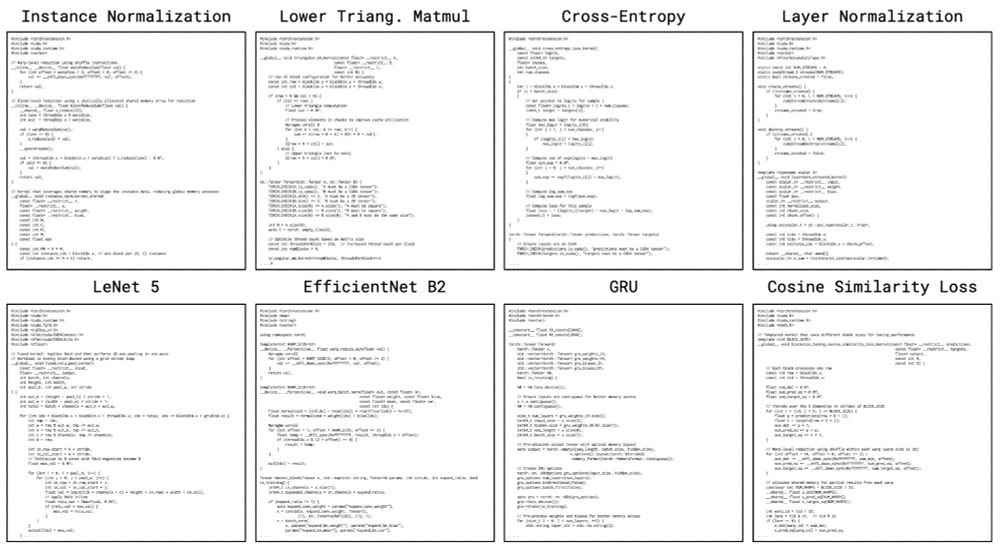

sakana.ai/ai-cuda-engi...

The AI CUDA Engineer can produce highly optimized CUDA kernels, reaching 10-100x speedup over common machine learning operations in PyTorch.

Examples:

Quantum Neural Networks for Cloud Cover Parameterizations in Climate Models

https://arxiv.org/abs/2502.10131

Implementation and Analysis of Regev's Quantum Factorization Algorithm

https://arxiv.org/abs/2502.09772

kempnerinstitute.harvard.edu/news/what-el...

We performed the largest-ever comparison of these algorithms.

We find that they do not outperform generic policy gradient methods, such as PPO.

arxiv.org/abs/2502.08938

1/N

Check out SCION: a new optimizer that adapts to the geometry of your problem using norm-constrained linear minimization oracles (LMOs): 🧵👇
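
For readers new to the term: a linear minimization oracle over a norm ball returns the point of the ball that minimizes the inner product with the gradient. Below is a minimal sketch of that idea for two common norms. It is not the SCION implementation; the function names `lmo_linf`, `lmo_l2`, and `inner` are made up for illustration, and only the standard closed-form solutions are shown.

```python
import math

# Hypothetical helper names; this illustrates what an LMO is,
# not how SCION itself is implemented.

def lmo_linf(grad, radius):
    """argmin of <grad, d> over ||d||_inf <= radius: -radius * sign(grad)."""
    def sign(x):
        return (x > 0) - (x < 0)
    return [-radius * sign(g) for g in grad]

def lmo_l2(grad, radius):
    """argmin of <grad, d> over ||d||_2 <= radius: -radius * grad / ||grad||_2."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return [0.0 for _ in grad]  # any feasible point is optimal here
    return [-radius * g / norm for g in grad]

def inner(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

grad = [3.0, -4.0]
d_inf = lmo_linf(grad, 1.0)  # sign-like direction
d_l2 = lmo_l2(grad, 1.0)     # normalized-gradient direction
```

The choice of norm changes the shape of the update: the max-norm ball gives a sign-style step, while the Euclidean ball gives a normalized gradient step.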

pretty inspiring to me -- network isn't converging? rigorously monitor every term in your loss to identify where in the architecture something is going wrong!
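
One way to act on this, sketched under assumptions: log each loss term separately and flag the ones that have stopped improving. The class `LossTermMonitor`, the term names `recon` and `kl`, and the window/tolerance heuristic are all hypothetical and not taken from the linked work.

```python
from collections import defaultdict

class LossTermMonitor:
    """Records each loss term per step and flags terms that stopped decreasing."""

    def __init__(self):
        self.history = defaultdict(list)

    def log(self, **terms):
        # One call per training step, e.g. monitor.log(recon=..., kl=...).
        for name, value in terms.items():
            self.history[name].append(float(value))

    def stalled(self, window=3, tol=1e-3):
        """Names of terms whose mean over the last `window` steps did not
        drop by more than `tol` compared with the preceding window."""
        flagged = []
        for name, values in self.history.items():
            if len(values) < 2 * window:
                continue  # not enough history to compare two windows
            prev = sum(values[-2 * window:-window]) / window
            recent = sum(values[-window:]) / window
            if prev - recent <= tol:
                flagged.append(name)
        return flagged

# Toy run: `recon` keeps falling, `kl` is flat (made-up values).
monitor = LossTermMonitor()
for step in range(6):
    monitor.log(recon=1.0 / (step + 1), kl=0.5)
print(monitor.stalled())  # prints ['kl']
```

A flagged term points at the part of the model that produces it, which narrows the search compared with watching only the summed loss.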

arxiv.org/abs/2406.08636

www.nature.com/articles/s41...

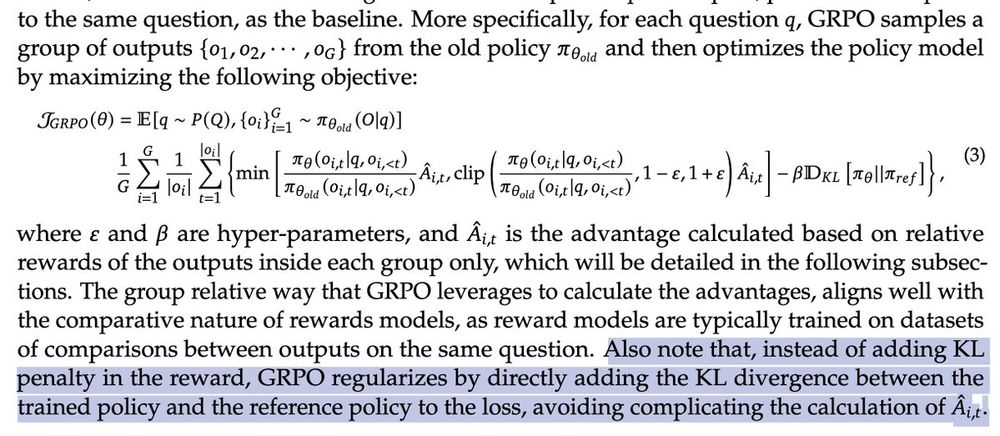

It's a little hard to reason about what this does to the objective. 1/

Sharing my notes and thoughts here 🧵

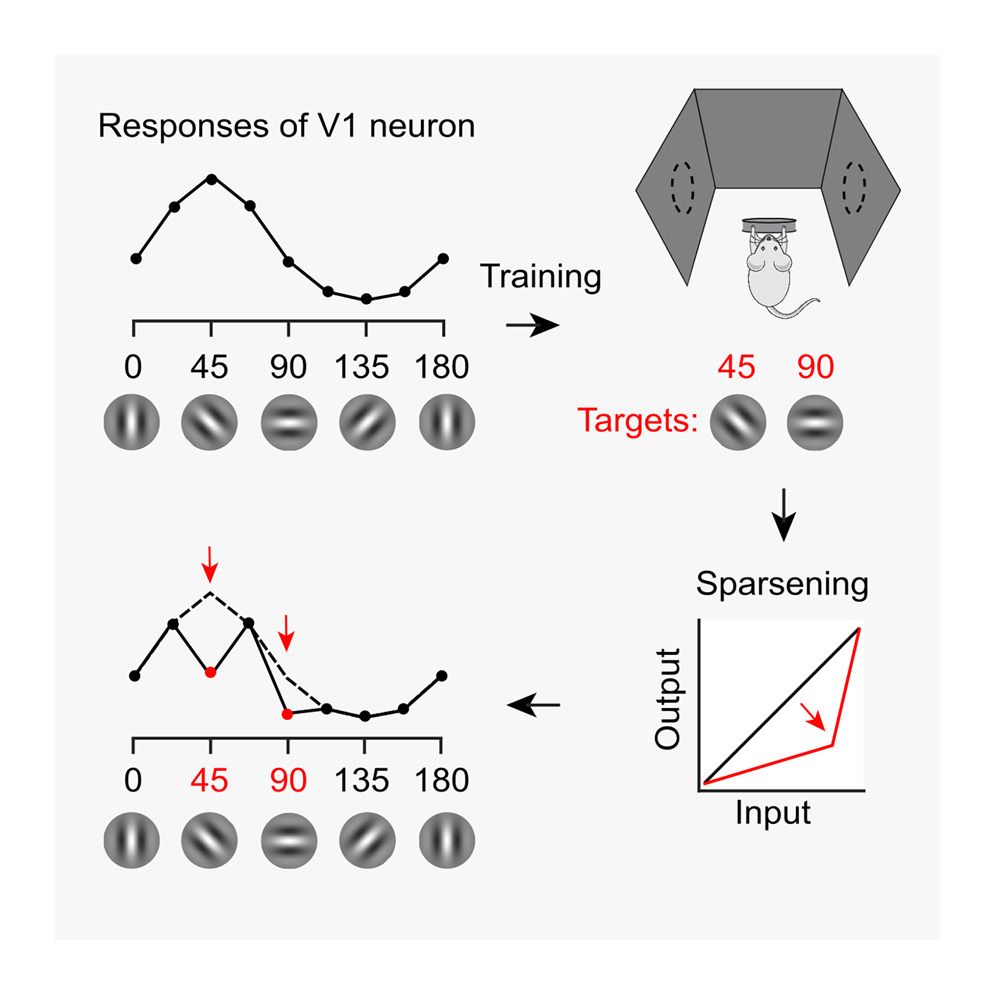

Visual experience orthogonalizes visual cortical responses

Training in a visual task changes V1 tuning curves in odd ways. This effect is explained by a simple convex transformation. It orthogonalizes the population, making it easier to decode.

10.1016/j.celrep.2025.115235
