Imagine if you were suddenly told 'we decided not to pay your salary'. That's kind of what the grant cuts felt like.

Now imagine if you were suddenly told 'we are going to set your dog on fire'. That's what this feels like:

Check out the GenLM control library: github.com/genlm/genlm-...

GenLM supports not only grammars, but arbitrary programmable constraints from type systems to simulators.

If you can write a Python function, you can control your language model!
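To make "a Python function as a constraint" concrete, here is a minimal toy sketch. It does not use the GenLM API: the fixed next-token table `VOCAB`, the predicate `is_valid_prefix`, and the sampler `constrained_sample` are all hypothetical names made up for illustration. It also uses plain token-by-token masking rather than the Sequential Monte Carlo approach from the paper, and masking of this kind generally changes the distribution being sampled from.

```python
import random

# Hypothetical toy "language model": a fixed next-token distribution
# that ignores context. A real LM would condition on the prefix.
VOCAB = {"a": 0.5, "b": 0.3, "<eos>": 0.2}


def is_valid_prefix(tokens):
    """Hypothetical constraint written as an ordinary Python function:
    the sequence must never contain two 'b' tokens in a row."""
    return all(not (x == "b" and y == "b") for x, y in zip(tokens, tokens[1:]))


def constrained_sample(constraint, max_len=10, seed=0):
    """Sample token by token, masking out any token whose extension
    would violate the constraint (local masking, not SMC)."""
    rng = random.Random(seed)
    tokens = []
    while len(tokens) < max_len:
        # Keep only tokens that leave the sequence valid.
        allowed = {t: p for t, p in VOCAB.items() if constraint(tokens + [t])}
        if not allowed:
            break  # dead end: no valid continuation
        choices, weights = zip(*allowed.items())
        tok = rng.choices(choices, weights=weights, k=1)[0]
        if tok == "<eos>":
            break
        tokens.append(tok)
    return tokens


sample = constrained_sample(is_valid_prefix)
```

Any predicate with the same shape (a list of tokens in, a boolean out) could be swapped in for `is_valid_prefix`, which is the sense in which the constraint is "just a Python function" here.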

Generate a…

- Python program that passes a test suite.

- PDDL plan that satisfies a goal.

- CoT trajectory that yields a positive reward.

The list goes on…

How can we efficiently satisfy these? 🧵👇

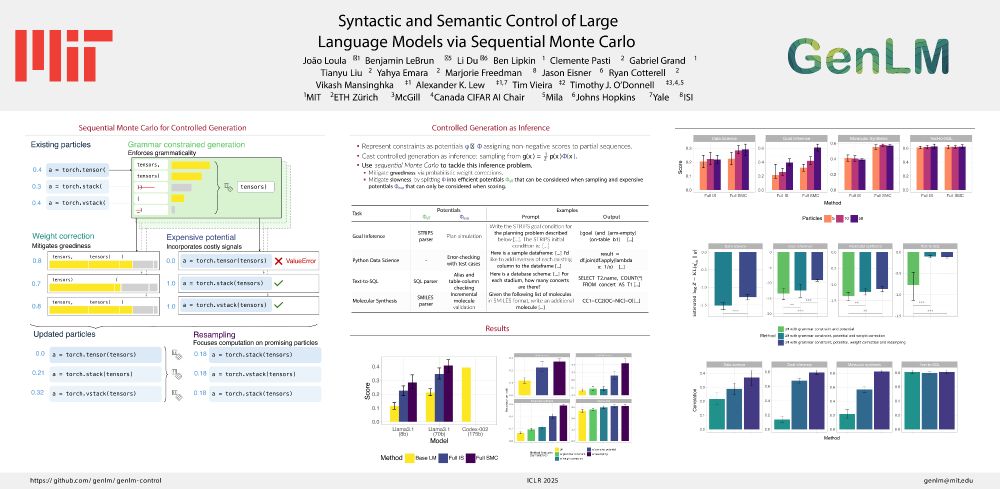

How can we control LMs using diverse signals such as static analyses, test cases, and simulations?

In our paper “Syntactic and Semantic Control of Large Language Models via Sequential Monte Carlo” (w/ @benlipkin.bsky.social, @alexlew.bsky.social, @xtimv.bsky.social) we:

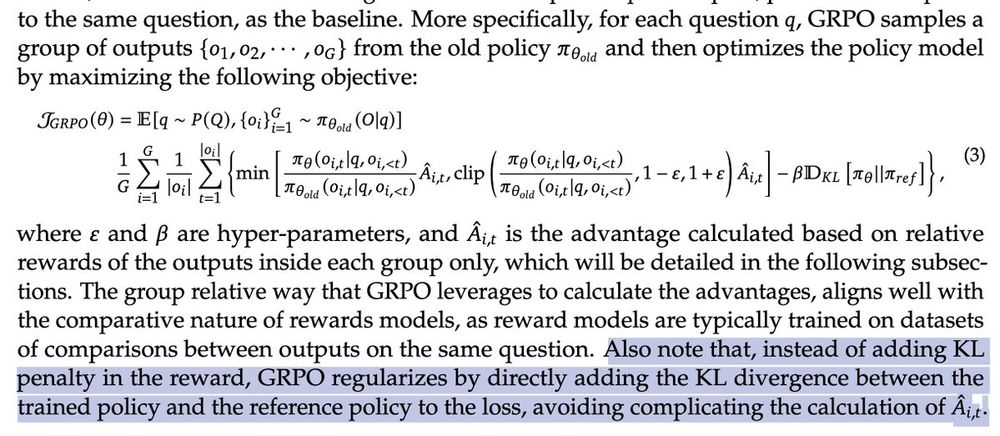

It's a little hard to reason about what this does to the objective. 1/

We're exploring new approaches to building software that draws inferences and makes predictions. See alexlew.net for details & apply at gsas.yale.edu/admissions/ by Dec. 15

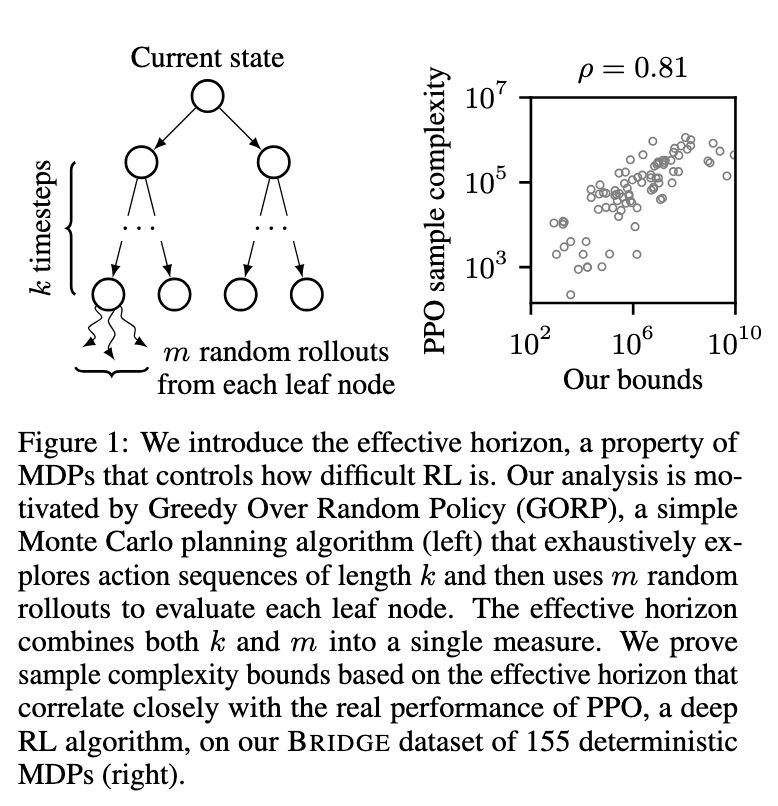

is totally fascinating in that it postulates two underlying, measurable structures that you can use to assess if RL will be easy or hard in an environment

Occasionally they'll still have a human reader, like Sedaris here, and the contrast is insane

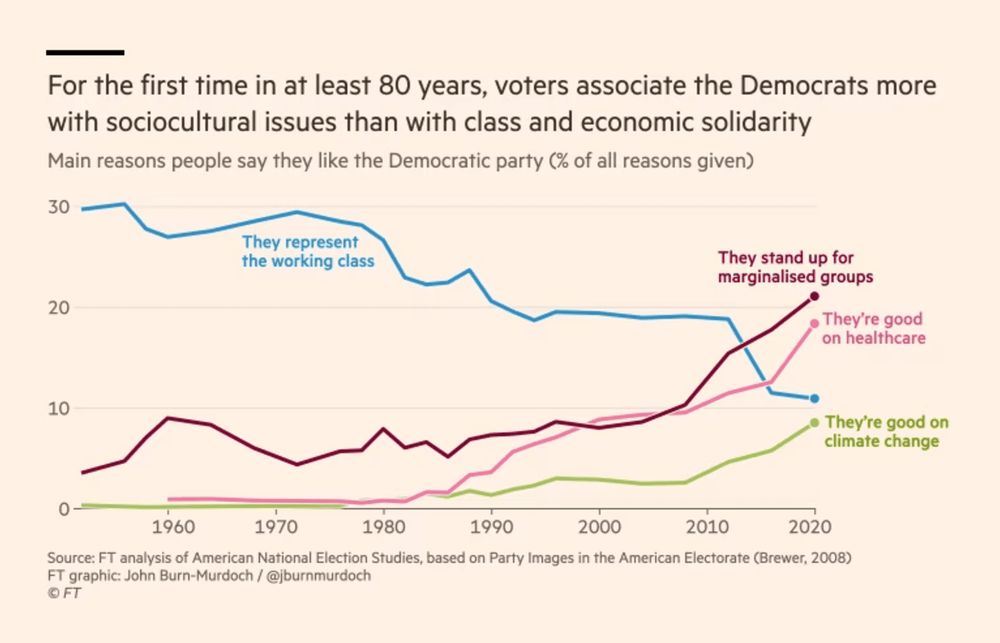

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

kernel methods in the space of (short, propositional) programs!!

why memorize and interpolate answers when you can memorize and interpolate answer-producing procedures??

The approach to reasoning LLMs use looks unlike retrieval, and more like a generalisable strategy synthesising procedural knowledge from many documents doing a similar form of reasoning.

Surprisal of 'o' following 'Treatment '? 0.11

Surprisal that title includes surprisal of each title character? Priceless [...I did not know titles could do this]

LLMs serve as *likelihoods*: how likely would the human be to have issued this (English) command, given a particular (symbolic) plan? No generation, just scoring :)

A Bayesian agent can then resolve ambiguity in really sensible ways
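As a rough sketch of what "LLM as likelihood" could look like, the snippet below applies Bayes' rule over a small set of candidate plans. Everything in it is an assumption for illustration: the plan names, the prior, and the log-likelihood values are made-up numbers standing in for scores an LLM would assign to the command given each plan. No model is called.

```python
import math


def posterior(log_prior, log_likelihood):
    """Bayes' rule in log space:
    p(plan | command) is proportional to p(plan) * p(command | plan)."""
    scores = {plan: log_prior[plan] + log_likelihood[plan] for plan in log_prior}
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores.values())
    z = sum(math.exp(s - m) for s in scores.values())
    return {plan: math.exp(s - m) / z for plan, s in scores.items()}


# Hypothetical plans with a uniform prior.
log_prior = {
    "fetch_red_mug": math.log(0.5),
    "fetch_blue_mug": math.log(0.5),
}

# Made-up stand-ins for LLM scores of the English command under each plan.
log_likelihood = {
    "fetch_red_mug": math.log(0.08),
    "fetch_blue_mug": math.log(0.02),
}

post = posterior(log_prior, log_likelihood)
```

With these illustrative numbers the agent puts most of its belief on the first plan but keeps some mass on the second, so it can still hedge or ask a clarifying question when the command is ambiguous.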

justindomke.wordpress.com/2009/02/17/a...

Younger researchers may not realize due to Moore's Law (Lin-Manuel Miranda becomes roughly half as cool every two years), but back when this was published in 2021, it was considered mildly topical
