Amazon Scholar (AGI Foundations). IEEE Fellow. ELLIS Fellow.

Check out SCION: a new optimizer that adapts to the geometry of your problem using norm-constrained linear minimization oracles (LMOs): 🧵👇

📍 Find us at Poster #5904 from 16:30 in the West Ballroom.

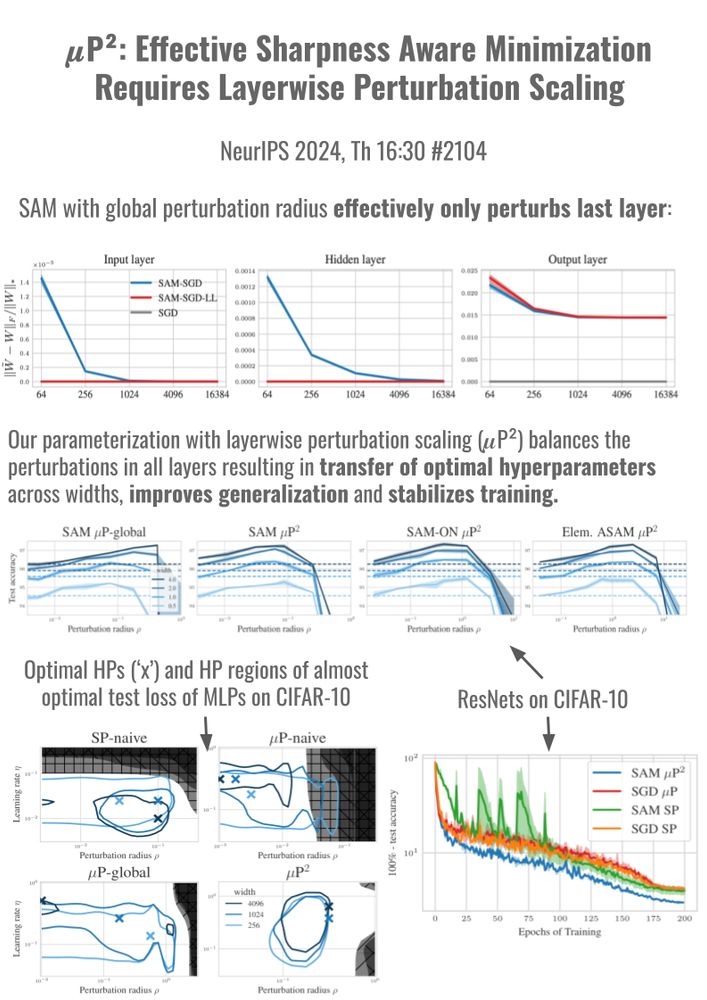

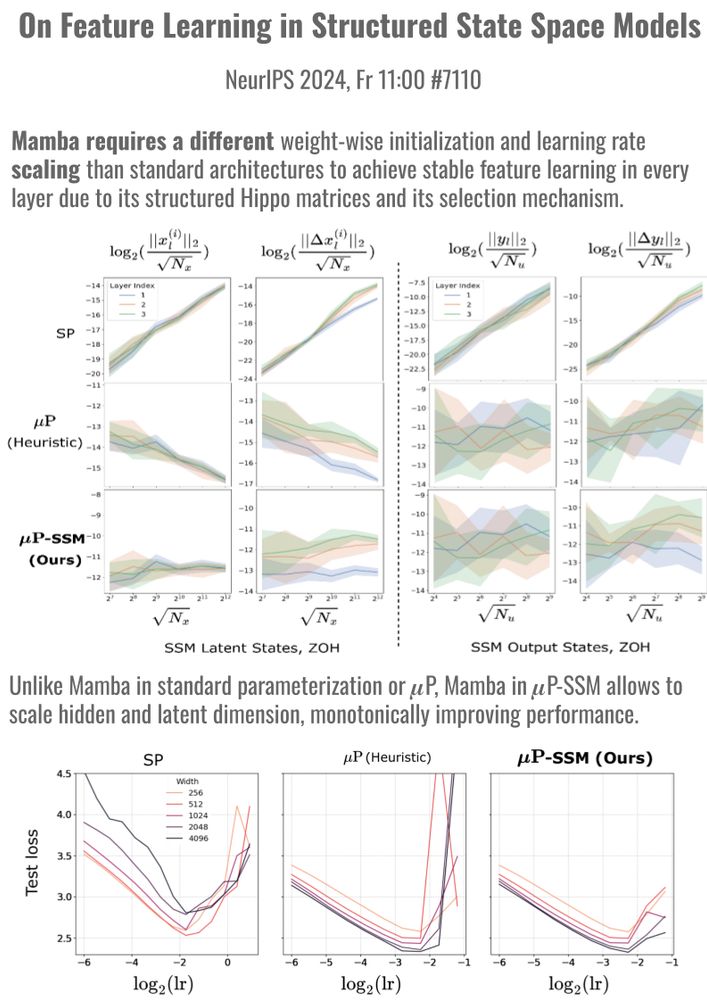

Thrilled to present 2 papers at #NeurIPS 🎉 that study width-scaling in Sharpness-Aware Minimization (SAM) (Th 16:30, #2104) and in Mamba (Fr 11, #7110). Our scaling rules stabilize training and transfer optimal hyperparams across scales.

🧵 1/10

🧵 10/10

aclrollingreview.org/authors#step...

57% of people rejected their own argument when they thought it was someone else's. So take it easy with the criticism.
