(1/n)

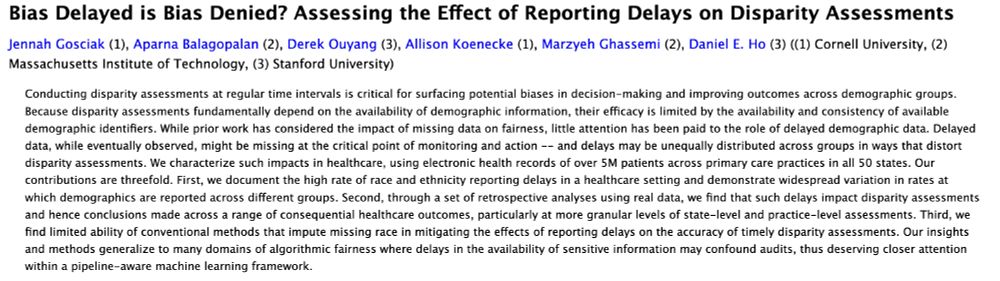

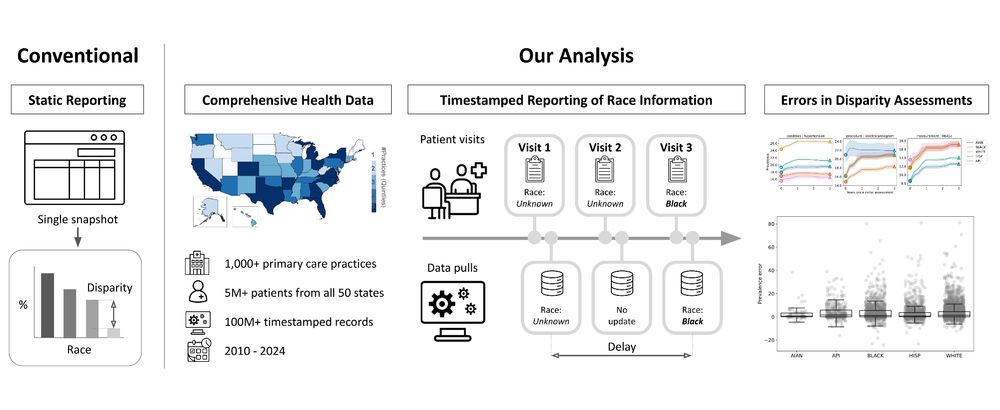

🔗: arxiv.org/pdf/2506.04419

aiforhumanists.com/tutorials/

Before then, I'll postdoc for a year in the NLP group at another UW 🏔️ in the Pacific Northwest

New paper by Jonathan Bourne. He's been working to help DLOC handle OCR for a whole bunch of Caribbean historical newspapers. "Scrambled text: fine-tuning language models for OCR error correction using synthetic data" link.springer.com/article/10.1...

"The single most important use case for LLMs in sociology is turning unstructured data into structured data."

Discussing his recent work on codebooks, prompts, and information extraction: osf.io/preprints/so...

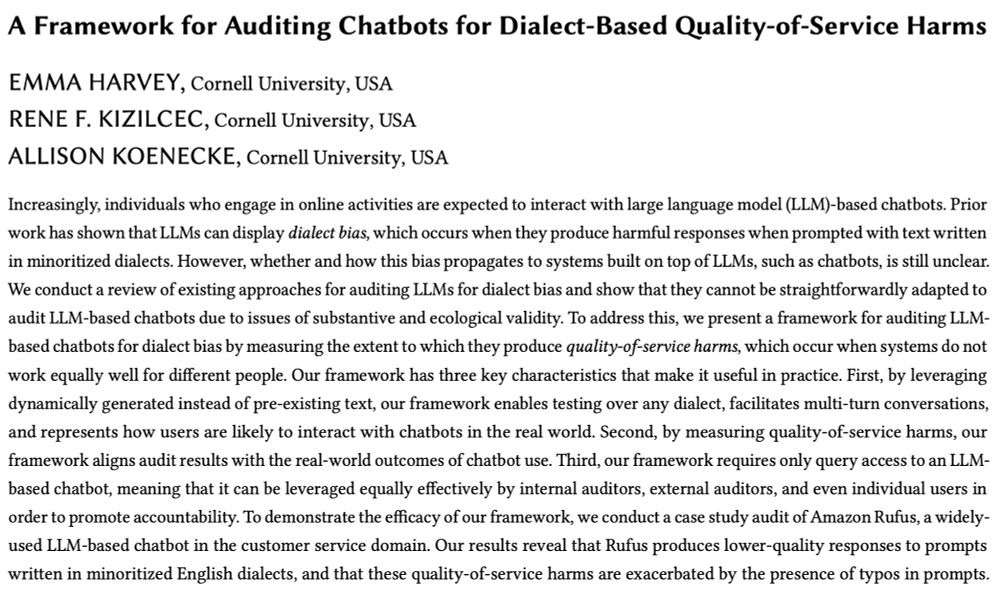

See results in comments!

🔗 Arxiv link: arxiv.org/abs/2411.05025

Unso Jo and @dmimno.bsky.social. Link to paper: arxiv.org/pdf/2504.00289

🧵

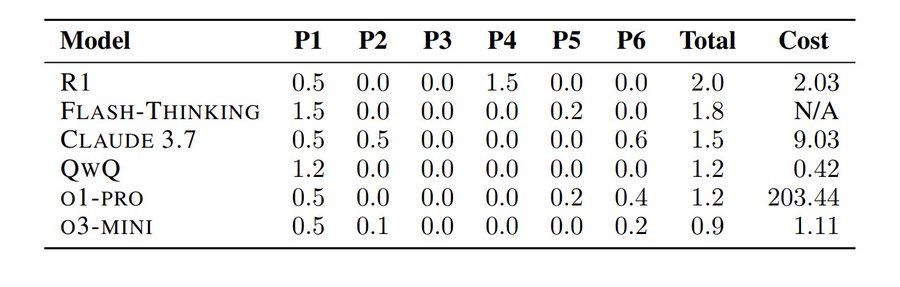

So naturally, some people put it to the test — hours after the 2025 US Math Olympiad problems were released.

The result: They all sucked!

(I used to ask about this every year on Twitter haha.)