Google Research | PhD, CMU

https://arxiv.org/abs/2504.15266 | https://arxiv.org/abs/2403.06963

vaishnavh.github.io

Paper: arxiv.org/abs/2504.15266

NeurIPS 2025 Official LLM Policy:

neurips.cc/Conferences/...

Probability

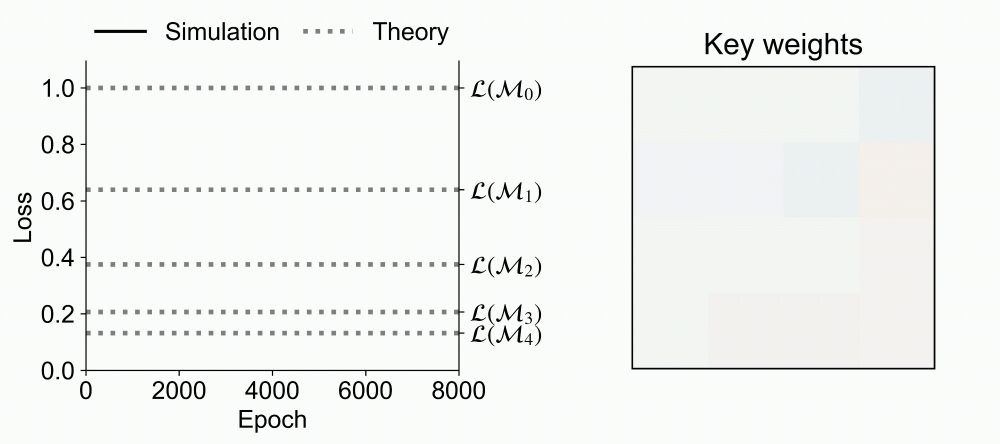

Excited to share our ICML Oral paper on learning dynamics in linear RNNs!

with @clementinedomine.bsky.social @mpshanahan.bsky.social and Pedro Mediano

openreview.net/forum?id=KGO...

If you have thoughts/recommendations, please share!

vaishnavh.github.io/2025/04/29/h...

Sharing our new Spotlight paper @icmlconf.bsky.social: Training Dynamics of In-Context Learning in Linear Attention

arxiv.org/abs/2501.16265

Led by Yedi Zhang with @aaditya6284.bsky.social and Peter Latham

→ LLMs are limited in creativity as they learn to predict the next token

→ creativity can be improved via multi-token learning & injecting noise ("seed-conditioning" 🌱) 1/ #MLSky #AI #arxiv 🧵👇🏽

two papers find entropy *minimization*/confidence maximization helps performance,

and the RL-on-one-sample paper finds entropy maximization/increasing exploration alone helps performance?!

A lot happens in the world every day—how can we update LLMs with belief-changing news?

We introduce a new dataset "New News" and systematically study knowledge integration via System-2 Fine-Tuning (Sys2-FT).

1/n

What if the news appears in the context upstream of the *same* FT data?

🚨 Contextual Shadowing happens!

Prefixing the news during FT *catastrophically* reduces learning!

10/n

We identified a common root cause to many safety vulnerabilities and pointed out some paths forward to address it!

- a benchmark for open-ended creativity

- a demonstration of challenges of next-token prediction

- a technique to improve transformer randomness through inputs, not sampling

arxiv.org/abs/2504.15266

blog.neurips.cc/2025/05/02/r...
