Google Research | PhD, CMU

https://arxiv.org/abs/2504.15266 | https://arxiv.org/abs/2403.06963

vaishnavh.github.io

but temp sampling has to articulate multiple latent thoughts in parallel to produce a marginal next-word distribution -- this is more burdensome! 8/👇🏽
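A rough way to write down the "marginal next-word distribution" in that post, assuming a discrete latent "thought" $z$ (this notation is ours, not taken from the papers). Temperature sampling has to draw every token from the mixture on the left. A fixed random seed $s$ can, loosely, stand in for committing to one $z$ up front, so greedy decoding only needs to follow a single thought:

```latex
p_\theta(x_t \mid x_{<t}) = \sum_{z} p_\theta(z \mid x_{<t})\, p_\theta(x_t \mid z, x_{<t})
\qquad \text{vs.} \qquad
\hat{x}_t = \arg\max_{x}\; p_\theta(x \mid s, x_{<t})
```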

Remarkably, seed-conditioning produces meaningful diversity even w *greedy* decoding 🤑; it is competitive with temp & in some conditions, superior. 7/👇🏽
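A toy sketch of the two decoding modes being compared, under loud assumptions: `toy_logits` is a made-up stand-in for a model that has *already* been trained with random seed prefixes (it fakes that by hashing the seed string), and the vocabulary, seed format, and function names are all hypothetical. It only shows the procedure: plain greedy decoding is deterministic, while prepending a fresh random seed and then decoding greedily still yields varied outputs.

```python
import math
import random

VOCAB = ["red", "blue", "green", "<eos>"]

def toy_logits(tokens):
    """Hypothetical stand-in for an LM trained with random seed prefixes.
    Returns one logit per VOCAB entry for the next token."""
    seeds = [t for t in tokens if t.startswith("seed")]
    body = [t for t in tokens if not t.startswith("seed")]
    if body:  # after one word, strongly prefer stopping
        return [0.0, 0.0, 0.0, 5.0]
    # Fake "learned" behavior: the seed deterministically picks a favored word.
    favored = sum(ord(c) for s in seeds for c in s) % 3
    logits = [1.0, 1.0, 1.0, -5.0]
    logits[favored] += 2.0
    return logits

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def decode(prefix, rng=None, temperature=1.0, max_len=5):
    """Greedy decoding if rng is None, otherwise temperature sampling.
    Seed tokens are stripped from the returned output."""
    tokens = list(prefix)
    for _ in range(max_len):
        logits = toy_logits(tokens)
        if rng is None:
            tok = VOCAB[max(range(len(VOCAB)), key=logits.__getitem__)]
        else:
            tok = rng.choices(VOCAB, weights=softmax(logits, temperature), k=1)[0]
        if tok == "<eos>":
            break
        tokens.append(tok)
    return [t for t in tokens if not t.startswith("seed")]

def seed_conditioned_greedy(rng, max_len=5):
    """Prepend a fresh random seed token, then decode greedily."""
    seed = f"seed{rng.randrange(10**6)}"
    return decode([seed], rng=None, max_len=max_len)

rng = random.Random(0)
print(decode([]))  # plain greedy: ['red'] every time
print({tuple(seed_conditioned_greedy(rng)) for _ in range(20)})  # varied
print(decode([], rng=rng, temperature=1.0))  # temperature sampling
```

The randomness lives in the input (the seed) rather than in the sampler, which is why greedy decoding alone can still produce diverse outputs in this sketch.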

First: Next-token-trained models are largely less creative & memorize much more than multi-token ones (we tried diffusion and teacherless training). 4/👇🏽
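A minimal sketch of how the training inputs differ between ordinary teacher-forced next-token training and teacherless training, assuming (as we read the linked 2403.06963) that teacherless training replaces the ground-truth answer tokens in the input with dummy tokens. `DUMMY` and `make_training_pair` are hypothetical names; this builds data only, with no model or loss.

```python
DUMMY = "<dummy>"  # hypothetical placeholder token

def make_training_pair(prefix, answer, teacherless=False):
    """Return (inputs, targets) for a decoder-only LM.

    The last len(answer) input positions are trained to predict `answer`.
    Teacher-forced: those positions see the ground-truth previous answer tokens.
    Teacherless: they see only dummy tokens, so every answer token must be
    predicted from the prefix alone.
    Assumes prefix and answer are both non-empty lists of tokens.
    """
    if teacherless:
        answer_inputs = [DUMMY] * (len(answer) - 1)
    else:
        answer_inputs = answer[:-1]
    inputs = prefix + answer_inputs
    # Positions before the last prefix token carry no loss (None).
    targets = [None] * (len(prefix) - 1) + answer
    return inputs, targets

print(make_training_pair(["a", "b"], ["x", "y", "z"]))
print(make_training_pair(["a", "b"], ["x", "y", "z"], teacherless=True))
```

The targets are identical in both modes; only what the model is allowed to look at while predicting them changes.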

1. Combinational: making surprising connections from memory, like in wordplay.🧠

2. Exploratory: devising fresh patterns obeying some rules, like in problem-design🧩 3/👇🏽

But how do we ✨cleanly✨ understand/improve LLMs on such subjective, unscalable, noisy metrics? 2/👇🏽

→ LLMs are limited in creativity as they learn to predict the next token

→ creativity can be improved via multi-token learning & injecting noise ("seed-conditioning" 🌱) 1/ #MLSky #AI #arxiv 🧵👇🏽

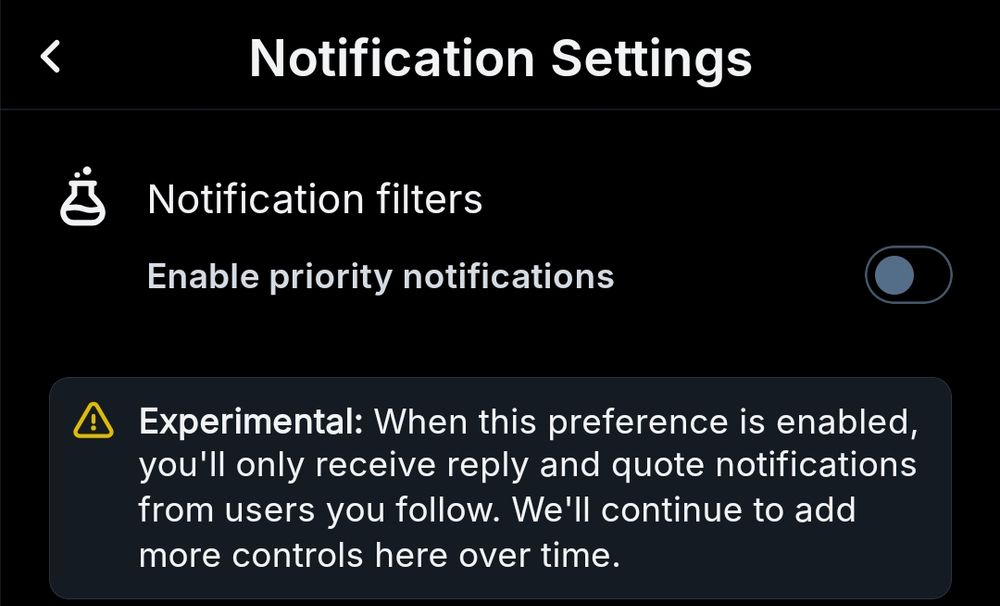

two papers find entropy *minimization*/confidence maximization helps performance,

and the RL-on-one-sample finds entropy maximization/increasing exploration alone helps performance?!
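For reference, a sketch of the quantity these objectives push in opposite directions, assuming it is the Shannon entropy of a next-token distribution (the papers' exact objectives, e.g. token-level vs. sequence-level or with extra regularizers, may differ). The logits are made up. Lowering the temperature sharpens the distribution and lowers its entropy, which is roughly where "confidence maximization" pushes; raising it flattens the distribution, roughly where "exploration" pushes.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; terms with p == 0 contribute 0."""
    return -sum(p * math.log(p) for p in probs if p > 0)

logits = [2.0, 1.0, 0.5, -1.0]  # made-up next-token logits
for t in (0.5, 1.0, 2.0):
    print(t, round(entropy(softmax(logits, t)), 3))
```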

arxiv.org/abs/2405.08448

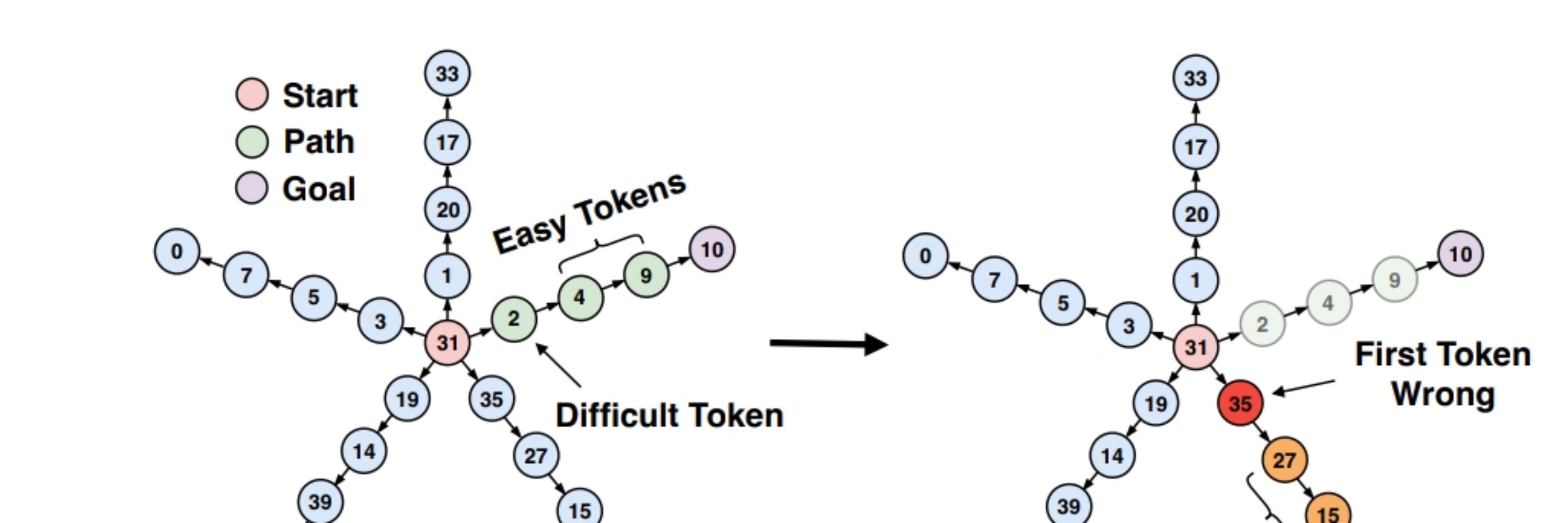

like in this path-star task or when you write a story, shortcuts arise simply from the way you order the tokens. arxiv.org/pdf/2403.06963
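A simplified reconstruction of a path-star-style graph to make the "token order" point concrete. This is our own sketch, not the paper's code: node labels, sizes, and function names are made up. Written center-to-goal, the very first step of the path requires choosing among `degree` arms; written goal-to-center, every step is an unambiguous parent lookup. Same answer, very different difficulty depending on the order of the tokens.

```python
import random

def make_path_star(degree, arm_len, rng):
    """Hypothetical path-star instance: a center node with `degree` arms,
    each a chain of `arm_len` nodes. Returns (shuffled_edges, center, goal, path)."""
    n_nodes = 1 + degree * arm_len
    names = rng.sample(range(100, 100 + 10 * n_nodes), n_nodes)  # distinct labels
    center, rest = names[0], iter(names[1:])
    edges, arms = [], []
    for _ in range(degree):
        arm = [center] + [next(rest) for _ in range(arm_len)]
        edges.extend(zip(arm, arm[1:]))
        arms.append(arm)
    rng.shuffle(edges)  # edges are presented in random order
    path = rng.choice(arms)
    return edges, center, path[-1], path

def choices_at(node, edges):
    """Forward view: number of edges leaving `node`."""
    return sum(1 for src, _ in edges if src == node)

def backtrack(edges, center, goal):
    """Reverse view: every non-center node has exactly one parent,
    so goal-to-center is a chain of unambiguous lookups."""
    parent = {dst: src for src, dst in edges}
    path = [goal]
    while path[-1] != center:
        path.append(parent[path[-1]])
    return path[::-1]

edges, center, goal, path = make_path_star(degree=3, arm_len=4, rng=random.Random(0))
print(choices_at(center, edges))              # options at the first forward step
print(backtrack(edges, center, goal) == path)  # reverse order recovers the path
```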

vaishnavh.github.io/home/talks/n...

(Artist: annalaura_art@ on Instagram)
