computer graphics, programming systems, machine learning, differentiable graphics

Probably not much is new but I find I still need to repeat the same things to my students regularly. Will update this document over time hopefully.

Sorry, have to rant, back to writing proposals. :(

I gave an internal talk at UCSD last year regarding "novelty" in computer science research. In it I "debunked" some of the myths people seem to have about what good research in computer science is these days. People seemed to like it, so I thought I should share.

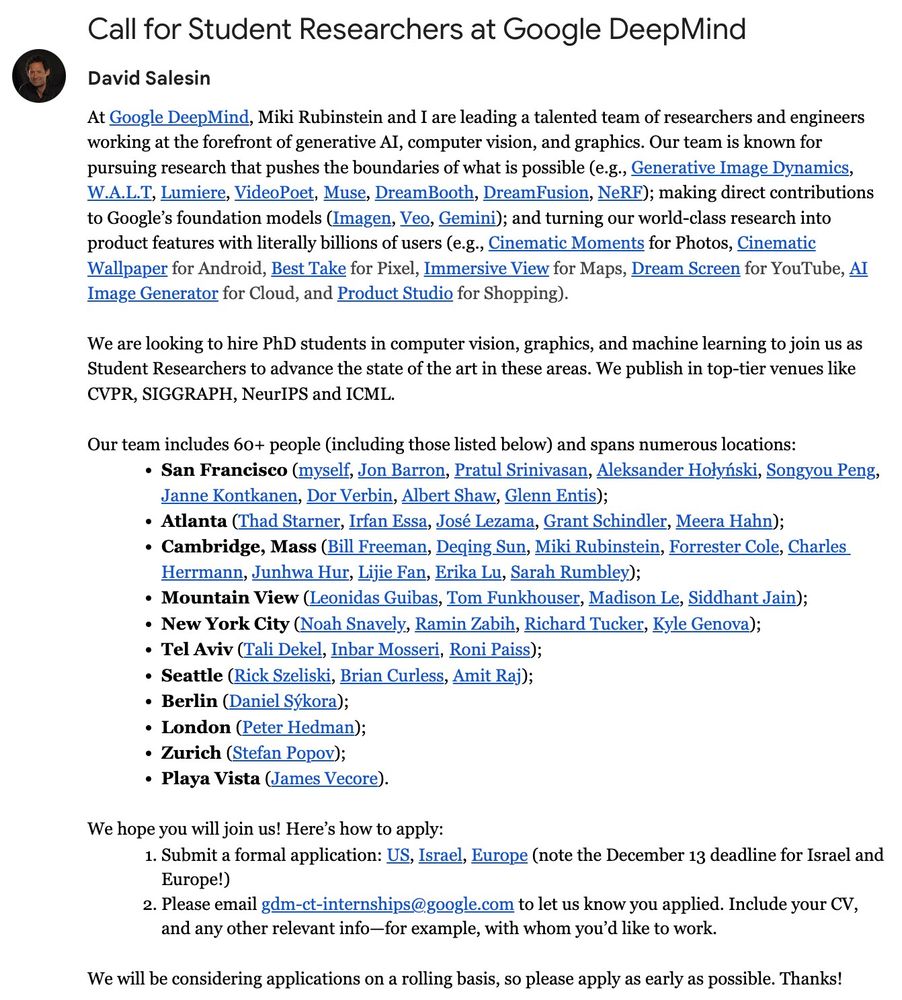

The first author, Yash, is looking for a job (yashbelhe.github.io), so talk to us if you're interested.

www.youtube.com/watch?v=Vyci...

🙈 Just as Transformers learn long-range relationships between words or pixels, our new paper shows they can also learn how light interacts and bounces around a 3D scene.

www.aswf.io/blog/openqmc...

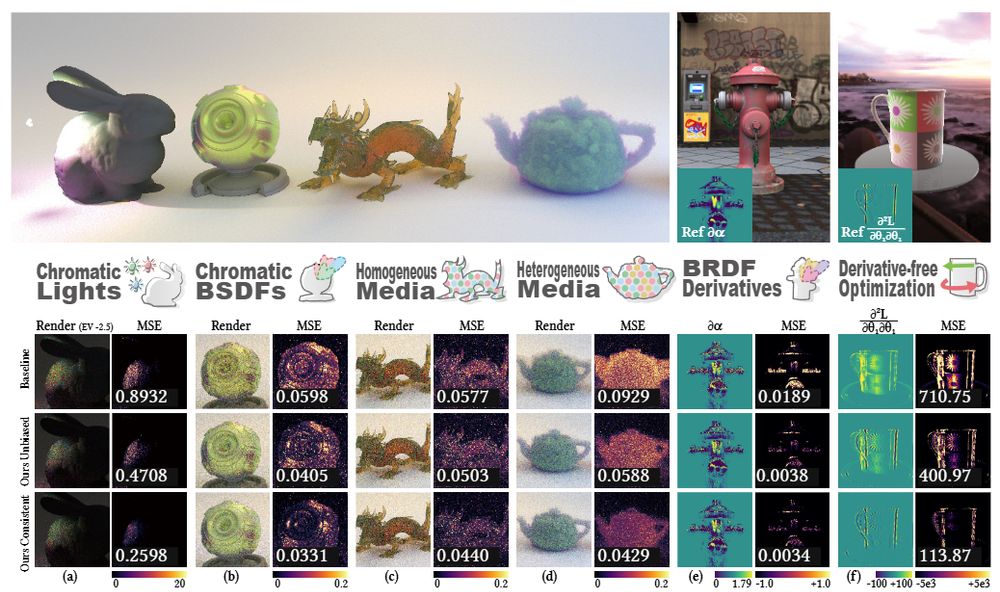

My student Zimo/Cheng's recent work tackles this problem! A lot of recent neural "SDF" optimizers use losses that, even when perfectly minimized, still don't result in actual signed distance fields. Our loss guarantees convergence to distance when minimized.

Generative AI is a Parasitic Cancer

www.youtube.com/watch?v=-opB...

drive.google.com/drive/folder...

We're exploring new approaches to building software that draws inferences and makes predictions. See alexlew.net for details & apply at gsas.yale.edu/admissions/ by Dec. 15

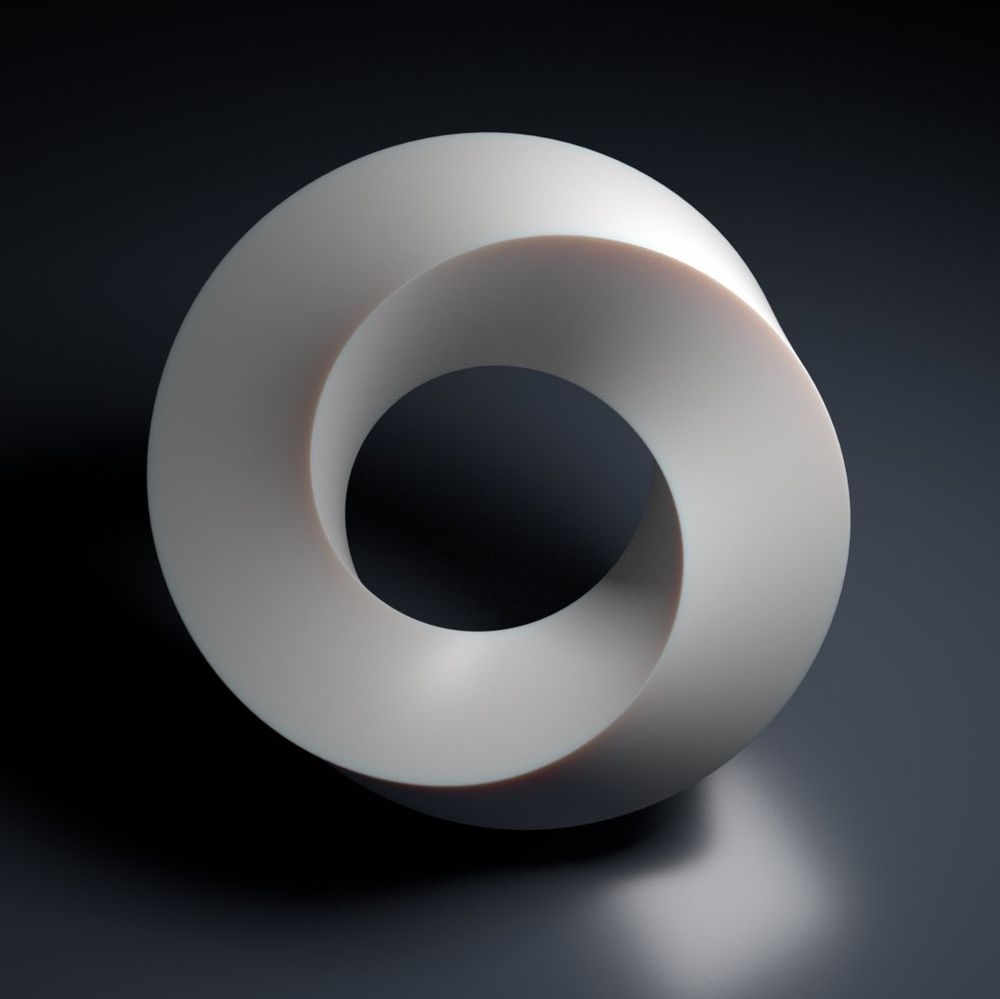

I wrote down some random notes about the connection between score matching (aka diffusion models) and Stein's unbiased risk estimate (SURE). It shows why optimal denoising leads to the optimal score. Not a new observation, but it doesn't seem to be discussed enough in the literature.
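
For readers who want the gist, here is a rough sketch of the standard argument. The notation is an assumption made for illustration and is not taken from the notes themselves: $y = x + \sigma\varepsilon$ with $\varepsilon \sim \mathcal{N}(0, I_d)$, $p_\sigma$ is the density of the noisy $y$, $D$ is a denoiser, and $s(y) = (D(y) - y)/\sigma^2$ is the score estimate it induces.

$$
\begin{aligned}
\mathbb{E}\,\lVert D(y) - x \rVert^2
  &= \mathbb{E}\big[\lVert D(y) - y \rVert^2 + 2\sigma^2\, \nabla\!\cdot D(y)\big] - d\sigma^2
  && \text{(SURE)} \\
  &= \sigma^4\, \mathbb{E}\big[\lVert s(y) \rVert^2 + 2\, \nabla\!\cdot s(y)\big] + d\sigma^2
  && \text{(substituting } D(y) = y + \sigma^2 s(y)\text{)}
\end{aligned}
$$

Up to the factor $\sigma^4$ and the constant $d\sigma^2$, the right-hand side is the implicit score matching objective evaluated on $p_\sigma$, so minimizing denoising error over $D$ is the same as minimizing the score matching loss over $s$. The minimizer is the posterior mean $D^*(y) = \mathbb{E}[x \mid y]$, and the induced score is $s^*(y) = \nabla_y \log p_\sigma(y)$, which is Tweedie's formula $\mathbb{E}[x \mid y] = y + \sigma^2 \nabla_y \log p_\sigma(y)$.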

Learn more: www.khronos.org/events/siggr...

#slang #shading #language #compiler

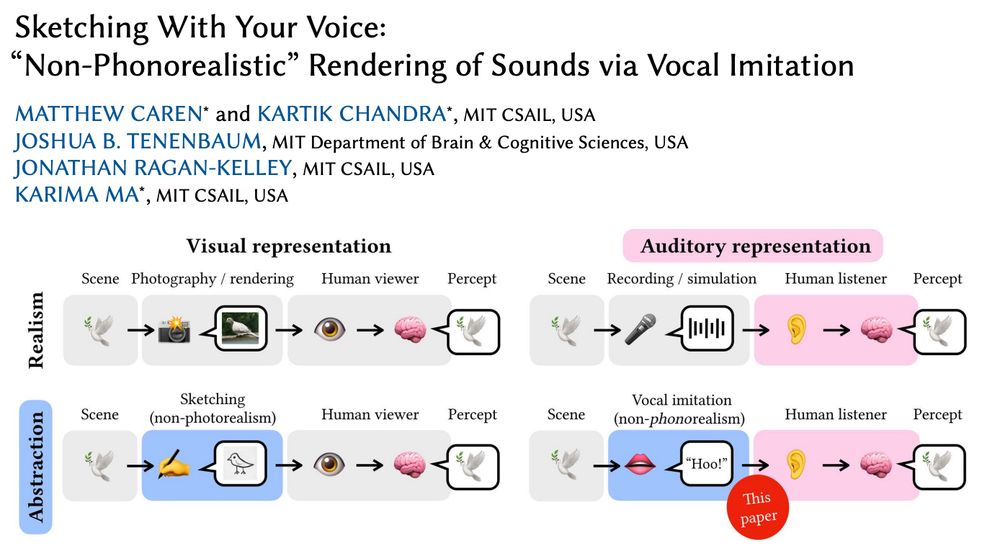

(1) realistic images 📷

(2) non-photorealistic images ✍️

(3) realistic sounds 🎤

What about (4) "non-phono-realistic" sounds?

What could that even mean?

Next week at SIGGRAPH Asia, MIT undergrad Matt Caren will present our proposal… 🧵

arxiv.org/abs/2409.13507
