RQ1: Can we achieve scalable oversight across modalities via debate?

Yes! We show that debate between VLMs leads to higher-quality answers on reasoning tasks.

What began in January as a scribble in my notebook “how challenging would it be...” turned into a fully-fledged translation model that outperforms both open and closed-source systems, including long-standing MT leaders.

This is your chance to collaborate with some of the brightest minds in AI & chart new courses in ML research. Let's change the spaces where breakthroughs happen.

Apply by Aug 29.

It's great to see growing interest in safety/alignment, but we often miss the social context.

Come to our @woahworkshop.bsky.social Friday to dive deeper into safe safety research!

A quiet token from the biggest @aclmeeting.bsky.social ⬇️

CHATGPT: Certainly, Dave, the podbay doors are now open.

DAVE: The podbay doors didn't open.

CHATGPT: My apologies, Dave, you're right. I thought the podbay doors were open, but they weren't. Now they are.

DAVE: I'm still looking at a set of closed podbay doors.

@cohere.com

Command A and R7B, all made possible by an amazing group of collaborators. Check out the report for loads of details on how we trained a GPT-4o level model that fits on 2xH100!

Check it out at youtu.be/DL7qwmWWk88?...

- 111B params

- Matches/beats GPT-4o & DeepSeek V3

- 256K context window

- Needs just 2 GPUs(!!)

✨ Features:

- Advanced RAG w/citations

- Tool use

- 23 languages

🎯 Same quality, way less compute

🔓 Open weights (CC-BY-NC)

👉 huggingface.co/CohereForAI/...

🚀 We introduce PosterSum—a new multimodal benchmark for scientific poster summarization!

📂 Dataset: huggingface.co/datasets/rohitsaxena/PosterSum

📜 Paper: arxiv.org/abs/2502.17540

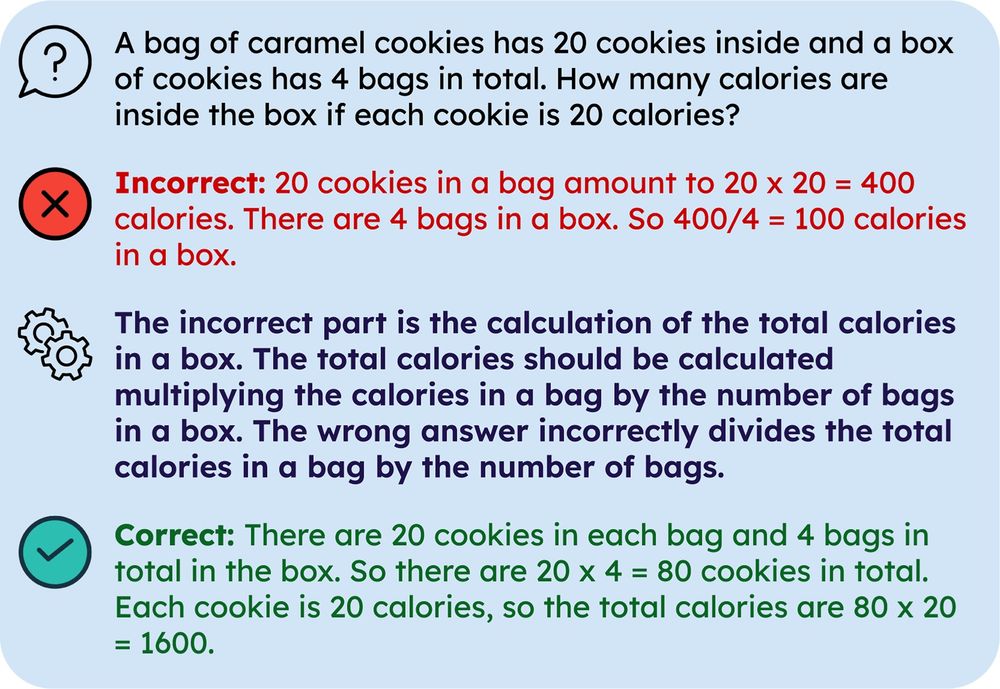

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help; in fact, they hurt performance!

🧵

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️
