PhDing on Interpretable NLP + CSS @gesis.org Prev: Masters Student + Researcher at @ubuffalo.bsky.social and Sr. Data Scientist at Coursera

Sharing our new paper @iclr_conf led by Yedi Zhang with Peter Latham

arxiv.org/abs/2512.20607

In our new paper "Revisiting Multilingual Data Mixtures in Language Model Pretraining" we revisit core assumptions about multilinguality using 1.1B-3B models trained on up to 400 languages.

🧵👇

🐟Olmo 3 32B Base, the best fully-open base model to date, near Qwen 2.5 & Gemma 3 on diverse evals

🐠Olmo 3 32B Think, first fully-open reasoning model approaching Qwen 3 levels

🐡12 training datasets corresponding to the different training stages

Language models, unlike humans, require large amounts of data, which suggests the need for an inductive bias.

But what kind of inductive biases do we need?

www.linkedin.com/posts/subbar...

Five sections:

- Foundational Components

- Foundational Problems

- Training Resources

- Applications

- Future Directions

Now on arXiv: arxiv.org/abs/2508.16599

Eric's: docs.google.com/document/d/1...

Mor's: s.tech.cornell.edu/phd-syllabus/

bsky.app/profile/pape...

context: (some? all?) panelists and he agree the field needs more deep, careful research on smaller models to do better science. everyone is frustrated with the impossibility of large-scale pretraining experiments

Looking for practical methods for settings where human annotations are costly.

A few examples in thread ↴

More info: www.ubjobs.buffalo.edu/postings/57734

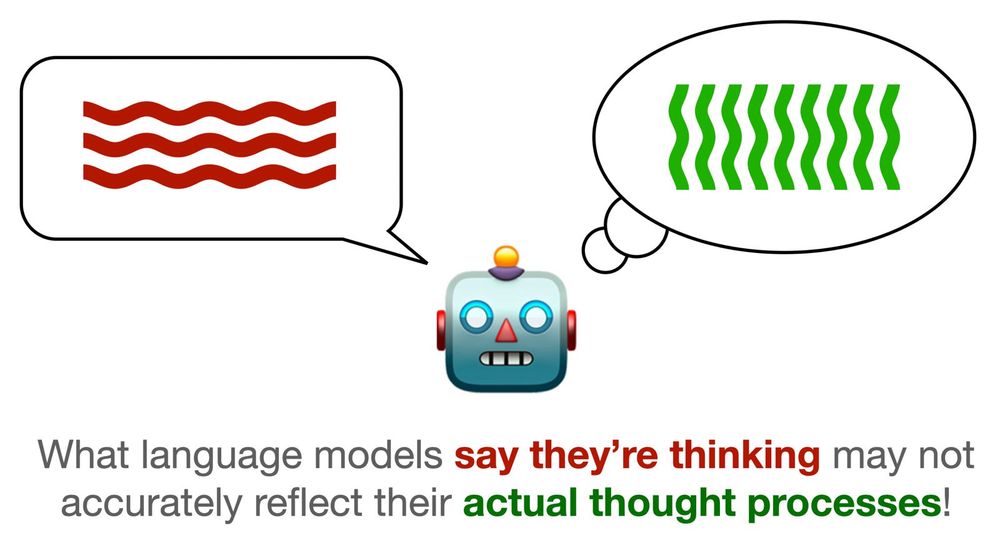

I really like this paper as a survey on the current literature on what CoT is, but more importantly on what it's not.

It also serves as a cautionary tale about the (apparently quite common) misuse of CoT as an interpretability method.

We will explore the future of computing for health, sustainability, human-centered AI, and policy.

Please consider submitting a 1-page abstract

ojs.aaai.org/index.php/IC...

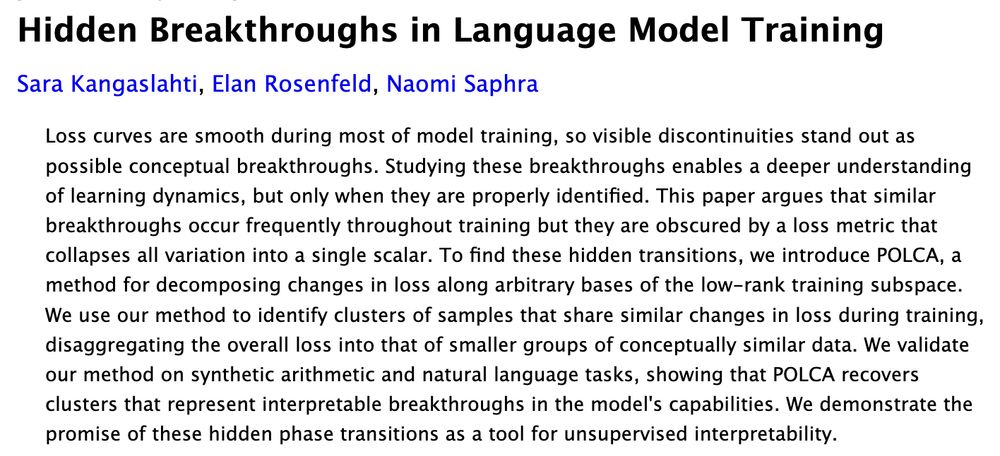

Phase transitions! We love to see them during LM training. Syntactic attention structure, induction heads, grokking; they seem to suggest the model has learned a discrete, interpretable concept. Unfortunately, they’re pretty rare—or are they?

(1/6)

⚖️ without discriminating,

🚫 without reinforcing stereotypes,

🔁 and without learning to hate?

That is the big question of my thesis.

👇 I explain it in this #HiloTesis thread @crueuniversidades.bsky.social @filarramendi.bsky.social
