On the job market this cycle!

alexanderhoyle.com

LLMs are often used for text annotation in social science. In some cases, this involves placing text items on a scale: e.g., 1 for liberal and 9 for conservative.

There are a few ways to handle this task. Which work best? Our new EMNLP paper has some answers🧵

arxiv.org/abs/2509.03116

There are a few ways to accomplish this task. Which work best? Our new EMNLP paper has some answers🧵

arxiv.org/pdf/2507.00828

In my latest blog post, I argue it’s time we had our own "Econometrics," a discipline devoted to empirical rigor.

doomscrollingbabel.manoel.xyz/p/the-missin...

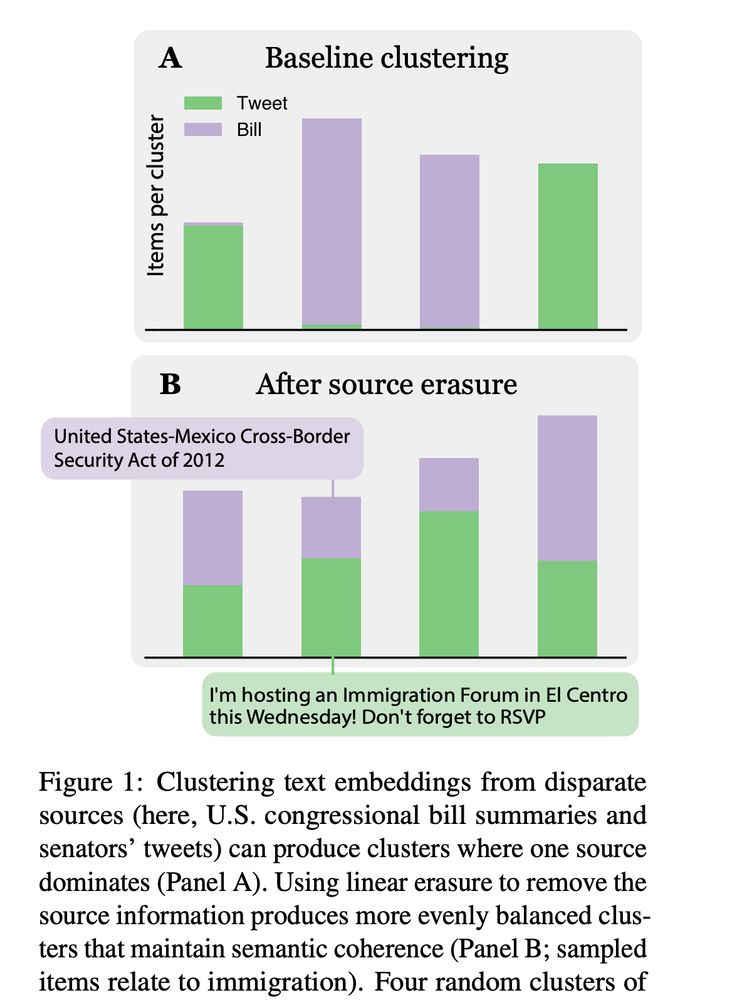

arxiv.org/abs/2507.01234

Turns out there's an easy fix🧵

This is a useful pass at quantifying some of the risk, and some mitigation strategies arxiv.org/pdf/2509.08825

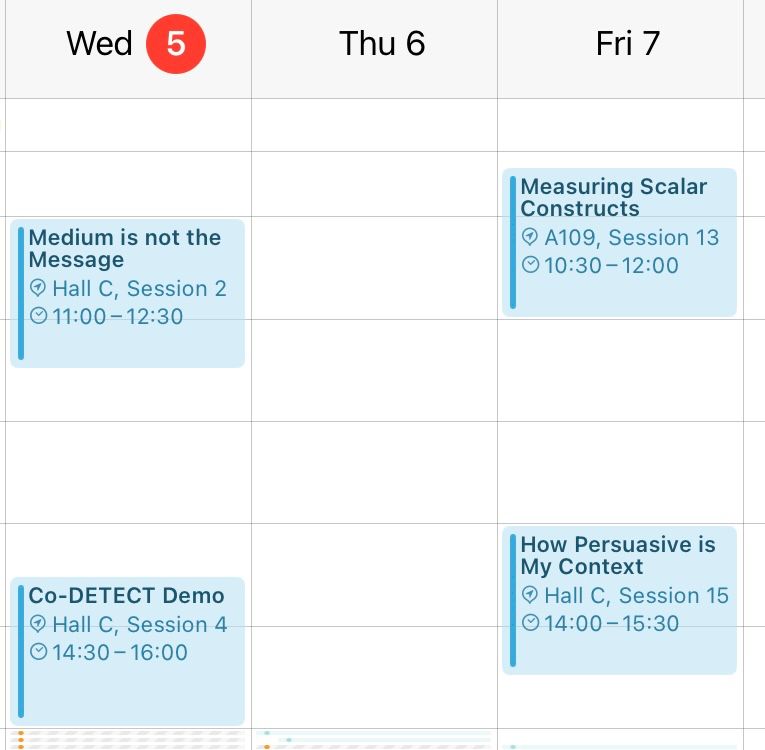

We have two lovely posters:

Tues Session 2, 10:30-11:50 — Large Language Models Struggle to Describe the Haystack without Human Help

Wed Session 4 11:00-12:30 — ProxAnn: Use-Oriented Evaluations of Topic Models and Document Clustering

That ends today (we hope)! Our new ACL paper introduces an LLM-based evaluation protocol 🧵

Read about our findings ⤵️

youtu.be/87OBxEM8a9E

(Perhaps I should be better at responding to those fundraising emails)

Are there mutual aid networks for international students? What can we as citizens do here?

(NB: please don't take this question as a tacit endorsement of BERTopic, I'm just trying to evaluate it fairly)

(And now that Bluesky is taking off, you will be connected to NLP’s #1 influencer!)

Boulder is a lovely college town 30 minutes from Denver and 1 hour from Rocky Mountain National Park 😎

Apply by December 15th!

![“He’ll have to go the whole way to satisfy this audience. “Ah hadn’ meant to say this tonight, but yew-know, if one of those hippies lays down in front of mah car when Ah become President …” They drown out the punch line in happy fulfilled anger. Refrain of some favorite song, it is too longed-for to be audible when it comes.

Their happiness is enough to break the heart. They vomit laughter. Trying to eject the vacuum inside them. They are not hungry or underprivileged or deprived in material ways. Each has, in some minor way, “made it.” And it all means nothing. Washington does not care. The children do not care. They have worked, and for what? As I looked through the crowd—the very young, and then a jump to middle age, no college students there but the protesting peaceniks—I wondered if the young mother from the street corner was there (someone watching her bright smear of baby), the one who screamed at the marching priests. Had the policeman come, the one who said last night that he did not back off in fourteen years? Had he turned in his resignation that day?—the[…]”

Excerpt From

Nixon Agonistes

Garry Wills](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:hqplaidpke4v5anbvw2vehxf/bafkreih663dhrs5qowcb4pavclkh3hsfz2l6ayraquntomiwue225wqp24@jpeg)

twitter.com/emollick/sta...

pubmed.ncbi.nlm.nih.gov/15482073/

www.tandfonline.com/doi/epdf/10....

www.tandfonline.com/doi/full/10....

doi.org/10.1613/jair...

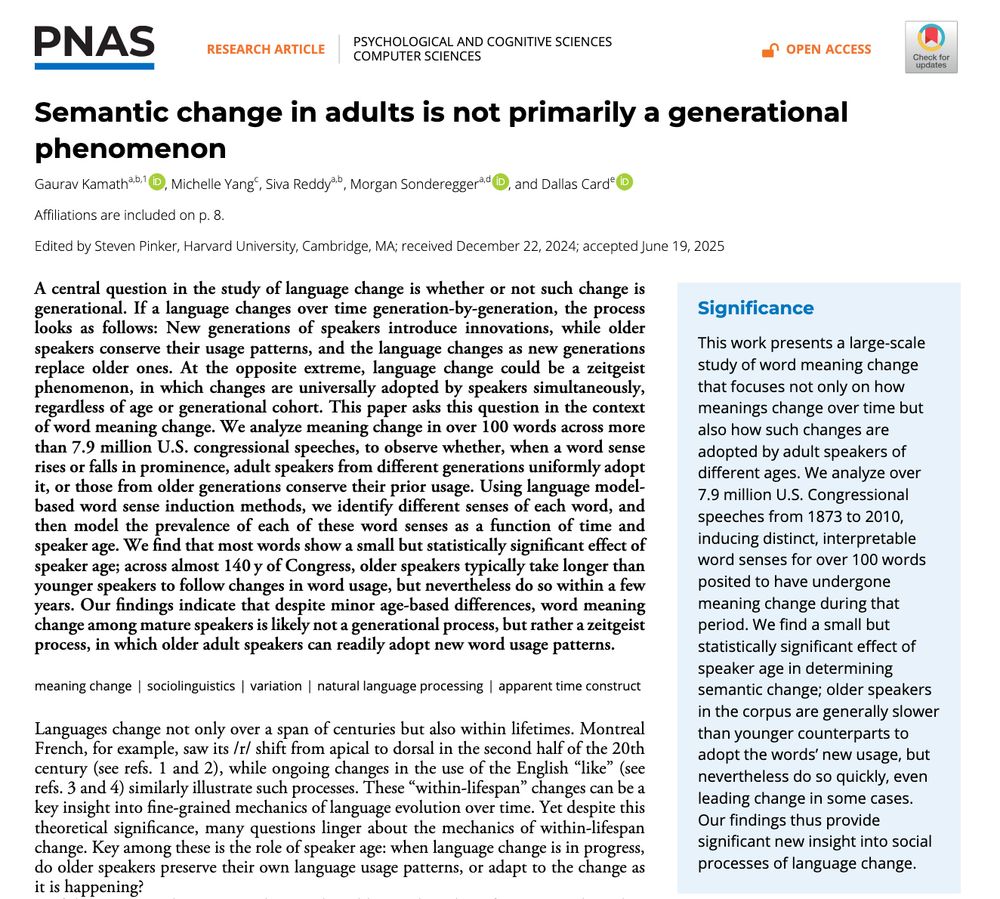

Joint work with Alexander @alexanderhoyle.bsky.social, Mrinmaya Sachan, and Elliott @elliottash.bsky.social.

www.youtube.com/watch?v=qIDj...

arxiv.org/abs/2310.17774
