Not quite sure why, but I apparently wrote sixteen long #AI-related Sunday Harangues in 2024 😅.

Most were first posted on Twitter.

👉https://x.com/rao2z/status/1873214567091966189

No. 👇

x.com/i/status/202...

𝙇𝙚𝙘𝙩𝙪𝙧𝙚 1: youtube.com/watch?v=_PPV...

𝙇𝙚𝙘𝙩𝙪𝙧𝙚 2: youtube.com/watch?v=fKlm...

@msftresearch.bsky.social India and at IndoML Symposium--were "On the Mythos of LRM Thinking Tokens." Here is a recording of one of them: the talk I gave at MSR India.

www.youtube.com/watch?v=fCQX...

www.youtube.com/watch?v=rvby...

👉 x.com/rao2z/status...

www.youtube.com/watch?v=L2nA...

www.linkedin.com/posts/subbar...

x.com/rao2z/status...

x.com/rao2z/status...

👉 www.linkedin.com/posts/subbar...

👉 x.com/rao2z/status...

̶̶̶A̶̶̶G̶̶̶I̶̶̶ ̶(̶A̶r̶t̶i̶f̶i̶c̶i̶a̶l̶ ̶G̶e̶n̶e̶r̶a̶l̶ ̶I̶n̶t̶e̶l̶l̶i̶g̶e̶n̶c̶e̶)̶

̶̶̶A̶̶̶S̶̶̶I̶̶̶ ̶(̶A̶r̶t̶i̶f̶i̶c̶i̶a̶l̶ ̶S̶u̶p̶e̶r̶ ̶I̶n̶t̶e̶l̶l̶i̶g̶e̶n̶c̶e̶)

ASDI (Artificial Super Duper Intelligence)

Don't get stuck with yesterday's hypeonyms!

Dare to get to the next level!

#AIAphorisms

www.youtube.com/playlist?lis...

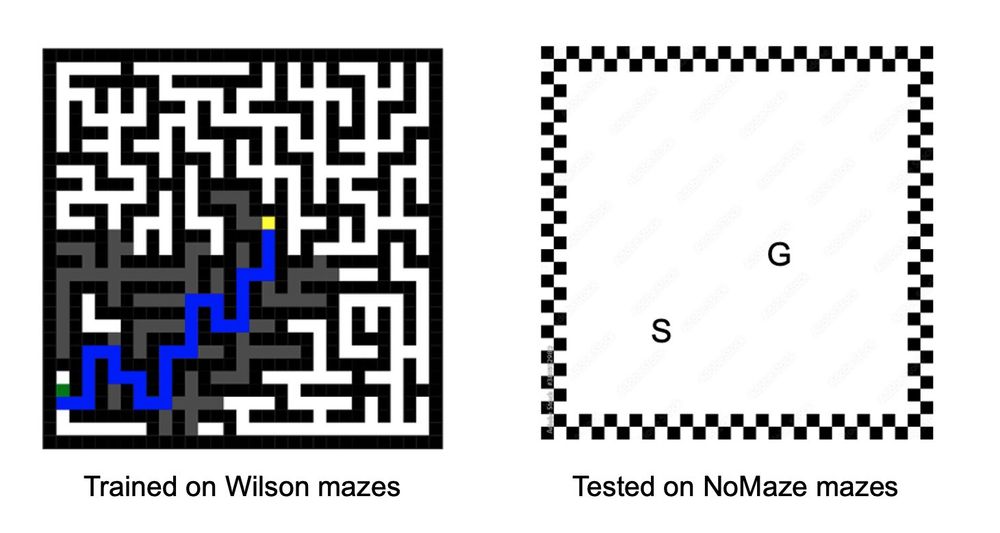

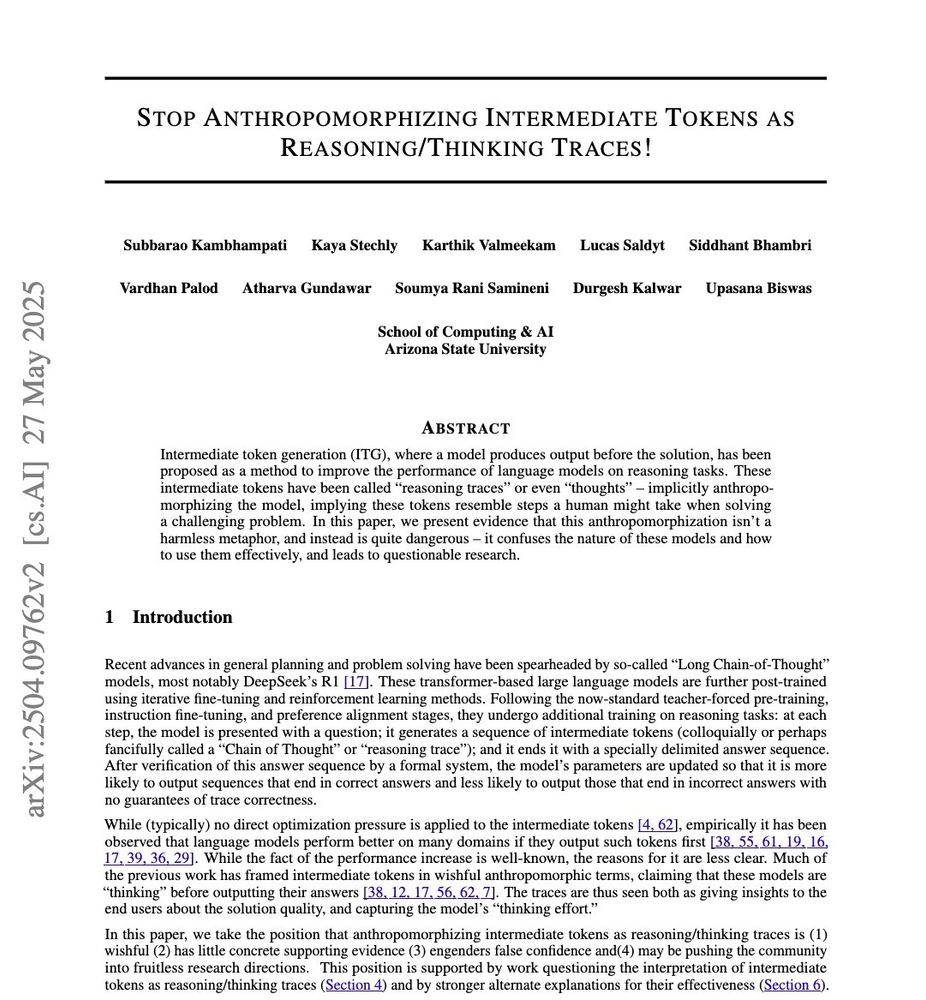

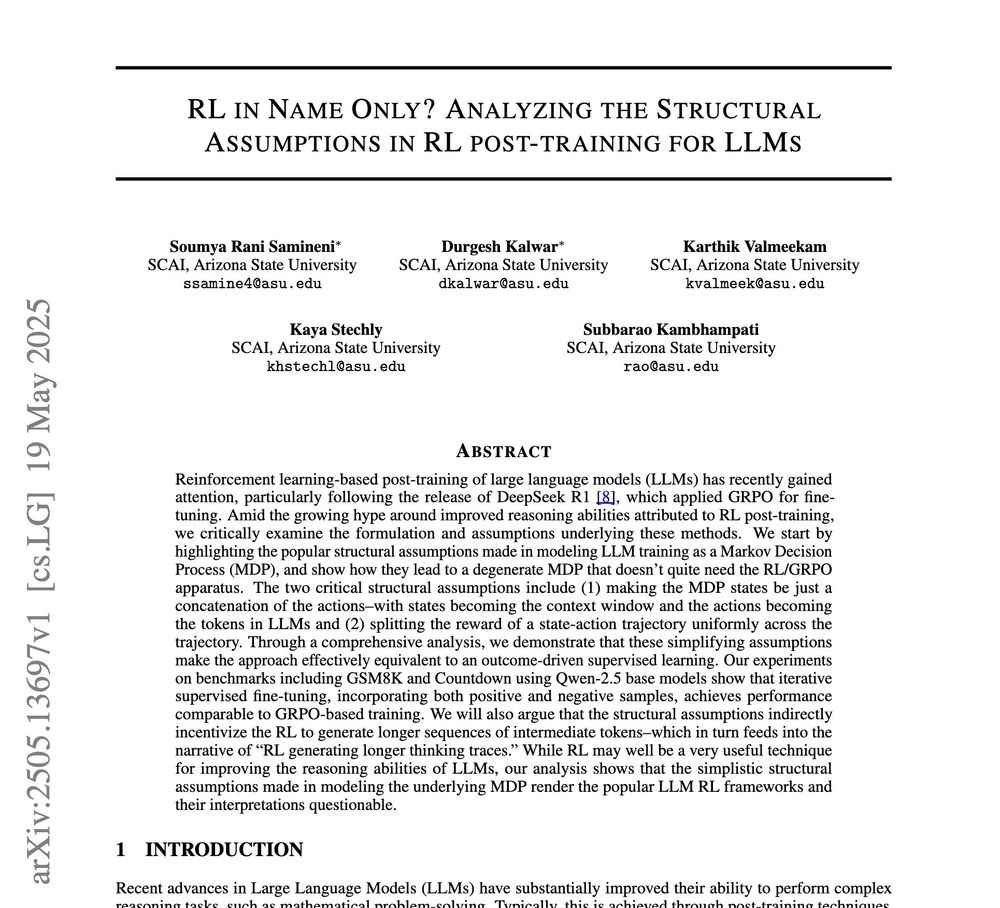

See, e.g., lots of recent papers by Subbarao Kambhampati's group at ASU. (2/2)

Lucas Saldyt dives deeper into this issue 👇👇

x.com/SaldytLucas/...

Thread 👉 x.com/rao2z/status...

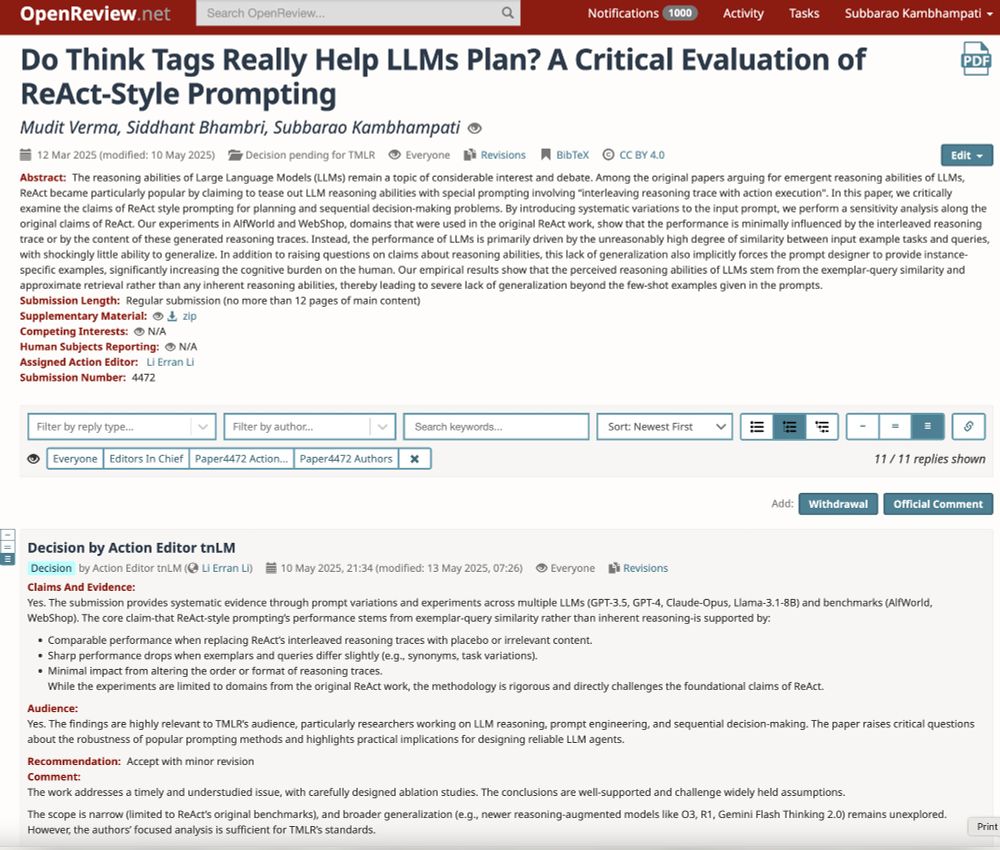

critical evaluation and refutation of the reasoning claims of ReAct has been accepted to #TMLR (Transactions on Machine Learning Research)

👉https://openreview.net/forum?id=aFAMPSmNHR

x.com/rao2z/status...

x.com/rao2z/status...