👉 x.com/rao2z/status...

👉 x.com/rao2z/status...

Thread 👉 x.com/rao2z/status...

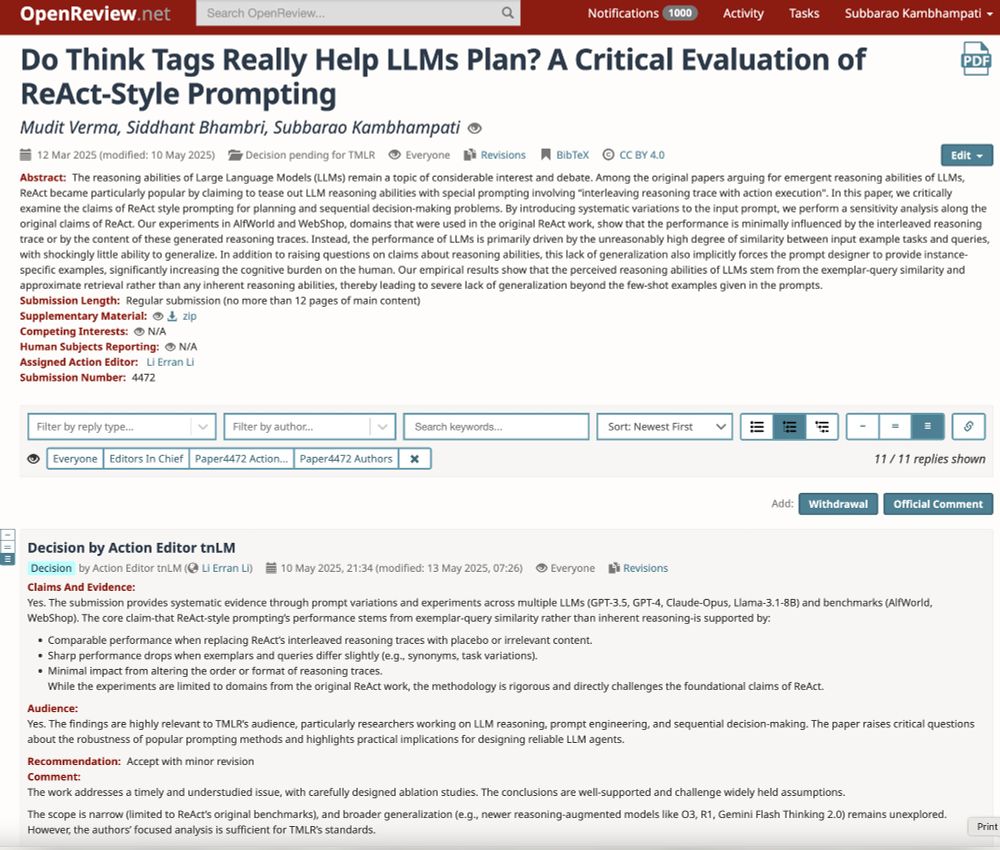

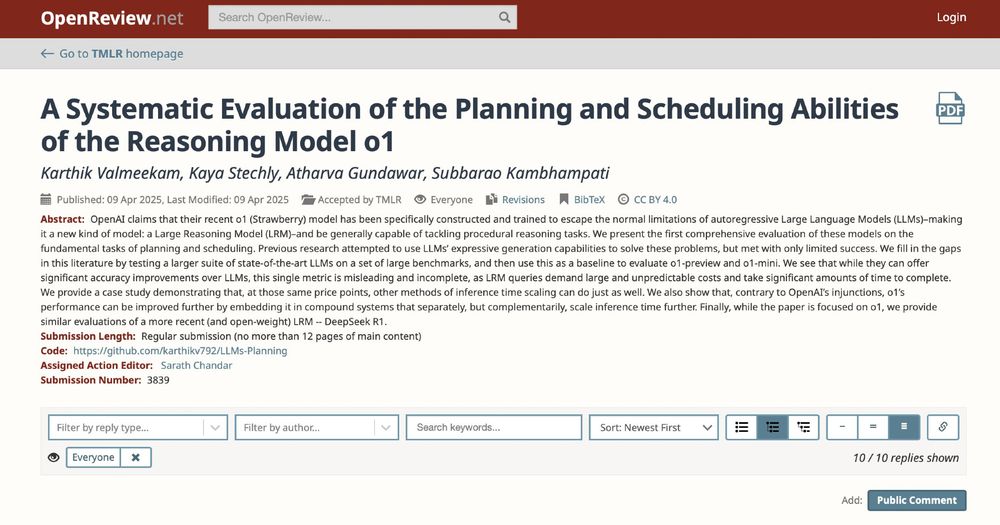

critical evaluation and refutation of the reasoning claims of ReACT has been accepted to #TMLR (Transactions on Machine Learning Research)

👉https://openreview.net/forum?id=aFAMPSmNHR

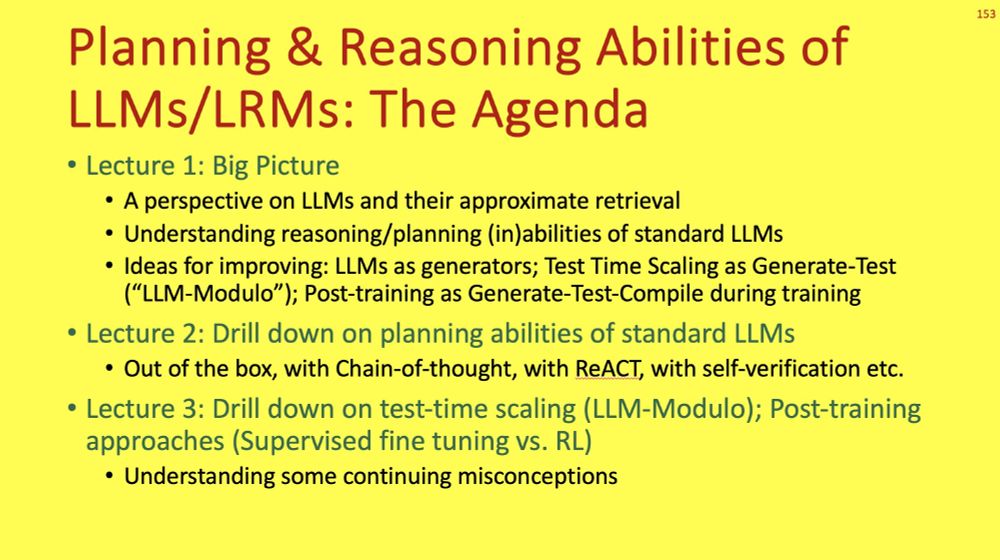

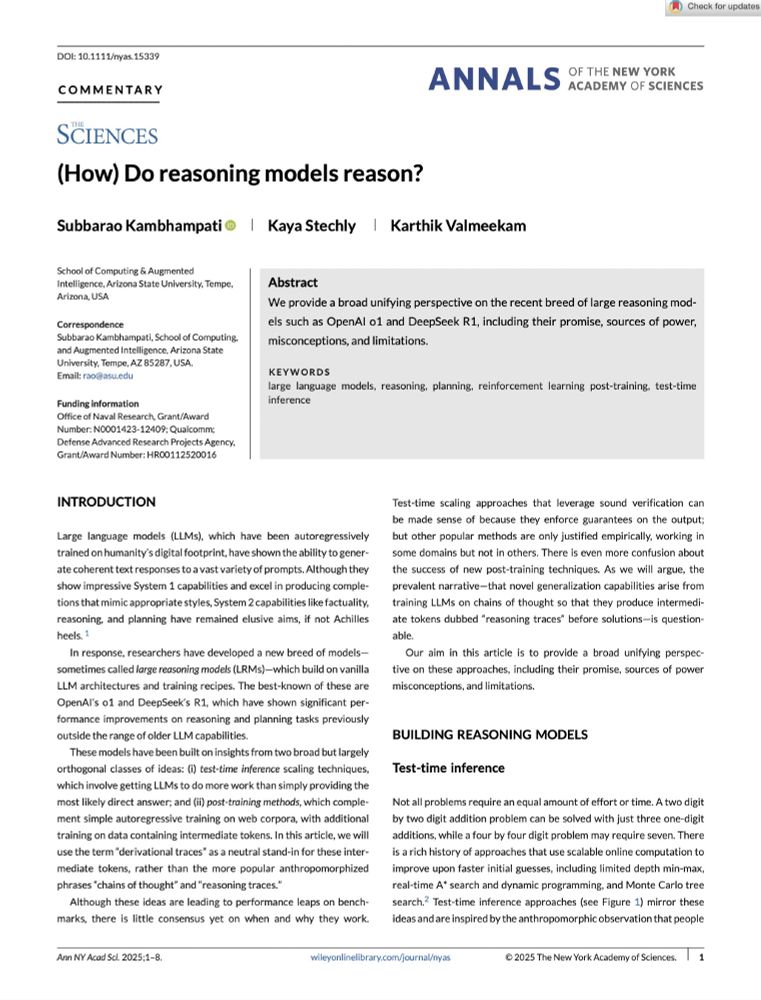

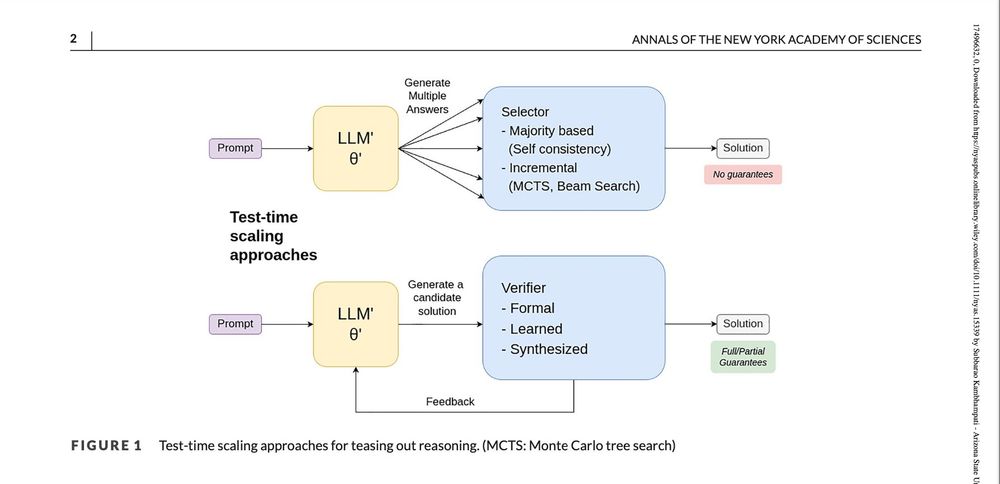

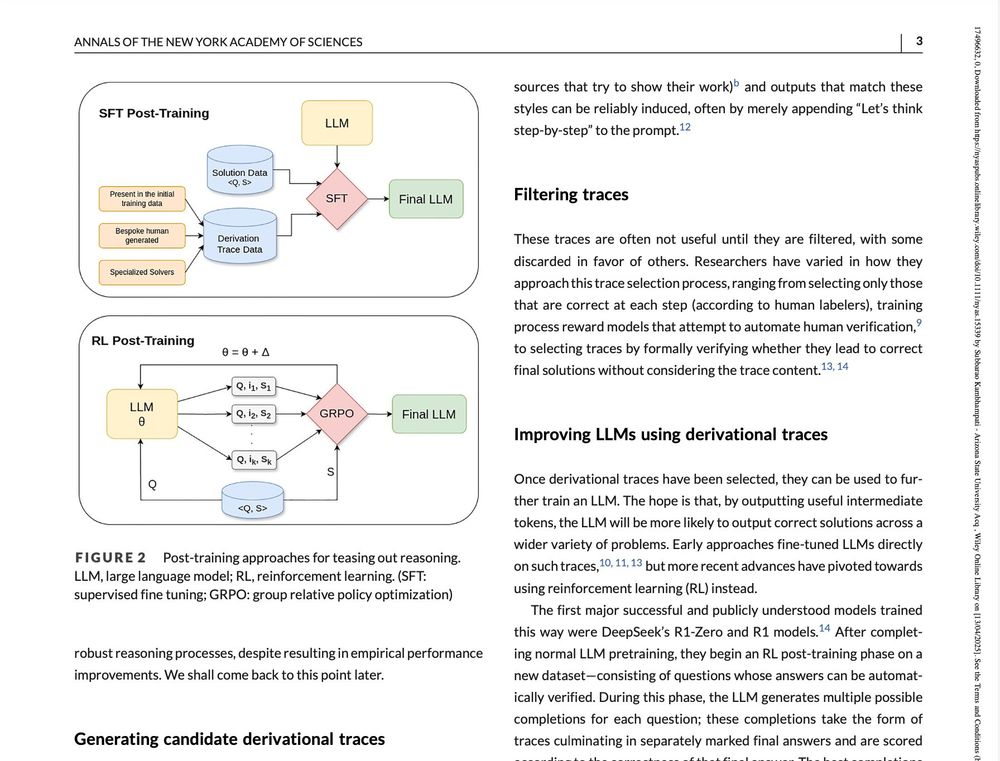

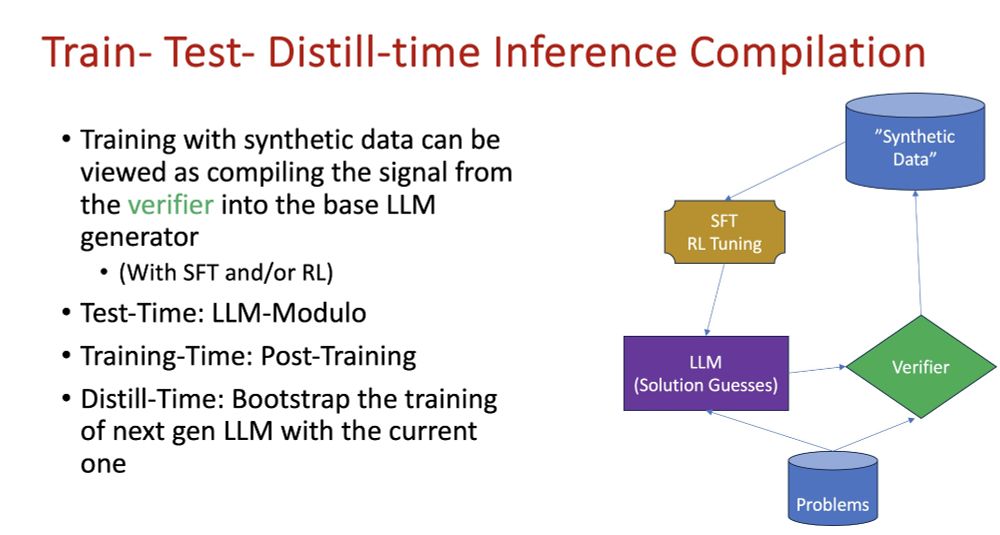

New York Academy of Sciences titled "(How) Do reasoning models reason?" is now online

👉 nyaspubs.onlinelibrary.wiley.com/doi/epdf/10....

It is a written version of my recent talks (and #SundayHarangues) on the recent developments in LRMs..

👉 openreview.net/forum?id=FkK...

Even a jaded researcher like me has to admit that

Transactions on Machine Learning Research is a veritable oasis among #AI publication venues! 🙏

See 👉 x.com/rao2z/status...

Or 👉 www.linkedin.com/posts/subbar...

x.com/rao2z/status...

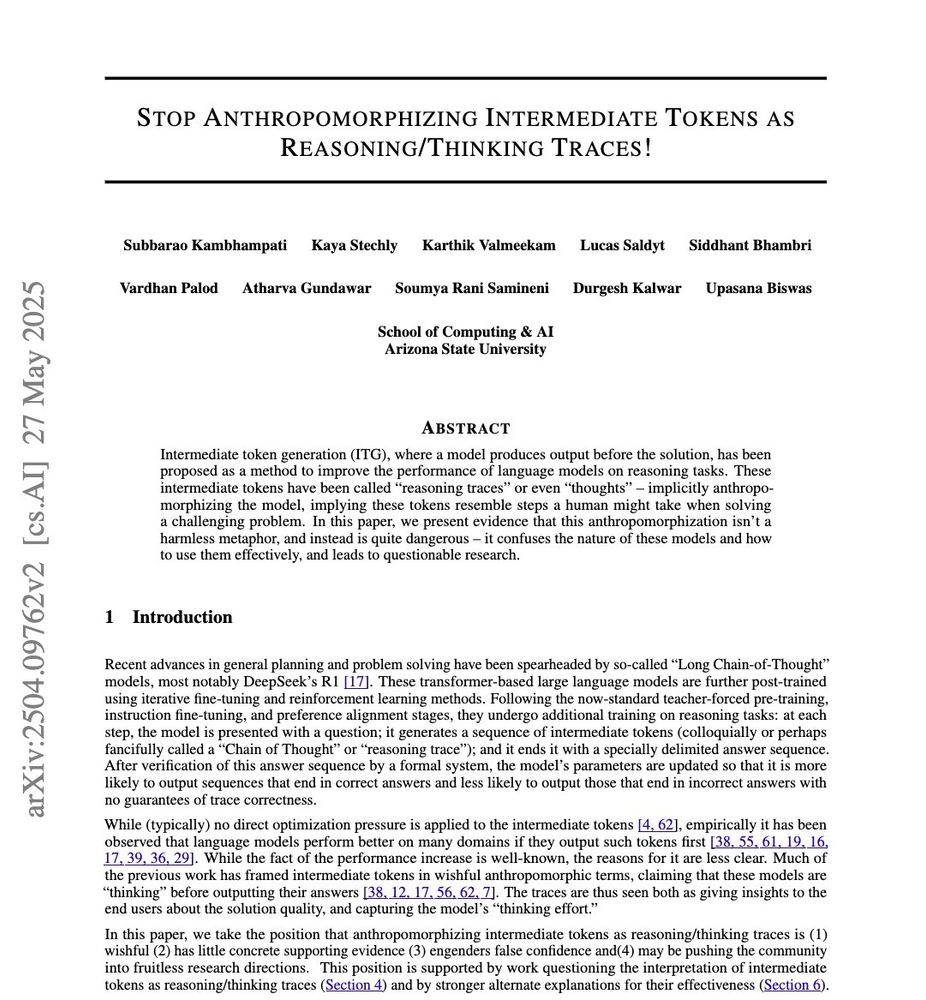

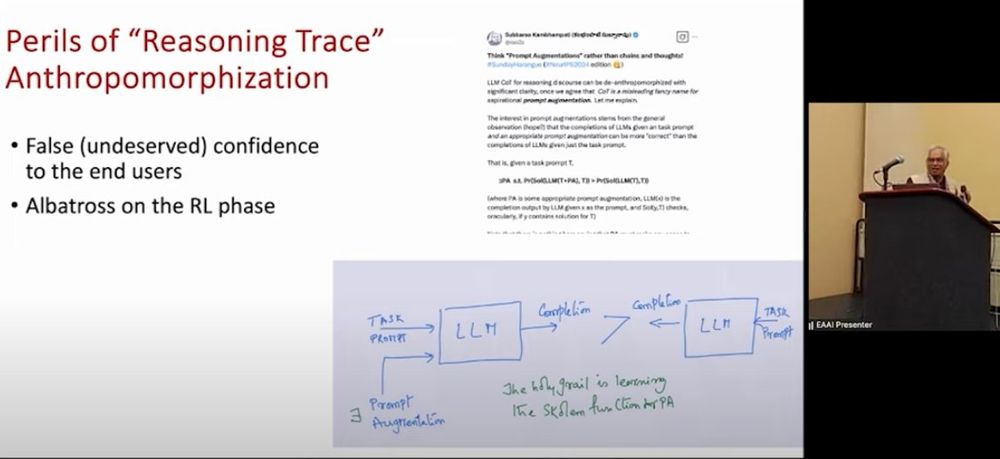

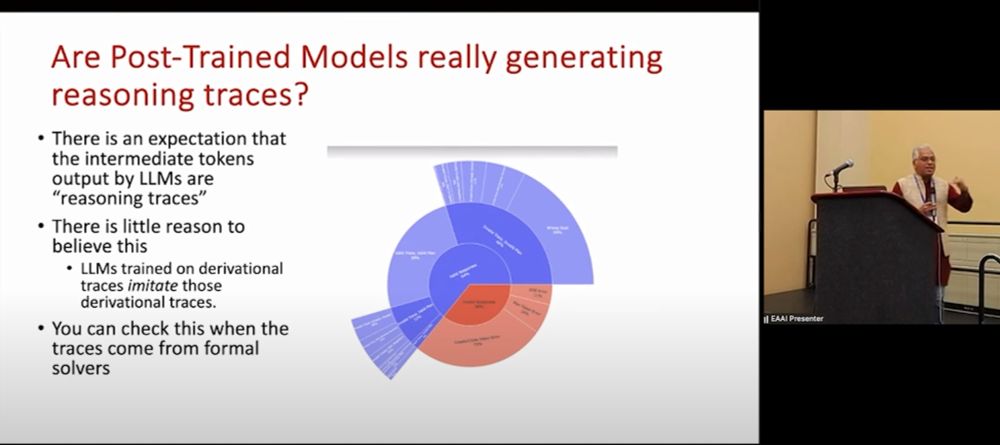

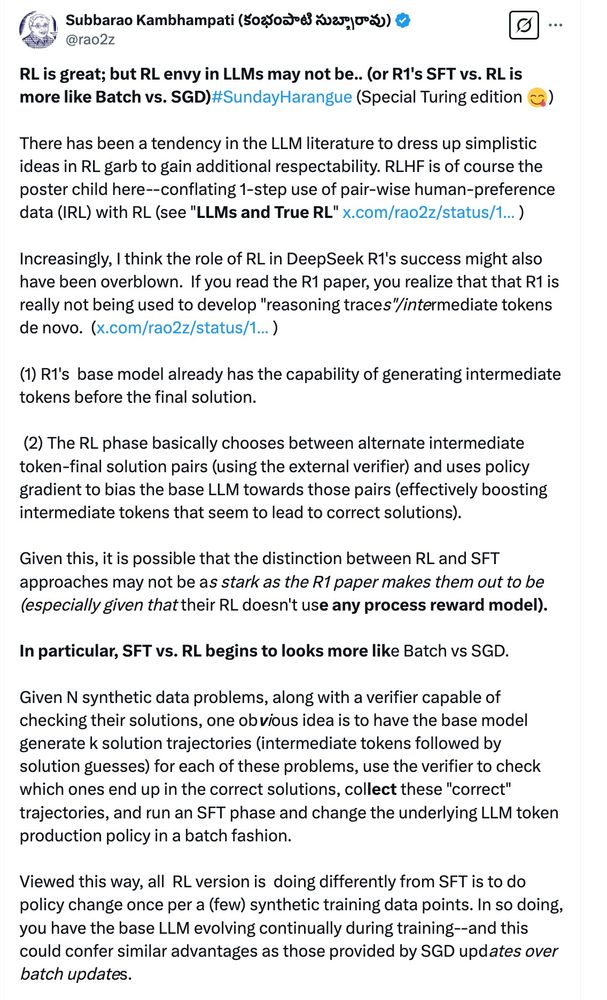

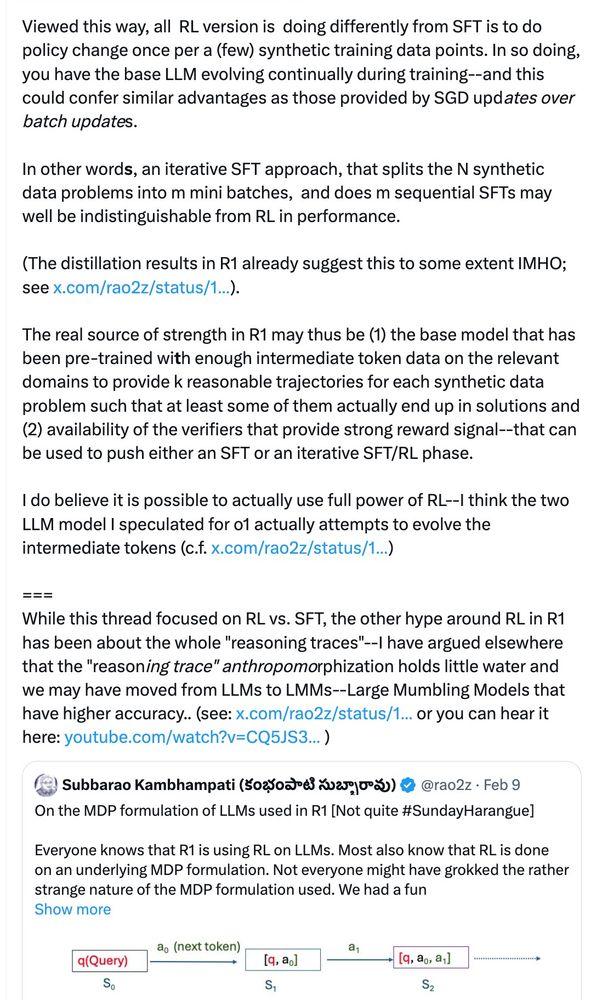

I have been saying for some time now that the intermediate tokens/"mumblings" that LRMs tell themselves are not to be seen as "reasoning traces" 👉 x.com/rao2z/status...

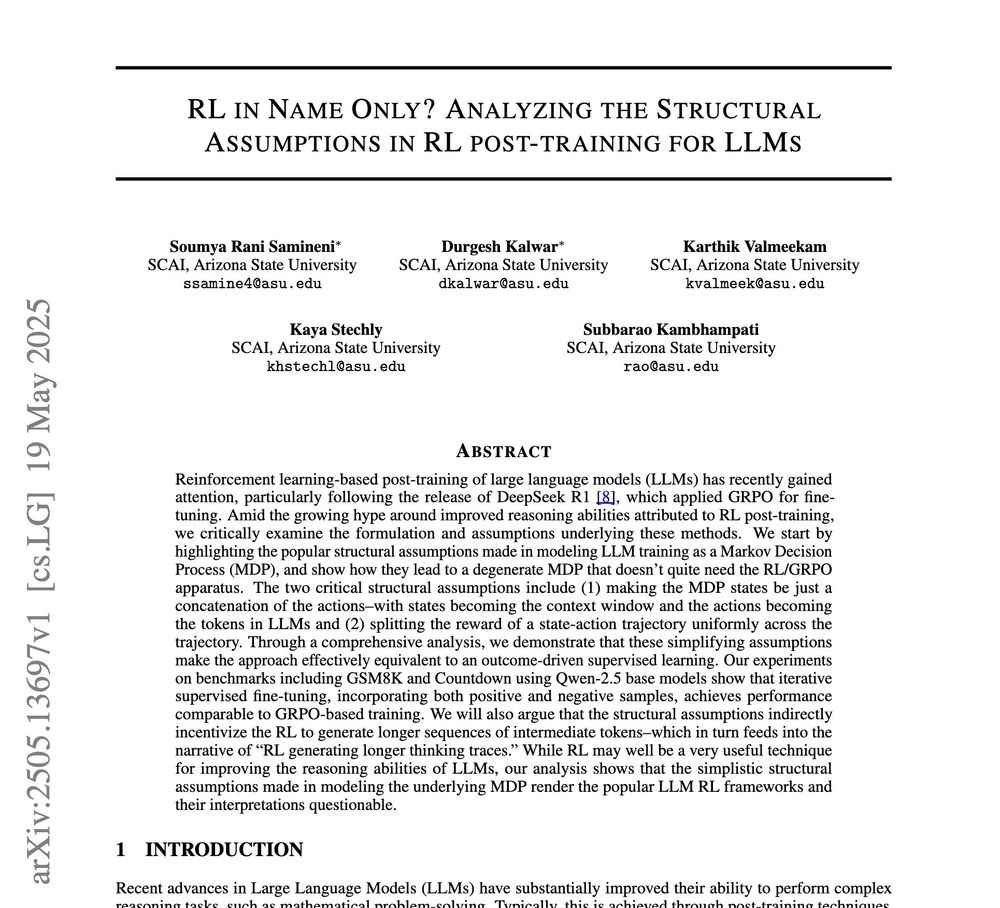

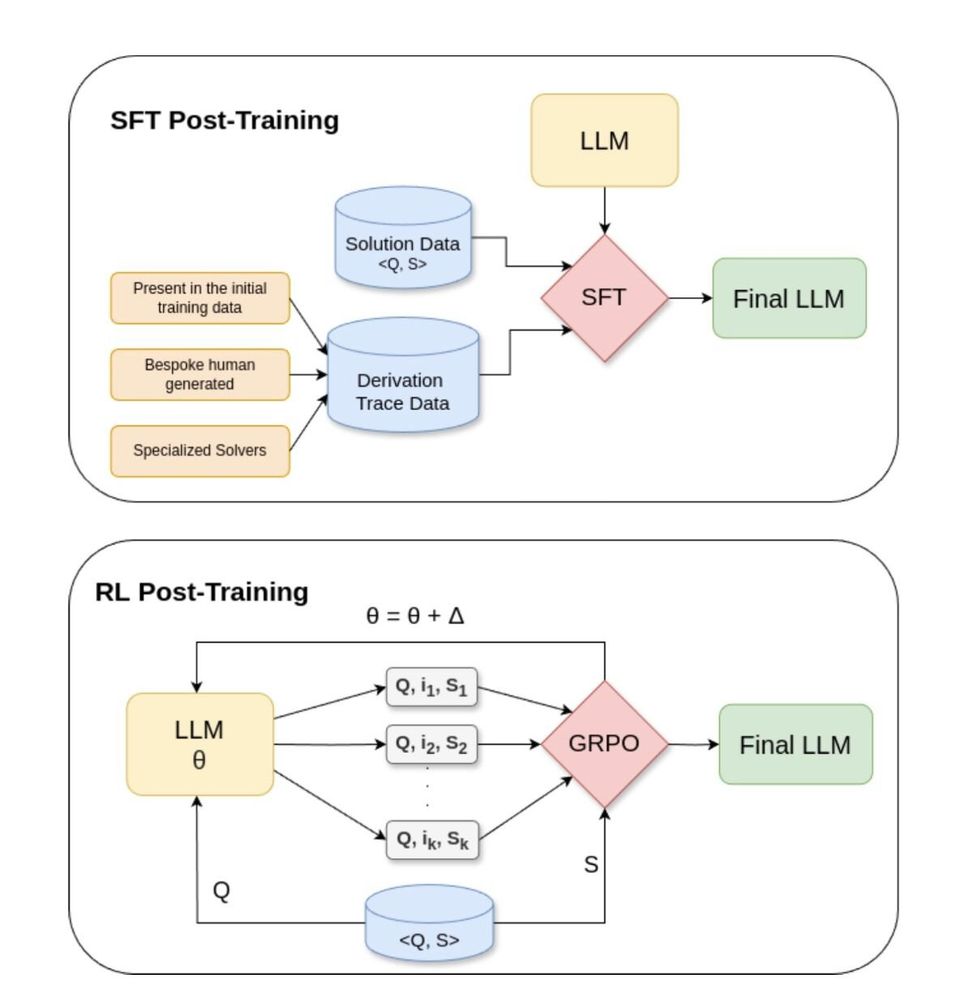

You know DeepSeek R1 uses RL--but did you grok the strange MDP formulation it uses for this? We had a fun 3hr discussion about this in our group meeting last Friday; here is a summary

👉 x.com/rao2z/status...

Explanatory thread here: x.com/rao2z/status...

[Thoughts on OpenAI/Frontier Math benchmark story]

x.com/rao2z/status...

Russell & Norvig recently published this great textbook on AGENTS!!

𝘏𝘦𝘳𝘦 𝘪𝘴 𝘸𝘩𝘢𝘵 𝘪𝘴 𝘪𝘯𝘤𝘭𝘶𝘥𝘦𝘥

AI Complete!

𝘞𝘩𝘦𝘳𝘦 𝘤𝘢𝘯 𝘺𝘰𝘶 𝘭𝘦𝘢𝘳𝘯 𝘢𝘣𝘰𝘶𝘵 𝘪𝘵 𝘢𝘭𝘭?

Your neighborhood Intro #AI course (e.g., rakaposhi.eas.asu.edu/cse471 )

See also 👉 x.com/rao2z/status...

Not quite sure why, but I apparently wrote sixteen long #AI-related Sunday Harangues in 2024.. 😅

Most were first posted on Twitter.

👉https://x.com/rao2z/status/1873214567091966189
