https://pdasigi.github.io/

I learned A LOT about LM post-training working on this project. We wrote it all up so now you can too.

Paper: allenai.org/papers/tulu-...

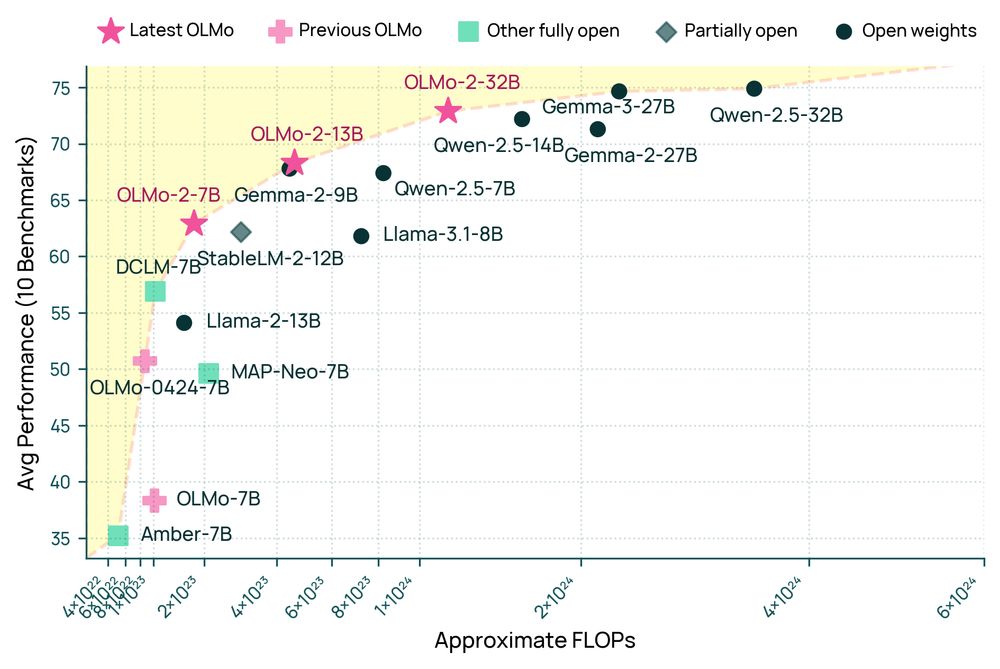

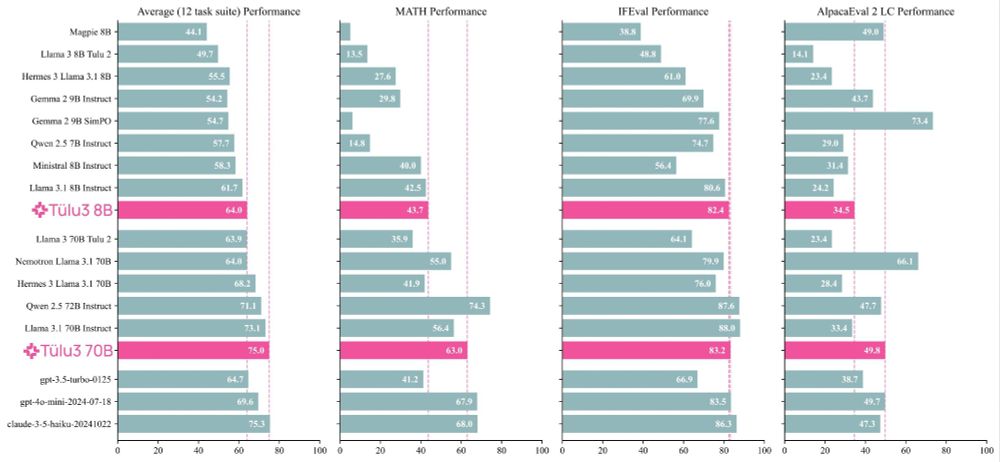

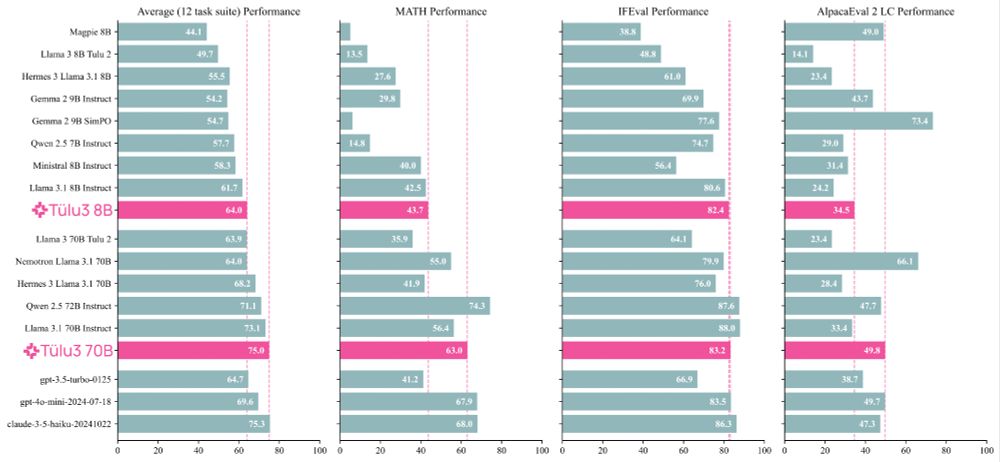

Comparable to the best open-weight models, but at a fraction of the training compute. When you have a good recipe, ✨ magical things happen when you scale it up!

In this work led by @hamishivi.bsky.social, we found that simple embedding-based methods scale much better than fancier, computationally intensive ones.

Turns out, when you look at large, varied data pools, lots of recent methods lag behind simple baselines, and a simple embedding-based method (RDS) does best!

More below ⬇️ (1/8)
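To make "embedding-based selection" concrete, here is a rough sketch of the general idea: score every example in a pool by its similarity to a few target examples and keep the top k. This is not the RDS implementation from the work. The toy vectors, the max-over-targets scoring, and the names `cosine` and `select_top_k` are all assumptions made for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def select_top_k(pool, targets, k):
    """Return the ids of the k pool examples most similar to any target.

    pool:    dict mapping example id -> embedding (hypothetical toy format)
    targets: list of embeddings for the task we care about
    Scoring by max similarity over targets is an assumption of this sketch.
    """
    scores = {
        ex_id: max(cosine(emb, t) for t in targets)
        for ex_id, emb in pool.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Toy embeddings; in practice these would come from an encoder or the LM itself.
pool = {
    "math_a": [0.9, 0.1, 0.0],
    "chat_b": [0.0, 1.0, 0.1],
    "math_c": [0.8, 0.2, 0.1],
    "code_d": [0.1, 0.0, 1.0],
}
targets = [[1.0, 0.0, 0.0]]
selected = select_top_k(pool, targets, k=2)
print(selected)
```

The appeal of this family of methods is that the expensive part (computing embeddings) is one forward pass per example, so it stays cheap as the pool grows.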

@ljvmiranda.bsky.social (ljvmiranda921.github.io) and Xinxi Lyu (alrope123.github.io) are also applying to PhD programs, and @valentinapy.bsky.social (valentinapy.github.io) is on the faculty market. Hire them all!!

Huge shoutout to @hamishivi.bsky.social and @vwxyzjn.bsky.social, who led the scale-up, and to the rest of the team!

Next, looking for OLMo 2 numbers.

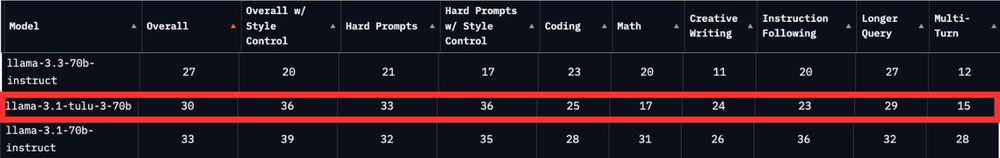

Particularly happy it is top 20 for Math and Multi-turn prompts :)

All the details and data on how to train a model this good are right here: arxiv.org/abs/2411.15124

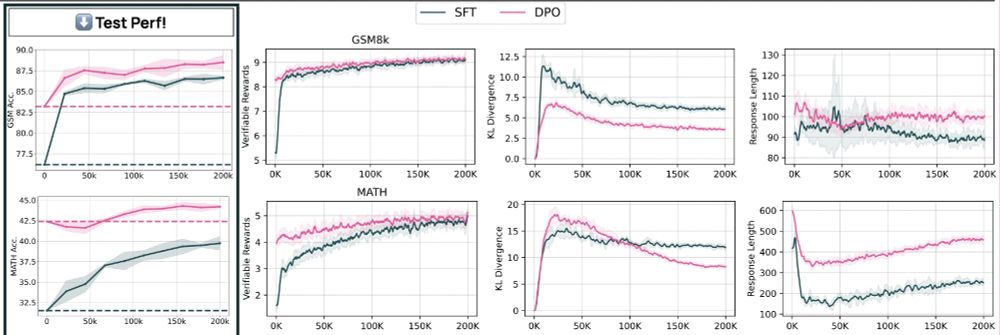

Using RL to train against labels is a simple idea, but very effective (>10pt gains just using GSM8k train set).

It's implemented for you to use in Open-Instruct 😉: github.com/allenai/open...
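As a minimal sketch of what "training against labels" can look like, the reward below checks whether the last number in a model completion matches the gold answer for a GSM8k-style problem. This is not the Open-Instruct API. The function names, the regex-based answer extraction, and the 1.0/0.0 reward values are assumptions chosen for illustration.

```python
import re

def extract_final_number(text):
    """Return the last number in the text as a string, or None if absent.

    Commas are stripped first so that "1,250" is read as 1250.
    """
    matches = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return matches[-1] if matches else None

def verifiable_reward(completion, gold_answer):
    """Binary reward: 1.0 if the final number equals the label, else 0.0.

    The reward scale is an assumption of this sketch; an RL trainer would
    feed this scalar in place of a learned reward model's score.
    """
    predicted = extract_final_number(completion)
    if predicted is None:
        return 0.0
    return 1.0 if float(predicted) == float(gold_answer) else 0.0

print(verifiable_reward("She has 3 + 4 = 7 apples. The answer is 7.", "7"))
print(verifiable_reward("I think it is 12", "7"))
```

Because the check is a deterministic comparison against a known label, there is no reward model to train or to over-optimize against, which is part of why the idea is so simple to apply.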

Apply here: job-boards.greenhouse.io/thealleninst...

There are now several training configs that together reproduce the training runs that led to the final OLMo 2 models.

In particular, all the training data is available, tokenized and shuffled exactly as we trained on it!

Importantly, we use the same post-training recipe as our recently released Tülu 3, and it works remarkably well, more so at the 13B size.

huggingface.co/allura-org/T...

I was lucky enough to work on almost every stage of the pipeline in one way or another. Some comments + highlights ⬇️

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇

Some of my personal highlights:

💡 We significantly scaled up our preference data!

💡 RL with Verifiable Rewards to improve targeted skills like math and precise instruction following

💡 evaluation toolkit for post-training (including new unseen evals!)
