https://pdasigi.github.io/

@ljvmiranda.bsky.social (ljvmiranda921.github.io) and Xinxi Lyu (alrope123.github.io) are also applying to PhD programs, and @valentinapy.bsky.social (valentinapy.github.io) is on the faculty market. Hire them all!!

This is an interesting idea though, and I hope there's a way to make it work.

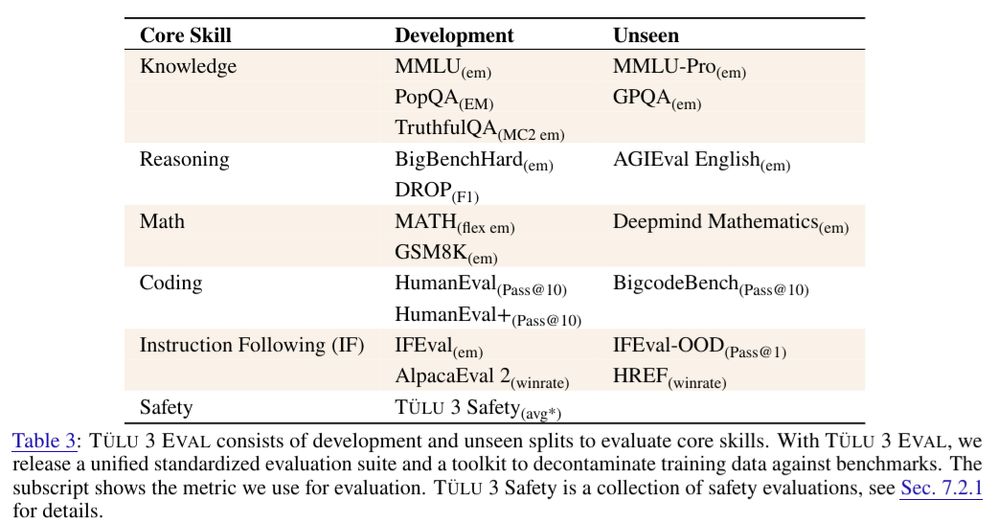

1) IFEval-OOD, a variant of IFEval (Zhou et al., 2023) but with a disjoint set of constraints.

2) HREF, a more general instruction-following (IF) eval covering a diverse set of IF tasks.

Detailed analyses of these evals are coming out soon.

Reproducing LM evaluations is notoriously difficult, so we released our evaluation framework, which lets you specify and tweak every last detail and reproduce what we did: github.com/allenai/olmes.