Generating MNIST digits for a decade.

The morning keynotes talked a lot about open source so my slide here might be timely.

If you are using bilinear interpolation anywhere, NAF acts as a strict drop-in replacement.

Just swap it in. No retraining required. It’s literally free points for your metrics.📈

We were curious if we could train diffusion models on sets of point coordinates.

For images, this is a step towards spatial diffusion, with pixels reorganizing themselves, instead of diffusing in rgb values space only.

by: E. Kirby, @mickaelchen.bsky.social, R. Marlet, N. Samet

tl;dr: a diffusion-based method producing lidar point clouds of dataset objects, with an extensive control of the generation

📄 arxiv.org/abs/2412.07385

Code: ✅

Registration is open (it's free) with priority given to authors of accepted papers: cvprinparis.github.io/CVPR2025InPa...

Big 🧵👇 with details!

Conjecture:

As we get more and more well-aligned text-image data, it will become easier and easier to train models.

This will allow us to explore both more streamlined and more exotic training recipes.

More signals that exciting times are coming!

How far can we go with ImageNet for Text-to-Image generation? w. @arrijitghosh.bsky.social @lucasdegeorge.bsky.social @nicolasdufour.bsky.social @vickykalogeiton.bsky.social

TL;DR: Train a text-to-image model using 1000× less data in 200 GPU hrs!

📜https://arxiv.org/abs/2502.21318

🧵👇

We trained a 1.2B parameter model on 1,800+ hours of raw driving videos.

No labels. No maps. Just pure observation.

And it works! 🤯

🧵👇 [1/10]

If you are an organisation concerned about the ethics of training data, now is probably your best chance to act and be heard.

www.reuters.com/technology/m...

Nah, I just made that up. Need to put more thought into this. 🤔

It's been true for multimodal data for a while, and semi-automated data as in the Florence-2 paper has been very successful. arxiv.org/abs/2311.06242

1. Pretraining is dead. The internet has run out of data.

2. What's next? Agents, synthetic data, inference-time compute

3. What's next long term? Superintelligence, reasoning, understanding, self-awareness, and we can't predict what's gonna happen.

I missed this when it came out, but I love papers like this: a simple change to an already powerful technique that significantly improves results without introducing complexity or hyperparameters.

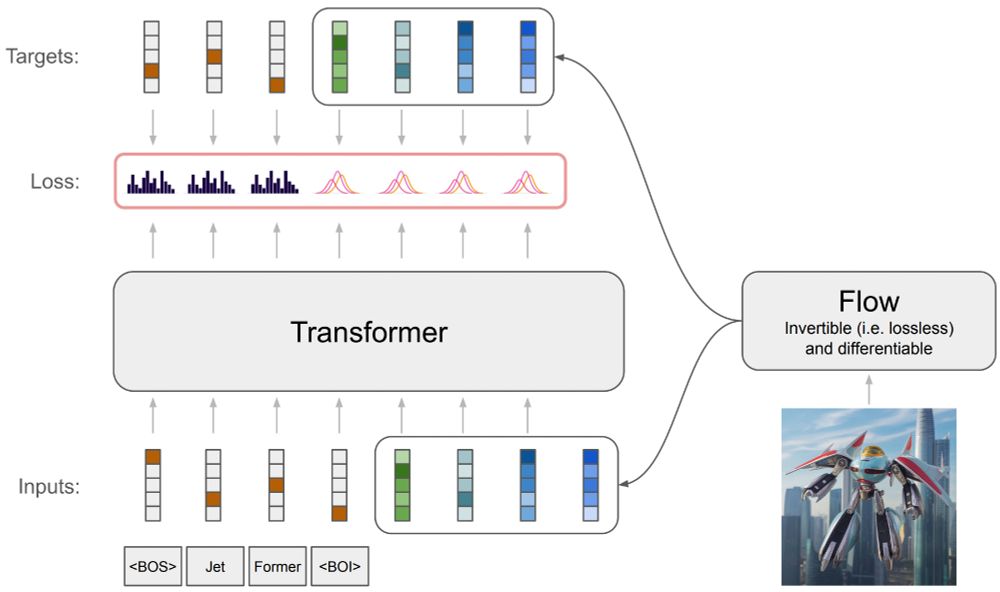

We have been pondering this during summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

We have a few MSc research internship openings at valeo.ai in Paris for 2025 on computer vision & machine learning (yeah AI).

You can find the openings in the link below along with the achievements of our amazing previous interns: valeoai.github.io/interns/

Join us!

It brings a change of paradigm in multi-camera bird's-eye-view (BeV) segmentation via a flexible mechanism to produce sparse BeV points that can adapt to situation, task, compute

www.linkedin.com/posts/andrei...

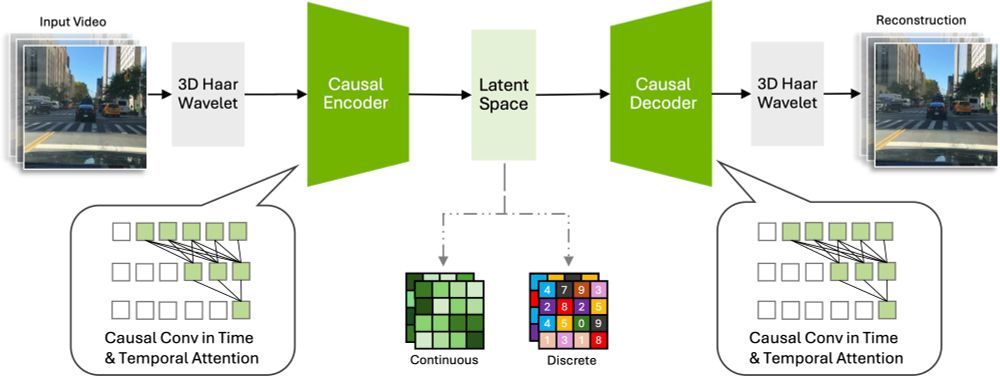

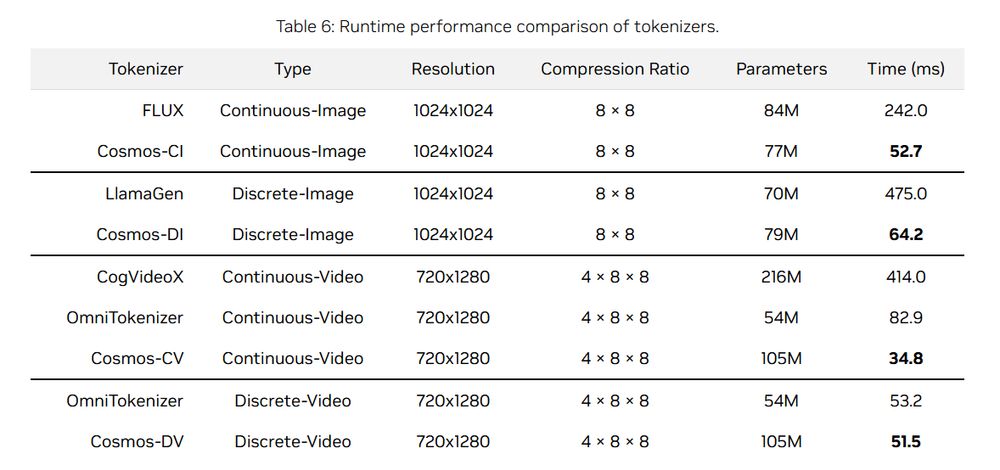

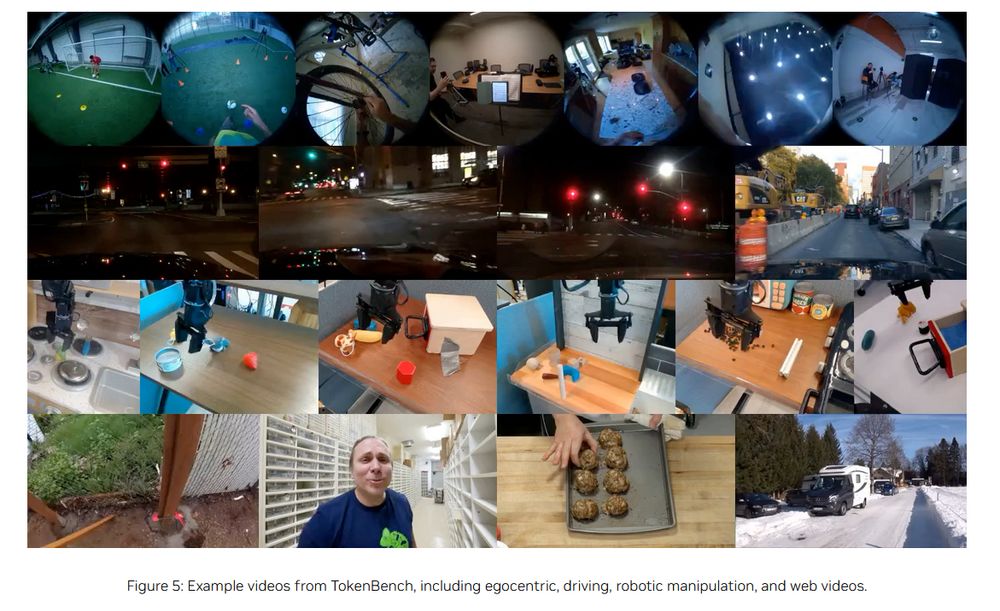

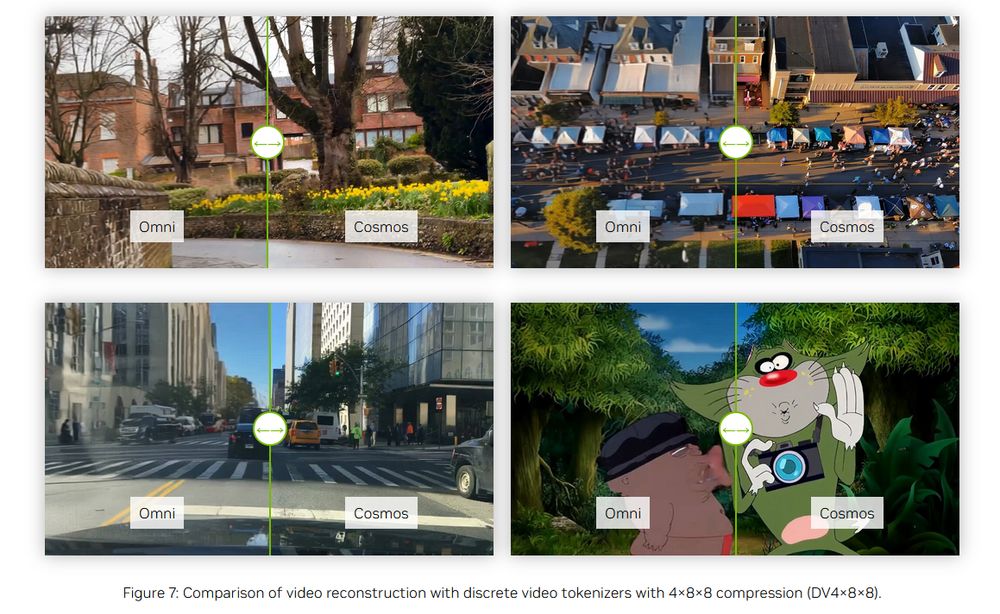

Cosmos is trained on diverse high-res imgs & long-vids, scales well for both discrete & continuous tokens, generalizes to multiple domains (robotics, driving, egocentric ...) & has excellent runtime

github.com/NVIDIA/Cosmo...
