mitscha.github.io

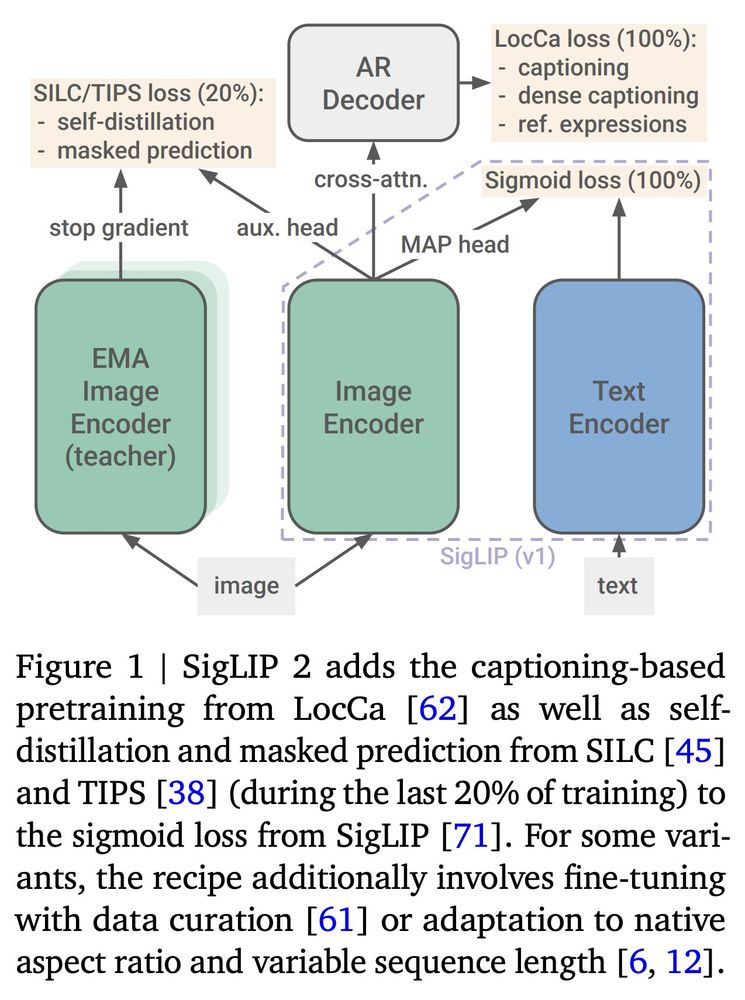

TL;DR: Improved high-level semantics, localization, dense features, and multilingual capabilities via drop-in replacement for v1.

Bonus: Variants supporting native aspect ratio and variable sequence length.

A thread with interesting resources👇

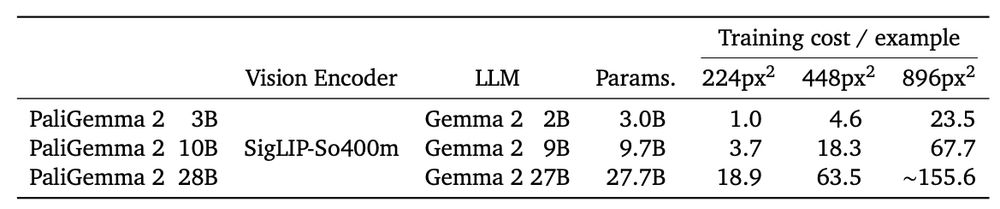

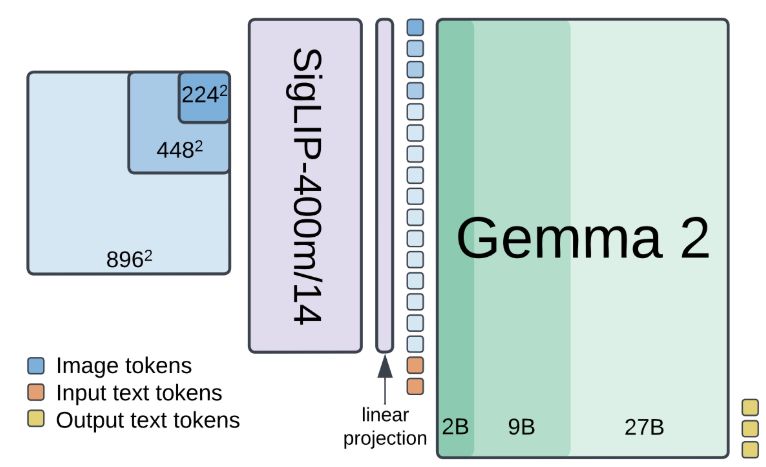

Not sure yet if you want to invest the time 🪄finetuning🪄 on your data? Give it a try with our ready-to-use "mix" checkpoints:

🤗 huggingface.co/blog/paligem...

🎤 developers.googleblog.com/en/introduci...

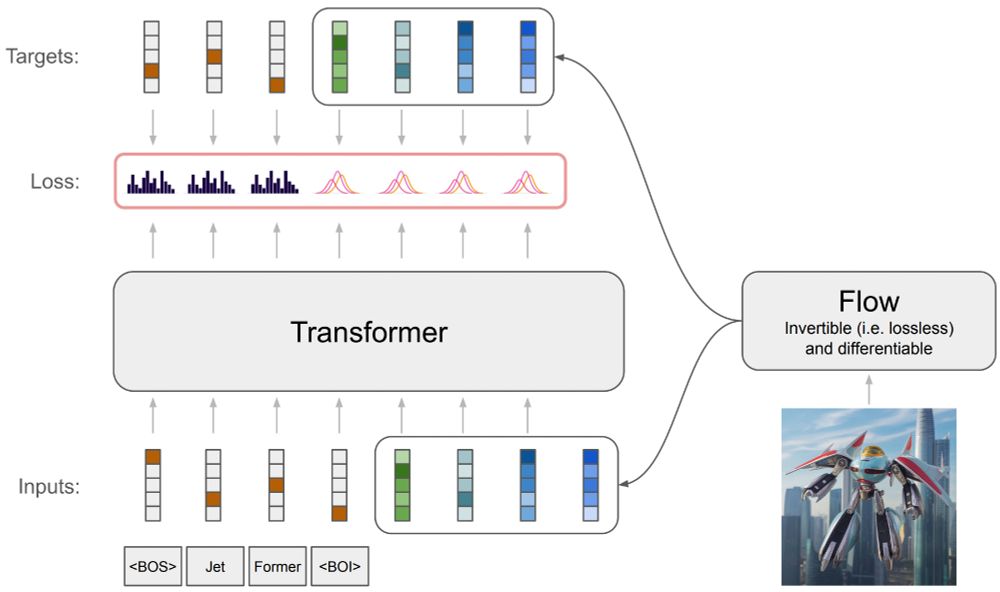

Jet is an important part of JetFormer's engine ⚙️ As a standalone model it is very tame and behaves predictably (e.g. when scaling it up).

Jet is one of the key components of JetFormer, deserving a standalone report. Let's unpack: 🧵⬇️

1/7

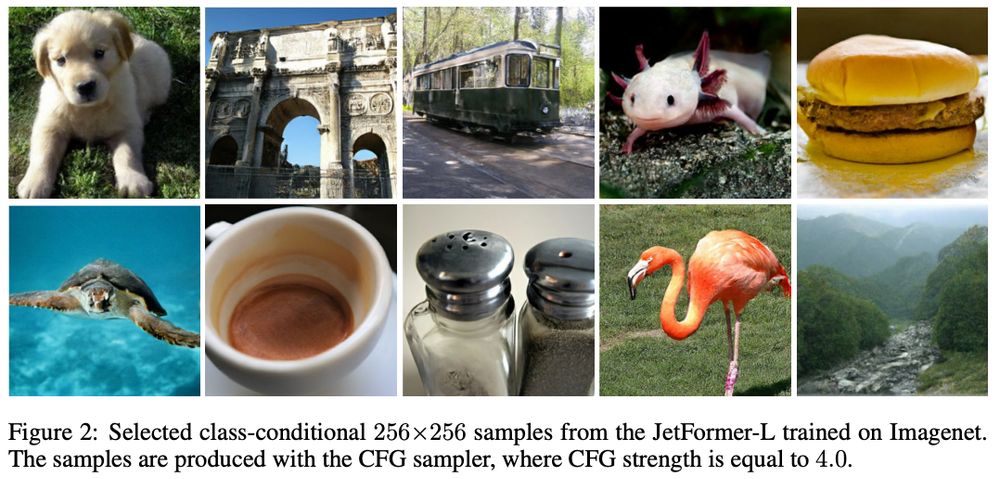

1. optimizes NLL of raw pixel data,

2. generates competitive high-res. natural images,

3. is practical.

But it seemed too good to be true. Until today!

Our new JetFormer model (arxiv.org/abs/2411.19722) ticks all of these boxes.

🧵

Enter JetFormer: arxiv.org/abs/2411.19722 -- joint work in a dream team: @mtschannen.bsky.social and @kolesnikov.ch

We have been pondering this during summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/
