mitscha.github.io

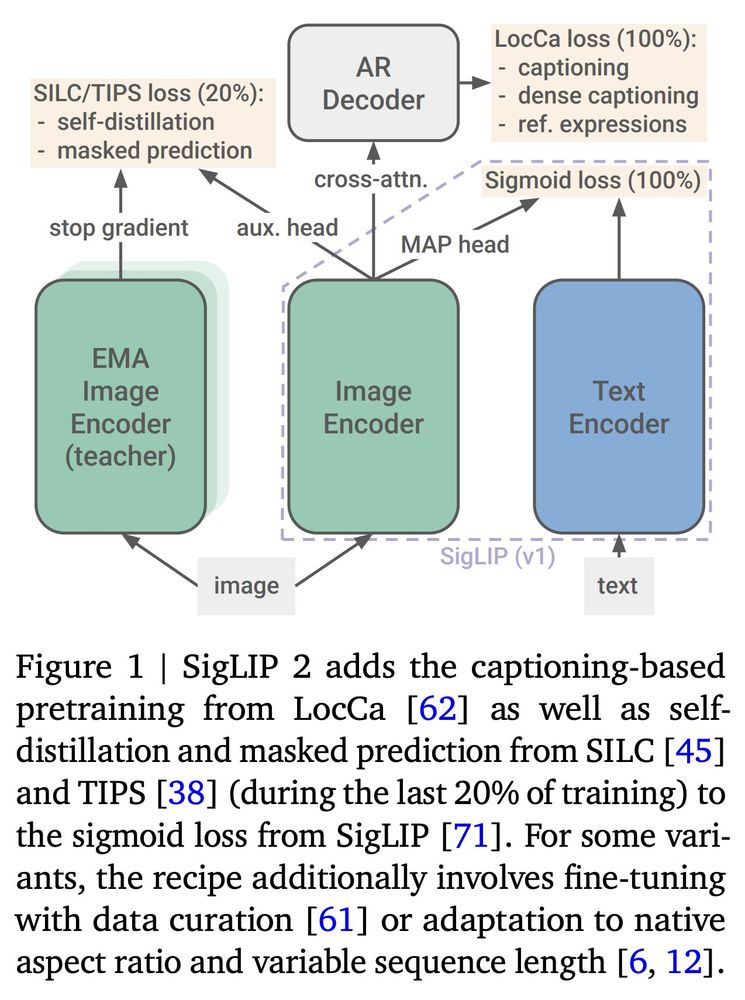

TL;DR: Improved high-level semantics, localization, dense features, and multilingual capabilities via a drop-in replacement for v1.

Bonus: Variants supporting native aspect ratio and variable sequence length.

A thread with interesting resources👇

4/

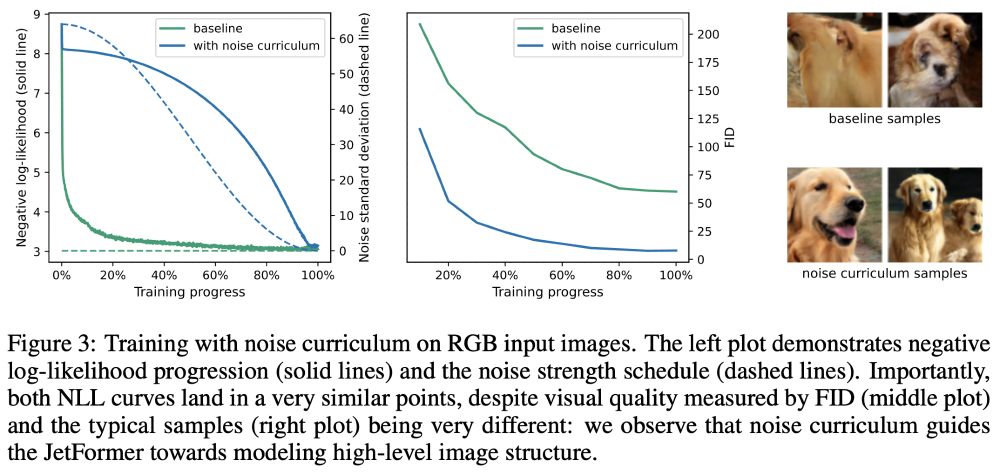

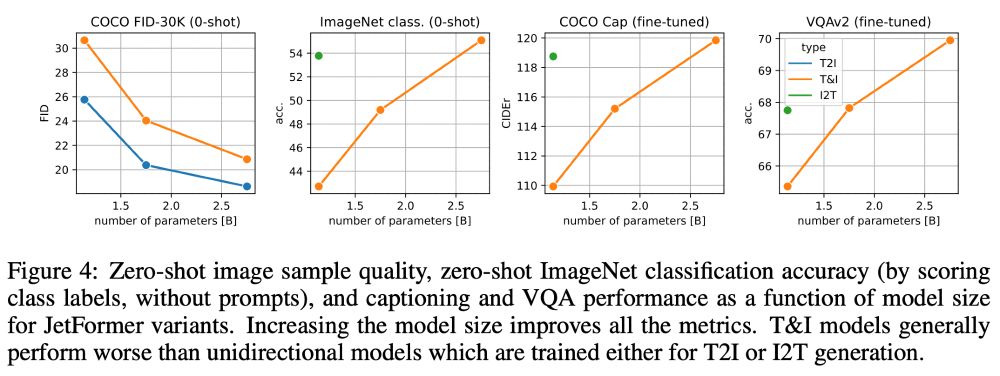

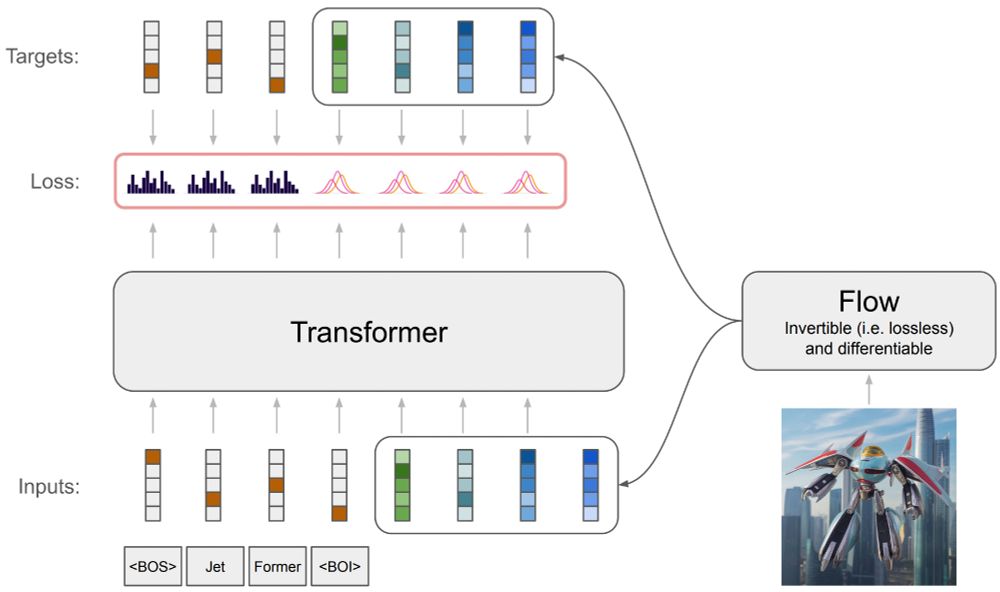

We train JetFormer to maximize the likelihood of the multimodal data, without auxiliary losses (perceptual or similar).

3/

We pondered this over the summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/
