Happy to share that we're taking a major step towards this by introducing FilBench, an LLM benchmark for Filipino!

Also accepted at EMNLP Main! 🎉

Learn more:

huggingface.co/blog/filbench

Thank you to everyone who contributed to this project 🥳

Paper: arxiv.org/abs/2503.07920

Project: seacrowd.github.io/seavl-launch/

#ACL2025NLP #SEACrowd #ForSEABySEA

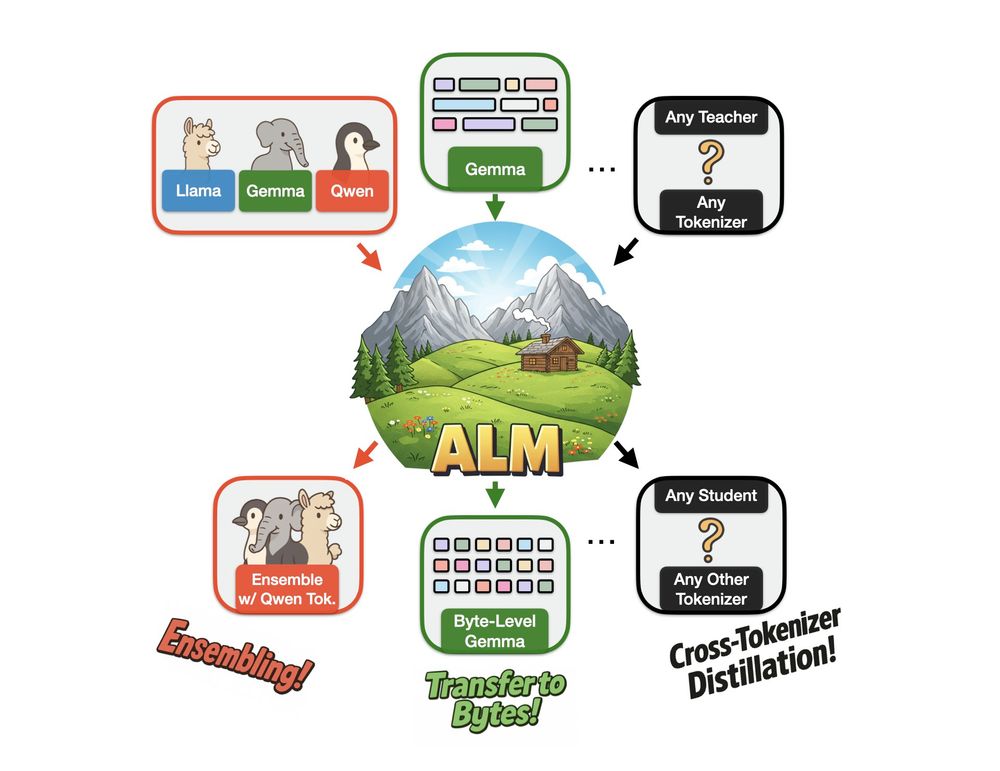

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level and a bunch more🧵

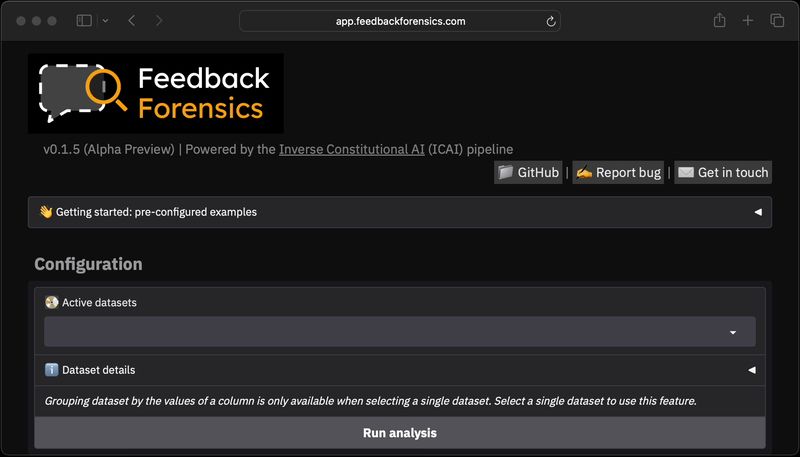

Feedback data is notoriously difficult to interpret and has many known issues – our app aims to help!

Try it at app.feedbackforensics.com

Three example use-cases 👇🧵

- Combines 7 datasets

- Filters for instruction-following capability

- Balances on-policy and off-policy prompts

- Enabled successful DPO of OLMo-2-0325-32B model

huggingface.co/datasets/all...

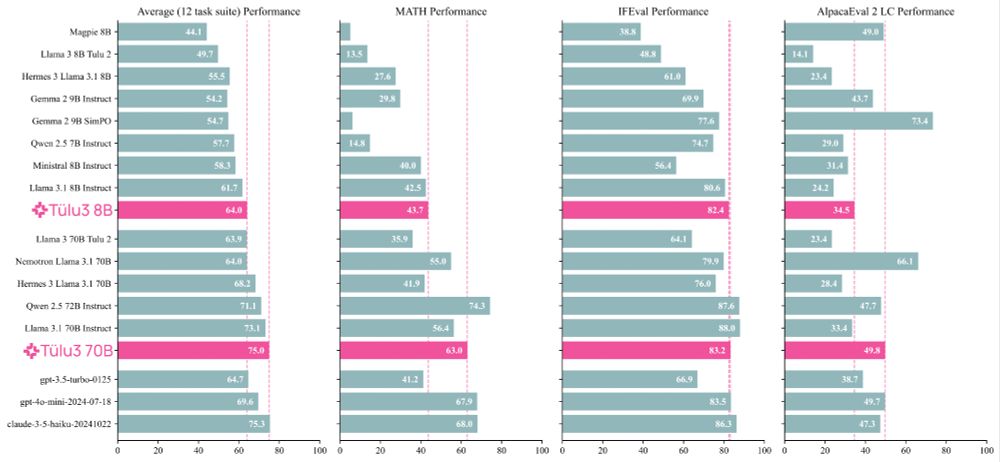

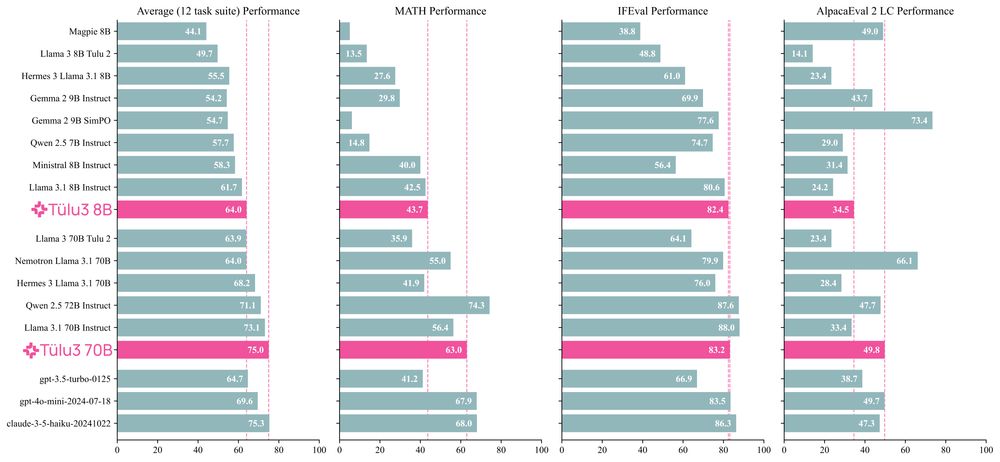

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵

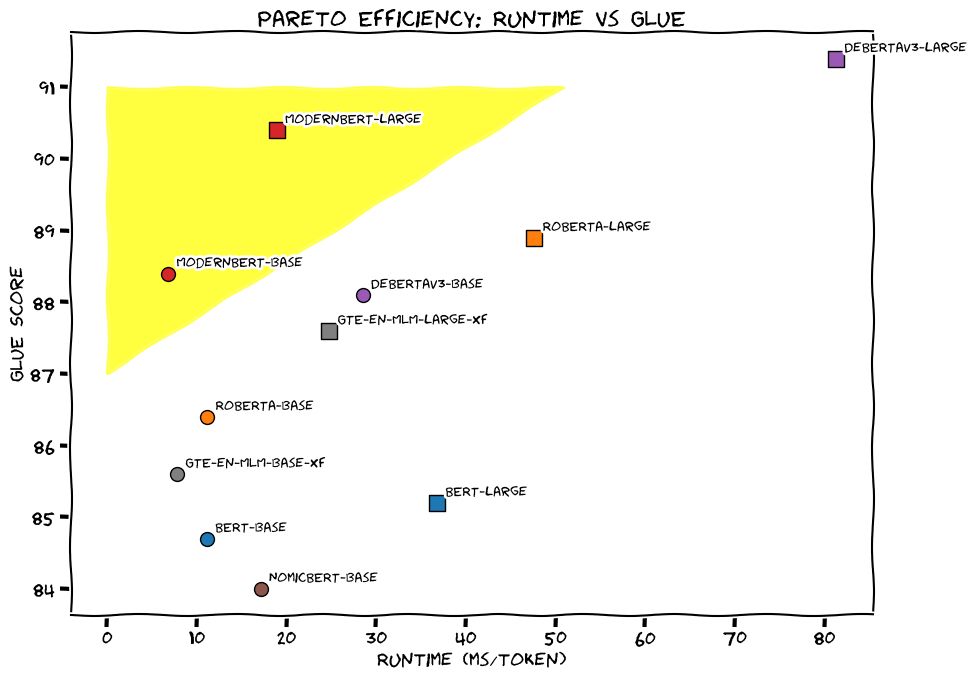

Introducing ModernBERT: 16x larger sequence length, better downstream performance (classification, retrieval), and the fastest & most memory-efficient encoder on the market.

🧵

@shaynelongpre.bsky.social @sarahooker.bsky.social @smw.bsky.social @giadapistilli.com www.technologyreview.com/2024/12/18/1...

neurips.cc/virtual/2024/tutorial/99528

Are you an AI researcher interested in comparing models/methods? Then your conclusions rely on well-designed experiments. We'll cover best practices + case studies. 👇

aiforhumanists.com/guides/models/

today @akshitab.bsky.social @natolambert.bsky.social and I are giving our #neurips2024 tutorial on language model development.

everything from data, training, adaptation. published or not, no secrets 🫡

tues, 12/10, 9:30am PT ☕️

neurips.cc/virtual/2024...

🌐 Check our website: grassroots.science.

#NLProc #GrassrootsScience

🤗 : huggingface.co/datasets/UD-...

📝 : Paper soon!

youtu.be/2AthqCX3h8U

Find all artifacts here: huggingface.co/collections/...

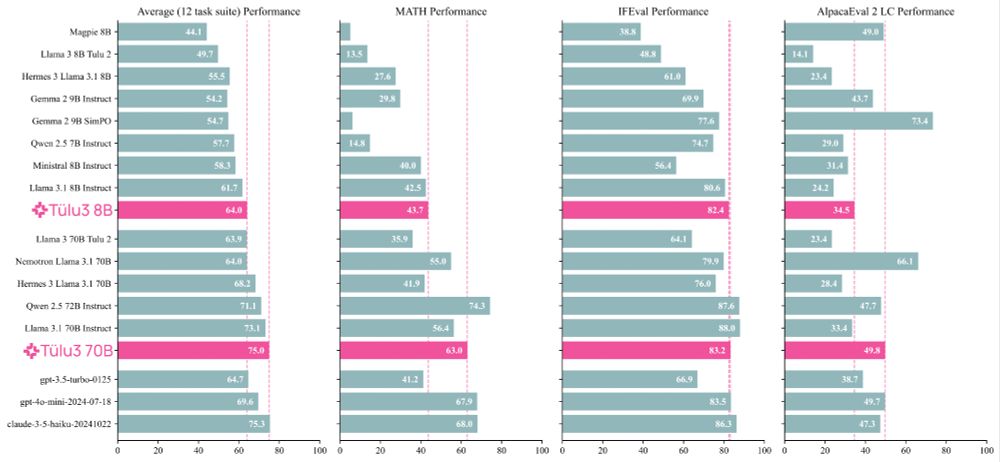

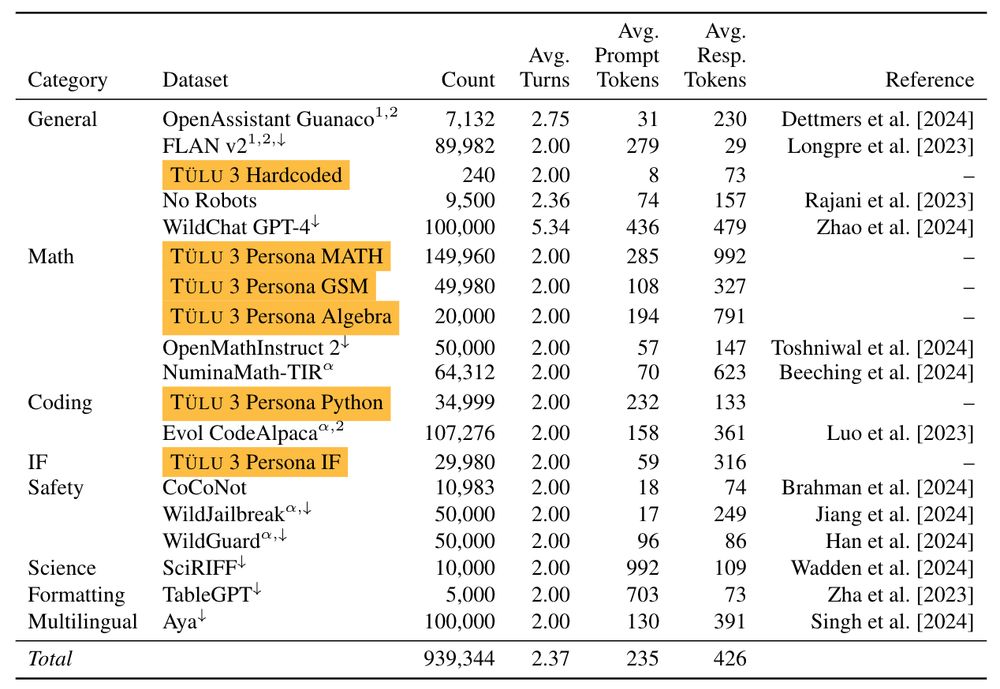

I worked on scaling our synthetic preference data (around 300k preference pairs for 70B), which led to performance gains when used for DPO training.

We invented new methods for fine-tuning language models with RL and built upon best practices to scale synthetic instruction and preference data.

Demo, GitHub, paper, and models 👇

Thread.
