You face a choice: a well-calibrated base model or a capable but unreliable instruct model.

What if you didn't have to choose? What if you could navigate the trade-off?

(1/8)

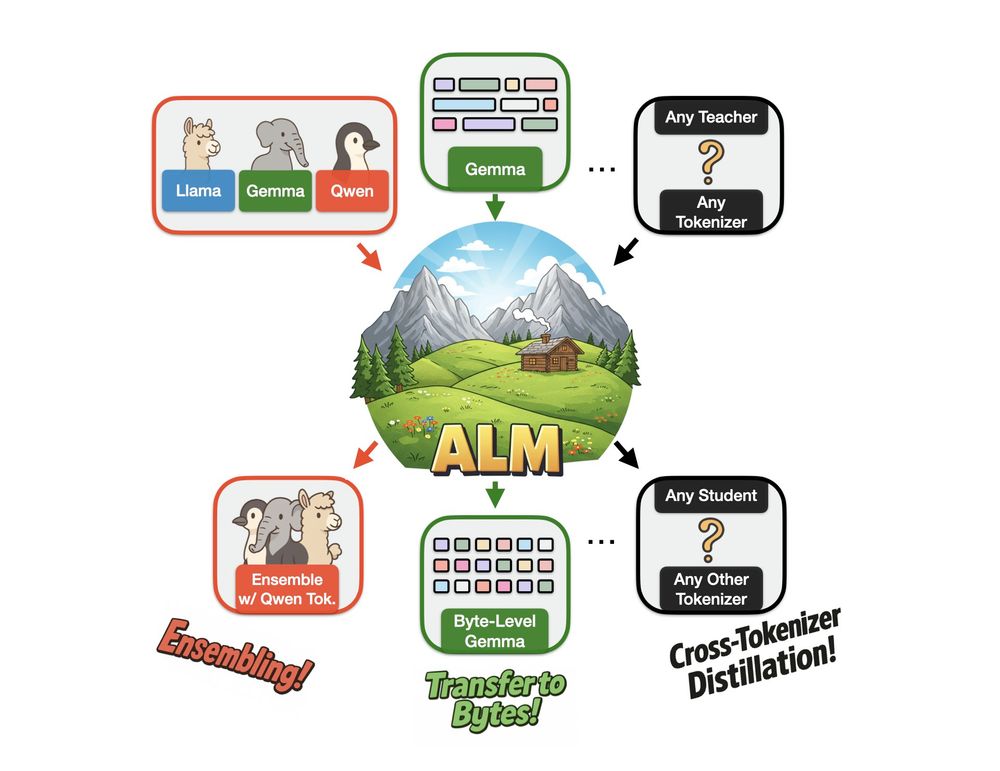

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level, and a bunch more 🧵

Two amazing papers from my students at #NeurIPS today:

⛓️💥 Switch the vocabulary and embeddings of your LLM tokenizer zero-shot on the fly (@bminixhofer.bsky.social)

neurips.cc/virtual/2024...

🌊 Align your LLM gradient-free with spectral editing of activations (Yifu Qiu)

neurips.cc/virtual/2024...
