bendlaufer.github.io

You can read the latest version of the paper here: www.rafaelmbatista.com/jmp/

This is joint work with the wonderful @jamesross0.bsky.social

Ben is finishing up his PhD at Cornell (advised by Jon Kleinberg) and is currently on the job market

AI evolution looks kind of like biology, but with some strange twists. 🧬🤖

via @tedunderwood.me

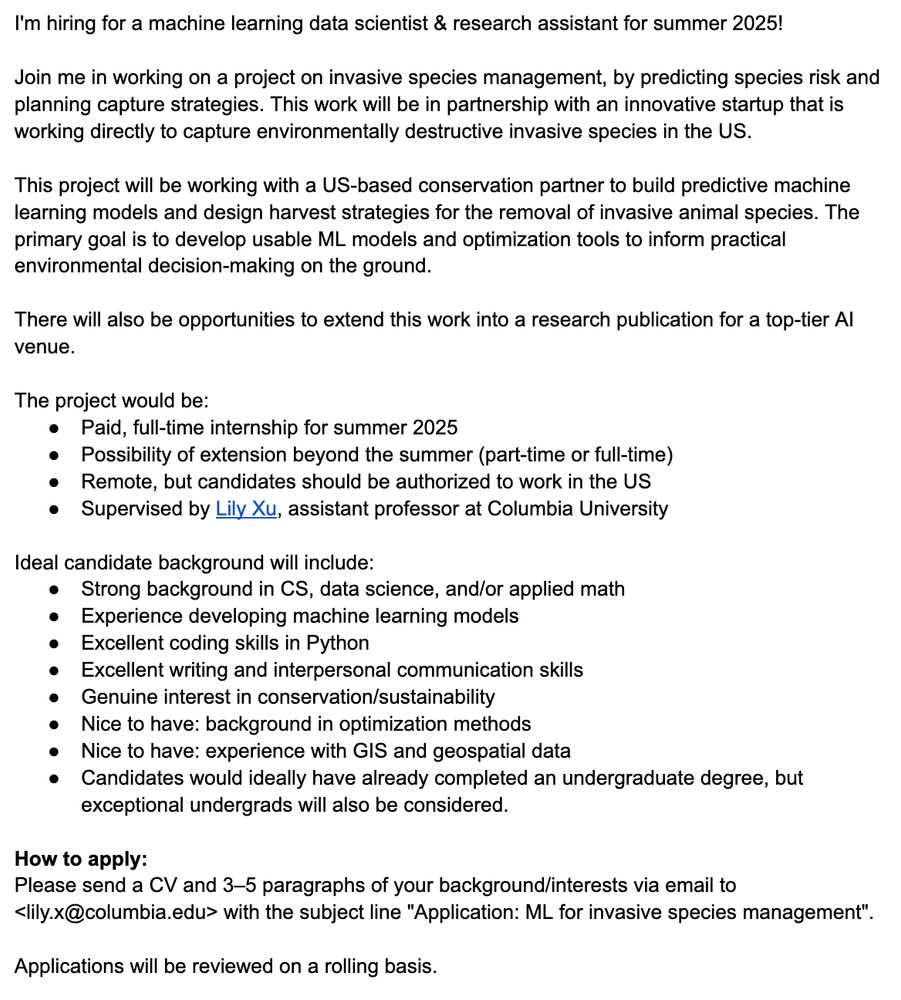

Join me on a project on invasive species management with an innovative startup doing on-the-ground removal of environmentally destructive invasive animals.

Paid, full-time w/ possibility to extend.

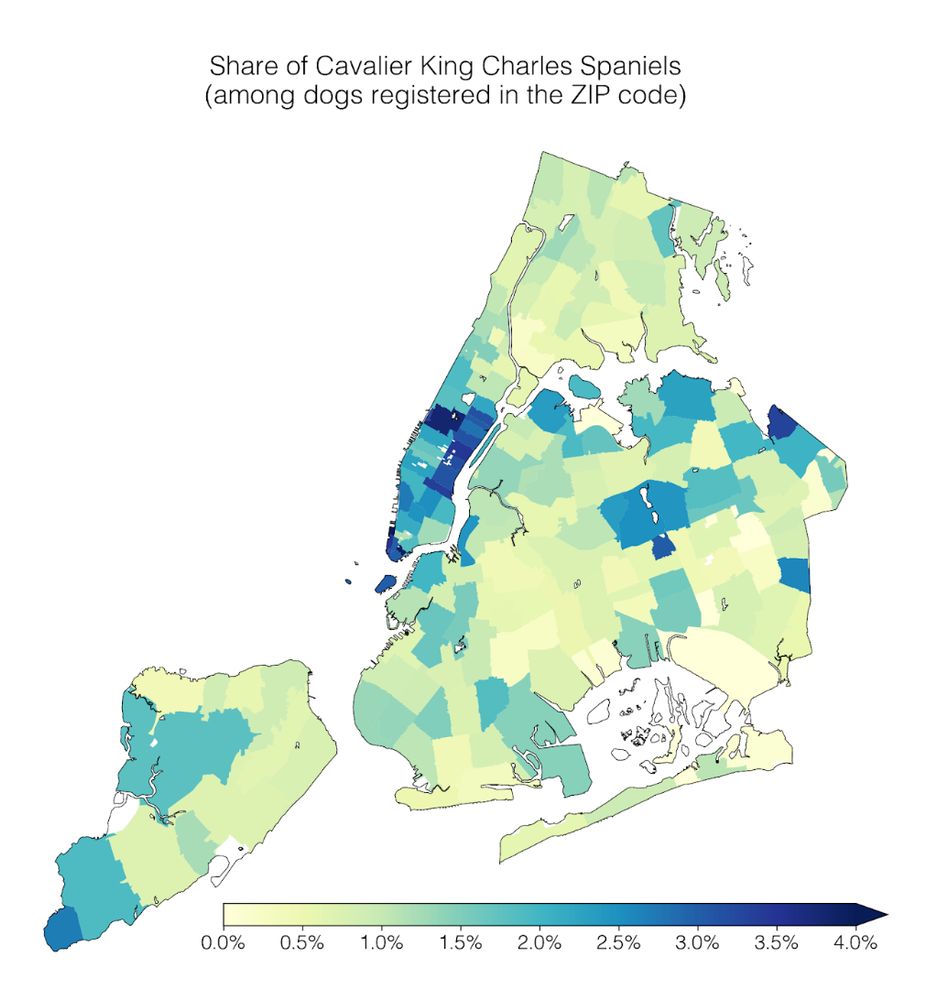

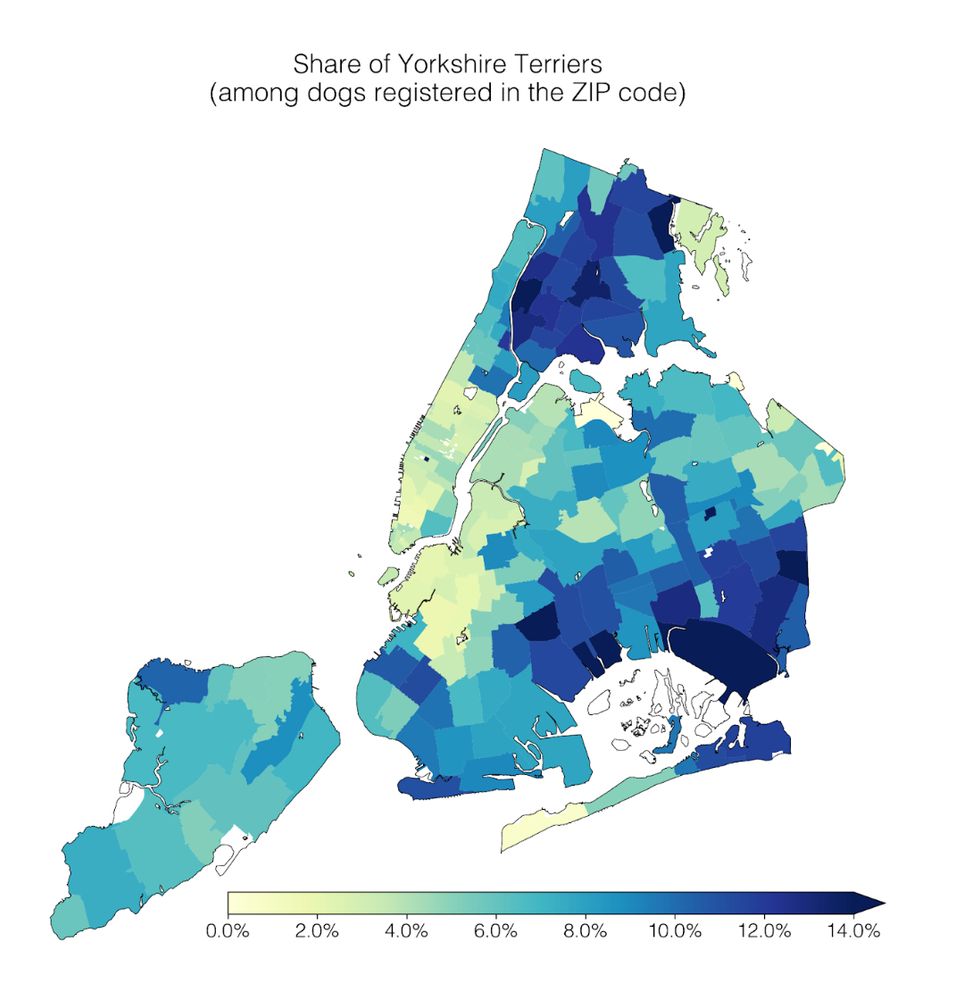

1) Geospatial trends: Cavalier King Charles Spaniels are common in Manhattan; the opposite is true for Yorkshire Terriers.

“Regulation along the AI Development Pipeline for Fairness, Safety and Related Goals”

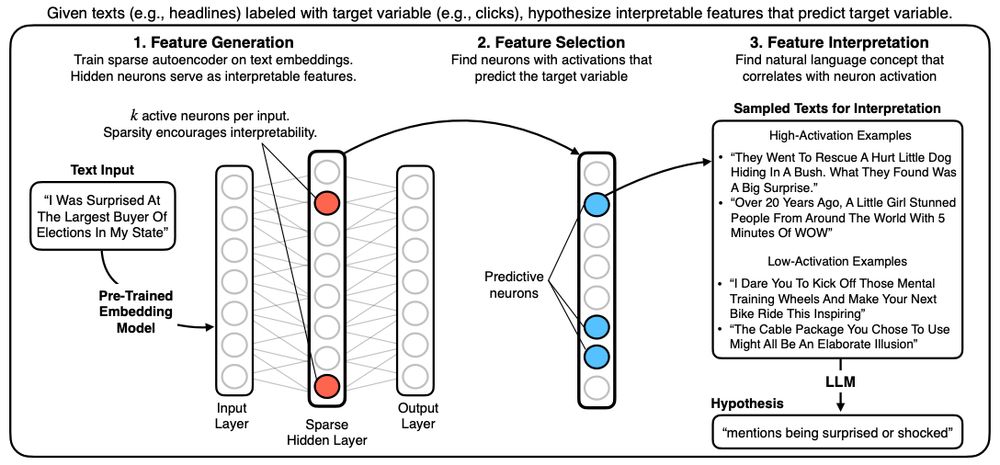

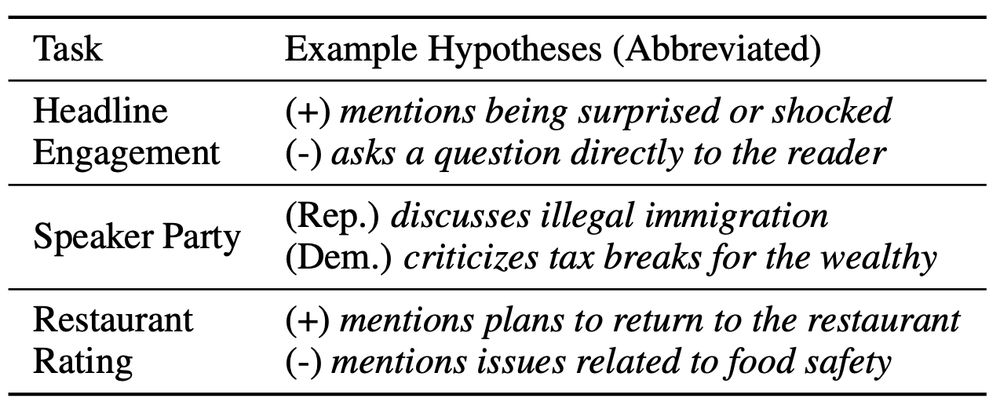

HypotheSAEs generates interpretable features of text data that predict a target variable: What features predict clicks from headlines / party from congressional speech / rating from Yelp review?

arxiv.org/abs/2502.04382

In collaboration with @s010n.bsky.social and Manish Raghavan, we explore strategies and fundamental limits in searching for less discriminatory algorithms.

citp.princeton.edu/programs/cit...

@smw.bsky.social, @davidthewid.bsky.social & I correct the record👇

nature.com/articles/s41...

the Georgetown Law Journal has published "Less Discriminatory Algorithms." it's been very fun to work on this w/ Emily Black, Pauline Kim, Solon Barocas, and Ming Hsu.

i hope you give it a read — the article is just the beginning of this line of work.

www.law.georgetown.edu/georgetown-l...

waking my account up to share a recent blog post on the subject: rajivmovva.com/2024/11/08/g...

Thanks to everybody who has supported me along the way!

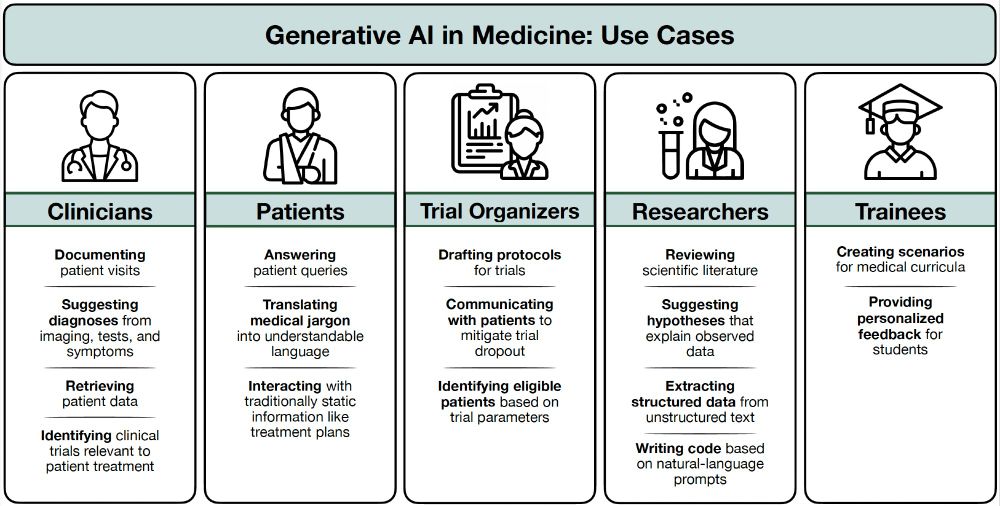

We also discuss generative AI and, broadly, the problems posed by untrustworthy algorithmic systems.

“…algorithmic amplification is problematic because...it chokes out trustworthy processes that we have relied on for guiding valued societal practices and for selecting, elevating, and amplifying content” via @laufer.bsky.social, Helen Nissenbaum

knightcolumbia.org/content/algo...

The talk is on genAI/ML technologies billed as "general-purpose". I'll discuss: which purposes, why and how? It's ongoing work with Hoda Heidari and Jon Kleinberg.

HMU if you're around to meet, etc!
