Lab: https://mintresearch.org

Self: https://sethlazar.org

Newsletter: https://philosophyofcomputing.substack.com

discuss how AI agents might affect the realization of democratic values. knightcolumbia.org/content/ai-a...

AI agents could help or hurt. And they won't protect democratic values on their own.

philosophyofcomputing.substack.com/p/normative-...

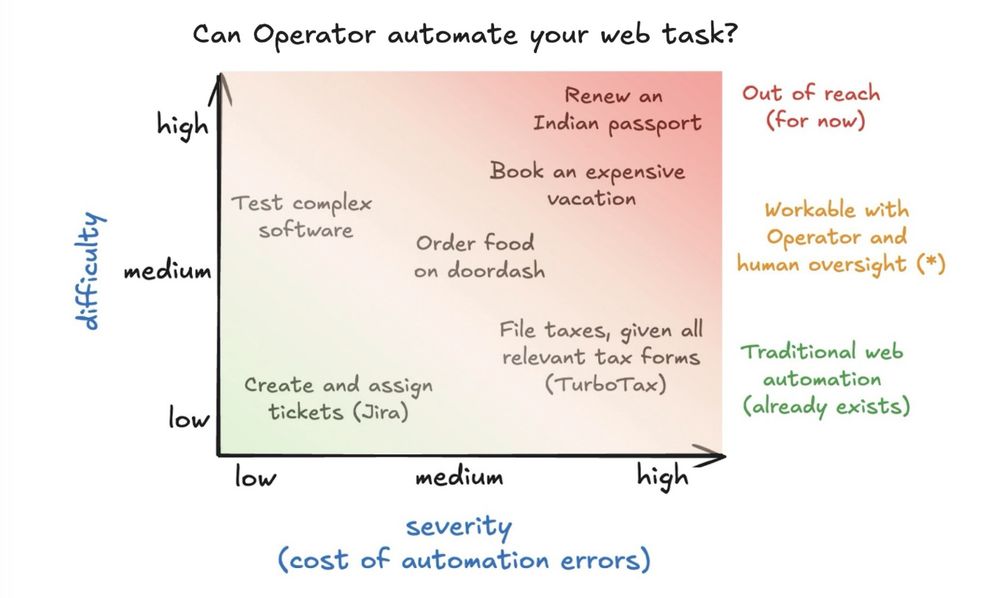

Some insights on what worked, what broke, and why this matters for the future of agents 🧵

Looks at what 'agent' means, how LM agents work, what kinds of impacts we should expect, and what norms (and regulations) should govern them.

Trying it out is a lot of fun: simonwillison.net/2024/Dec/24/...

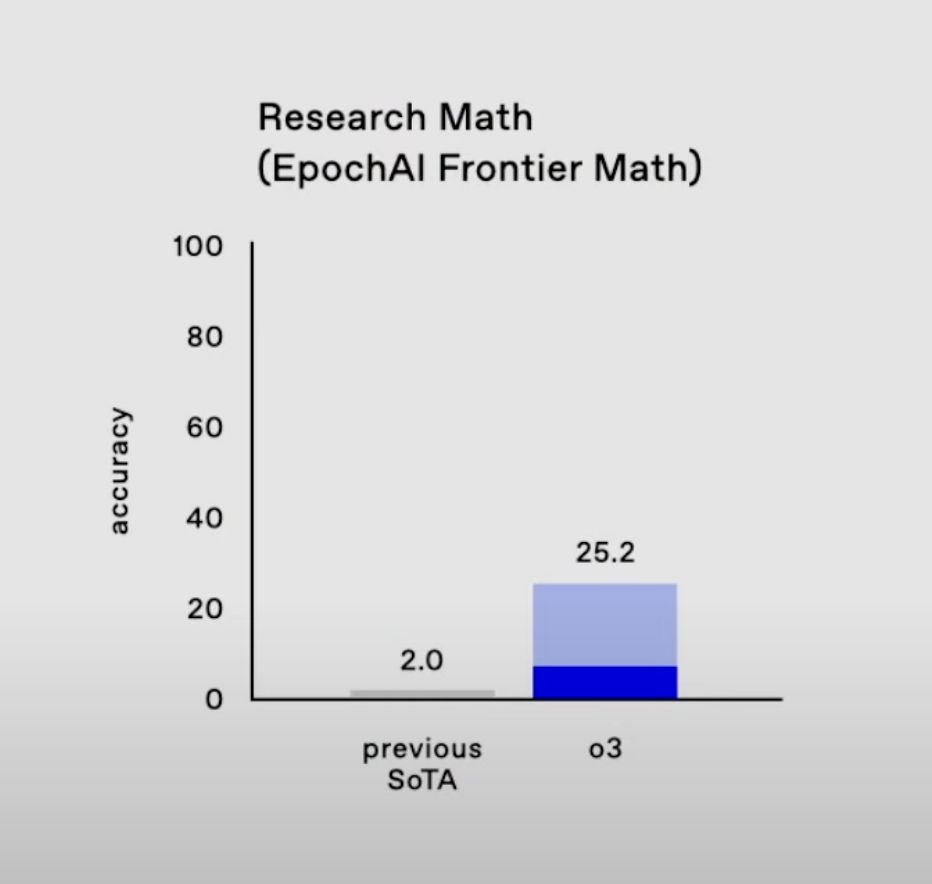

RIP to people who say any of "progress is done," "scale is done," or "LLMs can't reason"

2024 was awesome. I love my job.

A step change as influential as the release of GPT-4. Reasoning language models are the current and next big thing.

I explain:

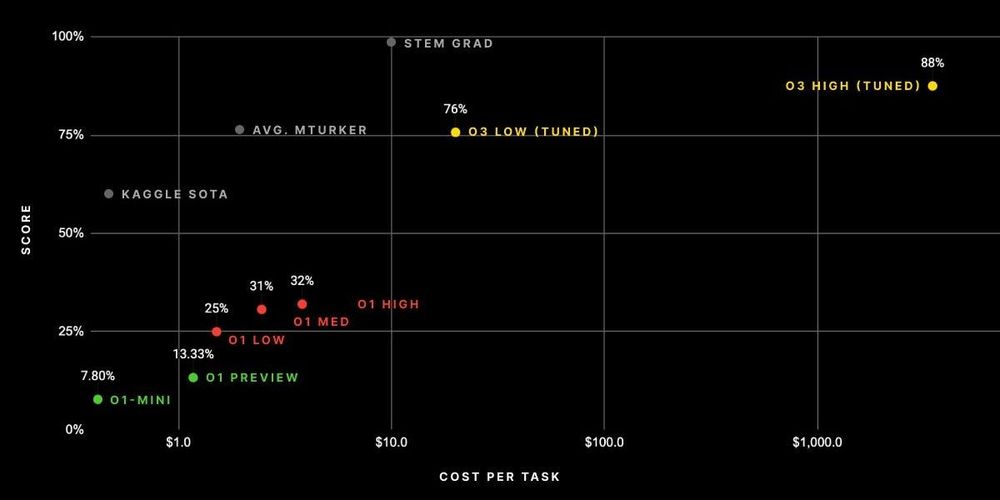

* The ARC prize

* o3 model size / cost

* Dispelling training myths

* Extreme benchmark progress
