Website: cs.princeton.edu/~sayashk

Book/Substack: aisnakeoil.com

Join @jbakcoleman.bsky.social, @lukethorburn.com, and myself in San Diego on Aug 4th for the Collective Intelligence x Tech Policy workshop at @acmci.bsky.social!

#CITP #AI #science #AcademiaSky

In a comment for @nature.com, @randomwalker.bsky.social and @sayash.bsky.social warn against an overreliance on AI-driven modeling in science: bit.ly/4icM0hp

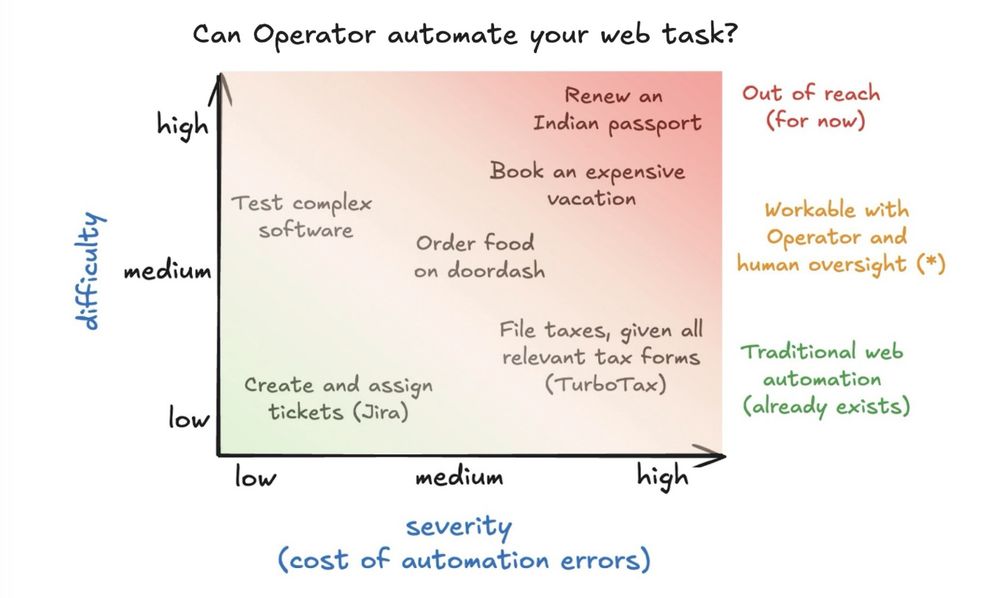

Some insights on what worked, what broke, and why this matters for the future of agents 🧵

www.aisnakeoil.com/p/is-ai-prog...

And if you're not subscribed to @randomwalker.bsky.social and @sayash.bsky.social's great newsletter, what are you waiting for?

The whole first chapter is available online:

press.princeton.edu/books/hardco...

We hope you find it useful.

We analyzed every instance of political AI use this year collected by WIRED. New essay w/@random_walker: 🧵

Always worth reading them.

substack.com/app-link/pos...

Our recent cross-institutional work asks: Does the available evidence match the current level of attention?

📜 arxiv.org/abs/2412.01946

📖 About the book:

Come through to discuss the future of AI, why AI isn't an existential risk, how we can build AI in/for the public, and what goes into writing a book.

Looking forward to seeing some of you!

www.aisnakeoil.com
