Here is a summary. (1/n)

T152 A Model of Speech Recognition Reproduces Signatures of Human Speech Perception and Reveals Mechanisms of Contextual Integration, by Gasser Elbanna

T171 Hearing-Impaired Deep Neural Networks Predict Real-World Hearing Difficulties, by Mark Saddler

talk at 3:15pm: Optimized Models of Uncertainty Explain Human Confidence in Auditory Perception, by Lakshmi Govindarajan

poster 28: In-Silico fMRI Experiments Enable Comparisons of Speech Models to Human Auditory Cortex, by Gasser Elbanna

SA53 A Deep Learning Framework for Understanding Cochlear Implants, by Annesya Banerjee

SA189 Robustness to Noise Reveals Cross-Culturally Consistent Properties of Pitch Perception for Harmonic and Inharmonic Sounds, by Malinda McPherson

The 10th of these, would you believe?

This year we have foundation models, breakthroughs in using light to understand the brain, a gene therapy, and more

Enjoy!

medium.com/the-spike/20...

...presumably because of our strong prior expectation that faces are convex.

This is a very nice example of the Hollow-Face illusion promoted by Richard Gregory:

www.richardgregory.org/experiments/

I'm presenting work co-led by Ivy Brundege and me, with @joshhmcdermott.bsky.social, showing that in silico fMRI experiments of speech models reveal notable discrepancies with human auditory cortex.

Here we uncover a simple cortical code for loudness and leverage it to reverse sound hypersensitivity disorders in mice.

#neuroskyence

We study how our brains perceive and represent the physical world around us using behavioral, computational, and neuroimaging methods.

paulunlab.psych.wisc.edu

#VisionScience #NeuroSkyence

1. Cross-culturally shared sensitivity to harmonic structure underlies some aspects of pitch perception - Malinda McPherson-McNato

- if you have a spare buck, give it to Wikipedia, then repost this

- if you don't have a spare buck, just repost

your action is mandatory for the world's best source of information to survive

pnas.org/doi/10.1073/...

@antoinecomite.bsky.social

I'm also recruiting grad students to start next September - come hang out with us! Details about our lab here: www.deckerlab.com

Reposts are very welcome! 🙌 Please help spread the word!

#AI #CognitiveScience #AcademicJobs #BrownUniversity

@martinhebart.bsky.social @gallantlab.org

diedrichsenlab.org/BrainDataSci...

Work by the Sound Understanding folks

@GoogleDeepMind

arxiv.org/abs/2509.05256

Work done with @joshhmcdermott.bsky.social.

#NeuroAI2025

🧵1/4

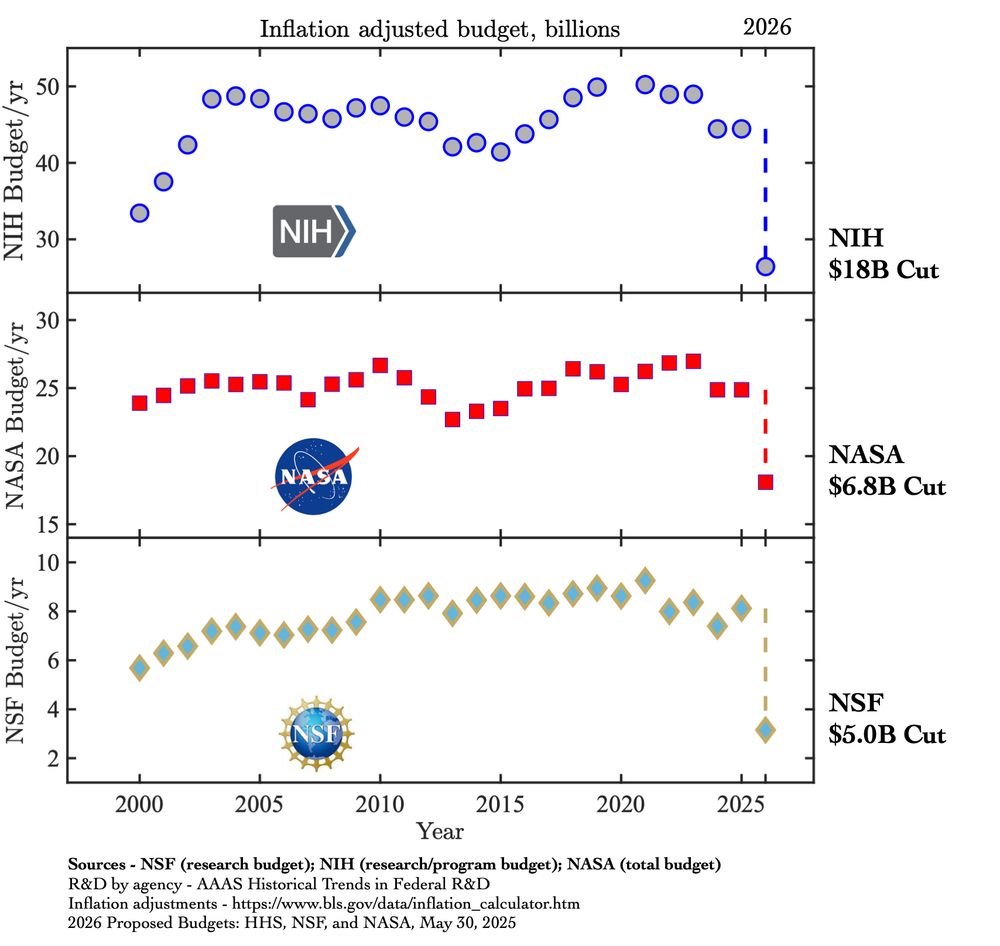

Proposed cuts to #NSF, #NIH, and #NASA will set the US R&D landscape back 25 yrs+, cause economic and job loss now, and undermine innovations to come.

But, this is the WH's *proposed* budget.

Speak up now before it is too late.

(inflation adjusted $-s below)

openreview.net/forum?id=ugX...

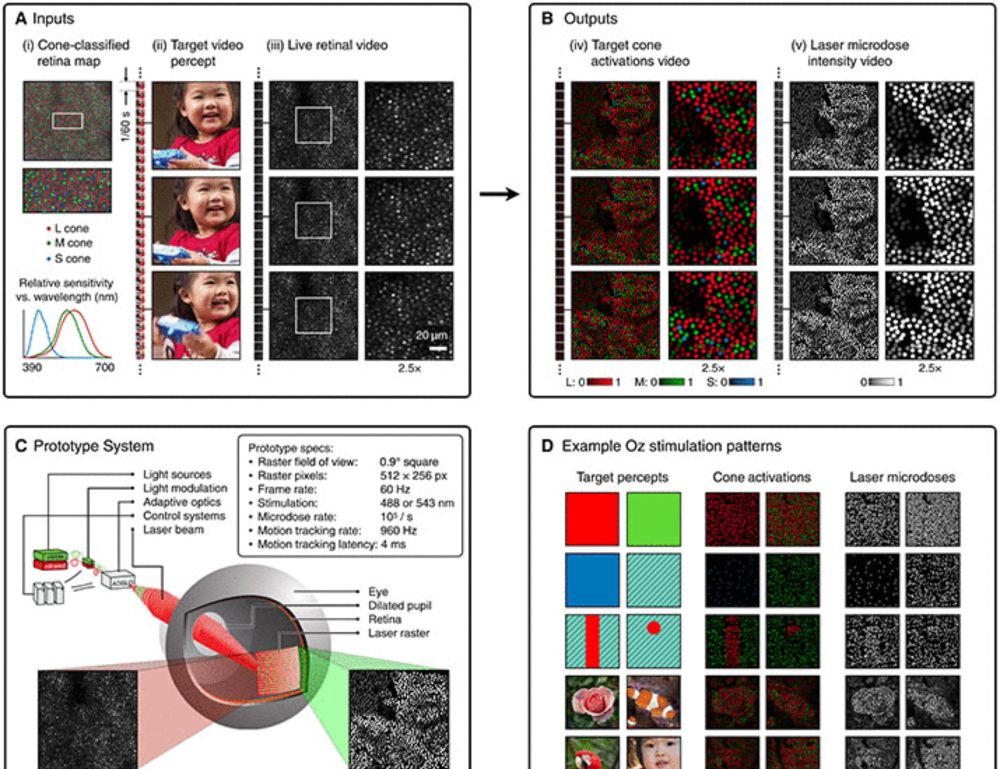

Coming (not too) soon to a theater near you!

www.science.org/doi/10.1126/...

careers.peopleclick.com/careerscp/cl...

......
