Kanishk Gandhi

@gandhikanishk.bsky.social

PhD Student Stanford w/ Noah Goodman, studying reasoning, discovery, and interaction. Trying to build machines that understand people.

StanfordNLP, Stanford AI Lab

StanfordNLP, Stanford AI Lab

Pinned

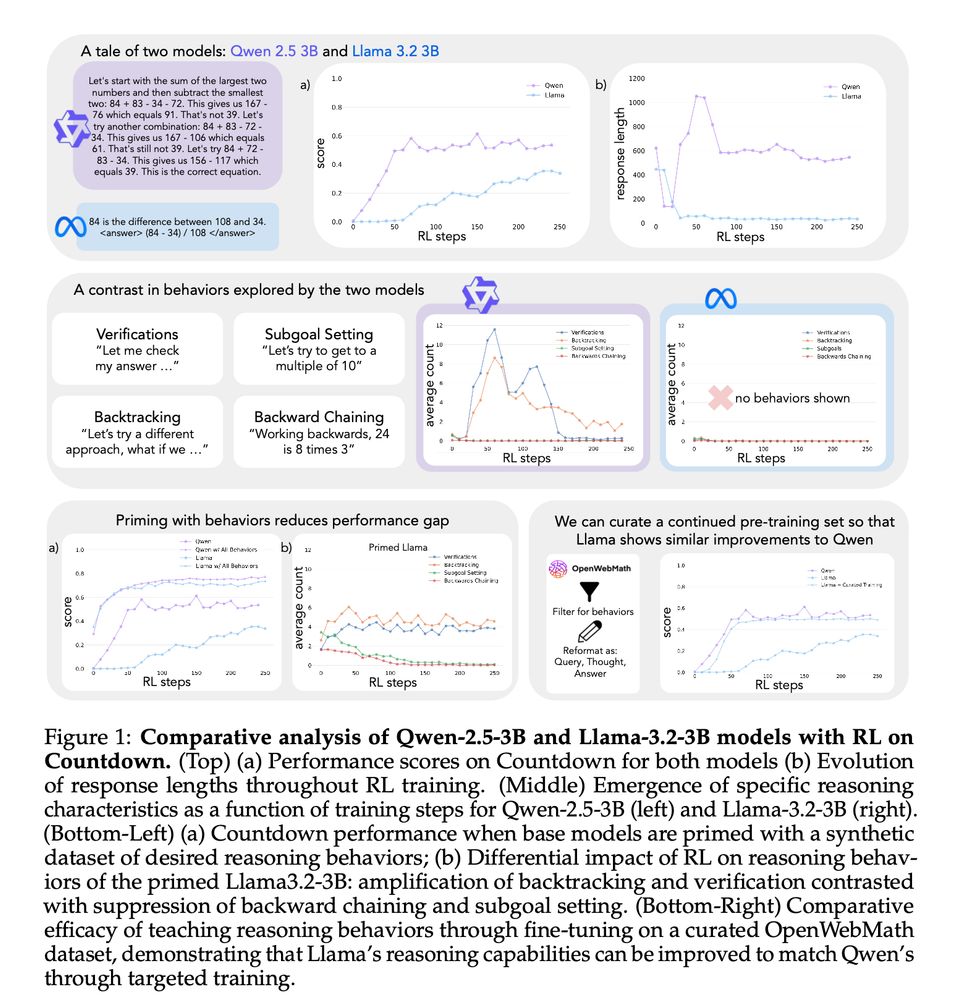

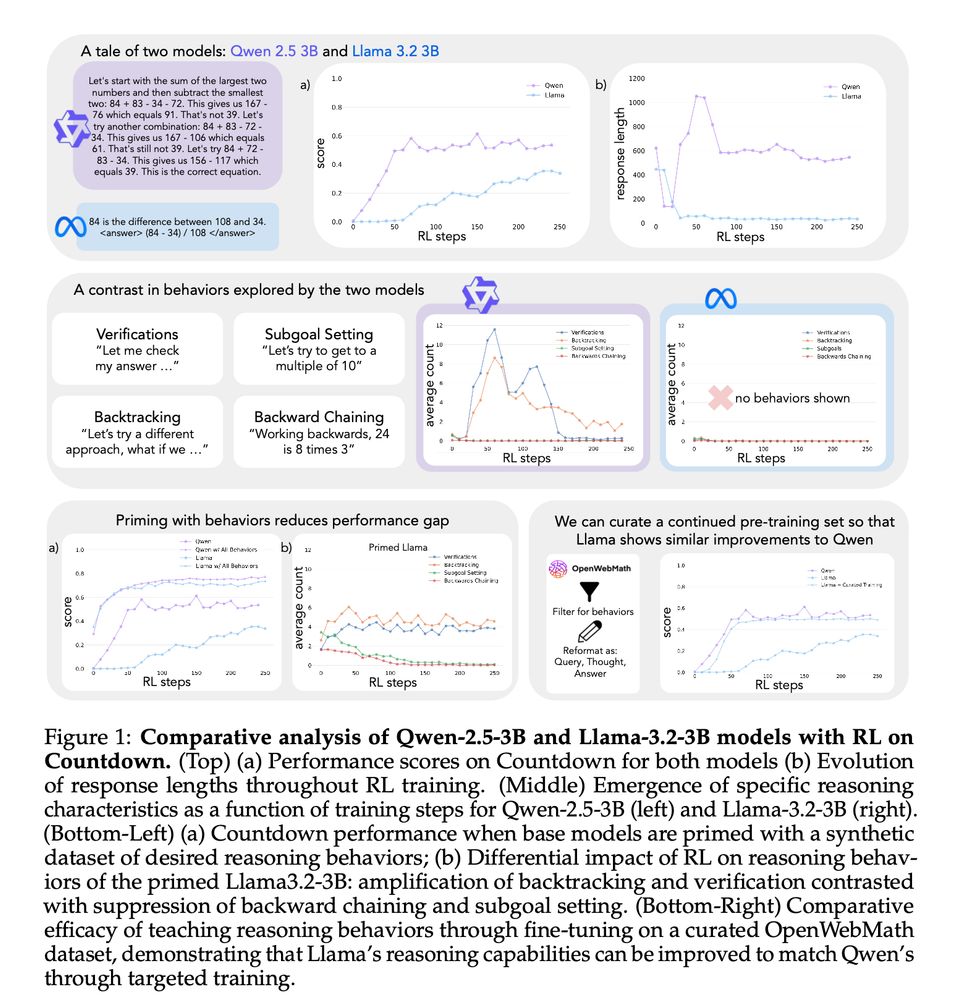

1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

Reposted by Kanishk Gandhi

How can we combine the process-level insight that think-aloud studies give us with the large scale that modern online experiments permit? In our new CogSci paper, we show that speech-to-text models and LLMs enable us to scale up the think-aloud method to large experiments!

Excited to share a new CogSci paper co-led with @benpry.bsky.social!

Once a cornerstone for studying human reasoning, the think-aloud method declined in popularity as manual coding limited its scale. We introduce a method to automate analysis of verbal reports and scale think-aloud studies. (1/8)🧵

Once a cornerstone for studying human reasoning, the think-aloud method declined in popularity as manual coding limited its scale. We introduce a method to automate analysis of verbal reports and scale think-aloud studies. (1/8)🧵

June 25, 2025 at 5:32 AM

How can we combine the process-level insight that think-aloud studies give us with the large scale that modern online experiments permit? In our new CogSci paper, we show that speech-to-text models and LLMs enable us to scale up the think-aloud method to large experiments!

Can we record and study human chains of thought? Check out our new work led by @danielwurgaft.bsky.social and @benpry.bsky.social !!

Excited to share a new CogSci paper co-led with @benpry.bsky.social!

Once a cornerstone for studying human reasoning, the think-aloud method declined in popularity as manual coding limited its scale. We introduce a method to automate analysis of verbal reports and scale think-aloud studies. (1/8)🧵

Once a cornerstone for studying human reasoning, the think-aloud method declined in popularity as manual coding limited its scale. We introduce a method to automate analysis of verbal reports and scale think-aloud studies. (1/8)🧵

June 25, 2025 at 6:11 PM

Can we record and study human chains of thought? Check out our new work led by @danielwurgaft.bsky.social and @benpry.bsky.social !!

Reposted by Kanishk Gandhi

Some absolutely marvellous work from @gandhikanishk.bsky.social et al! Wow!

March 11, 2025 at 3:57 PM

Some absolutely marvellous work from @gandhikanishk.bsky.social et al! Wow!

1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

March 4, 2025 at 6:15 PM

1/13 New Paper!! We try to understand why some LMs self-improve their reasoning while others hit a wall. The key? Cognitive behaviors! Read our paper on how the right cognitive behaviors can make all the difference in a model's ability to improve with RL! 🧵

Reposted by Kanishk Gandhi

emotionally, i’m constantly walking into a glass door

February 19, 2025 at 4:44 AM

emotionally, i’m constantly walking into a glass door

Reposted by Kanishk Gandhi

Can Large Language Models THINK and UNDERSTAND? The answer from cognitive science is, of course, lolwut YES!

The more interesting question is CAN TOASTERS LOVE? Intriguingly, the answer is ALSO YES! And they love YOU

The more interesting question is CAN TOASTERS LOVE? Intriguingly, the answer is ALSO YES! And they love YOU

January 19, 2025 at 12:39 PM

Can Large Language Models THINK and UNDERSTAND? The answer from cognitive science is, of course, lolwut YES!

The more interesting question is CAN TOASTERS LOVE? Intriguingly, the answer is ALSO YES! And they love YOU

The more interesting question is CAN TOASTERS LOVE? Intriguingly, the answer is ALSO YES! And they love YOU

Reposted by Kanishk Gandhi

They present a scientifically optimized recipe of “Pasta alla Cacio e pepe” based on their findings, enabling a consistently flawless execution of this classic dish.

"Phase behavior of Cacio and Pepe sauce"

arxiv.org/abs/2501.00536

"Phase behavior of Cacio and Pepe sauce"

arxiv.org/abs/2501.00536

January 6, 2025 at 11:47 PM

They present a scientifically optimized recipe of “Pasta alla Cacio e pepe” based on their findings, enabling a consistently flawless execution of this classic dish.

"Phase behavior of Cacio and Pepe sauce"

arxiv.org/abs/2501.00536

"Phase behavior of Cacio and Pepe sauce"

arxiv.org/abs/2501.00536

Reposted by Kanishk Gandhi

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting interpreting a much broader spectrum of behaviors as ICL! Quick summary thread: 1/7

The broader spectrum of in-context learning

The ability of language models to learn a task from a few examples in context has generated substantial interest. Here, we provide a perspective that situates this type of supervised few-shot learning...

arxiv.org

December 10, 2024 at 6:17 PM

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting interpreting a much broader spectrum of behaviors as ICL! Quick summary thread: 1/7

I'll be at Neurips this week :) looking forward to catching up with folks! Please reach out if you want to chat!!

December 9, 2024 at 5:26 AM

I'll be at Neurips this week :) looking forward to catching up with folks! Please reach out if you want to chat!!

Reposted by Kanishk Gandhi

Okay the people requested one so here is an attempt at a Computational Cognitive Science starter pack -- with apologies to everyone I've missed! LMK if there's anyone I should add!

go.bsky.app/KDTg6pv

go.bsky.app/KDTg6pv

November 11, 2024 at 5:27 PM

Okay the people requested one so here is an attempt at a Computational Cognitive Science starter pack -- with apologies to everyone I've missed! LMK if there's anyone I should add!

go.bsky.app/KDTg6pv

go.bsky.app/KDTg6pv

Reposted by Kanishk Gandhi

I am not actively looking for people this cycle, but re-sharing in case of relevance to others

I have started to receive emails from prospective lab members (yay!). Some words of advice:

1. mention which position you are interested in

2. state clearly what research topics you’re interested in going forward (beyond just "language" or "LLMs")

(contd)

1. mention which position you are interested in

2. state clearly what research topics you’re interested in going forward (beyond just "language" or "LLMs")

(contd)

November 12, 2024 at 12:25 AM

I am not actively looking for people this cycle, but re-sharing in case of relevance to others

Reposted by Kanishk Gandhi

Told my kids about the liar's paradox today and, I'm not lying, they didn't believe me.

December 17, 2023 at 4:00 PM

Told my kids about the liar's paradox today and, I'm not lying, they didn't believe me.