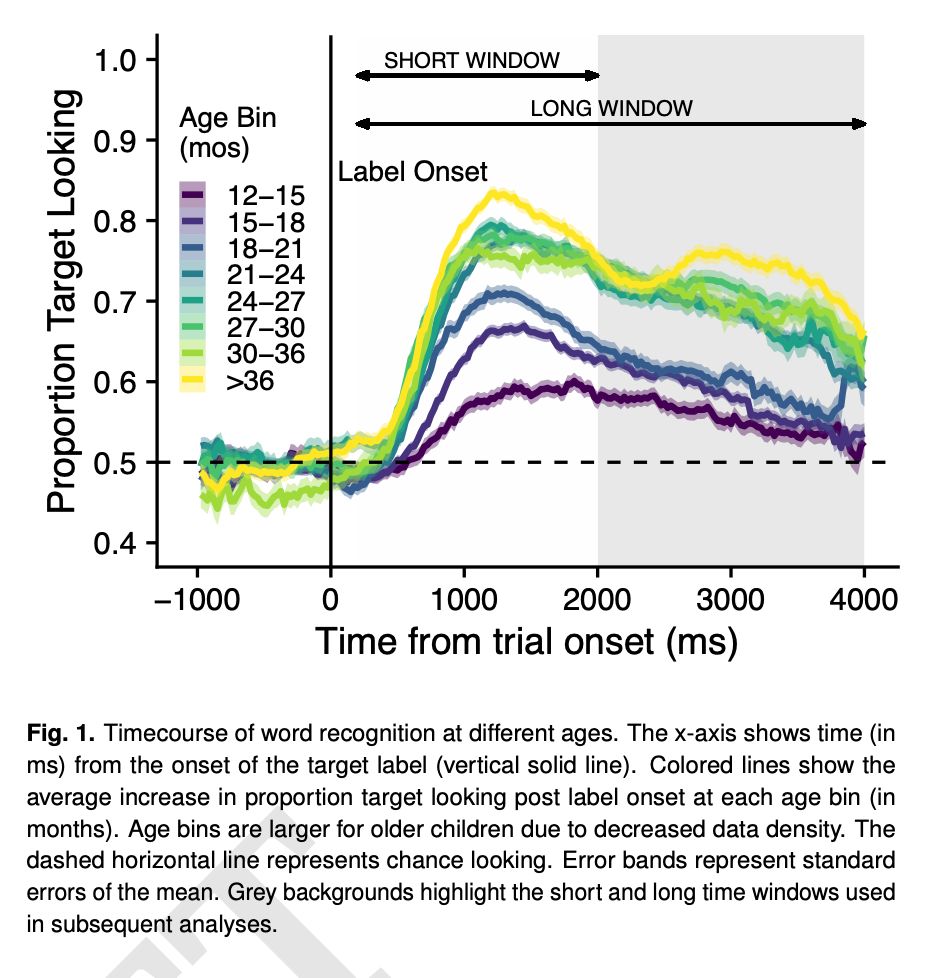

Using data from ~2000 kids ages 1-6, we quantify links between word recognition and early vocabulary growth!

📃 authors.elsevier.com/a/1lo8f2Hx2-...

We look for patterns. But what are the limits of this ability?

In our new paper at CCN 2025 (@cogcompneuro.bsky.social), we explore the computational constraints of human pattern recognition using the classic game of Rock, Paper, Scissors 🗿📄✂️

psycnet.apa.org/doiLanding?d...

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns

Common analyses of neural representations: Encoding models (relating activity to task features) drawing of an arrow from a trace saying [on_____on____] to a neuron and spike train. Comparing models via neural predictivity: comparing two neural networks by their R^2 to mouse brain activity. RSA: assessing brain-brain or model-brain correspondence using representational dissimilarity matrices](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)

paper: escholarship.org/uc/item/16c4...

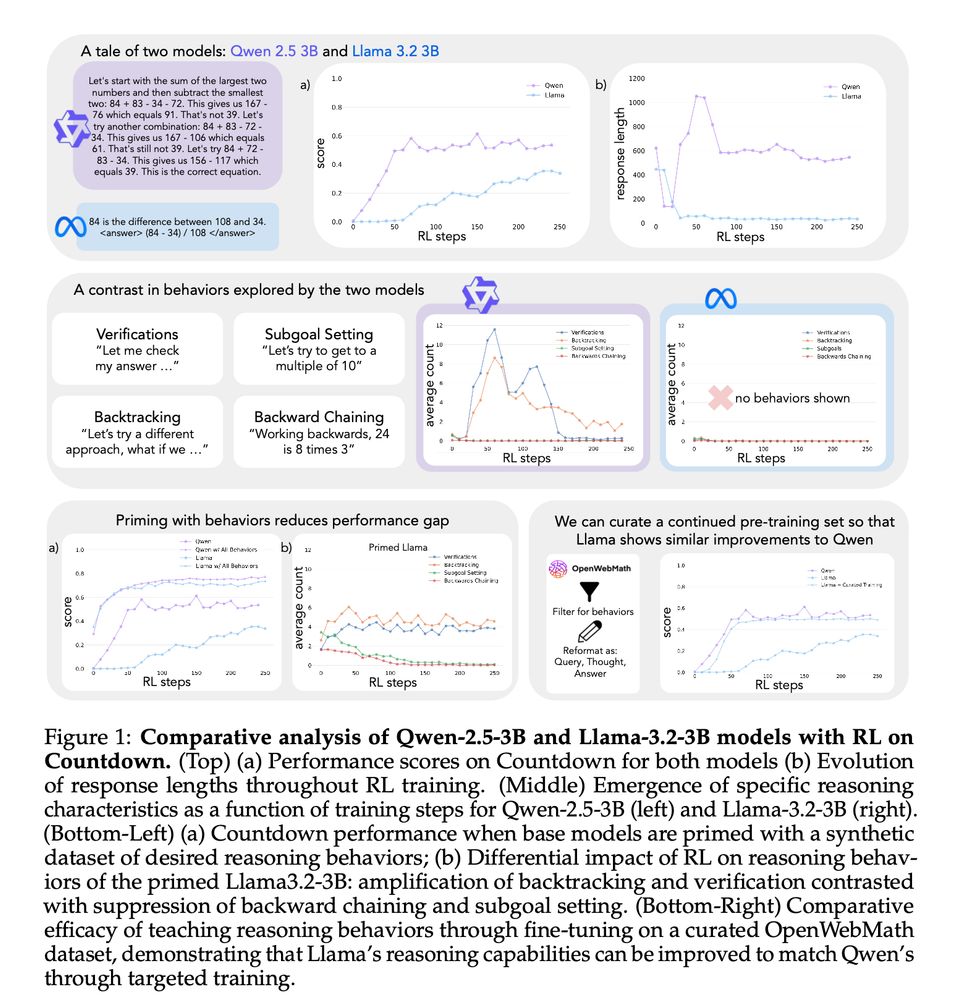

paper: arxiv.org/abs/2505.23931

paper: escholarship.org/uc/item/5td9...

Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵

1/

Once a cornerstone for studying human reasoning, the think-aloud method declined in popularity as manual coding limited its scale. We introduce a method to automate analysis of verbal reports and scale think-aloud studies. (1/8)🧵

We're calling it: 🏺Minds in the Making🏺

🔗 minds-making.github.io

June – July 2024, free & open to the public

(all career stages, all disciplines)

new preprint👇

recognition support language learning across early childhood": osf.io/preprints/ps...

📝 preprint: osf.io/preprints/ps...

💻 code: github.com/cogtoolslab/...

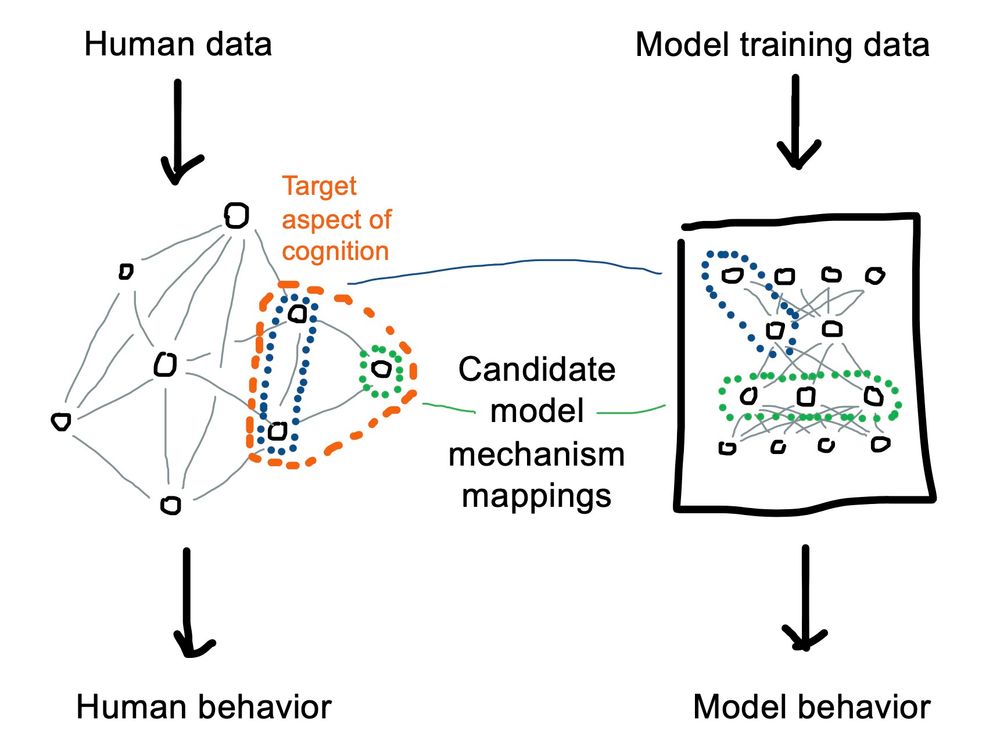

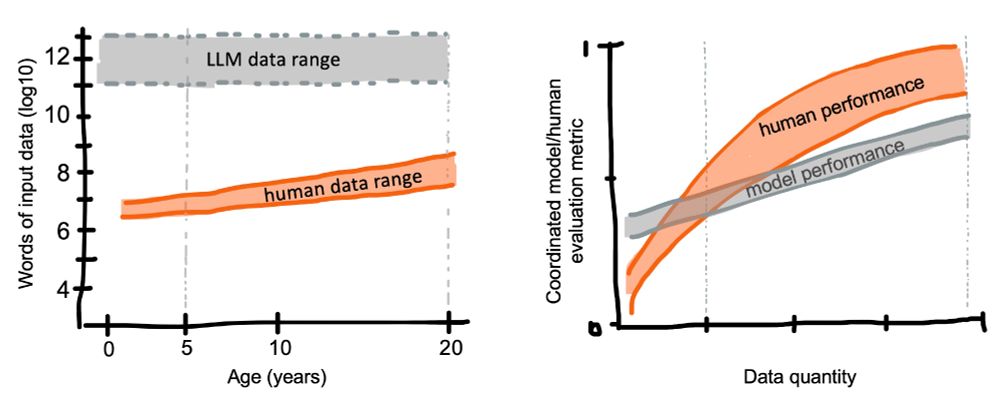

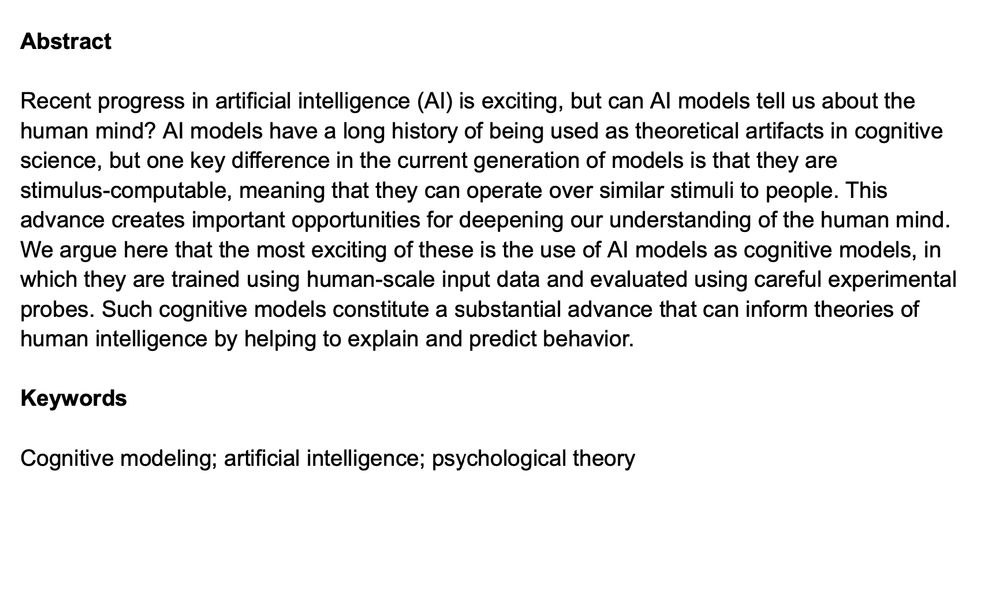

In a new review paper, @noahdgoodman.bsky.social and I discuss how modern AI can be used for cognitive modeling: osf.io/preprints/ps...

In "Causation, Meaning, and Communication" Ari Beller (cicl.stanford.edu/member/ari_b...) develops a computational model of how people use & understand expressions like "caused", "enabled", and "affected".

📃 osf.io/preprints/ps...

📎 github.com/cicl-stanfor...

🧵

osf.io/preprints/ps...

doi.org/10.31234/osf...

osf.io/preprints/ps...