📍 Munich

dl.acm.org/doi/10.1145/...

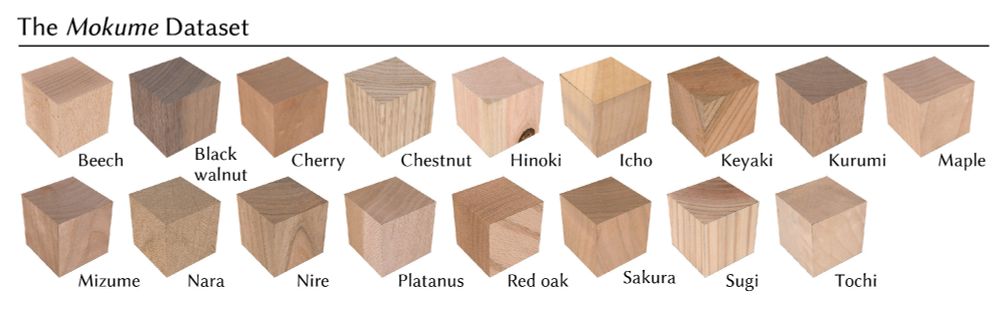

At SIGGRAPH'25 (Thursday!), Maria Larsson will present *Mokume*: a dataset of 190 diverse wood samples and a pipeline that solves this inverse texturing challenge. 🧵👇

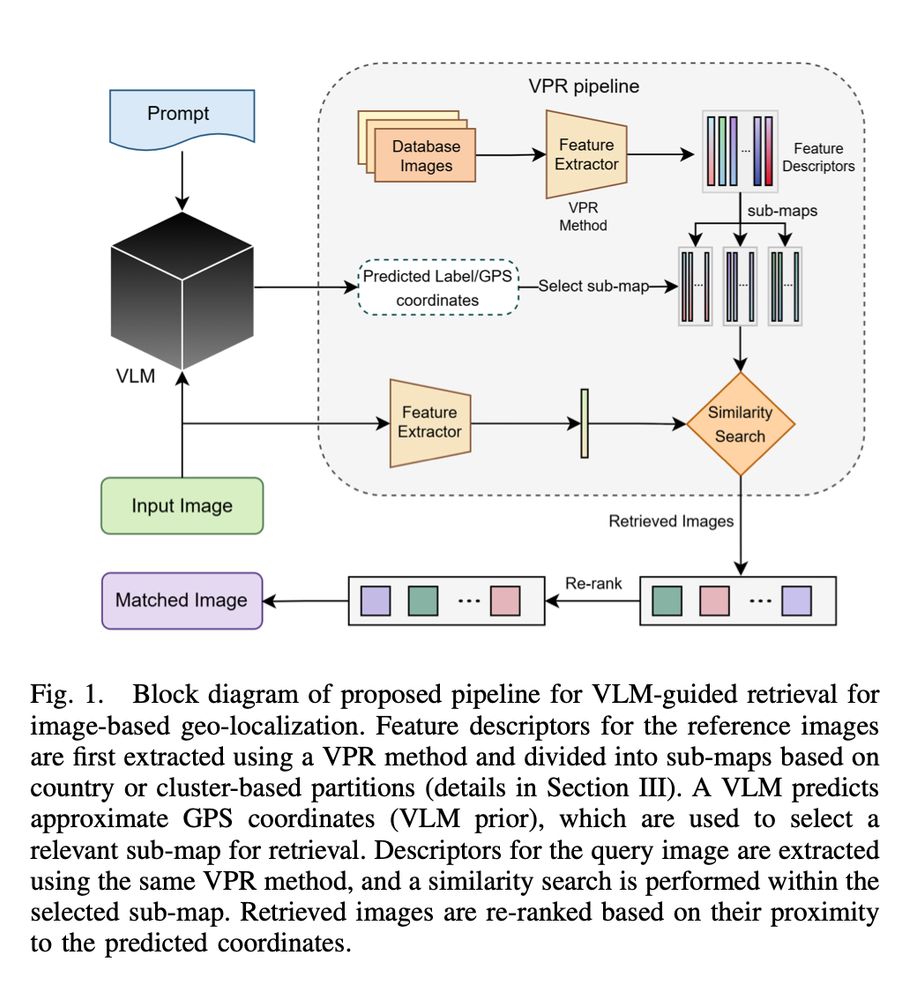

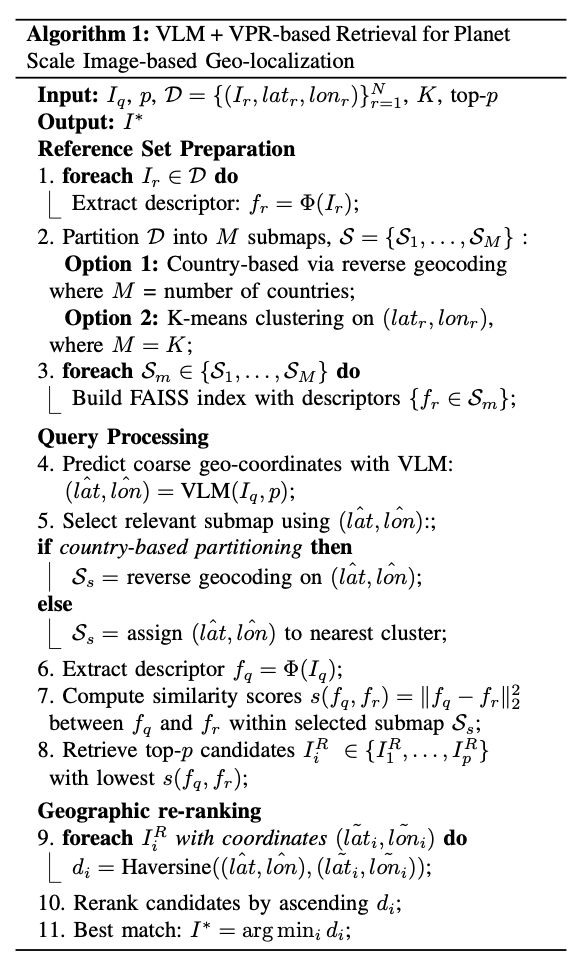

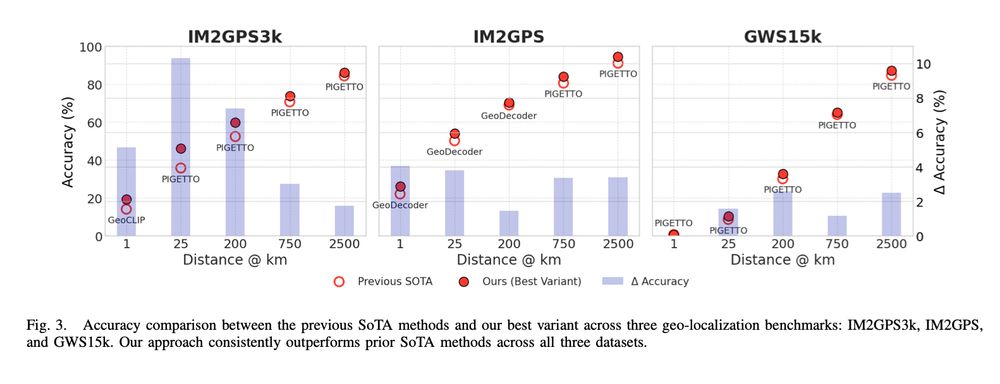

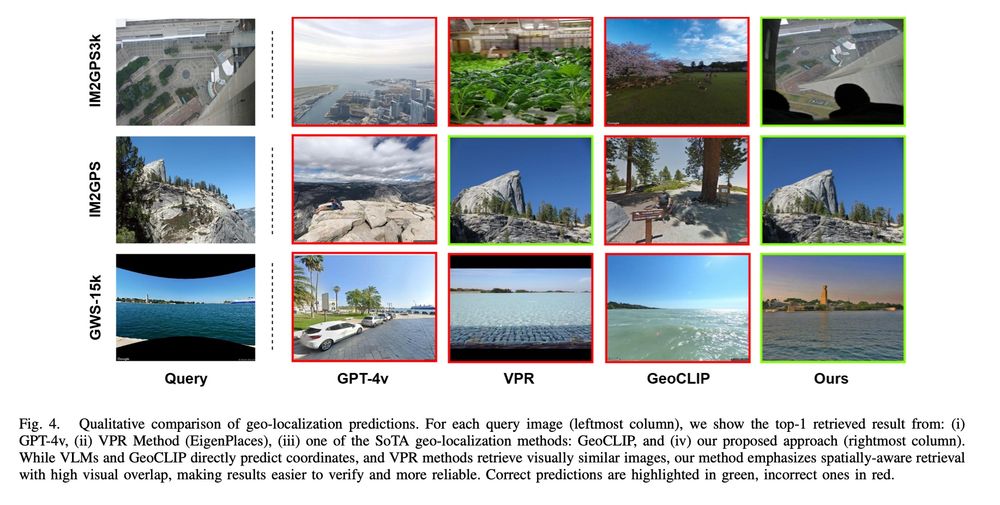

Sania Waheed, Na Min An, Michael Milford, Sarvapali D. Ramchurn, Shoaib Ehsan

tl;dr: in title

arxiv.org/abs/2507.17455

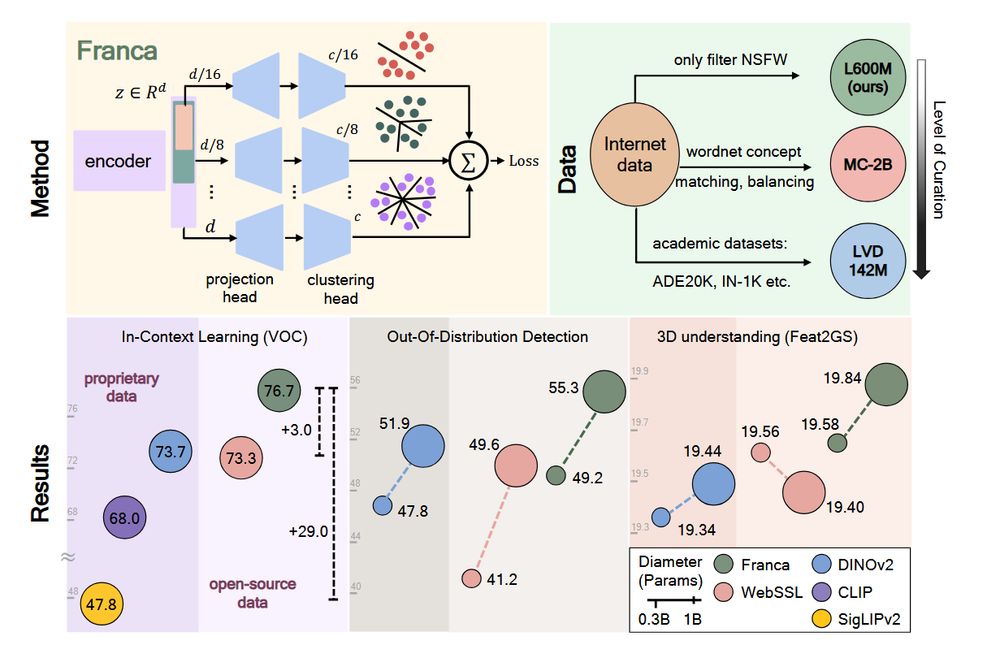

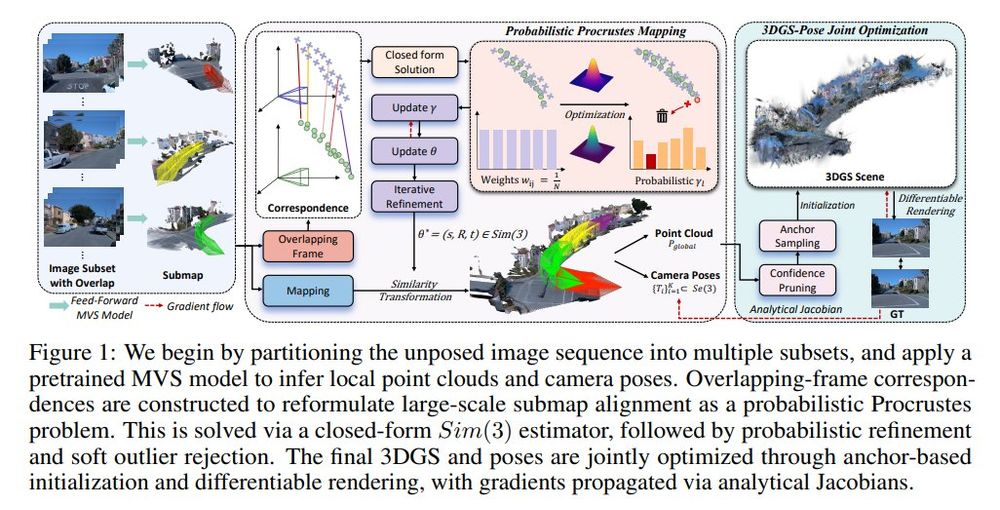

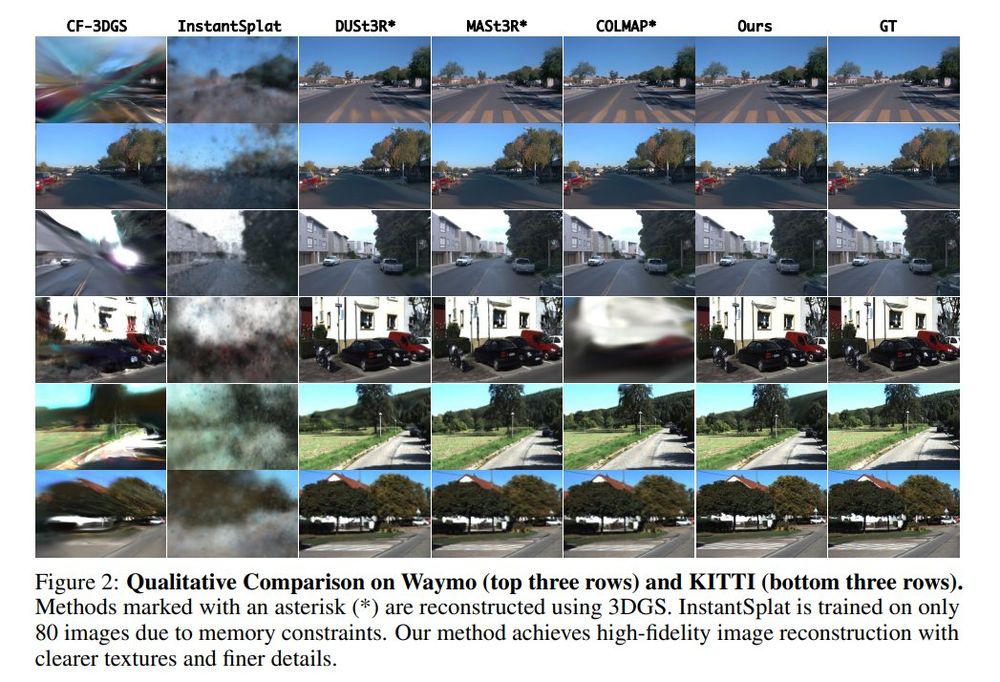

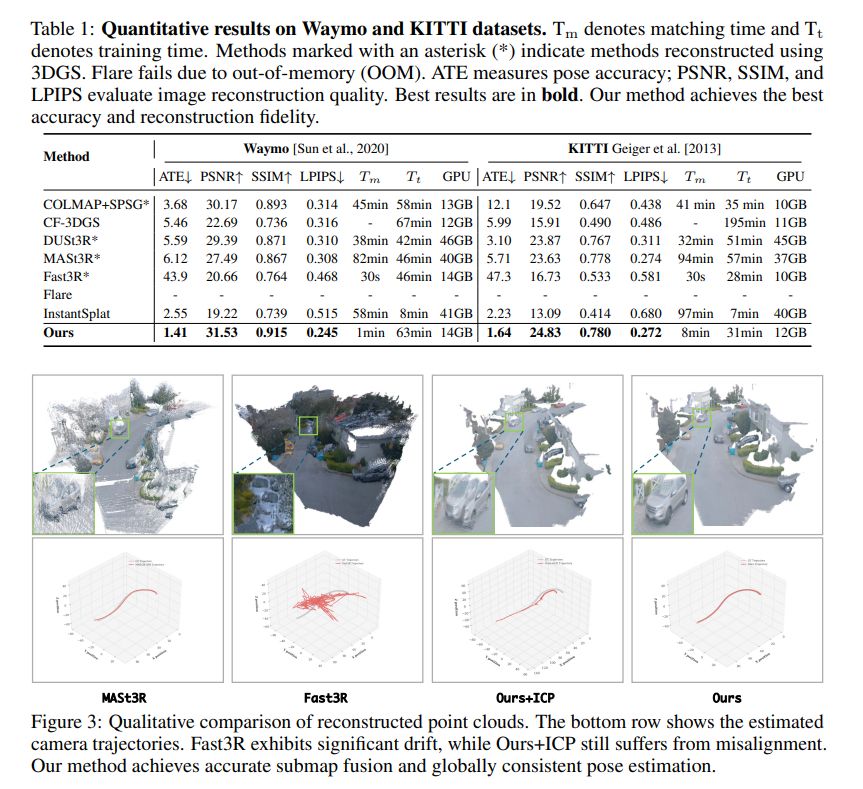

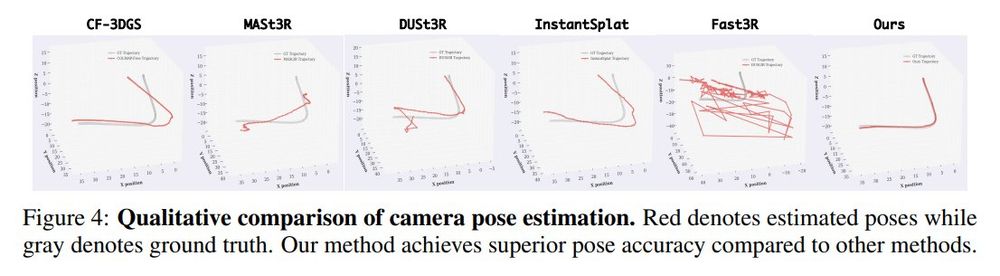

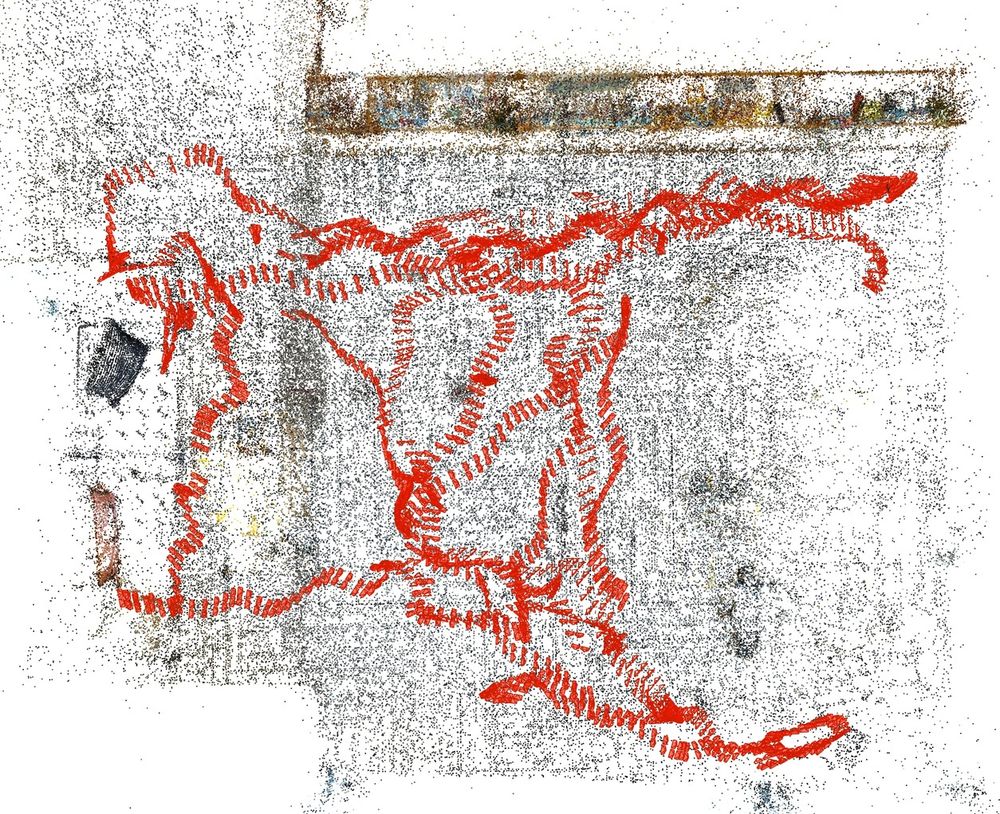

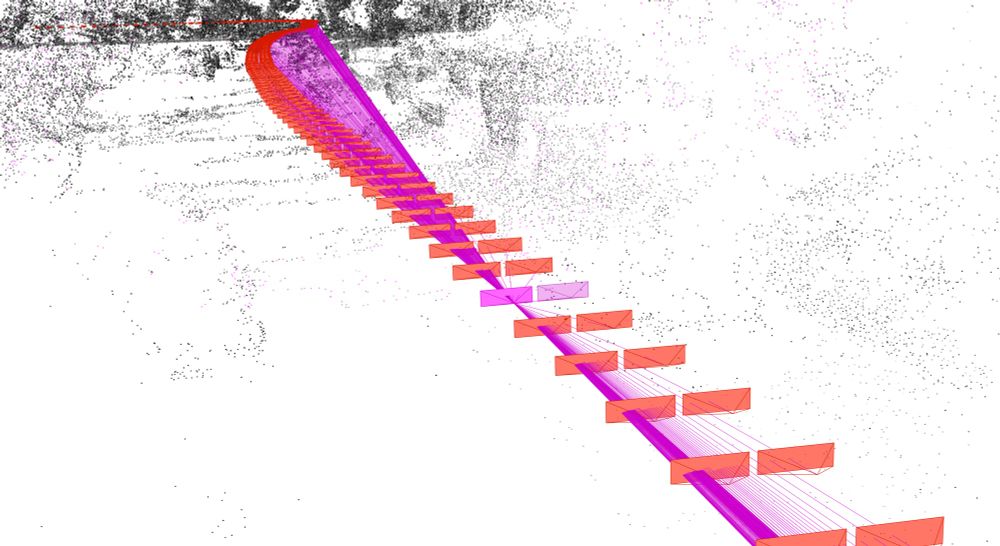

Chong Cheng, Zijian Wang, Sicheng Yu, Yu Hu, Nanjie Yao, Hao Wang

tl;dr: submap alignment->point cloud registration->robust Umeyama algorithm->global point cloud and camera trajectory

arxiv.org/abs/2507.18541
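For readers who haven't met the Umeyama step in that tl;dr: below is a minimal NumPy sketch of the classic (non-robust) Umeyama similarity alignment between two sets of corresponding 3D points. It is not the paper's robust variant and not its code. The function name `umeyama` and the `(N, 3)` array layout are assumptions made for illustration.

```python
import numpy as np


def umeyama(src, dst):
    """Least-squares similarity transform so that dst ≈ s * R @ src + t.

    src, dst: (N, 3) arrays of corresponding points (assumed layout).
    Plain Umeyama, no outlier handling; a robust version would reweight
    or resample the correspondences around this core.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n, dim = src.shape

    # Center both point sets.
    mu_src = src.mean(axis=0)
    mu_dst = dst.mean(axis=0)
    src_c = src - mu_src
    dst_c = dst - mu_dst

    # Cross-covariance between target and source.
    cov = dst_c.T @ src_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so R is a proper rotation (det = +1).
    S = np.eye(dim)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[-1, -1] = -1.0

    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / n
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t
```

In a submap pipeline of the kind the tl;dr describes, `src` and `dst` would presumably be matched points from two overlapping submaps, and the returned `s, R, t` would bring one submap into the other's frame.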

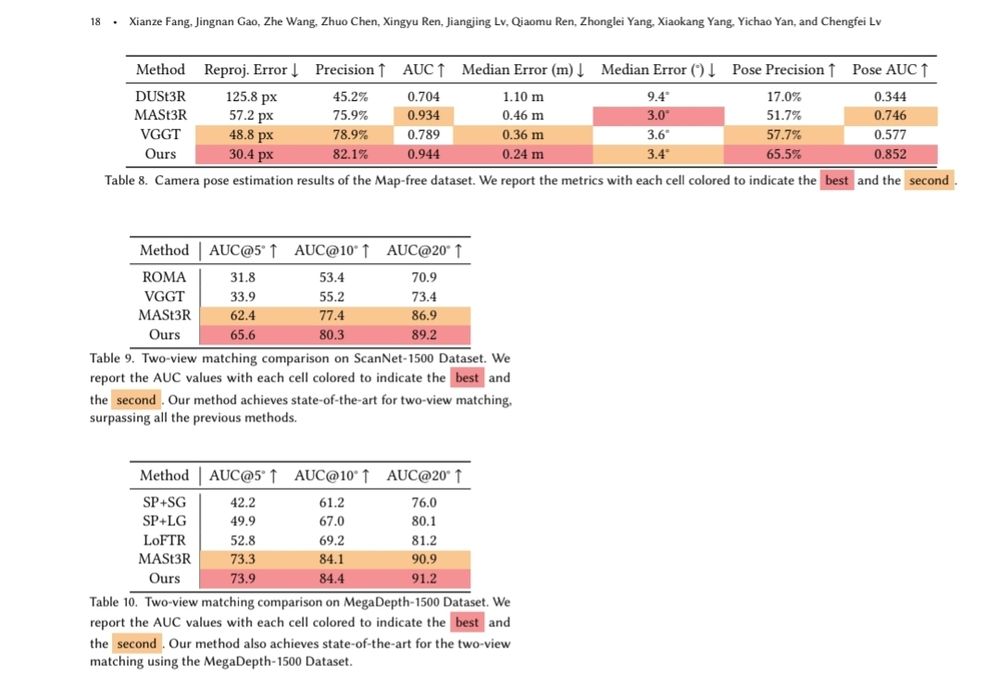

Note: Seems they might have messed up their image matching metrics (seems like acc rather than auc), but should be at least as good as mast3r.

“At least one author of each accepted paper must register for the main conference. A ‘Virtual Only Pass’ is not sufficient.”

Stop using WeTransfer.

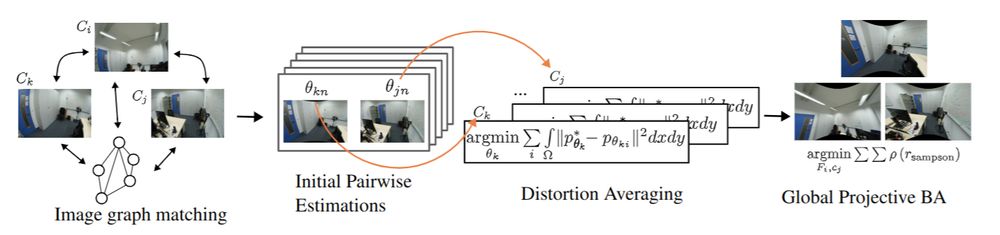

Turns out distortion calibration from multiview 2D correspondences can be fully decoupled from 3D reconstruction, greatly simplifying the problem

arxiv.org/abs/2504.16499

github.com/DaniilSinits...

🌍: visinf.github.io/scenedino/

📃: arxiv.org/abs/2507.06230

🤗: huggingface.co/spaces/jev-a...

@jev-aleks.bsky.social @fwimbauer.bsky.social @olvrhhn.bsky.social @stefanroth.bsky.social @dcremers.bsky.social

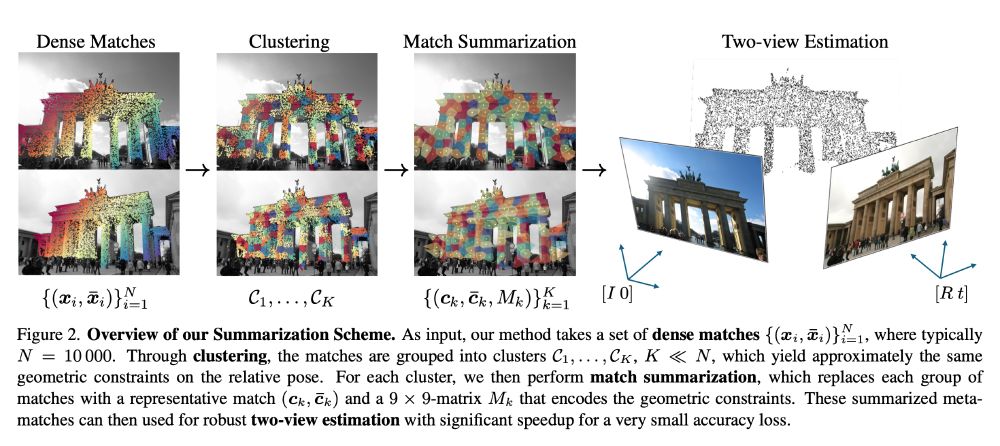

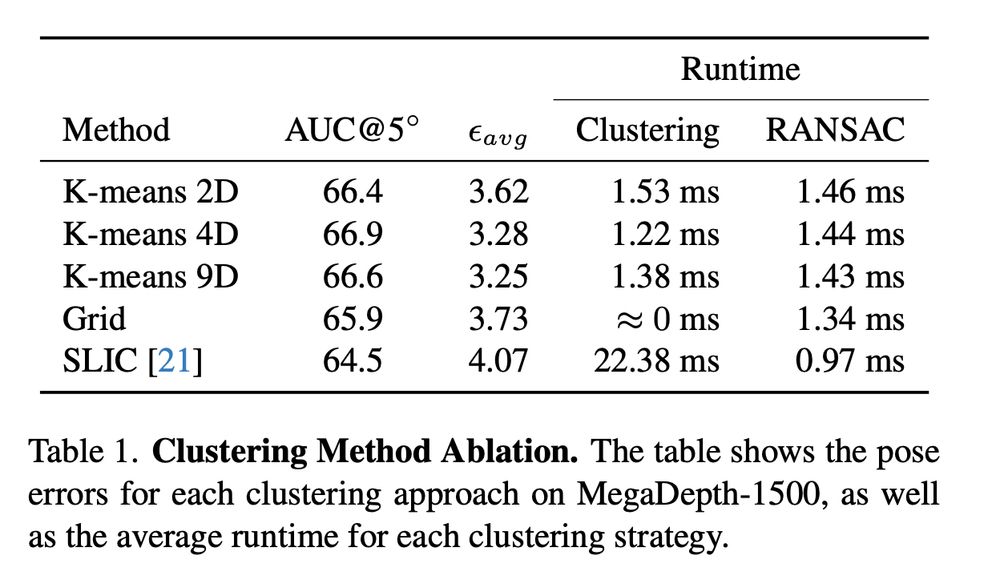

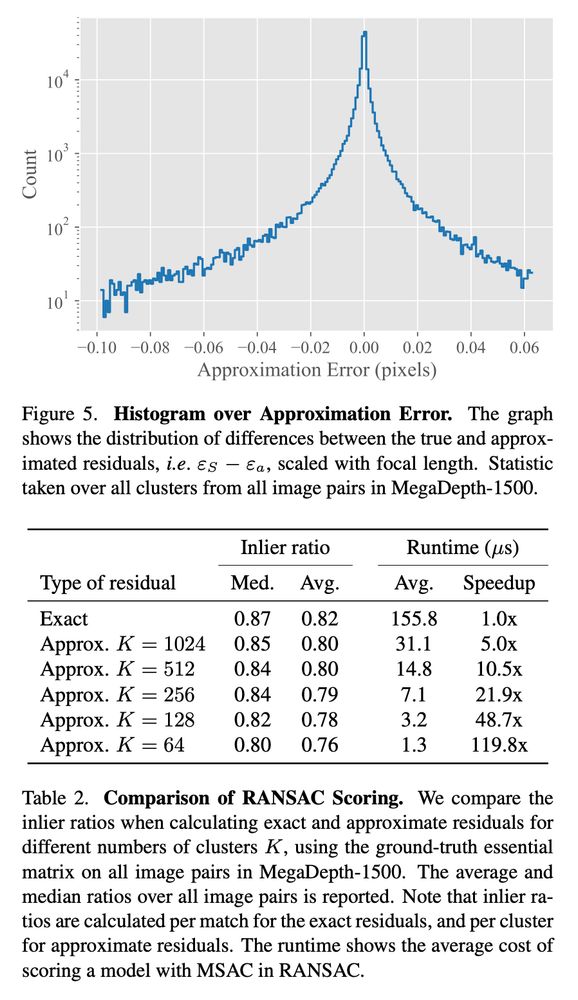

Jonathan Astermark, Anders Heyden, Viktor Larsson

tl;dr: use clustering to reduce RANSAC time when using dense methods like RoMa.

Kudos for eval on WxBS.

P.S. now the same, but for BA?

arxiv.org/abs/2506.028...
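A rough sketch of why clustering helps here: dense matchers return thousands of near-redundant correspondences, and RANSAC's scoring cost grows with that count. The snippet below is a deliberately simple stand-in, not the paper's clustering: it buckets matches `(x1, y1, x2, y2)` in a coarse 4D grid and keeps one mean match per occupied cell. The function name `cluster_matches` and the `cell` size are hypothetical.

```python
from collections import defaultdict


def cluster_matches(matches, cell=32.0):
    """Thin dense matches by coarse 4D grid bucketing.

    matches: iterable of (x1, y1, x2, y2) pixel correspondences.
    cell: grid cell size in pixels (hypothetical default).
    Returns one averaged match per occupied grid cell.
    """
    buckets = defaultdict(list)
    for m in matches:
        # Matches that are close in both images land in the same cell.
        key = tuple(int(v // cell) for v in m)
        buckets[key].append(m)

    representatives = []
    for group in buckets.values():
        k = len(group)
        representatives.append(
            tuple(sum(m[d] for m in group) / k for d in range(4))
        )
    return representatives
```

The representatives could then be passed to any RANSAC estimator in place of the full dense set, with the full set used only for a final refinement.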

🔥 Our method produces geometry-consistent, texture-consistent, and physically plausible 4D reconstructions

📰 Check our project page sangluisme.github.io/TwoSquared/

❤️ @ricmarin.bsky.social @dcremers.bsky.social

Surprisingly, yes!

Our #CVPR2025 paper with @neekans.bsky.social and @dcremers.bsky.social shows that the pairwise distances in both modalities are often enough to find correspondences.

⬇️ 1/4
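A toy illustration of the idea that pairwise distances alone can pin down correspondences: given one distance matrix per modality, search for the permutation that makes them agree best. This brute-force version only works for a handful of items and is not the paper's solver. The names `pairwise_distances` and `match_by_distances` are made up for this sketch.

```python
import math
from itertools import permutations


def pairwise_distances(points):
    """Euclidean distance matrix for a list of coordinate tuples."""
    return [[math.dist(p, q) for q in points] for p in points]


def match_by_distances(dist_a, dist_b):
    """Find perm so that item i in A corresponds to item perm[i] in B.

    Minimizes the squared mismatch between the two distance matrices
    by trying every permutation (feasible only for very small n).
    """
    n = len(dist_a)
    best_perm, best_cost = None, float("inf")
    for perm in permutations(range(n)):
        cost = sum(
            (dist_a[i][j] - dist_b[perm[i]][perm[j]]) ** 2
            for i in range(n)
            for j in range(n)
        )
        if cost < best_cost:
            best_perm, best_cost = perm, cost
    return best_perm, best_cost
```

No item-level labels or shared coordinates are used, only the internal geometry of each set. The match is unambiguous only when the distance pattern has no symmetries.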

Turns out you can!

In our #CVPR2025 paper AnyCam, we directly train on YouTube videos and achieve SOTA results by using an uncertainty-based flow loss and monocular priors!

⬇️

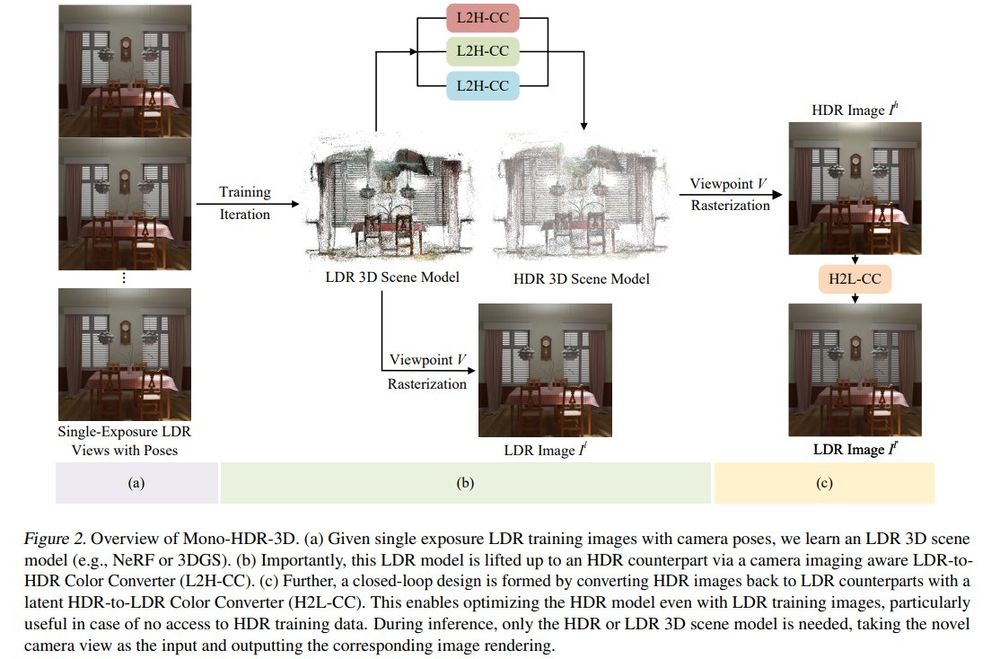

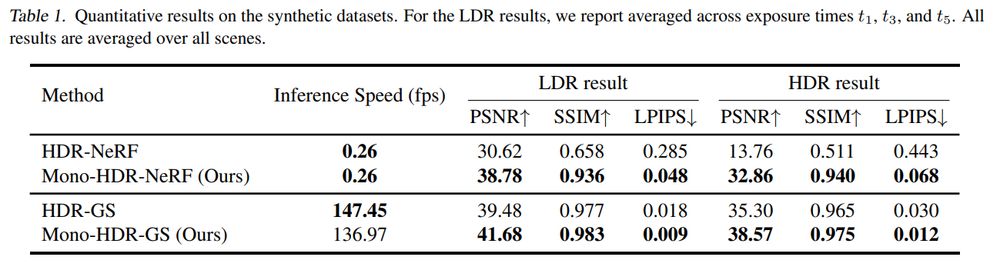

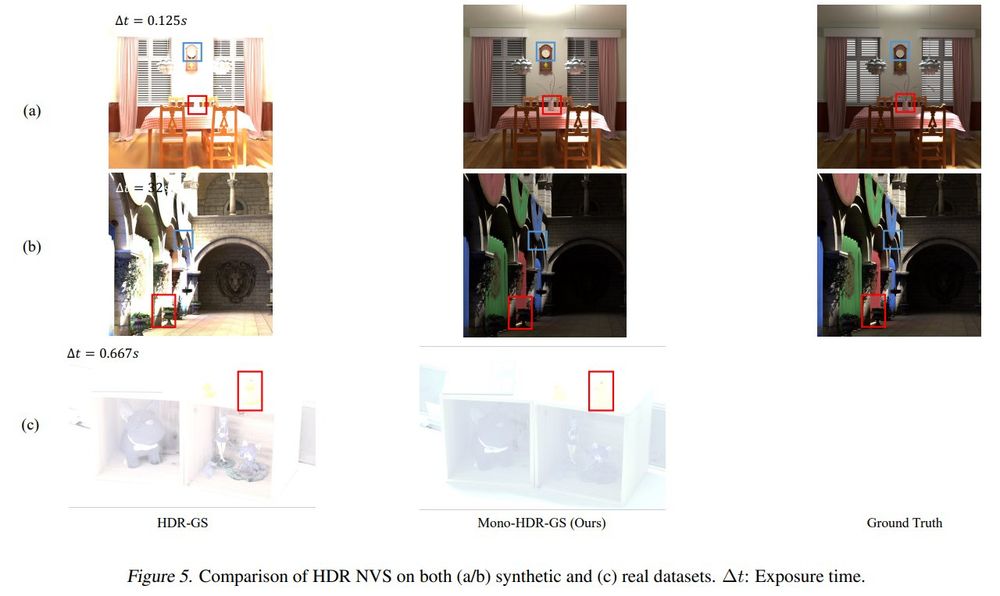

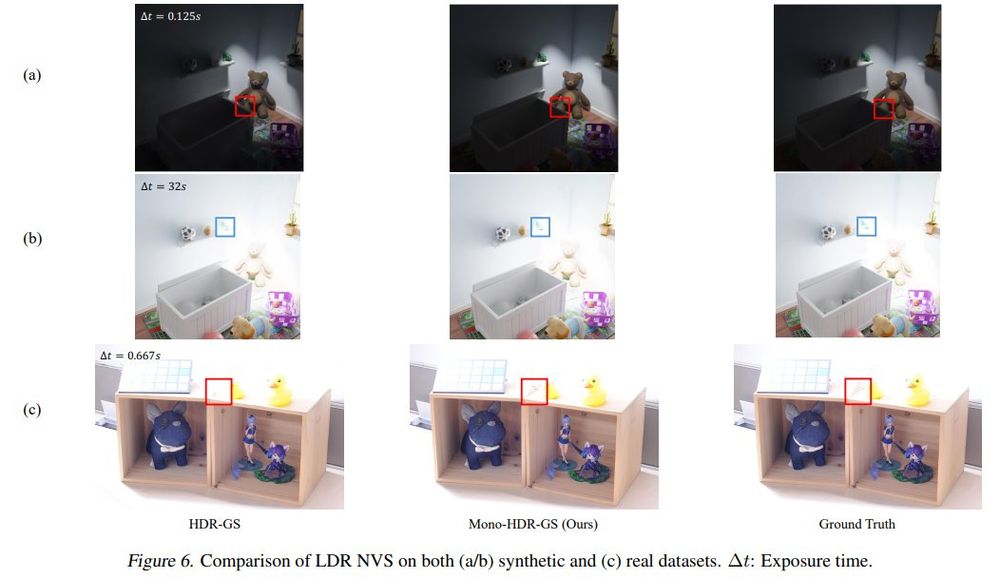

Kaixuan Zhang, Hu Wang, Minxian Li, Mingwu Ren, Mao Ye, Xiatian Zhu

tl;dr: single exposure LDR images in training; LDR image->model+lift->HDR colors; HDR image->LDR image->additional supervision

arxiv.org/abs/2505.01212

Can meshes capture fuzzy geometry? Volumetric Surfaces uses adaptive textured shells to model hair and fur without the splatting / volume overhead. It’s fast, looks great, and runs in real time even on budget phones.

🔗 autonomousvision.github.io/volsurfs/

📄 arxiv.org/pdf/2409.02482

RSVP: www.zurichai.ch/events/zuric...

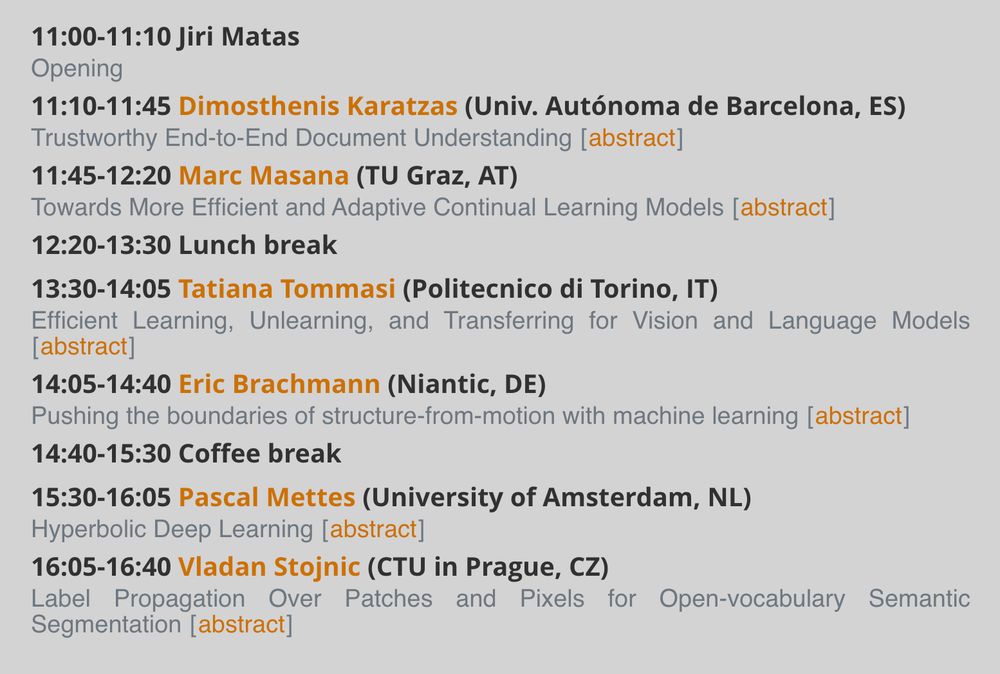

cmp.felk.cvut.cz/colloquium/#...
