Dani Shanley

@danishanley.bsky.social

assistant prof in philosophy @ maastricht university. thinking about ethics and politics (human factors) of new tech. critical of the hype and critical of the critics.

Reposted by Dani Shanley

What an amazing essay from the former chair of Africana Studies at Bowdoin. I'll share a few sections in the reply but seriously, read the whole thing. It's all insightful and beautifully written.

lithub.com/maybe-dont-t...

Maybe Don’t Talk to the New York Times About Zohran Mamdani

It’s remarkable, the people you’ll hear from. Teach for even a little while at an expensive institution—the term they tend to prefer is “elite”—and odds are that eventually someone who was a studen…

lithub.com

November 8, 2025 at 7:11 PM

Reposted by Dani Shanley

Something I've really noticed among institutional "responses" to generative technologies is that "DO NOT USE THIS TOOL, IT'S COMPLETELY INAPPROPRIATE" is fundamentally erased from any possibility of ever being an option

Often paired with fatalist stuff like "this isn't going away"

November 8, 2025 at 10:15 PM

Reposted by Dani Shanley

In 2008, a blogger named Curtis Yarvin called for a future president to kill foreign aid programs as part of a plan to replace democracy with dictatorship.

Now 600,000 people are dead—and 14 million will die by 2030, according to the Lancet. This is the running death toll of tech fascism.

One analytical model shows that, as of November 5th, the dismantling of U.S.A.I.D. has already caused the deaths of 600,000 people, two-thirds of them children. https://newyorkermag.visitlink.me/jUzNSc

The Shutdown of U.S.A.I.D. Has Already Killed Hundreds of Thousands

The short documentary “Rovina’s Choice” tells the story of what goes when aid goes.

newyorkermag.visitlink.me

November 7, 2025 at 10:28 PM

Reposted by Dani Shanley

A List of Things Said to Have Been Ruined by Women

🧵

November 6, 2025 at 8:43 PM

Reposted by Dani Shanley

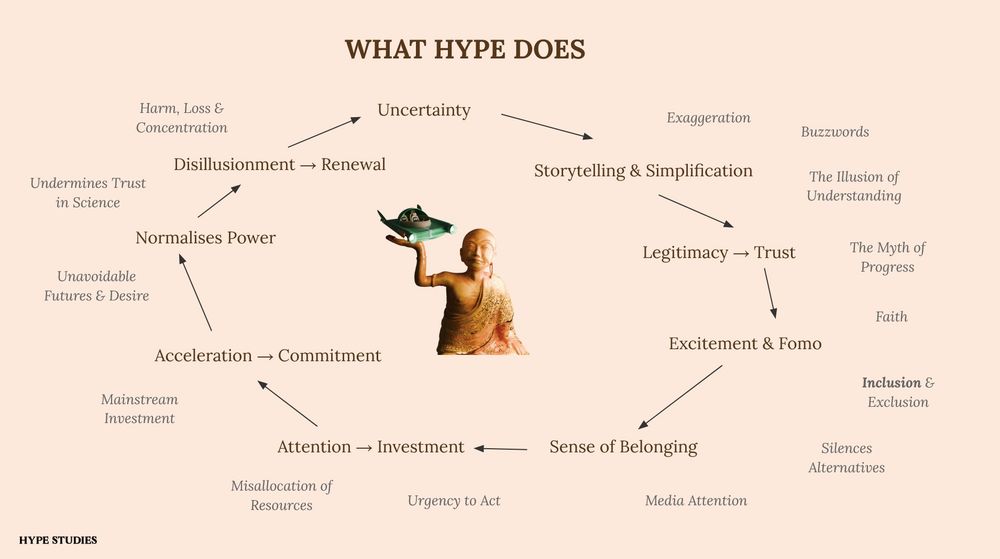

Also at @mozilla.org Fest, check out this AMAZING interactive installation, a visual database of AGI hype discourse, created by a group of talented students: hype-arenas.xyz It’s beautiful to see how one’s own research can step beyond academic language and become this ⬇️

AGI Hype arenas

A visual database of AGI hype discourse mapped into six key dimensions of uncertainty using qualitative research methodologies.

Shown at the Mozilla Festival 2025.

hype-arenas.xyz

November 7, 2025 at 10:42 AM

Reposted by Dani Shanley

the question nobody with a soul is asking, answered by people nobody wants to hear from

November 6, 2025 at 12:58 PM

Reposted by Dani Shanley

me at the end of class: here's a little speculative exercise; imagine you wake up from cryosleep in 2085. what's the kind of tech-society r/ship you'd like to see around you?

students: no AI

I honestly think students' views are missing from the 'should AI be integrated in classrooms' discussion

November 6, 2025 at 6:32 AM

Reposted by Dani Shanley

Getting ready for my talk on Artificial General Intelligence as both a form of Deep Hype and a Futures Governance instrument at @mozilla.org Fest. Come by if you're around! schedule.mozillafestival.org/session/159

November 6, 2025 at 12:02 PM

Reposted by Dani Shanley

“I wish Andrew Cuomo only the best in private life”

November 5, 2025 at 4:22 AM

finally some good news to wake up to

This is a landslide. The last NYC mayoral candidate to clear 1 million votes was John Lindsay—over 50 years ago. It will be dismissed by pundits saying it can only happen in New York. That's garbage. Think pledging to freeze rent and offer childcare won't resonate in Nevada? Wisconsin? Texas?

November 5, 2025 at 6:46 AM

Thanks for the shout-out, Brian! (and this zine looks amazing. Definitely going to print out some copies for our event) You can still submit today or DM me!

October 31, 2025 at 7:03 AM

Reposted by Dani Shanley

📣 I am hiring a postdoc! aial.ie/hiring/postd...

applications from suitable candidates that are passionate about investigating the use of genAI in public service operations with the aim of keeping governments transparent and accountable are welcome

pls share with your networks

October 30, 2025 at 7:51 PM

Reposted by Dani Shanley

"There are more tech lobbyists than MEPs."

Live from Brussels:

(It's a good study. Check it out.)

October 29, 2025 at 4:10 PM

Reposted by Dani Shanley

I have an article coming out soon in Science & Engineering Ethics which shows how ethics guidelines suppress and background ethical agents. One of the tactics is instrumentalization, which gives agency to AI and hides the true agents. Will update with link to the article once it is published.

Seeking citations: Has anyone written on how "AI" systems can be used to shift/shirk accountability? This seems like an obvious through line in a lot of use cases (esp decision making by govt agencies & military applications), but I'd love to see a treatment tying these together.

September 10, 2025 at 10:44 AM

Reposted by Dani Shanley

*very* excited about @thomas-dekeyser.bsky.social’s new book, out next year w/ @uminnpress.bsky.social 🤖 🚫

www.upress.umn.edu/978151791773...

Techno-Negative

A radical history of technology told through acts of resistance, not progress. The history of technology is often told as a history of progress, moving optimis...

www.upress.umn.edu

October 28, 2025 at 10:42 AM

We're going to have people like @thomas-dekeyser.bsky.social with us talking about his book.

How could I not be excited?

there's still time to submit something... www.aanmelder.nl/techrefusal

October 29, 2025 at 9:24 AM

Every time I see a new submission I get even more excited for this 👇

it's gonna be 🔥

We're organizing an event on technological refusal in Maastricht, Feb '26 and I'm super excited about it. Details below 👇 Deadline for contributions Oct 31st. Come join us!

www.aanmelder.nl/techrefusal

‘No & ...’ : A Forum on Technological Refusal - Home

www.aanmelder.nl

October 29, 2025 at 9:22 AM

Reposted by Dani Shanley

Friends, choose your so-called "critics" carefully.

It's not a binary.

If people do circular citations and happen to never cite the women who laid the groundwork for decades and pay the price, there's a problem.

Who the fuck is this dude who rolled in saying what women researchers have been saying for years? He came on my thread here once to tell me I was wrong about something — I wasn’t. I responded, he said nothing, and disappeared. @alexhanna.bsky.social @safiyanoble.bsky.social

Ed Zitron Gets Paid to Love AI. He Also Gets Paid to Hate AI

He’s one of the loudest voices of the AI haters—even as he does PR for AI companies. Either way, Ed Zitron has your attention.

www.wired.com

October 27, 2025 at 5:09 PM

Reposted by Dani Shanley

A frank essay on what sustainability (or lack thereof) looks like in AI research. What is described here by my friend @natolambert.bsky.social, a CS researcher, is true of social researchers of AI as well. Worth reading by anyone thinking about the human costs of the AI race, which are far-reaching.

A new essay on the crazy, all or nothing approach to work happening in AI today, the looming human costs, and the lack of a finish line.

I wouldn't say it's okay, but I'm not sure how to fix it.

www.interconnects.ai/p/burning-out

Burning out

The international AI industry's collective risk.

www.interconnects.ai

October 26, 2025 at 6:35 PM

Reposted by Dani Shanley

your timely reminder that AGI is neither clearly defined/described nor amenable to scientific or engineering principles... it remains an ideology that is rooted in white supremacy, racism and eugenics

October 24, 2025 at 8:18 PM

One week left to submit something!

What's come in already is 🔥

We're organizing an event on technological refusal in Maastricht, Feb '26 and I'm super excited about it. Details below 👇 Deadline for contributions Oct 31st. Come join us!

www.aanmelder.nl/techrefusal

‘No & ...’ : A Forum on Technological Refusal - Home

www.aanmelder.nl

October 24, 2025 at 4:59 AM

We're organizing an event on technological refusal in Maastricht, Feb '26 and I'm super excited about it. Details below 👇 Deadline for contributions Oct 31st. Come join us!

www.aanmelder.nl/techrefusal

‘No & ...’ : A Forum on Technological Refusal - Home

www.aanmelder.nl

October 20, 2025 at 8:52 AM

Reposted by Dani Shanley

fun fact: you make an entire career out of repeating the work of women of colour tech critics in the full knowledge that you as a white guy will get the big slots and they won't

October 16, 2025 at 6:27 PM
