📍Palo Alto, CA

🔗 calebziems.com

May be of interest to @paul-rottger.bsky.social @monadiab77.bsky.social @vinodkpg.bsky.social @dbamman.bsky.social @davidjurgens.bsky.social and you

This was an interdisciplinary effort across computer science (@diyiyang.bsky.social, @williamheld.com, Jane Yu) and sociology (David Grusky and Amir Goldberg), and the research process taught me so much!

We observe positive transfer performance from Cartography to two leading benchmarks: BLEnD (Myung et al., 2024) and CulturalBench (Chiu et al., 2024).

We evaluate GPT-4o with and without search and find no significant difference in their recall on Cartography data.

Culture Cartography is "Google proof" since search doesn't help.

Qwen-2 72B recalls 21% less Cartography data than traditional data (p < .0001).

Even a strong reasoning model (R1) is challenged more by our data.

And to find challenging questions, we let the LLM steer towards topics it has low confidence in.

To find culturally-representative knowledge, we let the human steer towards what they find most salient.

Still, this is a single-initiative process.

Researchers can’t steer the distribution towards questions of interest (i.e., those that challenge LLMs).

In traditional annotation, the researcher picks some questions and the annotator passively provides ground truth answers.

This is single-initiative.

Annotators don't steer the process, so their interests and culture may not be represented.

Check out our #EMNLP2025 talk: Culture Cartography

🗓️ 11/5, 11:30 AM

📌 A109 (CSS Orals 1)

Compared to traditional benchmarking, our mixed-initiative method finds more knowledge gaps even in reasoning models like R1!

Paper: arxiv.org/pdf/2510.27672

🔗: arxiv.org/pdf/2506.04419

Thousands are turning to AI chatbots for emotional connection – finding comfort, sharing secrets, and even falling in love. But as AI companionship grows, the line between real and artificial relationships blurs.

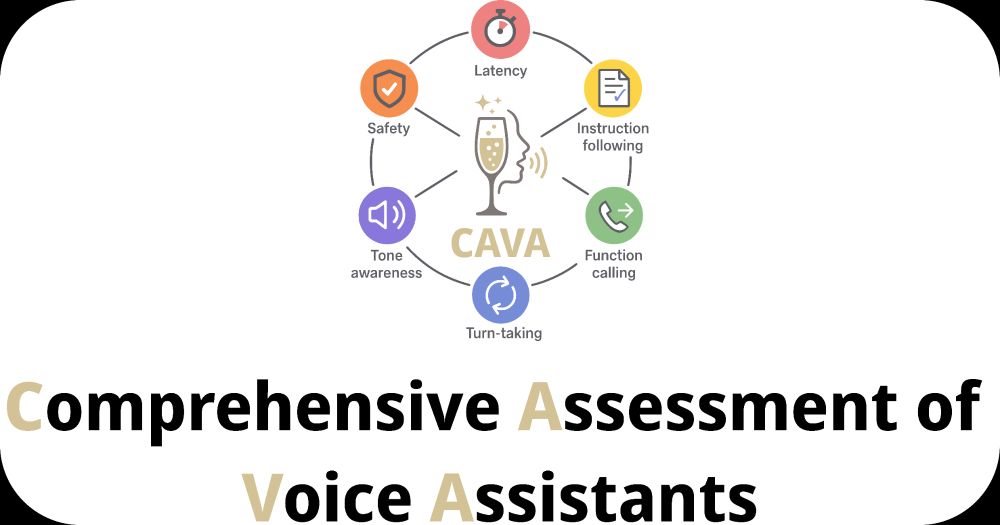

A new benchmark for evaluating the capabilities required for speech-in-speech-out voice assistants!

- Latency

- Instruction following

- Function calling

- Tone awareness

- Turn taking

- Audio Safety

TalkArena.org/cava

Excited to share our paper on biases against African American Language in reward models, accepted to #NAACL2025 Findings! 🎉

Paper: arxiv.org/abs/2502.12858 (1/10)

But humans are naturally quite good at this (>90% acc.)

Check it out!

➡️ arxiv.org/abs/2502.20490

A position paper by me, @dbamman.bsky.social, and @ibleaman.bsky.social on cultural NLP: what we want, what we have, and how sociocultural linguistics can clarify things.

Website: naitian.org/culture-not-...

1/n

Introducing Collaborative Gym (Co-Gym), a framework for enabling & evaluating human-agent collaboration! I'm now getting used to agents proactively seeking my confirmation or deeper thinking. (🧵 with video)

I was so lucky to know him, and I am grateful every day that he (and Gillian, and Walt, etc) built an academic field where kindness is expected.

Introducing talkarena.org — an open platform where users speak to LAMs and receive text responses. Through open interaction, we focus on rankings based on user preferences rather than static benchmarks.

🧵 (1/5)

1. most animate objects, men

2. women, water, fire, violence, and exceptional animals

3. edible fruit and vegetables

4. miscellaneous (includes things not classifiable in the first three)

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!
