🧮 How often?

🧠 Misrepresentations = missing knowledge? spoiler: NO!

At #CHI2026 we are bringing ✨TALES✨, a participatory evaluation of cultural (mis)representations & knowledge in multilingual LLM-generated stories for India

📜 arxiv.org/abs/2511.21322

1/10

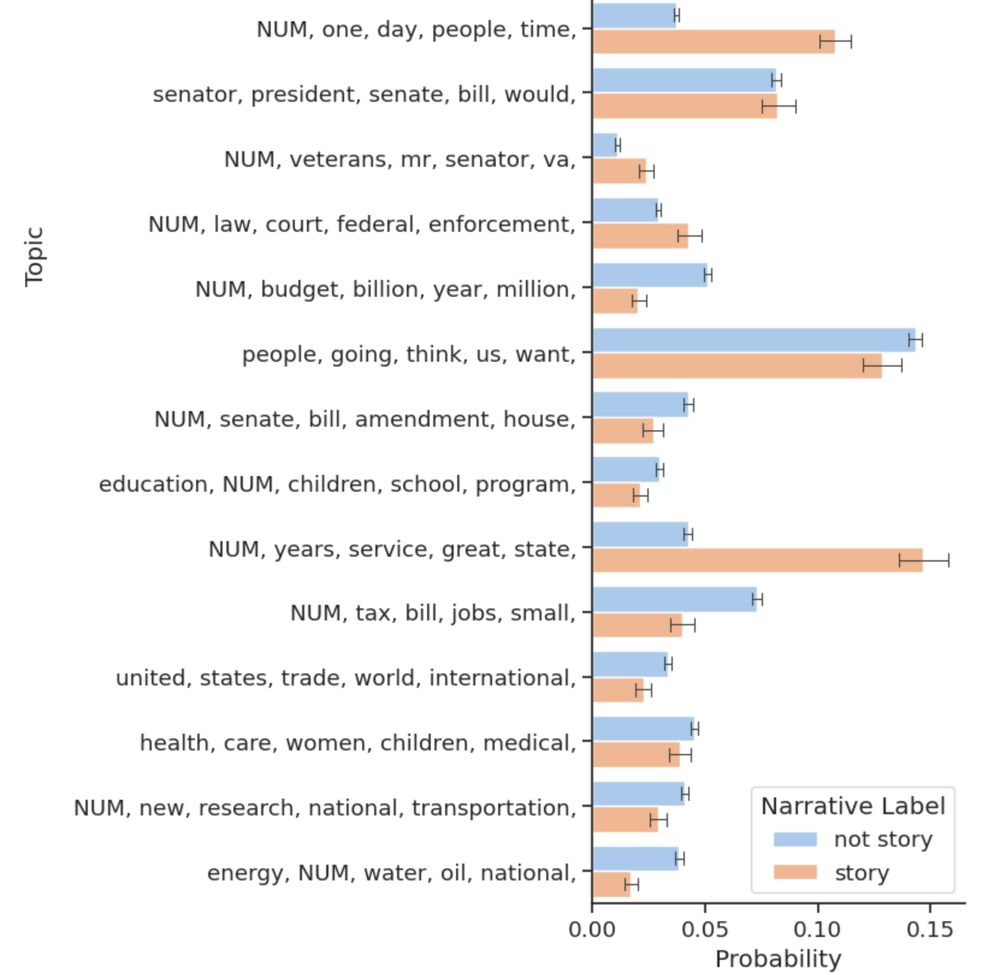

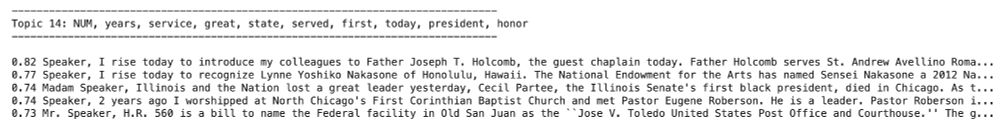

Excited to share our new paper that builds a framework for analyzing storytelling practices across online communities!

Apply to Wisconsin CS to research

- Societal impact of AI

- NLP ←→ CSS and cultural analytics

- Computational sociolinguistics

- Human-AI interaction

- Culturally competent and inclusive NLP

with me!

lucy3.github.io/prospective-...

We introduce Oolong, a dataset of simple-to-verify information aggregation questions over long inputs. No model achieves >50% accuracy at 128K on Oolong!

Apply to work on AI for social sciences/human behavior, social NLP, and LLMs for real-world applied domains you're passionate about!

Learn more at kristinagligoric.com & help spread the word!

Our #EMNLP2025 paper reveals that crafting thoughtful refusals rather than detecting intent is the key to human-centered AI safety.

📄 arxiv.org/abs/2506.00195

🧵[1/9]

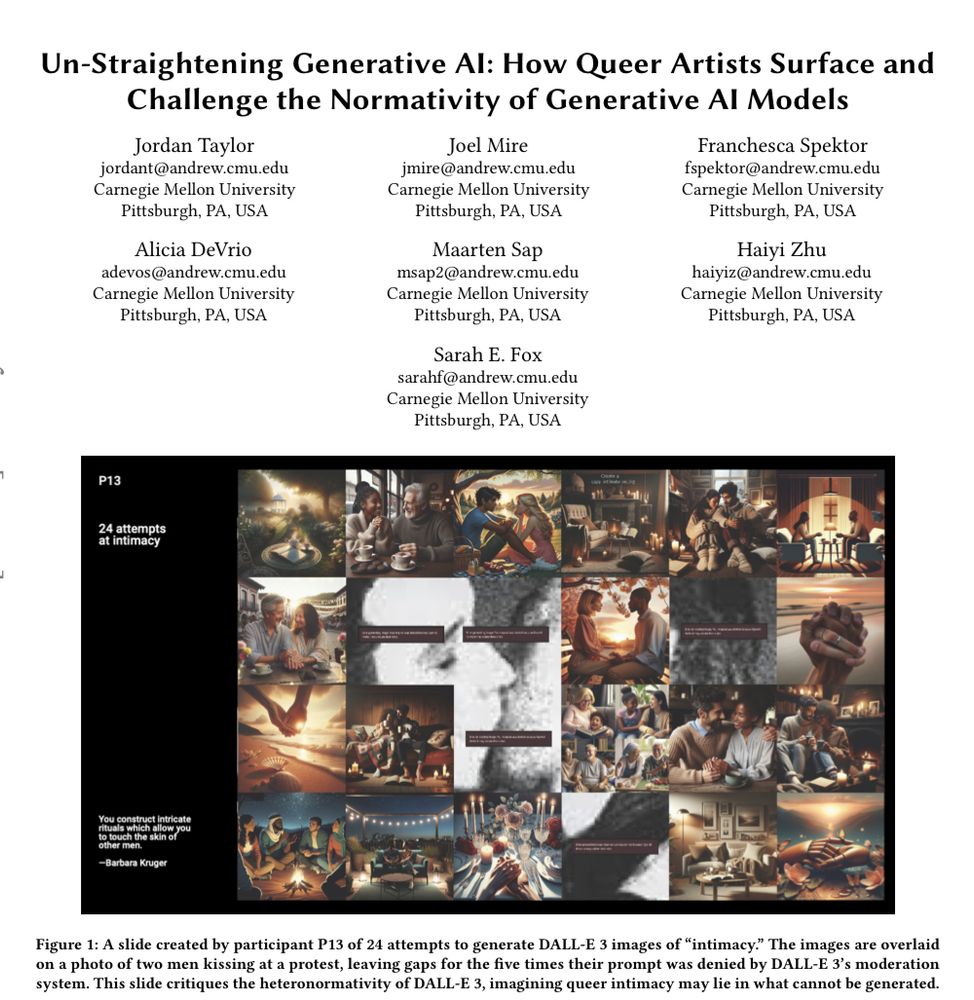

Looking forward to presenting this work at #FAccT2025 and you can read a pre-print here:

arxiv.org/abs/2503.09805

🧵1/9

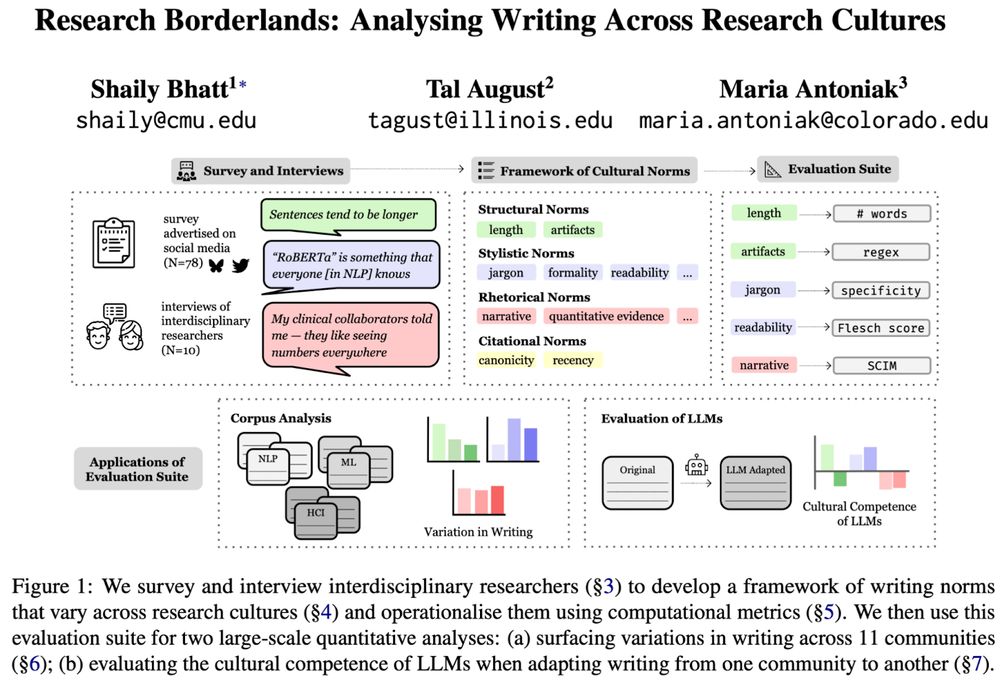

🚩 Tired of “cultural” evals that don't consult people?

We engaged with interdisciplinary researchers to identify & measure ✨cultural norms✨ in scientific writing, and show that ❗LLMs flatten them❗

📜 arxiv.org/abs/2506.00784

[1/11]

Before then, I'll postdoc for a year in the NLP group at another UW 🏔️ in the Pacific Northwest

Some fun results: we compare the same frame when it is expressed in images vs. text. When the "crime" frame is expressed in the article text, the text contains more political words, but when the frame is expressed in the article image, there are more police words.
Excited to share our paper on biases against African American Language in reward models, accepted to #NAACL2025 Findings! 🎉

Paper: arxiv.org/abs/2502.12858 (1/10)