Before we all slip into the ether, I wanted to highlight a few stories I published this year that stuck out. 🧵🪡

www.404media.co/flock-expose...

It appears that AI was used to generate a lie, which was then absorbed by AI, then regurgitated to others in a breaking news situation.

www.crikey.com.au/20...

www.aap.com.au/factcheck/mi...

reutersinstitute.politics.ox.ac.uk/news/how-ai-...

Can LLMs with reasoning + web search reliably fact-check political claims?

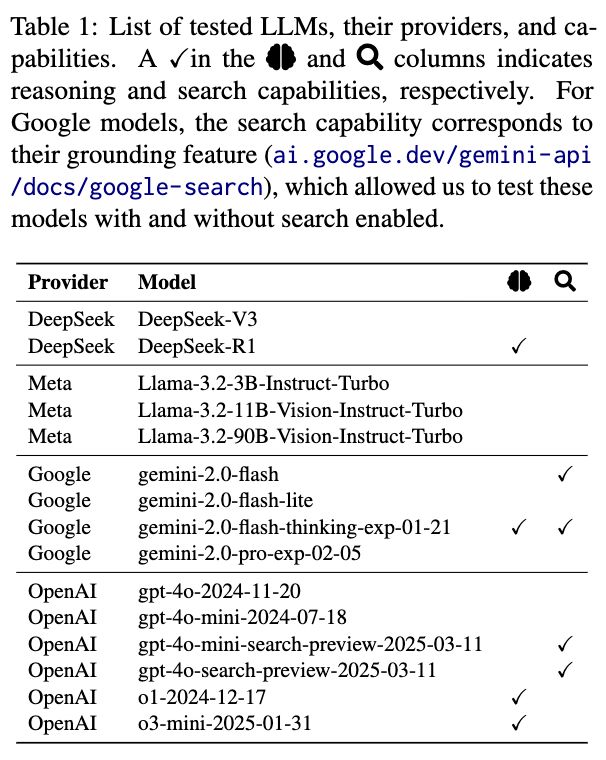

We evaluated 15 models from OpenAI, Google, Meta, and DeepSeek on 6,000+ PolitiFact claims (2007–2024).

Short answer: Not reliably—unless you give them curated evidence.

arxiv.org/abs/2511.18749

Based on a case study of the @financialtimes.com, Liz Lohn and I argue that transparency about AI in news is a spectrum, evolving with tech, commercial, professional & ethical considerations & audience attitudes.
