(@techimpactpolicy.bsky.social). Formerly IU / Observatory on Social Media.

Computational social science, human-AI interaction, social media, trust and safety, etc.

🧨 matthewdeverna.com

Can LLMs with reasoning + web search reliably fact-check political claims?

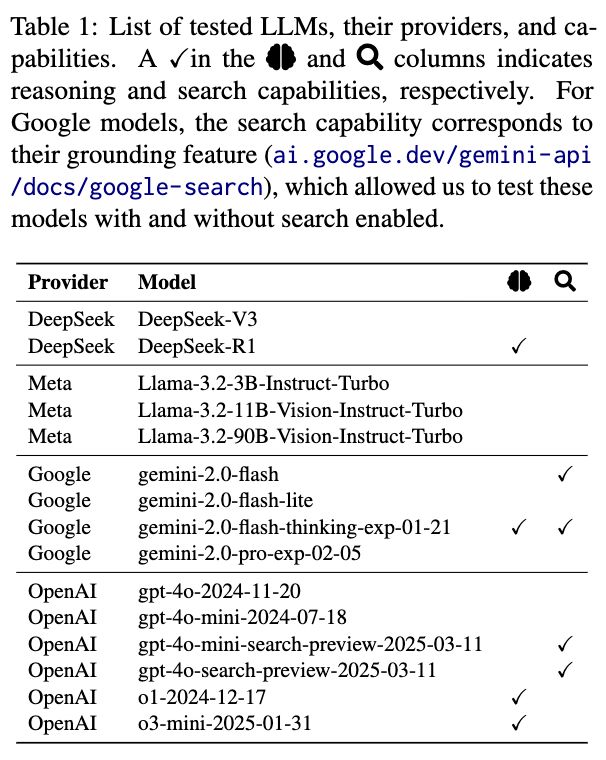

We evaluated 15 models from OpenAI, Google, Meta, and DeepSeek on 6,000+ PolitiFact claims (2007–2024).

Short answer: Not reliably—unless you give them curated evidence.

arxiv.org/abs/2511.18749

We analyze 1.6M factcheck requests on X (Grok & Perplexity)

📌Usage is polarized, Grok users more likely to be Reps

📌BUT Rep posts rated as false more often—even by Grok

📌Bot agreement with factchecks is OK but not great; APIs match fact-checkers

osf.io/preprints/ps...

www.technologyreview.com/2026/01/30/1...

A Marketplace for AI-Generated Adult Content and Deepfakes

Preprint: doi.org/10.48550/arX...

#SciencePolicyForum #ScienceResearch 🧪

Paper: doi.org/10.1126/scie...

Find all the details here 👉 icwsm.org/2026/submit....

@lespin.bsky.social @msaveski.bsky.social

What happens when you add money + competition to generative AI creation?

On Civitai—a prominent platform for gen-AI content/tools w. millions of users—you get a growing marketplace for NSFW requests and a nontrivial stream of deepfakes.

Preprint: arxiv.org/abs/2601.09117 🧪

Jan is an external fellow at @sfiscience.bsky.social and serves as Director, Global Head of Trust & Safety at the Wikimedia Foundation @wikimediafoundation.org, the non-profit hosting Wikipedia, Wikidata, and other free knowledge projects.

Instead, AI creates cognitive and social dependencies—which may be even harder to escape. 🧠🔗

AI represents the infrastructuralization of cognition itself, concentrating unprecedented levels of private power.

After two decades of platforms reshaping markets, communication, and governance, a new shift is underway: AI is transforming society.

What should we expect from the 'AI society'? 🧵

📄 Our new paper takes on this question:

osf.io/preprints/so...

Submit a project idea, get matched with a mentor, present virtually at ICWSM’26, and prepare a Sept 2026 submission.

💥 Apply with a max 2-page proposal

More details: icwsm.org/2026/submit....

#icwsm @journalqd.bsky.social

🚨 There are only 10 days left to apply to ICWSM/JQD Global Initiative!

🚀 Apply by Jan 15: www.icwsm.org/2026/submit....

📅 The ICWSM January submission deadline is just ten days away (Jan 15)

Find all the information to submit your full paper, poster, demo, dataset, and tutorial proposal here: www.icwsm.org/2026/submit....

🔔 Note that the deadline for workshop proposals is Jan 30!

"we invite your input as we consider hosting a virtual day to enable participation from people who cannot (or do not feel comfortable) traveling to the US."

Milkman is best known for her award-winning research on identifying and overcoming barriers that prevent positive behavioral change.