senior lecturer in statistics, penn

NYC & Philadelphia

https://www.stat.berkeley.edu/~winston

Reposted by Winston T. Lin

Desk reject more stuff with actionable feedback.

Don’t request second reviews

Build larger editorial boards of volunteers

Wait to submit your work until it’s ready; a.k.a don’t send in your half-baked trash hoping for feedback

6/7

Reposted by Winston T. Lin

Reposted by Chris Hanretty, Winston T. Lin

I'm a quant. social scientist (PhD Yale ’24, NYU) focused on causal inference, experiments, and large-scale data.

Feel free to get in touch or share; all leads appreciated. dwstommes@gmail.com

RegCheck was built to help make this process easier.

Today, we launch RegCheck V2.

🧵

regcheck.app

www.brookings.edu/articles/for...

nicholasdecker.substack.com/p/should-we-...

Reposted by Winston T. Lin

statmodeling.stat.columbia.edu/2026/01/17/c...

Reposted by Winston T. Lin

Reposted by Ian Hussey, Matt N Williams, Winston T. Lin , and 1 more Ian Hussey, Matt N Williams, Winston T. Lin, Maksym Polyakov

legacy.iza.org/en/webconten...

Reposted by Winston T. Lin

www.the100.ci/2025/07/28/w...

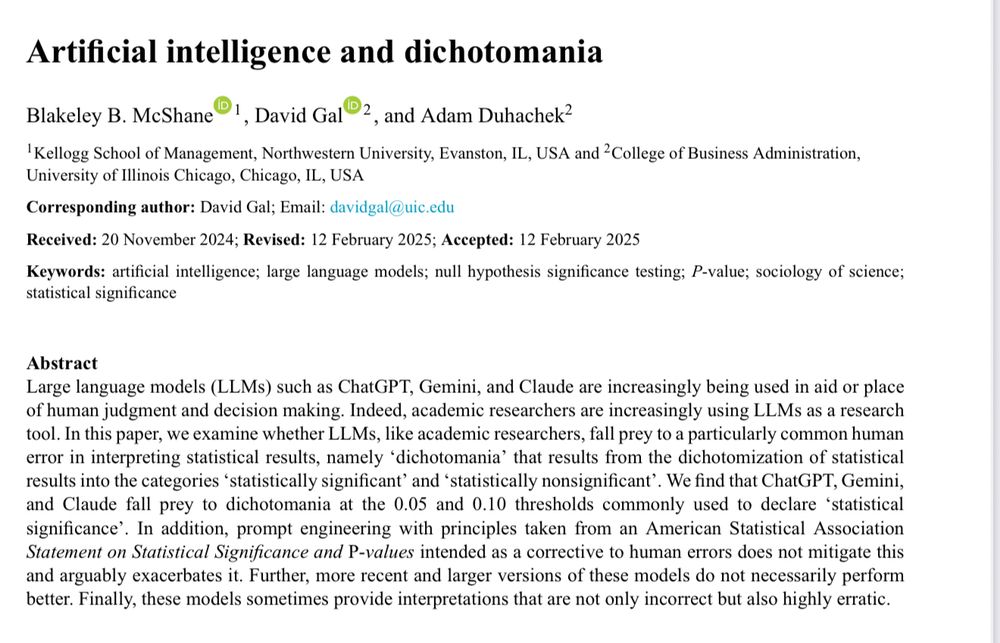

www.blakemcshane.com/Papers/natur...

www.journals.uchicago.edu/doi/abs/10.1...

Reposted by Winston T. Lin

bjsm.bmj.com/content/earl...

Reposted by Winston T. Lin

IMO, there are only 3 good reasons to do it. One of them needs to be true--otherwise, don't.

medium.com/the-quantast...

www.aeaweb.org/articles?id=...

Reposted by Magnus Johansson, Winston T. Lin

Reposted by Winston T. Lin

Reposted by Winston T. Lin

Reposted by Winston T. Lin

Some notable changes:

-items on analysis populations, missing data methods, and sensitivity analyses

-reporting of non-adherence and concomitant care

-reporting of changes to any study methods, not just outcomes

-and lots of other things

www.bmj.com/content/389/...

Reposted by Winston T. Lin