All models, datasets, code, etc. released.

Really excited about this project! Sharan, the lead student author, was a joy to work with.

My favorites:

1) CAD representation

2) synthetic data to help city-scale reconstruction

3) trends in 3D vision

4) visual chain-of-thought?

@billpsomas.bsky.social George Retsinas @nikos-efth.bsky.social Panagiotis Filntisis, Yannis Avrithis, Petros Maragos, Ondrej Chum, @gtolias.bsky.social

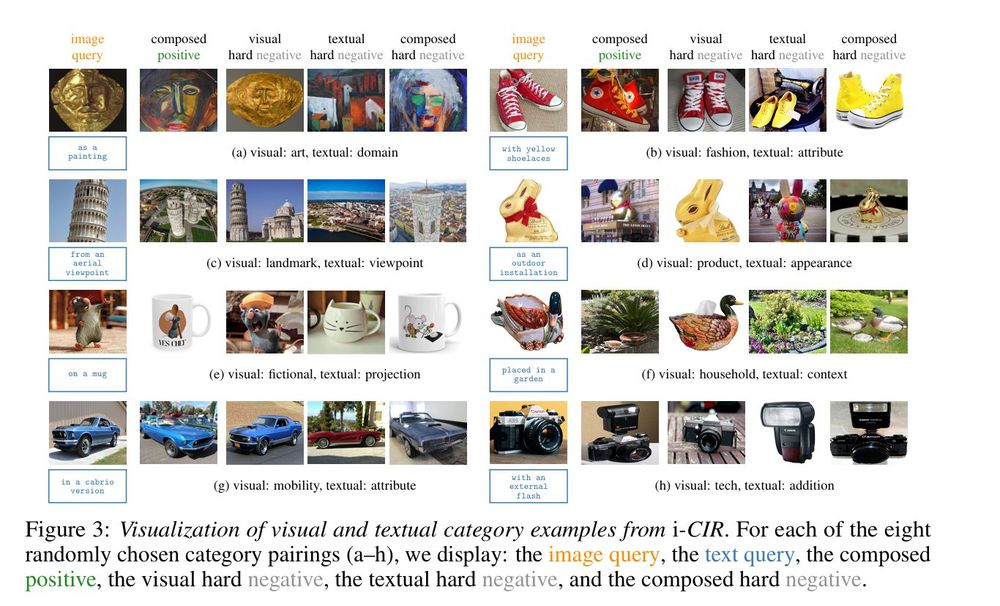

tl;dr: condition-based retrieval (+dataset) - old photo/sunset/night/aerial/model arxiv.org/abs/2510.25387

We show that synthetic data (e.g., LLM simulations) can significantly improve the performance of inference tasks. The key intuition lies in the interactions between the moment residuals of synthetic data and those of real data.

Kinaema: A recurrent sequence model for memory and pose in motion

arxiv.org/abs/2510.20261

By @mbsariyildiz.bsky.social, @weinzaepfelp.bsky.social, G. Bono, G. Monaci and myself

@naverlabseurope.bsky.social

1/9

We built **PLSemanticsBench** to find out.

The results: a wild mix.

✅The Brilliant:

Top reasoning models can execute complex, fuzzer-generated programs -- even with 5+ levels of nested loops! 🤯

❌The Brittle: 🧵

When building the context for your feed-forward 3D point-map estimator, don't use full image pairs -- just randomly subsample! -> faster compute, more images.
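To make the compute argument concrete, here is a minimal sketch, assuming the cost is dominated by how many image pairs go through the network. With N images, exhaustive pairing means N(N-1)/2 forward passes, while random subsampling keeps a fixed pair budget. The helper names (`all_pairs`, `subsample_pairs`) are hypothetical, and this is not the post's actual pipeline.

```python
import itertools
import random


def all_pairs(num_images):
    """Every unordered image pair: num_images * (num_images - 1) / 2 of them."""
    return list(itertools.combinations(range(num_images), 2))


def subsample_pairs(num_images, num_pairs, seed=0):
    """Hypothetical helper: draw num_pairs distinct unordered pairs uniformly at random."""
    rng = random.Random(seed)
    pairs = all_pairs(num_images)
    # Never ask for more pairs than actually exist.
    return rng.sample(pairs, min(num_pairs, len(pairs)))


if __name__ == "__main__":
    n = 100
    print(len(all_pairs(n)))             # 4950 pairs if every pair is processed
    print(len(subsample_pairs(n, 500)))  # a fixed budget of 500 pairs
```

Under this assumption, the saved budget is what lets you feed more images into the context.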

Zhiting Mei, Ola Shorinwa, Anirudha Majumdar

tl;dr: who cares, look at those dino icons!

OK, distilling DINO into NeRF -> better object localization than VGGT.

arxiv.org/abs/2510.03104

Find out in a new episode of RoboPapers: robopapers.substack.com/p/ep34-roboa...

My notes here: simonwillison.net/2025/Oct/4/d...

This could be relevant to your research...

thinkingmachines.ai/blog/modular...

Joint angles: GENIMA genima-robot.github.io

Trajectories: VINT arxiv.org/abs/2306.14846

Object pose: MFOS arxiv.org/abs/2310.01897

Waypoints: PIVOT arxiv.org/abs/2402.07872

(Repost+update of a tweet from 2024)

Sebastian Ray Mason, Anders Gjølbye, Phillip Chavarria Højbjerg, Lenka Tětková, Lars Kai Hansen

tl;dr: DINOv3, CLIP know 3D geometry, but only in middle layers. MAE bad, ImageNet bad, cls token bad.

arxiv.org/abs/2509.15271

buff.ly/jAAhHnQ

@parskatt.bsky.social PhD Thesis.

tl;dr: would be useful in teaching image matching - nice explanations. (too) Fancy and stylish notation. Cool Ack section and cover image.

liu.diva-portal.org/smash/record...

**WorldExplorer** (#SIGGRAPHAsia25) creates fully-navigable scenes via autoregressive video generation.

Text input -> 3DGS scene output & interactive rendering!

🌍http://mschneider456.github.io/world-explorer/

📽️https://youtu.be/N6NJsNyiv6I

buff.ly/lqDL5wa

It's always been hard to say what RLHF does to a model within a more complex post-training pipeline.

We take a simple (but underappreciated) closed-form solution for estimating H, and replace the homography part with whatever distortion model we have.

This gives us simpler and faster solutions across the board. Super cool work imo!
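The post does not say which closed-form solution it builds on. Purely as a reference point, here is the textbook 4-point DLT for a distortion-free homography, with h33 fixed to 1 so that four correspondences give an 8x8 linear system. This is a swapped-in illustration, not the paper's solver: the paper's contribution replaces the homography part with a radial distortion model, which is not shown. All function names are hypothetical.

```python
def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small square system a @ x = b."""
    n = len(a)
    # Augmented matrix, copied so the inputs are not modified.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_4pt(src, dst):
    """Closed-form 4-point homography (DLT with h33 = 1).

    src, dst: four (x, y) correspondences, no three collinear.
    Returns H as a 3x3 nested list.
    """
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h31 x + h32 y + 1) = h11 x + h12 y + h13, rearranged to be linear in h.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_h(hm, pt):
    """Map a 2D point through H, including the projective division."""
    x, y = pt
    w = hm[2][0] * x + hm[2][1] * y + hm[2][2]
    return ((hm[0][0] * x + hm[0][1] * y + hm[0][2]) / w,
            (hm[1][0] * x + hm[1][1] * y + hm[1][2]) / w)
```

A pure translation by (2, 3) on the unit square, for example, recovers H with h13 = 2 and h23 = 3.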

Mårten Wadenbäck, Marcus Valtonen Örnhag, @parskatt.bsky.social

tl;dr: minimal solvers for one-sided/two-sided equal/two-sided independent radial distortion homography

arxiv.org/abs/2508.21190

Do LLMs know when stories are suspenseful?

We ran LLMs through a bunch of classical psychology suspense studies.

The answer: kinda, sorta? Honestly, better than I expected. But the results are nuanced...

1/

How far can we go with ImageNet for Text-to-Image generation? w. @arrijitghosh.bsky.social @lucasdegeorge.bsky.social @nicolasdufour.bsky.social @vickykalogeiton.bsky.social

TL;DR: Train a text-to-image model using 1000× less data in 200 GPU hrs!

📜https://arxiv.org/abs/2502.21318

🧵👇
