My research aims to characterize aesthetic experiences 🖼️ and creative processes 🎨 in the human mind and brain.

#Aesthetics #Creativity

@cp-trendscognisci.bsky.social

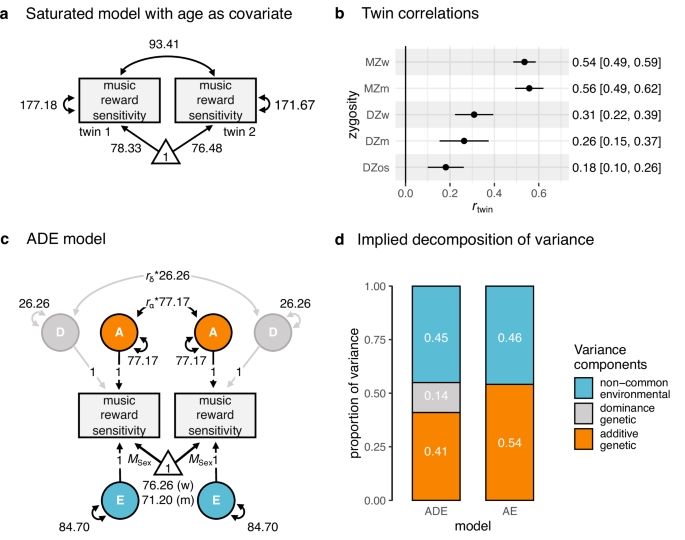

@ub.edu Ernest Mas-Herrero, Robert J. Zatorre

@mcgill.ca Josep Marco-Pallarés

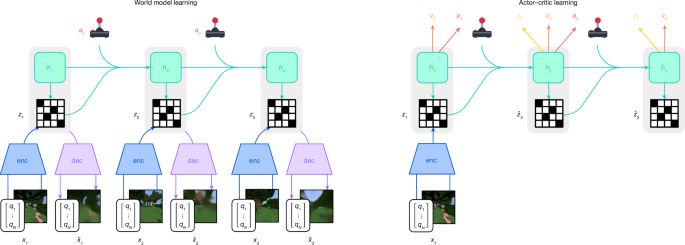

How can we model natural scene representations in visual cortex? A solution is in active vision: predict the features of the next glimpse! arxiv.org/abs/2511.12715

+ @adriendoerig.bsky.social, @alexanderkroner.bsky.social, @carmenamme.bsky.social, @timkietzmann.bsky.social

🧵 1/14

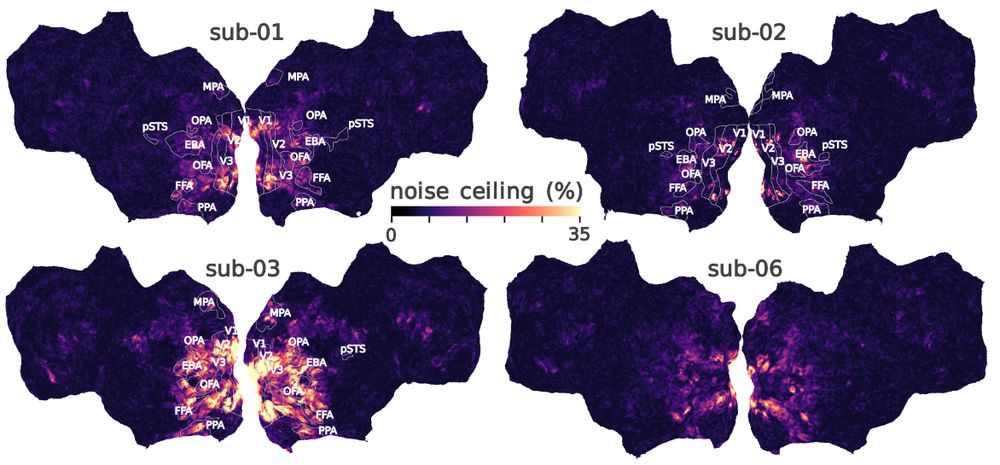

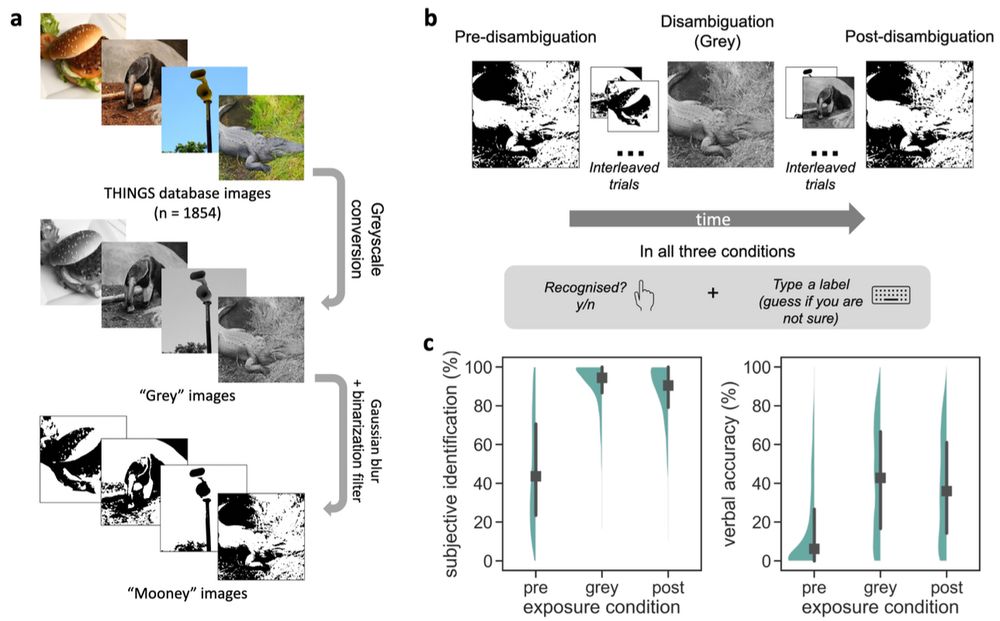

We created ~2k images and collected ~100k responses to study visual ambiguity.

www.biorxiv.org/content/10.1...

We've found that visual cortex, even when just viewing natural scenes, predicts *higher-level* visual features.

This aligns with developments in ML, but challenges some assumptions about early sensory cortex.

www.biorxiv.org/content/10.1...

Work with @zejinlu.bsky.social @sushrutthorat.bsky.social and Radek Cichy

arxiv.org/abs/2507.03168

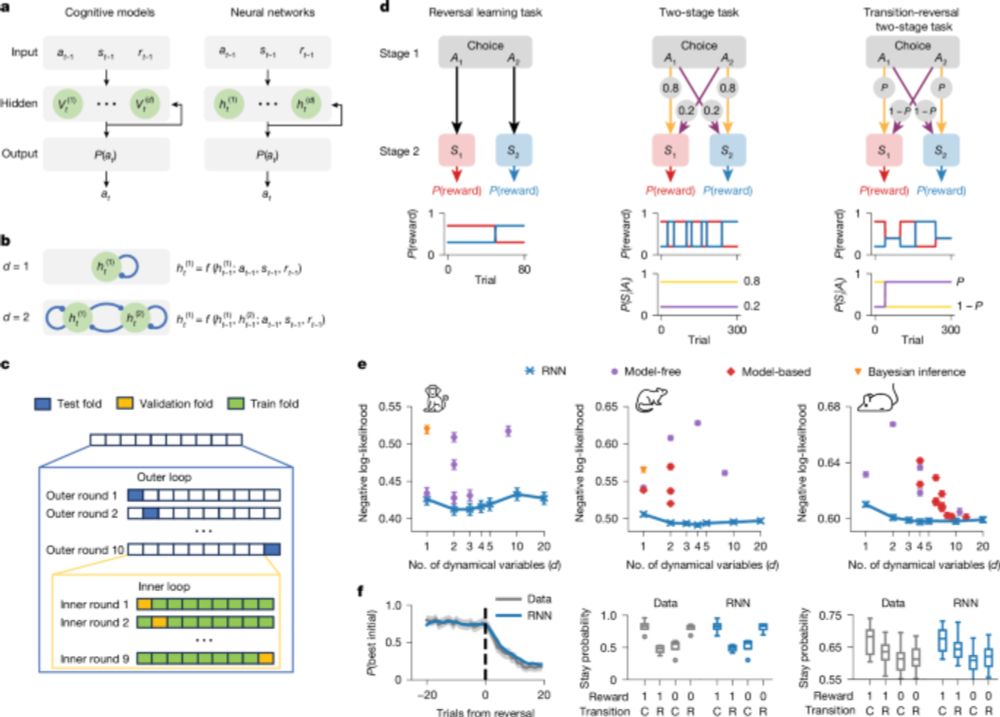

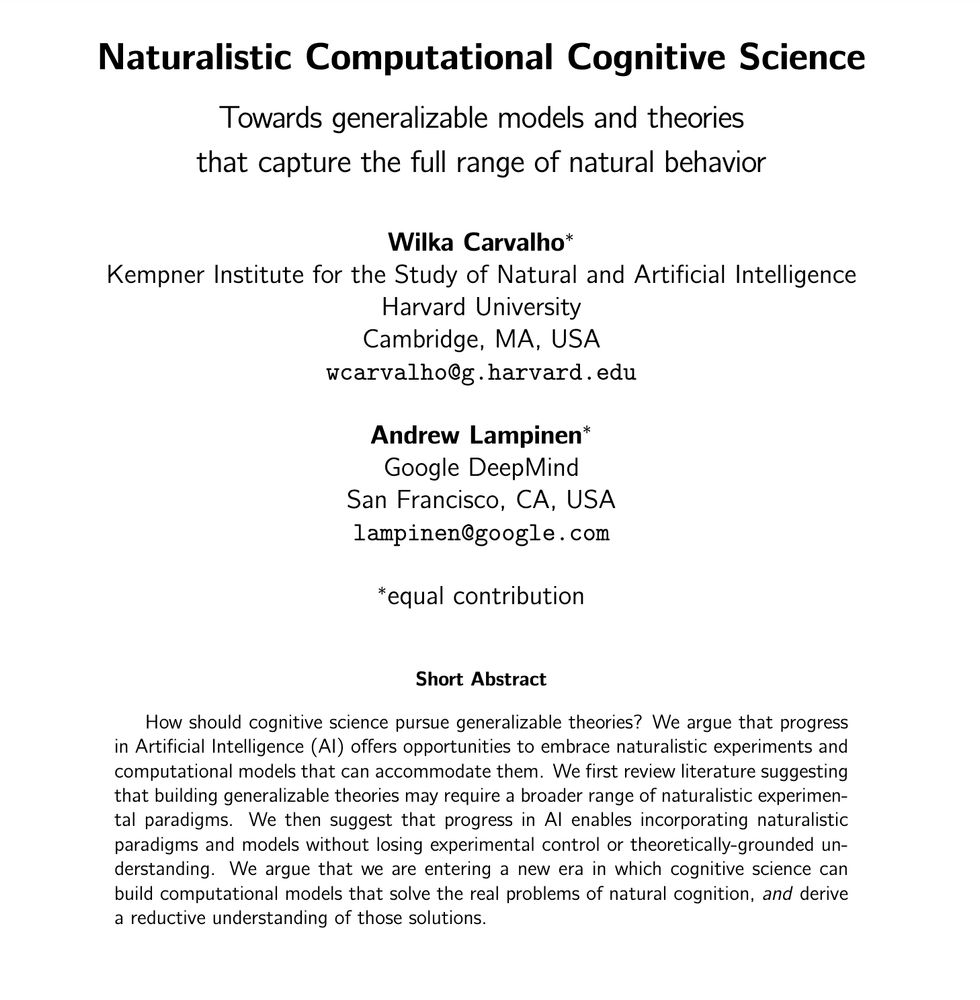

We're calling this direction "Naturalistic Computational Cognitive Science"

The Explicandum: The evolution in the human lineage of vocalizations that are:

* Loud

* Synchronized

* Variable

🧵

In @nathumbehav.nature.com, @chazfirestone.bsky.social & I take an experimental approach to style perception! osf.io/preprints/ps...

buff.ly/SXw5LPy

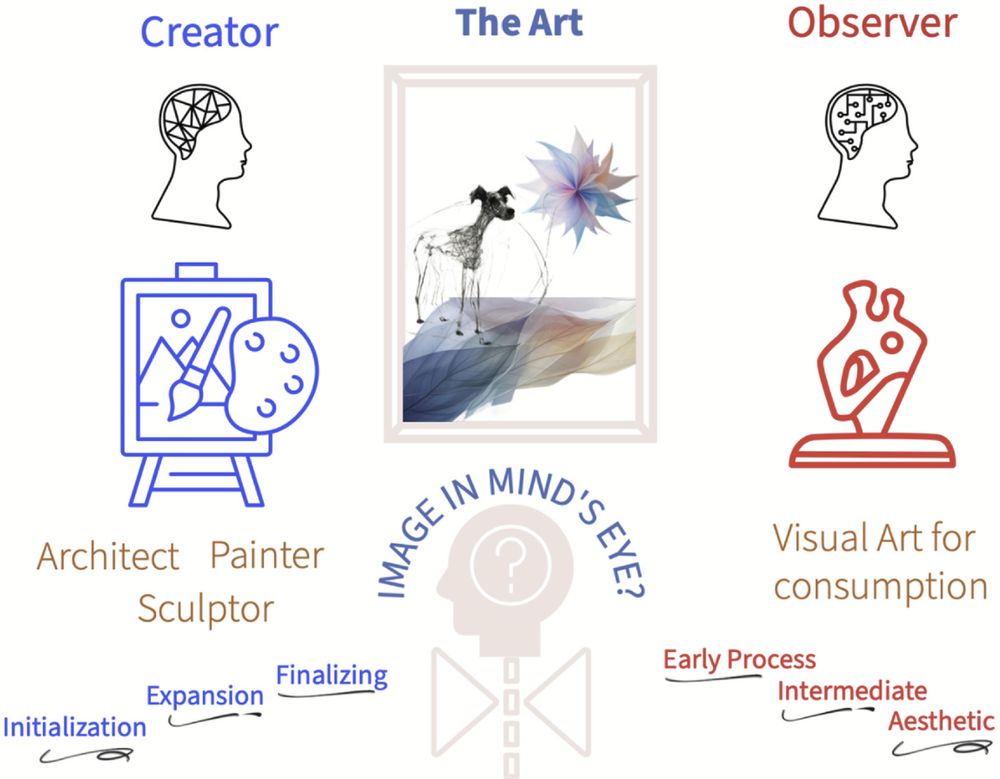

New paper with Oshin Vartanian, Delaram Farzanfar and Pablo Tinio in Neuropsychologia.

doi.org/10.1016/j.ne...

@uoftpsychology.bsky.social

@uoft.bsky.social

https://go.nature.com/3YigkQB

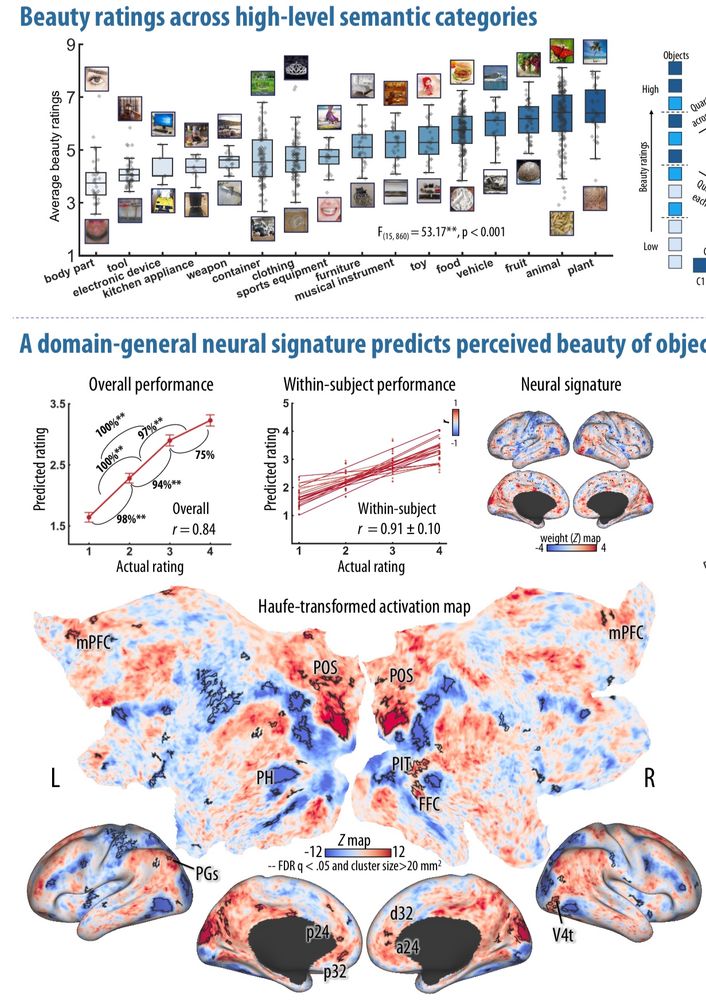

www.pnas.org/doi/10.1073/...

Group arts activities can have moderate benefits for #anxiety and #depression in older adults.

🎨🖌️🎭👵🏻

www.nature.com/articles/s44...

#creativeageing @naturementalhealth.bsky.social

It can be used to easily and transparently compute a wide range of quantitative image properties for digital images 📸

📄 link.springer.com/article/10.3...

From childhood on, people can create novel, playful, and creative goals. Models have yet to capture this ability. We propose a new way to represent goals and report a model that can generate human-like goals in a playful setting... 1/N

Our paper is published in Nature Human Behaviour!

tinyurl.com/p28jy3bx

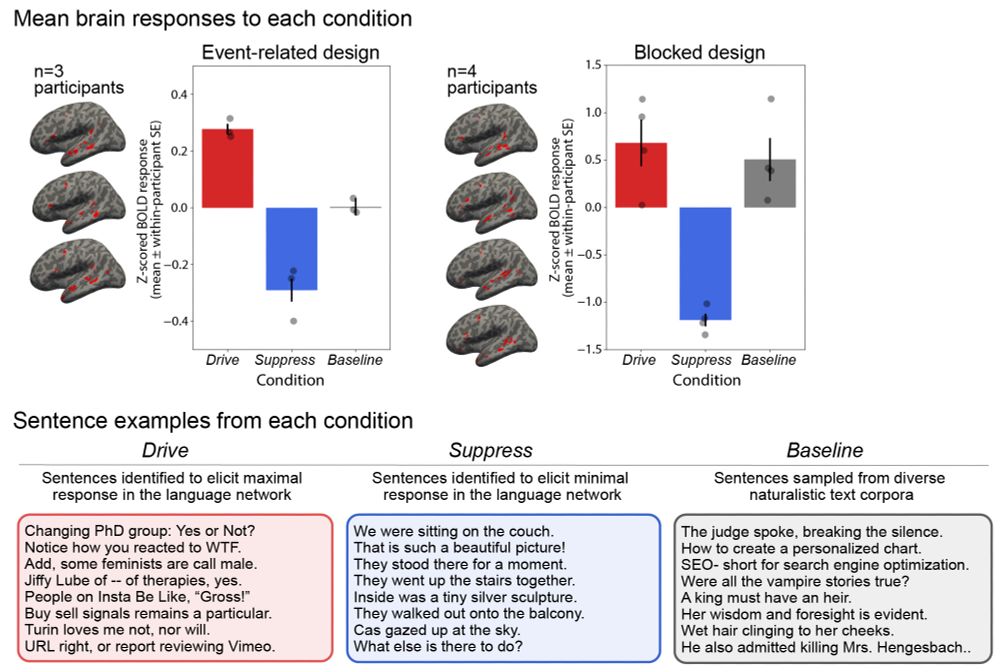

Driving and suppressing brain activity in the human language network with model (GPT)-selected stimuli

With A. Sathe, S. Srikant, M. Taliaferro, M. Wang, @mschrimpf.bsky.social, K. Kay, @evfedorenko.bsky.social
