PhD from LMU Munich, prev. UT Austin, Princeton, @ltiatcmu.bsky.social, Cambridge

computational linguistics, construction grammar, morphosyntax

leonieweissweiler.github.io

Linguistic evaluations of LLMs often implicitly assume that language is generated by symbolic rules.

In a new position paper, @adelegoldberg.bsky.social, @kmahowald.bsky.social and I argue that languages are not Lego sets, and evaluations should reflect this!

arxiv.org/pdf/2502.13195

In each of these sentences, a verb that doesn't usually encode motion is being used to convey that an object is moving to a destination.

Given that these usages are rare, complex, and creative, we ask:

Do LLMs understand what's going on in them?

🧵1/7

Come check it out if you're interested in multilingual linguistic evaluation of LLMs (there will be parse trees on the slides! There's still use for syntactic structure!)

arxiv.org/abs/2504.02768

The deadline is Nov 26th, the expected start date is Feb 1st.

Feel free to shoot me an email if you want to hear more about this department and I will continue gushing about it.

Postdoc opportunity — also open to recent or soon-to-be PhD graduates (within 1–2 months).

uu.varbi.com/en/what:job/...

Not presenting anything but here are two posters you should visit:

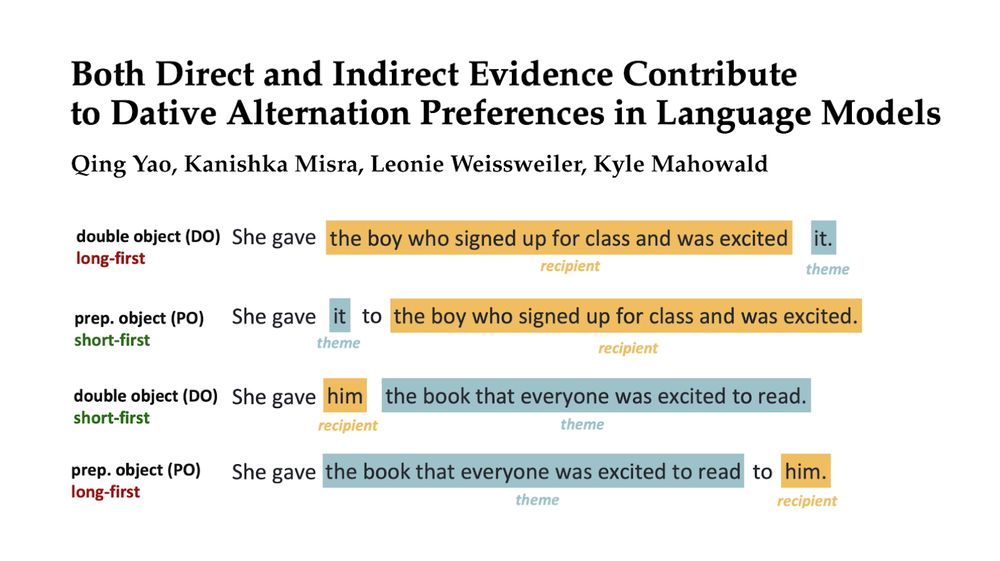

1. @qyao.bsky.social on Controlled rearing for direct and indirect evidence for datives (w/ me, @weissweiler.bsky.social and @kmahowald.bsky.social), W morning

Paper: arxiv.org/abs/2503.20850

🥳I'm excited to share that I've started as a postdoc at Uppsala University NLP @uppsalanlp.bsky.social, working with Joakim Nivre on topics related to constructions and multilinguality!

🙏Many thanks to the Walter Benjamin Programme of the DFG for making this possible.

This could be a surprise to many – models rely heavily on stored examples and draw analogies when dealing with unfamiliar words, much as humans do. Check out this new study led by @valentinhofmann.bsky.social to learn how they made the discovery 💡

We show that linguistic generalization in language models can be due to underlying analogical mechanisms.

Shoutout to my amazing co-authors @weissweiler.bsky.social, @davidrmortensen.bsky.social, Hinrich Schütze, and Janet Pierrehumbert!

What generalization mechanisms shape the language skills of LLMs?

Prior work has claimed that LLMs learn language via rules.

We revisit the question and find that superficially rule-like behavior of LLMs can be traced to underlying analogical processes.

🧵

🗨️ Beyond “noisy” text: How (and why) to process dialect data

🗓️ Saturday, May 3, 9:30–10:30

I could not be more excited for this to be out!

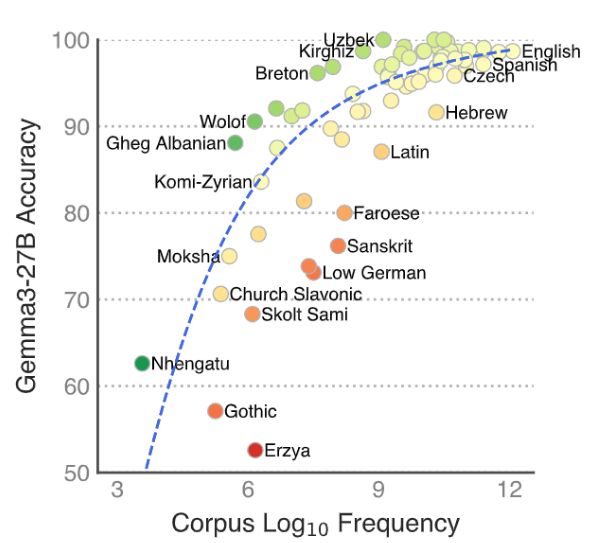

With a fully automated pipeline based on Universal Dependencies, 43 non-Indo-European languages, and the best LLMs only scoring 90.2%, I hope this will be a challenging and interesting benchmark for multilingual NLP.

Go test your language models!

Introducing 🌍MultiBLiMP 1.0: A Massively Multilingual Benchmark of Minimal Pairs for Subject-Verb Agreement, covering 101 languages!

We present over 125,000 minimal pairs and evaluate 17 LLMs, finding that support is still lacking for many languages.

🧵⬇️

Here, we find that dative alternation preferences are learned from dative-specific input statistics *and* from more general short-first preferences.

Great work by @qyao.bsky.social, go follow him!

arxiv.org/abs/2503.20850

Is it that they just saw a lot of such examples in pretraining or is it generalization and deeper understanding?

alphaxiv.org/pdf/2503.20850
