I'll be giving an oral presentation for Creativity Index on Fri 25th 11:06, Garnet 212&219 🎙️

I'll also be presenting posters:

📍ExploreToM, Sat 26th 10:00, Hall 3 + 2B #49

📍CreativityIndex, Fri 25th 15:00, Hall 3 + 2B #618

Hope to see you there!

Tired 😴 of reasoning benchmarks full of math & code? In our work we consider the problem of reasoning about plot holes in stories -- inconsistencies in a storyline that break the internal logic or rules of a story’s world 🌎

w/ @melaniesclar.bsky.social and @tsvetshop.bsky.social

1/n

We propose information-guided probes, a method to uncover memorization evidence in *completely black-box* models,

without requiring access to

🙅♀️ Model weights

🙅♀️ Training data

🙅♀️ Token probabilities 🧵 (1/5)

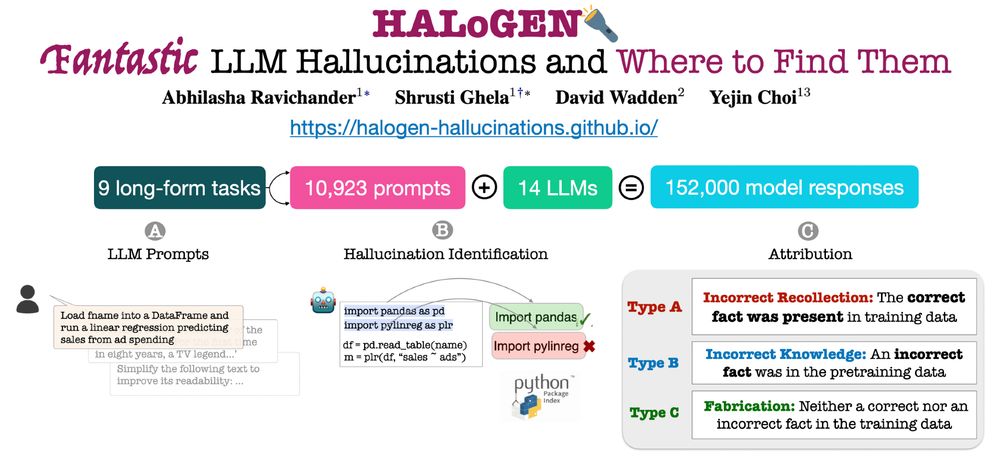

New work w/ Shrusti Ghela*, David Wadden, and Yejin Choi 💫

📝 Paper: arxiv.org/abs/2501.08292

🚀 Code/Data: github.com/AbhilashaRav...

🌐 Website: halogen-hallucinations.github.io 🧵 [1/n]

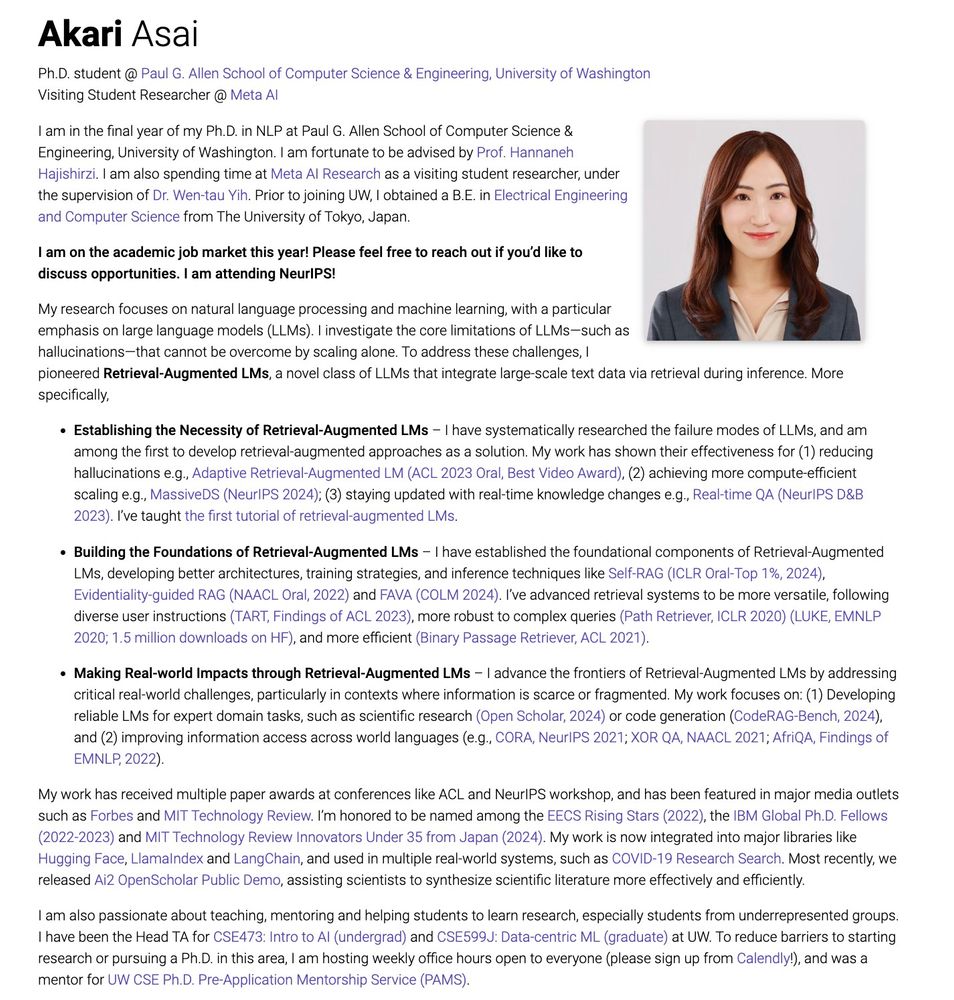

My Ph.D. work focuses on Retrieval-Augmented LMs to create more reliable AI systems 🧵

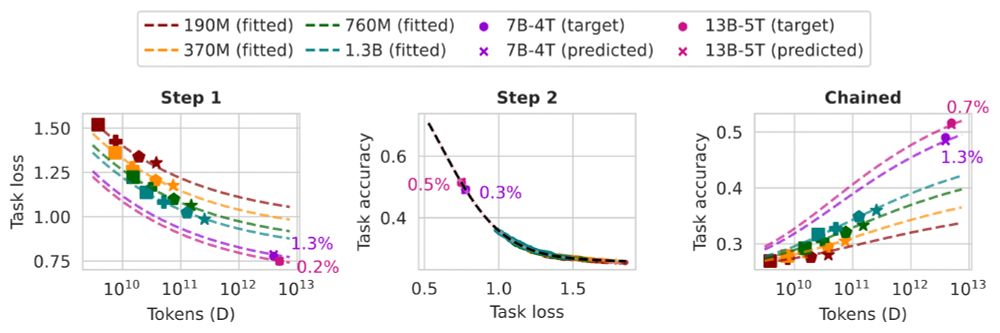

We develop task scaling laws and model ladders, which predict the accuracy on individual tasks by OLMo 2 7B & 13B models within 2 points of absolute error. The cost is 1% of the compute used to pretrain them.

🔗 arxiv.org/abs/2407.16607

Introducing CREATIVITY INDEX: a metric that quantifies the linguistic creativity of a text by reconstructing it from existing text snippets on the web. Spoiler: professional human writers like Hemingway are still far more creative than LLMs! 😲

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai
