Will Turner

@renrutmailliw.bsky.social

Pinned

Will Turner

@renrutmailliw.bsky.social

· May 23

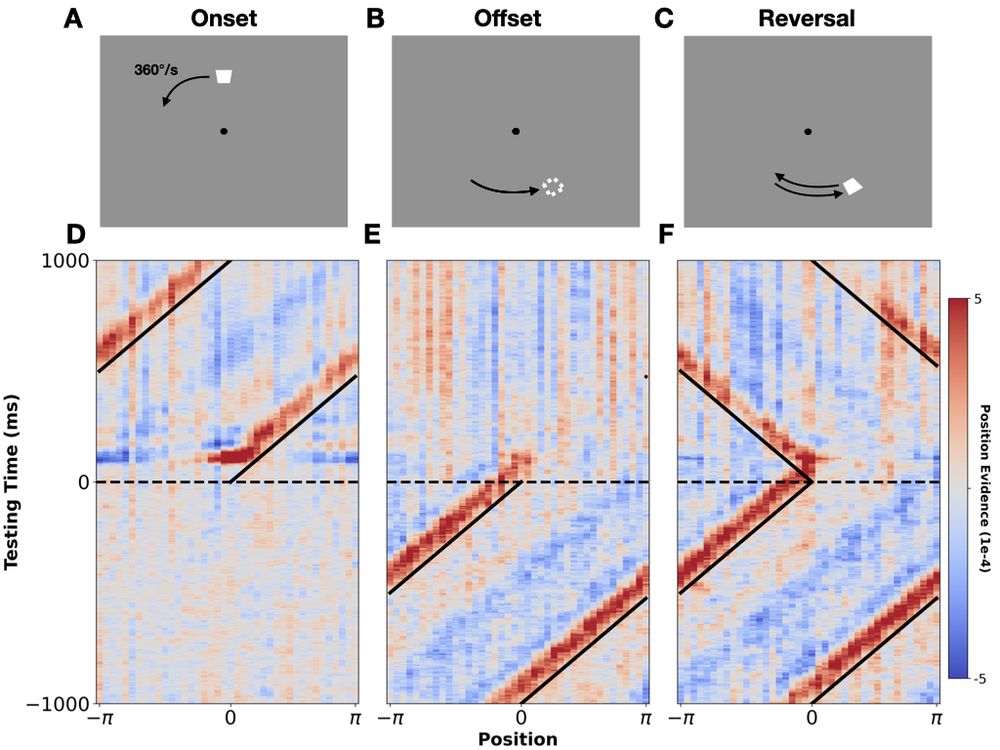

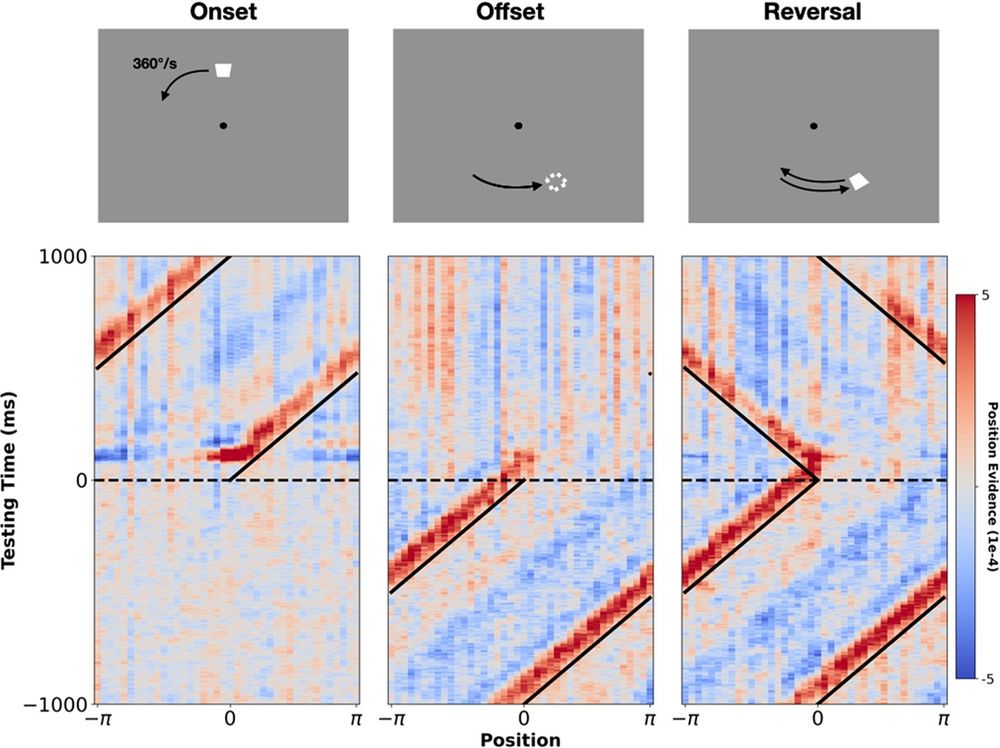

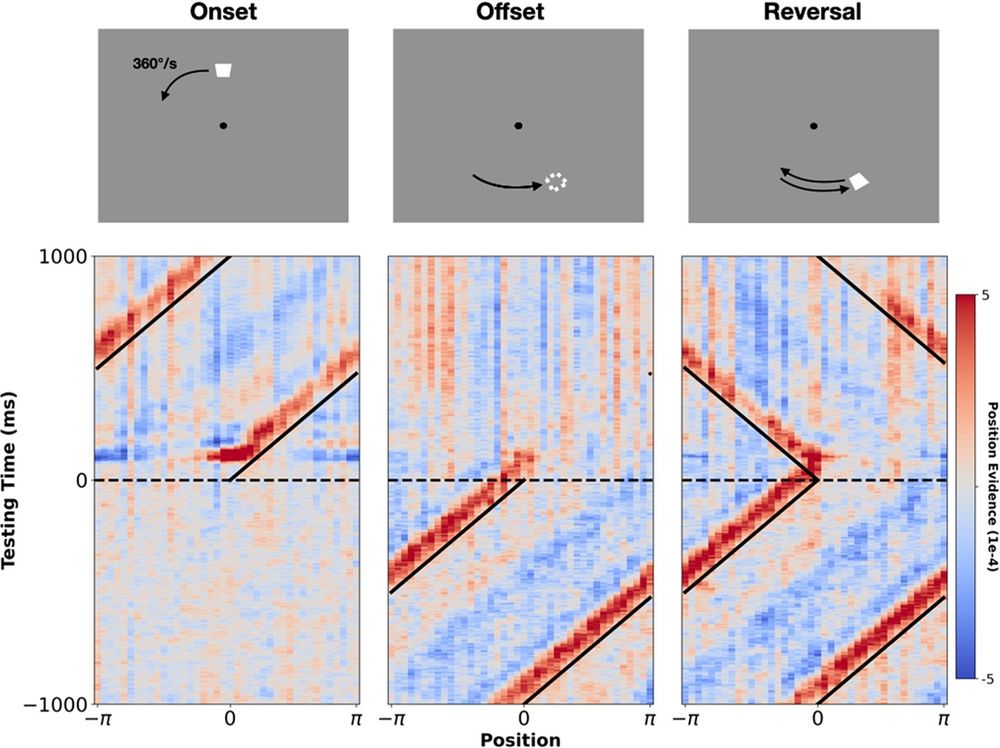

New paper out in @plosbiology.org w/ Charlie, @phil-johnson.bsky.social, Ella, and Hinze 🎉

We track moving stimuli via EEG, find evidence that motion is extrapolated across distinct stages of processing + show how this effect may emerge from a simple synaptic learning rule!

tinyurl.com/2szh6w5c

Reposted by Will Turner

Our new preprint on the FOODEEG open dataset is out! EEG recordings and behavioural responses on food cognition tasks for 117 participants will be made publicly available 🧠 @danfeuerriegel.bsky.social @tgro.bsky.social

www.biorxiv.org/content/10.1...

FOODEEG: An open dataset of human electroencephalographic and behavioural responses to food images

Investigating the neurocognitive mechanisms underlying food choices has the potential to advance our understanding of eating behaviour and inform health-targeted interventions and policy. Large, publi...

www.biorxiv.org

November 10, 2025 at 11:34 PM

Reposted by Will Turner

happy to share our new paper, out now in Neuron! led by the incredible Yizhen Zhang, we explore how the brain segments continuous speech into word-forms and uses adaptive dynamics to code for relative time - www.sciencedirect.com/science/arti...

Human cortical dynamics of auditory word form encoding

We perceive continuous speech as a series of discrete words, despite the lack of clear acoustic boundaries. The superior temporal gyrus (STG) encodes …

www.sciencedirect.com

November 7, 2025 at 6:16 PM

Reposted by Will Turner

I am so honored to have received an Outstanding PhD Thesis award from the Luxembourg National Research Fund @fnr.lu! 🏆

My PhD research was about how language is processed in the brain, with a focus on patients with a language disorder called aphasia 🧠 Find out more➡️ youtu.be/E-Zww-B1jFQ?...

FNR Awards 2025: Outstanding PhD Thesis - Jill Kries

YouTube video by FNRLux

youtu.be

November 5, 2025 at 5:29 PM

Reposted by Will Turner

Delighted to share our new paper, now out in PNAS! www.pnas.org/doi/10.1073/...

"Hierarchical dynamic coding coordinates speech comprehension in the brain"

with dream team @alecmarantz.bsky.social, @davidpoeppel.bsky.social, @jeanremiking.bsky.social

Summary 👇

1/8

PNAS

Proceedings of the National Academy of Sciences (PNAS), a peer reviewed journal of the National Academy of Sciences (NAS) - an authoritative source of high-impact, original research that broadly spans...

www.pnas.org

October 22, 2025 at 5:21 AM

Reposted by Will Turner

Fantastic commentary on @smfleming.bsky.social & @matthiasmichel.bsky.social's BBS paper by @renrutmailliw.bsky.social, @lauragwilliams.bsky.social & Hinze Hogendoorn. Hits lots of nails on the head. As @neddo.bsky.social & I also argue: postdiction doesn't prove consciousness is slow! 1/3

New BBS article w/ @lauragwilliams.bsky.social and Hinze Hogendoorn, just accepted! We respond to a thought-provoking article by @smfleming.bsky.social & @matthiasmichel.bsky.social, and argue that it's premature to conclude that conscious perception is delayed by 350-450ms: bit.ly/4nYNTlb

OSF

bit.ly

October 29, 2025 at 1:22 PM

Reposted by Will Turner

Super happy to share my very first first-author paper out in @sfnjournals.bsky.social! We show content-specific predictions are represented in an alpha rhythm. It’s been a beautiful, inspiring, yet challenging journey.

Huge thanks to everyone, especially @peterkok.bsky.social @jhaarsma.bsky.social

@dotproduct.bsky.social's first first author paper is finally out in @sfnjournals.bsky.social! Her findings show that content-specific predictions fluctuate with alpha frequencies, suggesting a more specific role for alpha oscillations than we may have thought. With @jhaarsma.bsky.social. 🧠🟦 🧠🤖

Contents of visual predictions oscillate at alpha frequencies

Predictions of future events have a major impact on how we process sensory signals. However, it remains unclear how the brain keeps predictions online in anticipation of future inputs. Here, we combin...

www.jneurosci.org

October 21, 2025 at 3:57 PM

Reposted by Will Turner

@dotproduct.bsky.social's first first author paper is finally out in @sfnjournals.bsky.social! Her findings show that content-specific predictions fluctuate with alpha frequencies, suggesting a more specific role for alpha oscillations than we may have thought. With @jhaarsma.bsky.social. 🧠🟦 🧠🤖

Contents of visual predictions oscillate at alpha frequencies

Predictions of future events have a major impact on how we process sensory signals. However, it remains unclear how the brain keeps predictions online in anticipation of future inputs. Here, we combin...

www.jneurosci.org

October 21, 2025 at 11:05 AM

Reposted by Will Turner

Age and gender distortion in online media and large language models

"Furthermore, when generating and evaluating resumes, ChatGPT assumes that women are younger and less experienced, rating older male applicants as of higher quality."

No surprise, but now documented:

www.nature.com/articles/s41...

Age and gender distortion in online media and large language models - Nature

Stereotypes of age-related gender bias are socially distorted, as evidenced by the age gap in the representations of women and men across various media and algorithms, despite no systematic age differ...

www.nature.com

October 16, 2025 at 8:53 AM

Reposted by Will Turner

really fun getting to think about the "time to consciousness" with this dream team! we discuss interesting parallels between vision and language processing on phenomena like postdictive perceptual effects, among other things! check it out 😄

New BBS article w/ @lauragwilliams.bsky.social and Hinze Hogendoorn, just accepted! We respond to a thought-provoking article by @smfleming.bsky.social & @matthiasmichel.bsky.social, and argue that it's premature to conclude that conscious perception is delayed by 350-450ms: bit.ly/4nYNTlb

OSF

bit.ly

October 1, 2025 at 7:04 PM

Reposted by Will Turner

🚨 Out now in @commspsychol.nature.com 🚨

doi.org/10.1038/s442...

Our #RegisteredReport tested whether the order of task decisions and confidence ratings bias #metacognition.

Some said decisions → confidence enhances metacognition. If true, decades of findings will be affected.

September 30, 2025 at 8:11 AM

New BBS article w/ @lauragwilliams.bsky.social and Hinze Hogendoorn, just accepted! We respond to a thought-provoking article by @smfleming.bsky.social & @matthiasmichel.bsky.social, and argue that it's premature to conclude that conscious perception is delayed by 350-450ms: bit.ly/4nYNTlb

OSF

bit.ly

September 29, 2025 at 7:00 PM

Reposted by Will Turner

We present our preprint on ViV1T, a transformer for dynamic mouse V1 response prediction. We reveal novel response properties and confirm them in vivo.

With @wulfdewolf.bsky.social, Danai Katsanevaki, @arnoonken.bsky.social, @rochefortlab.bsky.social.

Paper and code at the end of the thread!

🧵1/7

September 19, 2025 at 12:37 PM

Reposted by Will Turner

🚨Our preprint is online!🚨

www.biorxiv.org/content/10.1...

How do #dopamine neurons perform the key calculations in reinforcement #learning?

Read on to find out more! 🧵

September 19, 2025 at 1:05 PM

Reposted by Will Turner

I’m hiring!! 🎉 Looking for a full-time Lab Manager to help launch the Minds, Experiences, and Language Lab at Stanford. We’ll use all-day language recording, eye tracking, & neuroimaging to study how kids & families navigate unequal structural constraints. Please share:

phxc1b.rfer.us/STANFORDWcqUYo

Research Coordinator, Minds, Experiences, and Language Lab in Graduate School of Education, Stanford, California, United States

The Stanford Graduate School of Education (GSE) seeks a full-time Research Coordinator (acting lab manager) to help launch and coordinate the Minds,.....

phxc1b.rfer.us

September 15, 2025 at 6:57 PM

Reposted by Will Turner

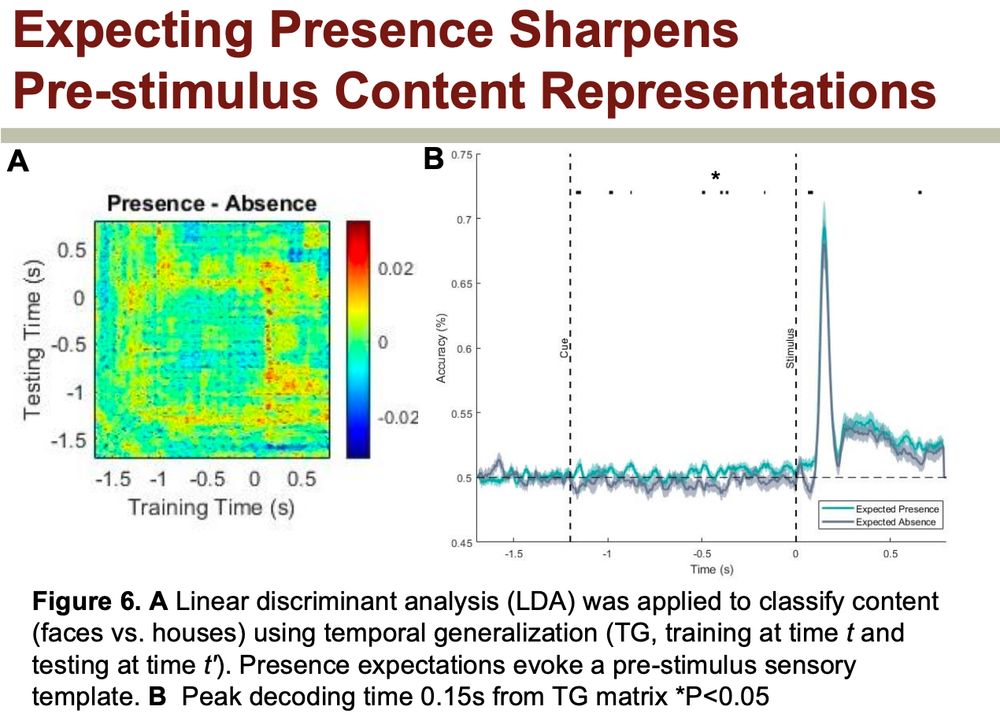

Looking forward to #ICON2025 next week! We will have several presentations on mental imagery, reality monitoring and expectations:

To kick us off, on Tuesday at 15:30, Martha Cottam will present:

P2.12 | Presence Expectations Modulate the Neural Signatures of Content Prediction Errors

September 11, 2025 at 3:28 PM

Reposted by Will Turner

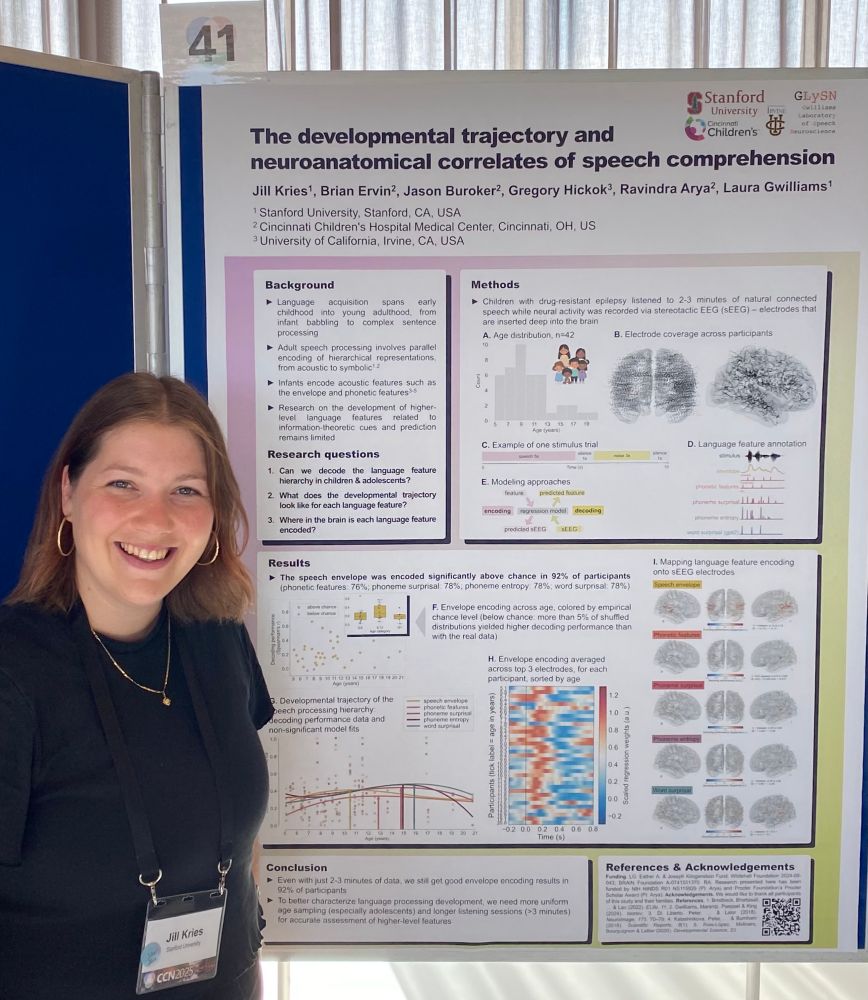

In August I had the pleasure to present a poster at the Cognitive Computational Neuroscience (CCN) conference in Amsterdam. My poster was about 𝘁𝗵𝗲 𝗱𝗲𝘃𝗲𝗹𝗼𝗽𝗺𝗲𝗻𝘁𝗮𝗹 𝘁𝗿𝗮𝗷𝗲𝗰𝘁𝗼𝗿𝘆 𝗮𝗻𝗱 𝗻𝗲𝘂𝗿𝗼𝗮𝗻𝗮𝘁𝗼𝗺𝗶𝗰𝗮𝗹 𝗰𝗼𝗿𝗿𝗲𝗹𝗮𝘁𝗲𝘀 𝗼𝗳 𝘀𝗽𝗲𝗲𝗰𝗵 𝗰𝗼𝗺𝗽𝗿𝗲𝗵𝗲𝗻𝘀𝗶𝗼𝗻 🧒➡️🧑 🧠

September 8, 2025 at 9:50 PM

Reposted by Will Turner

🚨Pre-print of some cool data from my PhD days!

doi.org/10.1101/2025...

☝️Did you know that visual surprise is (probably) a domain-general signal and/or operates at the object-level?

✌️Did you also know that the timing of this response depends on the specific attribute that violates an expectation?

The Latency of a Domain-General Visual Surprise Signal is Attribute Dependent

Predictions concerning upcoming visual input play a key role in resolving percepts. Sometimes input is surprising, under which circumstances the brain must calibrate erroneous predictions so that perc...

doi.org

August 19, 2025 at 12:30 AM

Reposted by Will Turner

Humans largely learn language through speech. In contrast, most LLMs learn from pre-tokenized text.

In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech.

Poster P6, tomorrow (Aug 19) at 1:30 pm, Foyer 2.2!

August 19, 2025 at 1:12 AM

Reposted by Will Turner

looking forward to seeing everyone at #CCN2025! here's a snapshot of the work from my lab that we'll be presenting on speech neuroscience 🧠 ✨

August 10, 2025 at 6:09 PM

Reposted by Will Turner

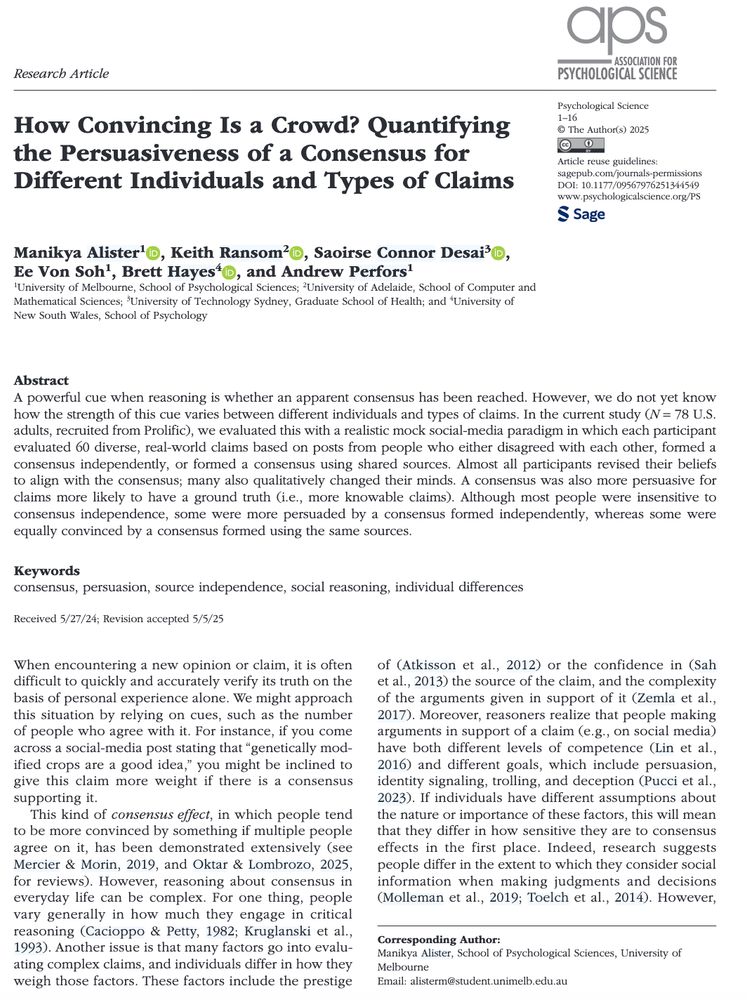

We know that a consensus of opinions is persuasive, but how reliable is this effect across people and types of consensus, and are there any kinds of claims where people care less about what other people think? This is what we tested in our new(ish) paper in @psychscience.bsky.social

August 10, 2025 at 11:12 PM

Reposted by Will Turner

I really like this paper. I fear that people think the authors are claiming that the brain isn’t predictive though, which this study cannot (and does not) address. As the title says, the data purely show that evoked responses are not necessarily prediction errors, which makes sense!

1/3) This may be a very important paper, it suggests that there are no prediction error encoding neurons in sensory areas of cortex:

www.biorxiv.org/content/10.1...

I personally am a big fan of the idea that cortical regions (allo and neo) are doing sequence prediction.

But...

🧠📈 🧪

Sensory responses of visual cortical neurons are not prediction errors

Predictive coding is theorized to be a ubiquitous cortical process to explain sensory responses. It asserts that the brain continuously predicts sensory information and imposes those predictions on lo...

www.biorxiv.org

July 15, 2025 at 11:43 AM

Reposted by Will Turner

It takes time for the #brain to process information, so how can we catch a flying ball? @renrutmailliw.bsky.social &co reveal a multi-stage #motion #extrapolation occurring in the #HumanBrain, shifting the represented position of moving objects closer to real time @plosbiology.org 🧪 plos.io/3Fm83Fc

May 27, 2025 at 6:06 PM

Reposted by Will Turner

It takes time for the #brain to process information, so how can we catch a flying ball? This study provides evidence of multi-stage #motion #extrapolation occurring in the #HumanBrain, shifting the represented position of moving objects closer to real time @plosbiology.org 🧪 plos.io/3Fm83Fc

May 27, 2025 at 1:17 PM

Reposted by Will Turner

New preprint from the lab!

We used EEG⚡🧠 to map how 12 different food attributes are represented in the brain. 🍎🥦🥪🍙🍮

www.biorxiv.org/content/10.1...

Led by Violet Chae in collaboration with @tgro.bsky.social

Characterising the neural time-courses of food attribute representations

Dietary decisions involve the consideration of multiple, often conflicting, food attributes that precede the computation of an overall value for a food. The differences in the speed at which attribute...

www.biorxiv.org

May 18, 2025 at 1:47 AM

Reposted by Will Turner

What are the organizing dimensions of language processing?

We show that voxel responses during comprehension are organized along 2 main axes: processing difficulty & meaning abstractness—revealing an interpretable, topographic representational basis for language processing shared across individuals

May 23, 2025 at 5:00 PM
