curious about how we obtain and process information over time, and how prior knowledge influences our perception.

loves cats and bikes. 🐈⬛

@sfnjournals.bsky.social! We show content-specific predictions are represented in an alpha rhythm. It’s been a beautiful, inspiring, yet challenging journey.

Huge thanks to everyone, especially @peterkok.bsky.social @jhaarsma.bsky.social

academic.oup.com/nc/article/2...

www.academictransfer.com/nl/jobs/3576...

Interested in brain stimulation?

Excited by cutting edge neurotech?

Come do a PhD with me!

www.findaphd.com/phds/project...

(thread)

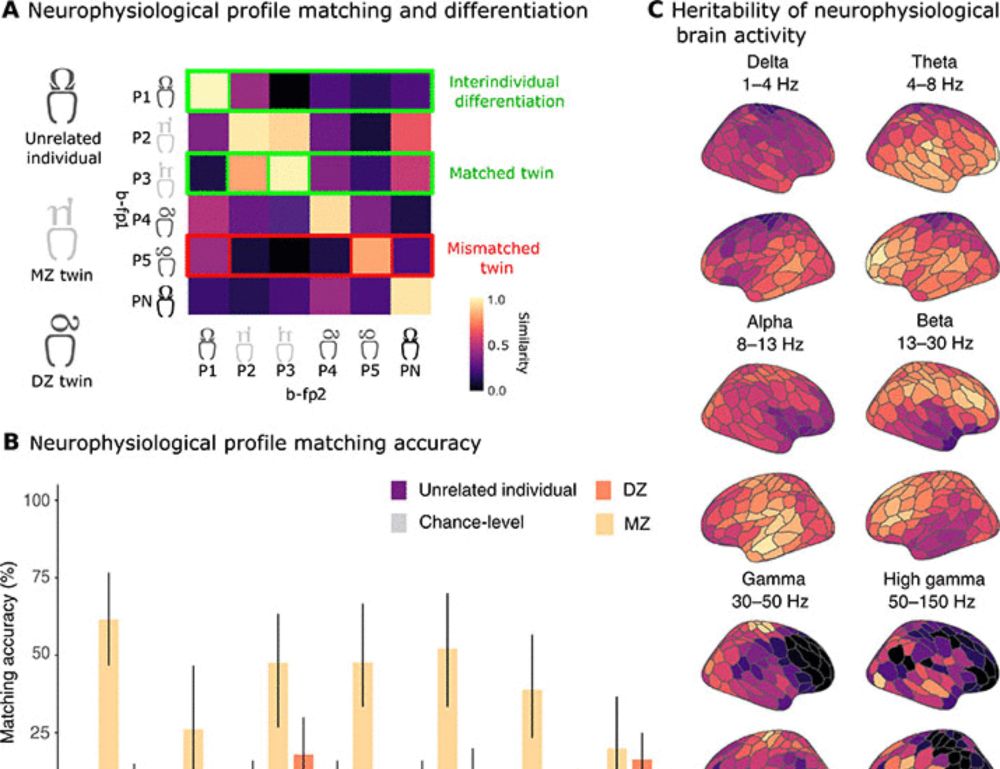

🚨 New paper 🚨 out now in @cp-iscience.bsky.social with @paulapena.bsky.social and @mruz.bsky.social

www.cell.com/iscience/ful...

Summary 🧵 below 👇

www.in.fil.ion.ucl.ac.uk/news/blog-po...

Together with Daniel Kaiser (@dkaiserlab.bsky.social), we investigated how internal models shape inter-individual differences in the perception and neural processing of natural scenes.

Preprint: osf.io/preprints/ps...

1/n

doi.org/10.31234/osf...

Contents of visual predictions oscillate at alpha frequencies

www.jneurosci.org/content/earl...

#neuroscience

royalsocietypublishing.org/doi/10.1098/...

P6.36 | Pre-stimulus Shape Predictions Fluctuate At Alpha Rhythms And Bias Subsequent Perception.

Showing how content-specific pre-stimulus alpha-band oscillations influence perception.

@thechbh.bsky.social #neuroskyence

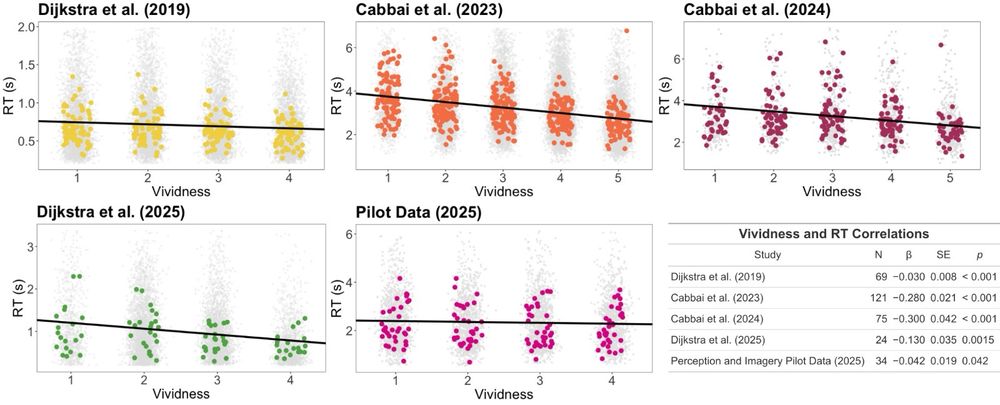

We report a novel and robust effect across five different datasets: vivid imagery is reported faster than weak imagery.

📝: osf.io/preprints/ps...

There are amazing talks and very cool science happening all around! MEGUKI 2025👌

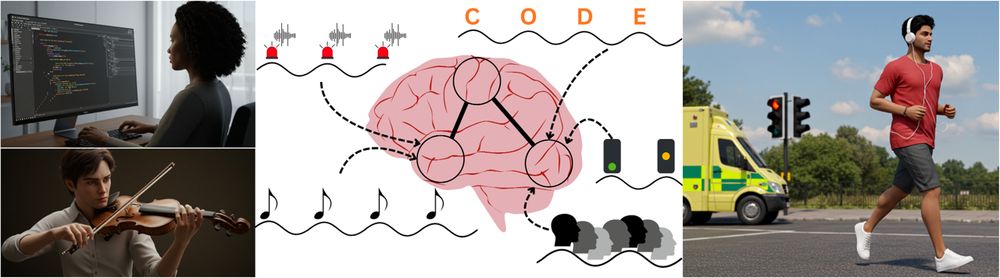

Are sensory sampling rhythms fixed by intrinsically-determined processes, or do they couple to external structure? Here we highlight the incompatibility between these accounts and propose a resolution [1/6]

MEG‑UKI 2025 lands in London (16–18 July)! A 3-day deep dive into the brain—naturalistic neuroscience, OP-MEG, cutting-edge methods, and real-world impact. Keynotes by Dominik Bach & Jamie Ward. Art, abstracts, and more!

Register here: meguk.ac.uk/registration/

Using MEG and Rapid Invisible Frequency Tagging (RIFT) in a classic visual search paradigm, we show that neuronal excitability in V1 is modulated in line with a priority-map-based mechanism to boost targets and suppress distractors!

Open Access link: doi.org/10.3758/s134...

www.jobs.ac.uk/job/DNJ330/p...
