pierreablin.com

Turns out that this behaviour can be described with a bound from *convex, nonsmooth* optimization.

A short thread on our latest paper 🚞

arxiv.org/abs/2501.18965

Made with ❤️ at Apple

Thanks to my co-authors David Grangier, Angelos Katharopoulos, and Skyler Seto!

arxiv.org/abs/2502.01804

1) Joint Learning of Energy-based Models and their Partition Function

arxiv.org/abs/2501.18528

2) Loss Functions and Operators Generated by f-Divergences

arxiv.org/abs/2501.18537

A thread.

With José A. Carrillo, @gabrielpeyre.bsky.social and @pierreablin.bsky.social, we tackle this in our new preprint: A Unified Perspective on the Dynamics of Deep Transformers arxiv.org/abs/2501.18322

ML and PDE lovers, check it out!

• Large Language Models for French medical texts

• Evaluating digital medical devices: statistics and causal inference

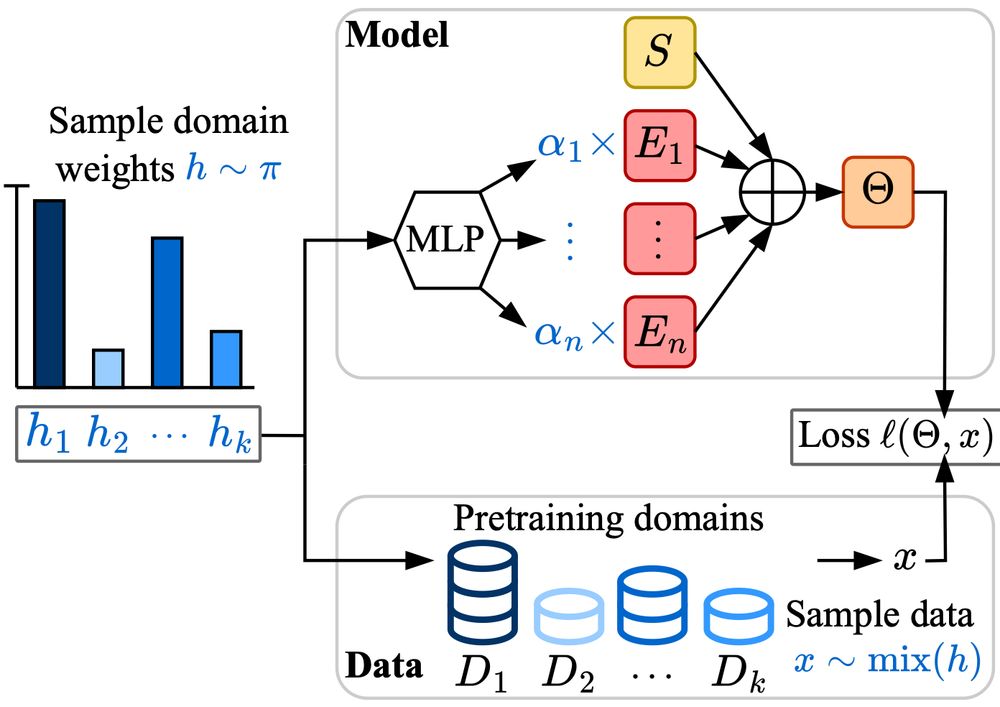

Check out this awesome work by Samira et al. about scaling laws for mixture of experts!

We explored this through the lens of MoEs:

@Apple

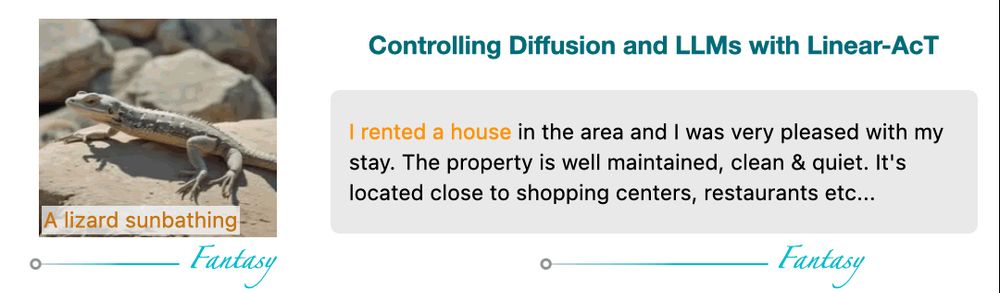

where we achieve interpretable and fine-grained control of LLMs and Diffusion models via Activation Transport 🔥

📄 arxiv.org/abs/2410.23054

🛠️ github.com/apple/ml-act

0/9 🧵

Make attention ~18% faster with a drop-in replacement 🚀

Code:

github.com/apple/ml-sig...

Paper:

arxiv.org/abs/2409.04431

Delighted to share AIMv2, a family of strong, scalable, and open vision encoders that excel at multimodal understanding, recognition, and grounding 🧵

paper: arxiv.org/abs/2411.14402

code: github.com/apple/ml-aim

HF: huggingface.co/collections/...

the MinHashEncoder is fast, stateless, and excellent with tree-based learners.

It's in @skrub-data.bsky.social

youtu.be/ZMQrNFef8fg
